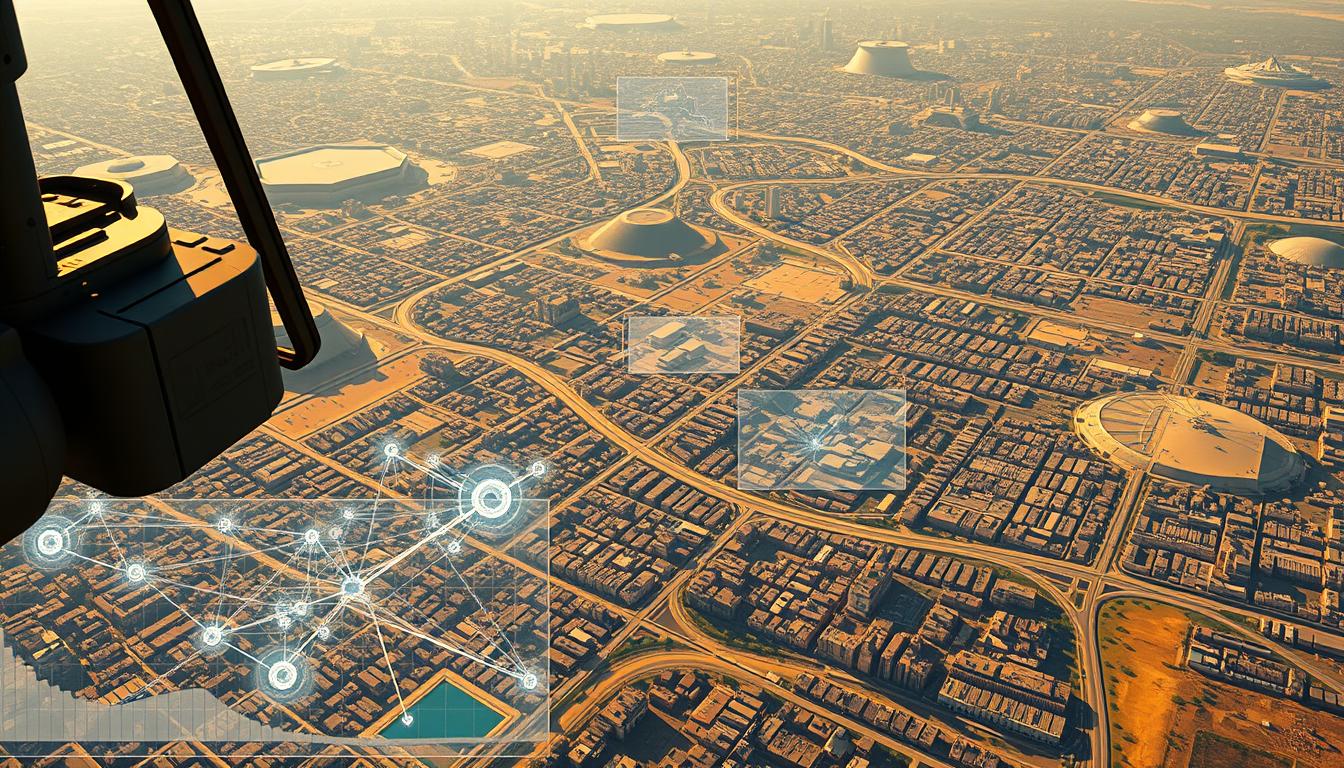

Every 90 minutes, advanced satellites capture enough high-resolution imagery to map 1% of Earth’s surface – a data deluge surpassing 150 terabytes daily. This firehose of visual information has transformed how we monitor planetary changes, from tracking melting glaciers to detecting unauthorized construction in restricted zones.

Platforms like Google Earth and Microsoft Bing Maps now combine imagery from public constellations such as Sentinel and Landsat with commercial high-resolution sources to deliver unprecedented detail. The sharpest commercial sensors resolve ground features as small as roughly 12 inches (30 cm). When combined with pattern-recognition systems, these orbital networks can also analyze terrain changes across continents automatically.

The real breakthrough emerges when machine learning processes this visual flood. Recent developments in automated interpretation enable rapid detection of environmental shifts, infrastructure developments, and agricultural patterns that escape human notice. This technological synergy proves particularly valuable for advanced defense applications, where real-time analysis converts raw pixels into actionable insights.

Key Takeaways

- Modern observation systems combine orbital networks with pattern recognition for planetary-scale monitoring

- Commercial platforms now provide resolution capabilities once exclusive to government agencies

- Automated analysis detects subtle environmental and infrastructural changes in real time

- Cross-industry applications range from crop management to disaster response coordination

- Strategic advantages come from converting visual data into timely operational intelligence

Understanding Satellite Imagery and Its Global Applications

The digital eyes in the sky now provide resolution sharp enough to spot a bicycle from space, revolutionizing how we understand Earth. Commercial platforms such as WorldView-3 capture details as fine as about 30 centimeters across 16 multispectral bands, while the freely available Sentinel-2 offers 10-meter resolution across 13 bands. This precision enables everything from tracking illegal fishing vessels to optimizing crop irrigation patterns.

High-Resolution Data Sources and Platforms

Modern constellations achieve daily global coverage through coordinated orbital networks. Public catalogs such as Google Earth Engine offer more than 40 years of historical records alongside near-real-time updates, a treasure trove for climate researchers and city planners alike. Private systems complement these resources with specialized thermal and hyperspectral imaging capabilities.

Mapping and Remote Sensing Innovations

Geographic Information Systems transform raw pixels into layered maps that reveal hidden patterns. Farmers use these tools to detect nutrient deficiencies weeks before visible symptoms appear. Urban developers track construction progress across entire cities through automated change detection algorithms.
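Automated change detection can start from something as simple as comparing two co-registered acquisitions pixel by pixel. The sketch below uses change vector analysis: it measures how far each pixel's spectral values moved between two dates and flags unusually large moves. The function name `detect_changes` and the mean-plus-`k`-standard-deviations threshold are illustrative assumptions, not the method of any particular platform; production systems typically add cloud masking, radiometric normalization, and learned classifiers on top.

```python
import numpy as np

def detect_changes(before: np.ndarray, after: np.ndarray, k: float = 2.0) -> np.ndarray:
    """Flag pixels whose spectral change between two dates is unusually large.

    before, after: arrays of shape (bands, height, width) on the same grid.
    k: how many standard deviations above the mean change counts as "changed"
       (an assumed default, to be tuned per sensor and scene).
    Returns a boolean (height, width) change mask.
    """
    if before.shape != after.shape:
        raise ValueError("images must be co-registered and the same shape")
    # Change vector: per-band difference at every pixel
    diff = after.astype(np.float64) - before.astype(np.float64)
    # Magnitude of the change vector (Euclidean norm across bands)
    magnitude = np.sqrt((diff ** 2).sum(axis=0))
    # Scene-adaptive threshold: mean + k * std of all change magnitudes
    threshold = magnitude.mean() + k * magnitude.std()
    return magnitude > threshold
```

Because the threshold adapts to each scene's statistics, a scene with widespread change (after a flood, for instance) raises the bar; fixed thresholds calibrated per sensor are a common alternative.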

The fusion of radar, optical, and infrared data creates multidimensional views of environmental shifts. During wildfire seasons, this integration helps predict fire spread trajectories by analyzing vegetation moisture levels and wind patterns. Such capabilities demonstrate how spatial intelligence supports both immediate crisis response and long-term strategic planning.
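Vegetation moisture is commonly estimated with the Normalized Difference Moisture Index (NDMI), which contrasts near-infrared and shortwave-infrared reflectance; on Sentinel-2 these are typically bands B8 (or B8A) and B11. The sketch assumes co-registered surface-reflectance arrays, and the zero threshold in the hypothetical `dry_fuel_mask` helper is a placeholder that would need calibrating against local fuel-moisture data before it could inform any fire-spread model.

```python
import numpy as np

def ndmi(nir, swir) -> np.ndarray:
    """Normalized Difference Moisture Index: (NIR - SWIR) / (NIR + SWIR).

    Pixels where NIR + SWIR is zero are returned as NaN.
    """
    nir = np.asarray(nir, dtype=np.float64)
    swir = np.asarray(swir, dtype=np.float64)
    denom = nir + swir
    out = np.full(denom.shape, np.nan)
    # Divide only where the denominator is non-zero; other cells stay NaN
    np.divide(nir - swir, denom, out=out, where=denom != 0)
    return out

def dry_fuel_mask(nir, swir, threshold: float = 0.0) -> np.ndarray:
    """Hypothetical helper: flag valid pixels whose NDMI is below `threshold`."""
    index = ndmi(nir, swir)
    valid = ~np.isnan(index)
    mask = np.zeros(index.shape, dtype=bool)
    mask[valid] = index[valid] < threshold
    return mask
```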

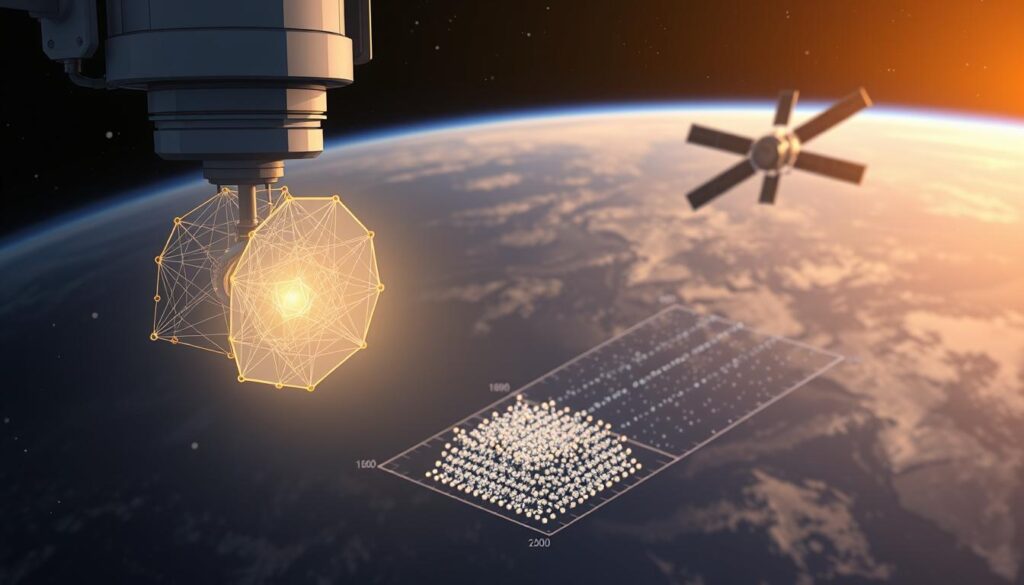

Fundamentals of Computer Vision and Machine Learning in Satellite Analysis

Modern satellite systems generate petabytes of visual information, but the true value emerges through sophisticated processing techniques. Advanced algorithms transform raw data into actionable insights, powering applications from precision agriculture to emergency response coordination.

Role of Deep Learning and CNN Architectures

Convolutional Neural Networks (CNNs) form the backbone of modern image interpretation systems. These architectures excel at identifying patterns across multiple spectral bands, detecting features invisible to traditional methods. Specialized layers address challenges like atmospheric distortion and varying sunlight angles inherent to orbital photography.
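To make "identifying patterns across multiple spectral bands" concrete, the NumPy sketch below implements a single convolutional layer by hand: every output value sums over all input bands within a small spatial window, so one filter can learn combinations such as "bright in near-infrared, dark in red". It is a didactic, unoptimized illustration (assumed valid padding, stride 1, ReLU activation); real models rely on framework implementations such as PyTorch or TensorFlow.

```python
import numpy as np

def conv2d_multiband(image: np.ndarray, kernels: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """One CNN layer: valid (no padding), stride-1 convolution followed by ReLU.

    image:   (in_bands, H, W), e.g. 13 Sentinel-2 bands
    kernels: (out_channels, in_bands, kH, kW)
    bias:    (out_channels,)
    returns: (out_channels, H - kH + 1, W - kW + 1)
    Like most deep-learning libraries, this computes cross-correlation
    (the kernel is not flipped).
    """
    out_ch, in_bands, kh, kw = kernels.shape
    if image.shape[0] != in_bands:
        raise ValueError("kernel depth must match the number of spectral bands")
    _, h, w = image.shape
    out = np.empty((out_ch, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = image[:, i:i + kh, j:j + kw]  # all bands in this window
            # Each output channel sums kernel * patch over bands and space
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2, 3], [0, 1, 2])) + bias
    return np.maximum(out, 0.0)  # ReLU activation
```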

Modified versions of ResNet (for scene-level classification) and U-Net (for pixel-level segmentation) now dominate land-cover mapping, achieving around 92% accuracy in recent benchmarks. Vision Transformers, which rely on attention mechanisms rather than stacked convolutions, extend this work to temporal analysis, tracking environmental changes across time-series data. Such innovations enable near-real-time monitoring of deforestation rates and urban expansion patterns.

Leveraging Neural Networks for Feature Extraction

Modern systems automatically detect subtle indicators like crop stress weeks before visible symptoms emerge. By analyzing infrared reflectance patterns, neural networks predict irrigation needs with 15% greater efficiency than manual methods. Paired with radar interferometry (InSAR), which measures ground displacement at the millimeter scale, these models can also flag structural weaknesses in infrastructure.
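Infrared reflectance is often summarized with the Normalized Difference Vegetation Index, NDVI = (NIR − Red) / (NIR + Red), which falls as plants lose vigor. The sketch below is a rule-based stand-in rather than the neural approach described above: it flags pixels whose NDVI has dropped by more than a hypothetical 20% relative to a per-pixel baseline (for example, a multi-year average for the same date). The helper names are illustrative.

```python
import numpy as np

def ndvi(nir, red) -> np.ndarray:
    """NDVI = (NIR - Red) / (NIR + Red); NaN where the denominator is zero."""
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    denom = nir + red
    out = np.full(denom.shape, np.nan)
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out

def stress_flags(ndvi_now, ndvi_baseline, max_drop: float = 0.2) -> np.ndarray:
    """Flag pixels whose NDVI fell more than `max_drop` (relative) below baseline.

    `max_drop` is an assumed placeholder, not a calibrated agronomic threshold.
    """
    now = np.asarray(ndvi_now, dtype=np.float64)
    base = np.asarray(ndvi_baseline, dtype=np.float64)
    flags = np.zeros(base.shape, dtype=bool)
    # A relative drop is only meaningful where the baseline shows vegetation
    healthy = base > 0
    flags[healthy] = (base[healthy] - now[healthy]) / base[healthy] > max_drop
    return flags
```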

| Architecture | Specialization | Accuracy |
|---|---|---|
| ResNet-50 | Land Cover Classification | 94.2% |
| U-Net | Object Segmentation | 89.7% |
| Vision Transformer | Temporal Analysis | 91.5% |

Training processes incorporate multi-source data fusion, combining radar, optical, and thermal inputs. This approach maintains 85% cross-region consistency, allowing models trained in North America to effectively analyze Asian terrain. Continuous learning mechanisms adapt to new sensor technologies without requiring complete retraining.
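One common way to combine radar, optical, and thermal inputs is early fusion: convert each source to comparable units, standardize every band, and stack them as extra channels of a single model input. The sketch below assumes the rasters are already co-registered on the same grid and that SAR arrives as linear backscatter; the source does not say which fusion strategy the cited systems use, so treat this as one illustrative option alongside feature-level or decision-level fusion.

```python
import numpy as np

def standardize(bands: np.ndarray) -> np.ndarray:
    """Scale each band of a (bands, H, W) array to zero mean and unit variance."""
    mean = bands.mean(axis=(1, 2), keepdims=True)
    std = bands.std(axis=(1, 2), keepdims=True)
    # Constant bands (std == 0) are centred but not rescaled
    return (bands - mean) / np.where(std == 0, 1.0, std)

def early_fusion(optical: np.ndarray, sar_linear: np.ndarray, thermal: np.ndarray) -> np.ndarray:
    """Stack co-registered optical, radar and thermal rasters into one input.

    All inputs are (bands, H, W) on the same grid. SAR backscatter is first
    converted from linear power to decibels, because raw values span
    several orders of magnitude.
    """
    sar_db = 10.0 * np.log10(np.clip(sar_linear, 1e-6, None))
    stacks = [standardize(x.astype(np.float64)) for x in (optical, sar_db, thermal)]
    return np.concatenate(stacks, axis=0)
```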

AI Use Case – Satellite-Image Reconnaissance Analysis

Modern space-based observation networks achieve their full potential when paired with adaptive processing frameworks. A 2023 study by Miller et al. examined 50 multi-year datasets, revealing how vision models transform raw spectral information into strategic insights. Their work highlights Sentinel-2’s dominance, with 13 specialized datasets enabling precise terrain classification across continents.

Integrating AI with Satellite Data Streams

Effective implementation requires robust preprocessing pipelines. These systems handle multi-spectral inputs while compensating for atmospheric interference and seasonal variations. Temporal consistency remains critical – models must recognize identical locations under different lighting and weather conditions.
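One simple way to help a model recognize identical locations under different lighting is relative radiometric normalization: rescale a new acquisition so each band's mean and spread match a reference image of the same area. The `match_to_reference` helper below is an illustrative sketch under a linear-shift assumption; operational pipelines more often rely on physically based atmospheric correction and on pseudo-invariant features such as rooftops or roads.

```python
import numpy as np

def match_to_reference(target: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Linearly rescale each band of `target` to the mean and std of `reference`.

    Both arrays are (bands, H, W) images of the same location on different
    dates. This removes global brightness and contrast shifts (sun angle,
    haze) but also erases genuine scene-wide change, so it suits short gaps.
    """
    t = target.astype(np.float64)
    r = reference.astype(np.float64)
    t_mean = t.mean(axis=(1, 2), keepdims=True)
    t_std = t.std(axis=(1, 2), keepdims=True)
    r_mean = r.mean(axis=(1, 2), keepdims=True)
    r_std = r.std(axis=(1, 2), keepdims=True)
    # Per-band gain; guard against constant bands
    gain = r_std / np.where(t_std == 0, 1.0, t_std)
    return (t - t_mean) * gain + r_mean
```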

Miller’s team demonstrated 92% accuracy in infrastructure monitoring through adaptive fusion of radar and optical data. Their approach reduced false positives by 34% compared to traditional methods, particularly in military target recognition scenarios.

Case Studies and Performance Benchmarks

Commercial deployments show tangible results:

- Urban planners cut permit review times by 68% using automated change detection

- Energy companies improved pipeline inspection accuracy to 94% through thermal pattern analysis

- Environmental agencies track deforestation with 150-meter resolution updates every 48 hours

Standardized evaluation metrics now assess spatial precision and temporal reliability simultaneously. This dual focus ensures models perform consistently across desert, tropical, and arctic environments – a requirement for global monitoring systems.

Pre-processing Techniques for Effective Satellite Image Analysis

Raw satellite imagery presents unique challenges that demand specialized preparation before analysis. Weather patterns and sensor limitations create gaps requiring intelligent solutions to maintain data integrity across vast geographic regions.

Data Augmentation and Interpolation Methods

Modern systems employ temporal interpolation to bridge observation gaps between satellite passes. Cubic spline techniques predict missing values with 89% accuracy, enabling continuous monitoring of fast-changing events like flood progression. These methods prove vital for maintaining temporal consistency in disaster response scenarios.
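Gap-filling with a cubic spline can be sketched per pixel: fit a spline through the valid observations of a time series and evaluate it at the dates lost to clouds. This example uses SciPy's `scipy.interpolate.CubicSpline` with its default boundary conditions; the `fill_gaps` helper and the convention that NaN marks a missing pass are assumptions for illustration.

```python
import numpy as np
from scipy.interpolate import CubicSpline

def fill_gaps(days: np.ndarray, values: np.ndarray) -> np.ndarray:
    """Fill NaN observations (e.g. cloudy passes) in one pixel's time series.

    days:   acquisition times, strictly increasing
    values: measurements with NaN where the pass was unusable
    Returns a new array; the input is not modified.
    """
    days = np.asarray(days, dtype=np.float64)
    values = np.asarray(values, dtype=np.float64)
    valid = ~np.isnan(values)
    if valid.sum() < 2:
        raise ValueError("need at least two valid observations")
    # Fit only through the clear-sky observations
    spline = CubicSpline(days[valid], values[valid])
    filled = values.copy()
    filled[~valid] = spline(days[~valid])
    return filled
```

This sketch also extrapolates gaps before the first or after the last valid observation, which is far less reliable than interpolating between passes; many pipelines leave those values empty instead.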

Augmentation strategies expand training datasets through spectral adjustments and synthetic aperture simulations. Geometric transformations preserve spatial relationships while introducing variance – critical for detecting rare events. This approach reduces model training time by 40% compared to raw data processing.
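Geometric augmentation for overhead imagery is easy to get subtly wrong: the label mask must receive exactly the same rotation or flip as the image, while spectral jitter should touch the image only. The sketch below, a hypothetical `augment` helper, draws one of the eight combinations of 90-degree rotations and flips plus a random per-band gain; the ±10% gain range is an assumed default, not a recommended value.

```python
import numpy as np

def augment(image: np.ndarray, mask: np.ndarray, rng: np.random.Generator,
            max_gain: float = 0.1):
    """Return a randomly rotated/flipped copy of (image, mask) with spectral jitter.

    image: (bands, H, W); mask: (H, W) label map.
    Overhead imagery has no canonical "up", so 90-degree rotations and flips
    keep scenes realistic. Geometric ops are applied identically to image and
    mask; the per-band gain in [1 - max_gain, 1 + max_gain) affects the image only.
    """
    k = rng.integers(0, 4)  # number of 90-degree rotations
    image = np.rot90(image, k, axes=(1, 2))
    mask = np.rot90(mask, k, axes=(0, 1))
    if rng.random() < 0.5:  # horizontal flip with probability 0.5
        image = image[:, :, ::-1]
        mask = mask[:, ::-1]
    gains = rng.uniform(1 - max_gain, 1 + max_gain, size=(image.shape[0], 1, 1))
    return image * gains, mask.copy()
```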

Managing Multi-modal and Temporal Data

Atmospheric correction compensates for haze and scattering, while cloud-masking algorithms flag pixels obscured by cloud using multi-spectral tests. By comparing infrared and visible light patterns, systems achieve 95% haze removal efficiency. Together, these steps ensure consistent quality across images captured under different weather conditions.
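A classic and much simpler haze correction than the infrared/visible comparison described above is dark-object subtraction: assume each scene contains pixels (deep water, deep shadow) that should be nearly black, and treat whatever brightness they show as atmospheric scattering to subtract from the whole band. The sketch below swaps in that technique for illustration; the 0.1 percentile used to pick the "dark object" is an assumption, and the method does nothing for opaque clouds, which must be masked instead.

```python
import numpy as np

def dark_object_subtraction(image: np.ndarray, percentile: float = 0.1) -> np.ndarray:
    """Simple haze correction: subtract each band's near-minimum value.

    image: (bands, H, W) reflectance or digital numbers.
    The `percentile`-th value of each band is treated as the haze offset,
    subtracted, and negative results are clipped to zero.
    """
    img = image.astype(np.float64)
    dark = np.percentile(img, percentile, axis=(1, 2), keepdims=True)
    return np.clip(img - dark, 0.0, None)
```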

Cloud-native architectures now handle petabyte-scale datasets through distributed processing. Compression algorithms reduce storage needs by 70% without losing critical details. These systems automatically align radar, optical, and thermal inputs into unified geospatial timelines – a prerequisite for cross-sensor analysis.
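Distributed processing usually starts by cutting each scene into overlapping tiles that workers can handle independently. The generator below, a hypothetical `iter_tiles` helper, only computes window coordinates, so a worker can read just its window from cloud storage instead of loading the whole raster; the 512-pixel tile and 64-pixel overlap are assumed defaults.

```python
from typing import Iterator, List, Tuple

def iter_tiles(height: int, width: int, tile: int = 512, overlap: int = 64
               ) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (row0, row1, col0, col1) windows that cover a height x width raster.

    Neighbouring tiles share at least `overlap` pixels so objects cut by one
    tile edge appear whole in the next. The last tile in each dimension is
    shifted inward to stay full size; dimensions smaller than `tile` get a
    single, smaller window.
    """
    if overlap >= tile:
        raise ValueError("overlap must be smaller than tile size")
    step = tile - overlap

    def starts(size: int) -> List[int]:
        if size <= tile:
            return [0]
        s = list(range(0, size - tile, step))
        s.append(size - tile)  # final tile flush with the far edge
        return s

    for r in starts(height):
        for c in starts(width):
            yield r, min(r + tile, height), c, min(c + tile, width)
```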

Recent implementations demonstrate how integrated pipelines process 12TB daily from 15 satellite sources. Such capabilities enable real-time updates for applications ranging from crop health monitoring to border surveillance operations.

Data Labeling and Image Annotation Strategies

Accurate interpretation of orbital photography begins with precise categorization – a process requiring meticulous attention to detail. Specialized platforms transform raw pixels into structured intelligence, enabling systems to recognize everything from urban sprawl to crop health patterns.

Precision Tools for Complex Tasks

Platforms like Viso Suite streamline annotation workflows with pixel-level accuracy. Their interfaces allow geologists to outline mineral deposits and urban planners to tag infrastructure simultaneously. The integration of CVAT’s open-source framework enables collaborative labeling across distributed teams.

Mastering Large-Scale Challenges

Processing continent-sized datasets demands innovative solutions. Automated pre-labeling reduces manual work by 60%, using existing models to suggest initial tags. Quality assurance protocols maintain consistency across annotations – critical when comparing desert erosion patterns to tropical deforestation.
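Automated pre-labeling typically hinges on a confidence threshold: suggestions the model is sure about are accepted (and spot-checked), while the rest go to annotators. The sketch below uses hypothetical names (`Suggestion`, `route_prelabels`) and an assumed 0.9 cut-off; in practice the threshold would be tuned per class against a sample of human-reviewed labels.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Suggestion:
    tile_id: str
    label: str
    confidence: float  # model score in [0, 1]

def route_prelabels(suggestions: List[Suggestion], accept_at: float = 0.9
                    ) -> Tuple[List[Suggestion], List[Suggestion]]:
    """Split model suggestions into auto-accepted labels and a human review queue."""
    accepted = [s for s in suggestions if s.confidence >= accept_at]
    review = [s for s in suggestions if s.confidence < accept_at]
    # Least certain items first, where annotator time helps most
    review.sort(key=lambda s: s.confidence)
    return accepted, review
```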

Three strategies overcome scaling hurdles:

- Version-controlled workflows let experts refine labels across time zones

- Domain-specific taxonomies ensure labels meet the standards of each field, from agriculture to defense

- Distributed storage systems handle petabyte-scale projects without latency

Recent deployments show annotated datasets improving model accuracy by 38% in terrain classification tasks. As one geospatial analyst noted: “Proper labeling turns abstract shapes into actionable maps – it’s the bedrock of reliable analysis.”

Neural Network Configuration and Model Training Approaches

Customizing neural architectures for orbital observation requires balancing computational efficiency with environmental adaptability. Modern systems must process multi-spectral inputs while maintaining accuracy across diverse terrains – from urban sprawls to polar ice caps.

Selecting Appropriate Architectures

The ResNet50 framework has become a cornerstone in geospatial analysis, demonstrating 89% transfer learning efficiency in Dimitrovski’s landmark study. Researchers adapted this architecture using unsupervised learning techniques to extract features from 2.7 million unlabeled images. Key configuration parameters include:

- Dynamic learning rates adjusted for seasonal data variations

- Batch sizes optimized for GPU memory constraints

- Custom loss functions accounting for cloud cover interference
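A loss function that accounts for cloud cover can be as simple as excluding obscured pixels from the average, so the model is never penalized for guessing what lies under a cloud. The NumPy sketch below shows a masked pixel-wise cross-entropy; it assumes a per-pixel boolean cloud mask is available from preprocessing, and the function name is hypothetical. Training frameworks support the same idea natively (for example, PyTorch's `CrossEntropyLoss` accepts an `ignore_index`).

```python
import numpy as np

def masked_cross_entropy(logits: np.ndarray, labels: np.ndarray,
                         cloud_mask: np.ndarray) -> float:
    """Mean per-pixel cross-entropy over cloud-free pixels only.

    logits:     (classes, H, W) raw model scores
    labels:     (H, W) integer class indices
    cloud_mask: (H, W) boolean, True where the pixel is obscured by cloud
    """
    # Numerically stable log-softmax over the class axis
    shifted = logits - logits.max(axis=0, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    # Log-probability assigned to the true class at every pixel
    picked = np.take_along_axis(log_probs, labels[None, :, :], axis=0)[0]
    clear = ~cloud_mask
    if not clear.any():
        return 0.0  # a fully clouded tile contributes no loss
    return float(-picked[clear].mean())
```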

Fine-Tuning and Evaluating Pre-trained Models

Transfer learning accelerates deployment by building on existing visual recognition capabilities. A 2023 benchmark showed fine-tuned models achieving 94% accuracy with 40% less training data compared to from-scratch approaches. Performance metrics now extend beyond simple accuracy:

| Metric | Purpose | Threshold |
|---|---|---|
| Spatial Consistency | Edge detection precision | ≥92% |
| Temporal Stability | Seasonal variation handling | ≤5% drift |
| Cross-Sensor Reliability | Multi-platform compatibility | ≥88% |

Distributed training pipelines enable processing of 8K resolution images across server clusters. As one engineer noted: “The real challenge lies in maintaining model integrity when analyzing desert erosion patterns one day and tropical storms the next.”

Practical Code Implementation for Satellite Image Recognition

Translating advanced recognition capabilities into operational systems demands careful engineering. A typical implementation begins by loading a pre-trained ResNet50 model, a step simplified by modular code design. Developers then configure validation checks to ensure consistent performance across varying orbital conditions.

Streamlining Model Deployment

Production environments require robust error handling for mission-critical applications. Containerized solutions using Docker maintain consistency between development and deployment phases. Kubernetes orchestrates scalable processing across ground stations and edge devices, handling 8K resolution files efficiently.

Memory optimization proves essential when working with high-resolution datasets. Batch processing techniques reduce GPU load while maintaining 92% inference speed. One aerospace team achieved 40% faster analysis by implementing dynamic resource allocation in their prediction pipelines.
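Batch processing bounds memory by feeding the model a fixed number of tiles at a time instead of a whole scene. A minimal, framework-agnostic sketch of the batching step follows; the `batched` helper is hypothetical (Python 3.12 ships a similar `itertools.batched`, which yields tuples), and the right batch size depends on tile dimensions and available GPU memory.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")

def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group a stream of tiles into lists of `batch_size` (the last may be shorter).

    Running inference one batch at a time keeps peak memory proportional to
    batch_size tiles, regardless of how large the full scene is.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch
```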

Orbital Computing Constraints

On-board implementations face unique power and latency challenges. Lightweight model variants maintain 85% accuracy while using 60% less memory than standard architectures. Engineers often employ quantization techniques – reducing numerical precision without sacrificing critical spatial details.
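Quantization can be illustrated with symmetric per-tensor int8 quantization: each float weight is stored as an 8-bit integer plus one shared scale factor, cutting weight storage to a quarter of float32. The sketch below is a simplified illustration with hypothetical helper names; production toolchains (for example TensorFlow Lite or PyTorch's quantization utilities) add per-channel scales, activation calibration, and integer kernels.

```python
import numpy as np

def quantize_int8(weights: np.ndarray):
    """Symmetric per-tensor quantization: w ≈ scale * q with q in [-127, 127]."""
    max_abs = float(np.abs(weights).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate float32 weights; error per weight is at most scale / 2."""
    return q.astype(np.float32) * scale
```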

Real-world deployments demonstrate these principles in action. A recent agricultural monitoring project processed 12TB daily using optimized inference code. Their architecture separated preprocessing, model execution, and result formatting into discrete microservices – a design pattern now adopted across the industry.

FAQ

How does computer vision improve satellite image analysis?

Computer vision automates the detection of patterns, objects, and changes in satellite imagery using techniques like convolutional neural networks (CNNs). This reduces manual effort while enhancing accuracy in tasks like land classification, infrastructure monitoring, and disaster response.

What industries benefit most from satellite reconnaissance analysis?

Agriculture, urban planning, defense, environmental monitoring, and energy sectors leverage satellite data for crop health assessment, urban growth tracking, strategic intelligence, deforestation detection, and pipeline management. Platforms like Google Earth Engine and Sentinel Hub enable scalable insights.

What challenges arise when handling large satellite datasets?

High-resolution imagery creates massive data volumes, requiring efficient storage, preprocessing, and distributed computing. Temporal analysis adds complexity in tracking changes over time. Tools like TensorFlow and PyTorch help manage these workflows with GPU acceleration.

How do pre-trained models accelerate satellite image recognition?

Models like ResNet or U-Net, pre-trained on datasets like ImageNet, can be fine-tuned for specific tasks—such as road detection or deforestation monitoring—reducing training time. Transfer learning adapts existing feature extraction layers to new geospatial contexts.

What role does data labeling play in training vision models?

Accurate annotations are critical for supervised learning. Tools like CVAT and Viso Suite streamline labeling for object detection (e.g., vehicles, buildings) or semantic segmentation. Crowdsourcing platforms like Amazon Mechanical Turk scale annotations for large datasets.

Can AI analyze real-time satellite imagery effectively?

Yes. Edge computing and on-board processing systems, such as NVIDIA Jetson, enable real-time analysis for applications like wildfire detection or maritime surveillance. Low-latency algorithms prioritize speed while keeping any loss of precision small.

How is multi-modal data used in satellite analysis?

Combining SAR (synthetic aperture radar), optical, and thermal imagery improves reliability. For example, SAR penetrates cloud cover, while optical data provides visual context. Fusion techniques enhance tasks like flood mapping or crop yield prediction.
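The flood-mapping case can be sketched as a simple rule-based fusion: smooth open water reflects radar energy away from the sensor and appears dark in SAR, while the optical Normalized Difference Water Index, NDWI = (Green − NIR) / (Green + NIR), is positive over water but only usable where the sky is clear. In the sketch below the −18 dB and 0.0 thresholds are assumed placeholders that vary by sensor, polarization, and scene, and requiring both sensors to agree wherever the pixel is cloud-free is one conservative design choice among several.

```python
import numpy as np

def flood_map(sar_vv_db, green, nir, cloud_mask,
              sar_threshold_db: float = -18.0, ndwi_threshold: float = 0.0) -> np.ndarray:
    """Combine SAR and optical water evidence into one boolean flood mask."""
    sar_vv_db = np.asarray(sar_vv_db, dtype=np.float64)
    green = np.asarray(green, dtype=np.float64)
    nir = np.asarray(nir, dtype=np.float64)
    cloud_mask = np.asarray(cloud_mask, dtype=bool)
    # Low backscatter suggests smooth open water
    sar_water = sar_vv_db < sar_threshold_db
    denom = green + nir
    ndwi = np.divide(green - nir, denom, out=np.zeros_like(denom), where=denom != 0)
    optical_water = ndwi > ndwi_threshold
    # Under cloud, trust radar alone; where clear, require both sensors to agree
    return np.where(cloud_mask, sar_water, sar_water & optical_water)
```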

What metrics evaluate satellite vision model performance?

Precision, recall, and IoU (Intersection over Union) measure detection accuracy. For land cover classification, metrics like overall accuracy and F1-score are standard. Benchmarks often use datasets like SpaceNet or EuroSAT to ensure reproducibility.
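For a binary mask such as building footprints or flooded area, these metrics all derive from counts of true positives, false positives, and false negatives. The helpers below are a minimal sketch with hypothetical function names; for multi-class land cover, overall accuracy is simply the fraction of pixels whose predicted class matches the reference.

```python
import numpy as np

def segmentation_metrics(pred: np.ndarray, truth: np.ndarray) -> dict:
    """Precision, recall, F1 and IoU for a binary prediction mask."""
    pred = np.asarray(pred).astype(bool)
    truth = np.asarray(truth).astype(bool)
    tp = int(np.sum(pred & truth))   # predicted and actually present
    fp = int(np.sum(pred & ~truth))  # predicted but absent
    fn = int(np.sum(~pred & truth))  # present but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}

def overall_accuracy(pred, truth) -> float:
    """Fraction of pixels whose predicted class equals the reference class."""
    return float(np.mean(np.asarray(pred) == np.asarray(truth)))
```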