Someone once lost trust after a single, flawless message convinced them to click a link. That quiet moment of doubt is where technical risk meets human cost.

This report stitches together research from leading teams to show how threat actors speed up their operations. Google Threat Intelligence Group (GTIG), IBM X-Force, and TRM Labs describe models that help attackers with code troubleshooting, multilingual content, and rapid reconnaissance.

The core insight: this is a productivity shift, not a magic leap—yet tempo and volume of attacks rise, raising the threat for American enterprises and critical services.

Readers will find clear, actionable guidance for security teams: how to spot scaled phishing, protect identity, and use detection to outpace attackers. We frame defenses as opportunity—use analytics, adversarial testing, and continuous training to regain the advantage.

Key Takeaways

- Models amplify attacker productivity; speed and scale are the main risk.

- APT groups and coordinated actors use tools for localization and troubleshooting.

- Platform safety blocks some harms; many permitted tasks still help attackers.

- Phishing, deepfakes, and synthetic identities reshape social engineering baselines.

- Security leaders can counter with behavioral analytics and proactive testing.

Executive overview: What the data says about AI-powered threat activity

Across multiple reports, the evidence is straightforward: threat actors are applying models to speed reconnaissance, code troubleshooting, and content localization. This raises the number of attacks and broadens campaign reach without producing novel, breakthrough capabilities.

Key takeaways

- GTIG notes heavy use by Iranian and Chinese groups for research and localization; public jailbreaks largely failed.

- IBM X-Force documents phishing that can be crafted in minutes—an “intern effect” that lifts less-skilled operators.

- TRM maps maturity tiers—Horizon, Emerging, Mature—highlighting a trajectory toward greater autonomy and fraud risk.

For U.S. defenders the response is tactical: scale detection, harden identity checks, and run adversarial evaluations of models. Focus on behavioral analytics and intelligence-led controls to spot higher volumes of phishing and synthetic identity schemes.

| Report | Primary finding | Defender implication |
|---|---|---|
| GTIG | Localization, recon, code help; jailbreaks failed | Emphasize model hardening; monitor multilingual signals |
| IBM X-Force | Phishing created rapidly; supply-chain focus | Harden email controls; simulate scaled phishing |
| TRM / Treasury | Maturity tiers; deepfakes and synthetic identity risk | Strengthen KYC; integrate cross-signal fraud detection |

For further context and practical guidance, consult TRM’s detailed report on the rise of model-enabled crime.

Scope and methodology of this trend analysis/report

Our method blends telemetry, financial reporting, and offensive testing to build a clear signal. The goal: convert varied inputs into actionable findings for U.S. security teams.

Sources analyzed

We triangulated three primary streams: GTIG platform telemetry, TRM Labs’ financial-sector research, and IBM X-Force red-team observations. Each source contributes distinct visibility across the attack lifecycle.

- GTIG: direct logs showing attempts to use models for code and reconnaissance.

- TRM: maturity framework and sector alerts on deepfakes and synthetic identities.

- IBM X-Force: empirical tests that document time compression on phishing workflows.

“We privilege examples that are repeatable and verifiable, focusing on actor behavior observed in the wild.”

We structured findings by lifecycle stage, actor typology, and sector impact. This makes the report useful for teams that must prioritize limited resources.

| Source | Primary evidence | Defensive implication |
|---|---|---|
| GTIG | Telemetry on prompts, recon, and code assistance | Monitor multilingual signals; harden model-exposed flows |
| TRM Labs | Maturity tiers; financial alerts on synthetic identity | Strengthen KYC; integrate cross-signal fraud detection |
| IBM X-Force | Red-team phishing tests and agent trends | Simulate scaled phishing; update training and DMARC |

Limitations are explicit: datasets cover specific time windows and platforms. Still, cross-source agreement raises confidence that observed threats merit operational controls.

For deeper context on model-related adversarial concerns and sector guidance, consult a technical brief on adversarial threat intelligence and a strategic analysis of cyberwarfare trends.

AI Misuse in Hacking

The most notable shift is tempo: operations move faster and at greater scale, not necessarily with new exploits.

Generative tools speed routine tasks—reconnaissance, multilingual content, and script troubleshooting—so actors can run higher-volume campaigns with polished material.

Threats stem from volume and quality. Attackers now draft persuasive narratives, localize pretexts, and refine technical notes that support operations. Safety filters often block overtly malicious code, but content scaffolding helps attackers move through the lifecycle faster.

Security teams should assume flawless prose: poor grammar no longer signals fraud. Oversharing of corporate information fuels tailored attacks and raises success rates for social engineering.

- Focus defenses: layered controls, behavioral analytics, and telemetry over signature-only rules.

- Elevate training: test multilingual pretexts and polished social-engineering scenarios.

- Governance: reduce public data exposure and enforce out-of-band verification for critical requests.

“Operational risk is less about novel zero-days and more about efficient reuse of known tactics, wrapped in convincing content.”

How government-backed threat actors are leveraging generative models

Several nation-state operators leverage model-driven workflows to move from scouting targets to refining payloads more quickly.

Research and reconnaissance tasks dominate early-stage activity. Iranian-linked actors were the heaviest users, combining defense-focused research, CVE review, and localized content for Farsi, Hebrew, and English audiences.

Chinese groups concentrated on post-compromise objectives: lateral movement, privilege escalation, data exfiltration, and detection-evasion research. These tasks speed goal-oriented operations and lower labor needs.

From technical refinement to payload development

North Korea’s profile showed practical development: infrastructure mapping, scripting support, payload development, and even drafting employment cover letters to aid placement efforts.

Heaviest use patterns and contrast

Russian activity was limited during the study window, focused on code conversion and added encryption—suggesting different doctrine or tool choices.

Information operations and amplification

IO campaigns used models to craft personas, translate messaging, and scale reach across social media. Localization improved trust signals and broadened campaign audiences.

- Key point: vulnerabilities research targeted public CVEs and known techniques, emphasizing speed over novelty.

- Operational impact: access and data goals remain constant—actors probe organizations, refine code snippets, and hunt hosting options.

“The net effect is efficiency: more campaigns iterate with less friction while content quality and localization raise success rates.”

Inside jailbreaks and prompt injection attempts

Low-effort jailbreak prompts remain a common probe, with actors copying public recipes and making minor edits to map model responses.

GTIG observed many such attempts. Requests for DDoS Python scripts, ransomware-like steps, Chrome infostealers, and Gmail phishing techniques were all met with filtered outputs or declines.

Key defenses include strict input/output validation, adversarial testing, and continuous model hardening. These layers stop many basic attacks.

Detection must treat prompt sequences as context: a benign code-conversion request can escalate into disallowed content over several turns. Telemetry on blocked attempts helps tune filters and prioritize fixes.
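One way to picture sequence-aware detection is a small monitor that carries a decayed risk score across turns, so that several individually mild requests can still cross a threshold together. The sketch below is illustrative only: the `RISK_TERMS` keywords, their weights, the `threshold`, and the `decay` factor are all assumptions made for this example, and a production system would more likely rely on a trained classifier than on keyword matching.

```python
from dataclasses import dataclass, field

# Hypothetical keyword weights, chosen only for illustration.
RISK_TERMS = {
    "encrypt files": 3,
    "disable logging": 3,
    "bypass edr": 4,
    "exfiltrate": 3,
    "obfuscate": 2,
}


@dataclass
class ConversationMonitor:
    threshold: float = 6.0   # assumed cutoff for flagging a conversation
    decay: float = 0.8       # older turns contribute less to the score
    score: float = 0.0
    history: list = field(default_factory=list)

    def observe(self, prompt: str) -> bool:
        """Score one turn and return True when the running total crosses the threshold."""
        text = prompt.lower()
        turn_score = sum(w for term, w in RISK_TERMS.items() if term in text)
        self.score = self.score * self.decay + turn_score
        self.history.append((prompt, turn_score, self.score))
        return self.score >= self.threshold


monitor = ConversationMonitor()
turns = [
    "Convert this Python script to Go",
    "Now make it encrypt files in a folder",
    "Add code to disable logging and obfuscate strings",
]
flags = [monitor.observe(t) for t in turns]
```

In this toy run the third turn is flagged only because of what came before it; the same prompt sent to a fresh monitor stays under the threshold, which is the point of scoring the sequence rather than the single message.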

- Document failed jailbreak chains to teach users and refine policy.

- Use red-team tools and tests to simulate evolving threats.

- Enforce sandboxes and role-based constraints as models gain tools and autonomy.

“Treat model abuse surfaces as living systems that require ongoing hardening.”

| Observed probe | Outcome | Defensive action |
|---|---|---|
| DDoS script requests | Blocked / safe decline | Input validation; deny code execution |
| Phishing technique details | Filtered / refusal | Policy alignment; telemetry logging |
| Malware / infostealer outlines | Declined output | Adversarial testing; red-team simulations |

For deeper technical context on prompt injection and practical guidance, consult prompt injection guidance. Organizations should turn blocked-attempt data into security awareness and acceptable-use rules for model access.

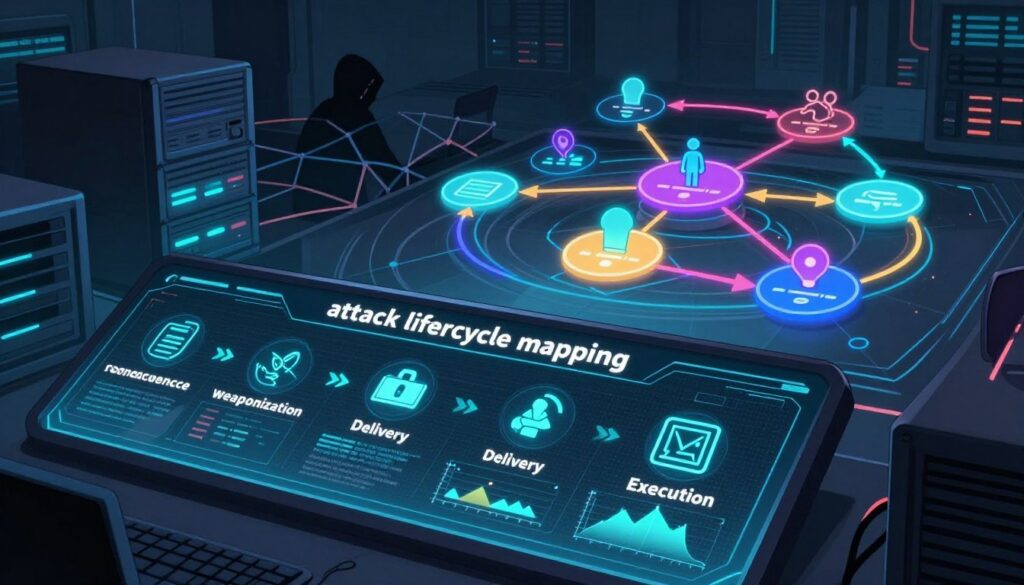

Attack lifecycle mapping: Where AI accelerates campaigns

Small, stage-specific efficiencies now compound across a campaign. That change shortens timelines and raises volume.

Reconnaissance and weaponization

Recon accelerates when models summarize targets, list hosting options, and map public vulnerabilities to priorities.

Weaponization benefits from code conversion, debugging, and adding encryption—reducing trial-and-error for tooling and malware development.

Delivery and exploitation

Delivery improves through higher-quality messaging: multilingual phishing and tailored templates that match current events.

Exploitation assistance focuses on understanding EDR components and protocol weak points, such as WinRM, while direct exploit code is generally blocked.

Installation, C2, and actions on objectives

Installation research targets stealth methods—signed plugins, certificate tricks, and credential tooling—to sustain access.

C2 planning uses configuration snippets and event-log techniques to manage networks and route commands. Actions on objectives grow more automated with Selenium workflows and scripted exfiltration.

Defender priorities:

- Pivot detection to behavior: watch for abnormal authentication and scripted process chains.

- Break stage chains: harden email, enforce plugin signing, and monitor lateral movement markers.

- Turn small probes into telemetry: blocked guidance and example chains inform tuning and training.

| Stage | Example uses | Defender focus |
|---|---|---|
| Recon | Target summaries; CVE context | Limit exposed data; monitor reconnaissance signals |
| Weaponization | Code conversion; encryption add-ons | Scan build pipelines; detect unusual tooling |
| Delivery & Exploitation | Phishing emails; EDR analysis | Harden mail systems; simulate multilingual campaigns |
| Installation & C2 | Signed plugins; event-log C2 | Enforce signing; monitor event-log anomalies |
| Actions | Selenium automation; bulk uploads | Detect scripted behaviors; limit bulk transfer paths |

“Incremental guidance at each stage compounds into materially faster operations.”
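Detecting scripted behavior, as the lifecycle table suggests for the actions stage, can start with something as simple as timing. Automated workflows tend to fire events at near-constant intervals, while people are irregular. The following is a minimal sketch under that assumption; the function name `looks_scripted` and the `min_events` and `max_cv` defaults are hypothetical values picked for the example, not tuned thresholds.

```python
from statistics import mean, pstdev


def looks_scripted(timestamps, min_events=5, max_cv=0.1):
    """Return True when the gaps between events are suspiciously uniform.

    Uses the coefficient of variation (std / mean) of inter-event gaps.
    Timestamps are seconds; thresholds are illustrative assumptions.
    """
    if len(timestamps) < min_events:
        return False  # not enough evidence either way
    ordered = sorted(timestamps)
    gaps = [b - a for a, b in zip(ordered, ordered[1:])]
    avg = mean(gaps)
    if avg == 0:
        return True  # every event at the same instant is itself a burst
    return pstdev(gaps) / avg <= max_cv


bot_uploads = [0, 2.0, 4.0, 6.1, 8.0, 10.0]   # steady two-second cadence
human_uploads = [0, 3, 11, 14, 40, 47]        # irregular pauses
```

A rule like this is easy to evade with random jitter, so it works best as one signal among several, for example alongside transfer volume and destination reputation.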

From assistants to agents: The evolution of AI-enabled operations

Models now act as a rapid assistant for routine tasks, shortening attacker timelines across development and operations.

Today’s “intern” role supports code debugging, content drafting, and multilingual edits. IBM X-Force found that effective phishing can be crafted in minutes rather than hours—language quality removes classic red flags and speeds delivery.

Emerging agentic workflows

Tools and plugins let models chain actions across browsers, storage, and communications. That ecosystem growth hints at semi-autonomous agents that can identify targets, plan steps, and execute sequences with limited oversight.

Research functions scale rapidly: models synthesize documentation, error logs, and stack traces so less experienced attackers can act with confidence. Media manipulation—voice, image, and video—folds into these workflows, widening the scope of social engineering.

- Operational risk: assistance blurs into action as autonomy rises.

- Defender insight: preemptive adversarial testing should cover full tool-use paths, not just prompts.

- Governance: pair technical guardrails with business-process checks like out-of-band verification.

“Decisive governance and rigorous development lifecycle testing will determine who adopts agentic tools safely and who faces escalating threats.”

We recommend scenario planning and adversarial evaluations to anticipate emergent behaviors. This way, defenders gain practical insights and can shape safer adoption of these tools and agents.

Maturity tiers of AI-enabled crime: Horizon, Emerging, and Mature

Tiers map how tools, services, and autonomy combine to change campaign speed and scale. This framework helps security teams link observations to concrete controls and tests.

Horizon: autonomous attacks and scaled laundering as future risks

The Horizon tier frames future risks in which agents discover vulnerabilities and run automated laundering flows. Such systems could chain tools and wallets to move value with little human oversight.

Action: plan governance for tool access, objective functions, and fail-safes now.

Emerging: deepfakes, phishing at scale, and enhanced fraud operations

The Emerging tier captures current shifts: deepfakes used for onboarding fraud, high-volume phishing, and fraud workflows augmented by third-party services.

Operators boost operations with polished content and translated pretexts. We see more campaigns that are faster and harder to spot.

Mature: when systems surpass human-driven campaigns

The Mature tier envisions systems coordinating end-to-end attacks, interacting with browsers, wallets, and marketplaces autonomously. The “Terminal of Truths” example shows an agent accumulating digital assets without ongoing human direction.

Defender priority: simulate agentic workflows and build revocation and audit paths for misused services.

“Early indicators—sustained multi‑modal disinformation and autonomous asset flows—signal a shift toward mature operations.”

- Actors’ trajectories vary; monitor service adoption to spot tipping points.

- Link each tier to controls, test scenarios, and escalation playbooks.

- Regulators and providers should define standards for agent behavior and auditability.

Social engineering in the age of flawless prose

Phishing no longer relies on sloppy grammar; today’s messages are crafted to read like trusted notes from colleagues.

Quality and speed matter: IBM X-Force found that effective phishing can be generated in minutes and in many languages. In 2024, the U.S. recorded roughly $12.5B in losses tied to fraud and phishing, illustrating how scaled, polished content raises risk.

Phishing emails, messages, and multilingual localization at scale

Phishing emails and short messages now show impeccable tone and role-aware details. Public profiles and social media produce target maps that make pretexts believable across markets.

Deepfakes and synthetic media: voice, video, and identity manipulation

Deepfake video and voice increase impersonation threats—supplier calls and C-level requests may bypass simple checks. FinCEN and Europol note rising use of synthetic media in fraud.

Rethinking training: emotional triggers, pretexts, and cadence

Training should move from “spot the typo” to simulated scenarios that expose urgency cues and scripted manipulation. Require out-of-band verification for payments and credential changes.

“Teach individuals to verify process, not trust prose.”
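Verifying process rather than prose can be encoded as a simple rule: a high-risk request may proceed only after confirmation on a channel different from the one it arrived on. The sketch below assumes a hypothetical `Request` record and an illustrative `HIGH_RISK_ACTIONS` set; real workflows would tie this to a ticketing or payment system and to known-good contact details.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative list of actions that always need out-of-band confirmation.
HIGH_RISK_ACTIONS = {
    "wire_transfer",
    "payroll_change",
    "vendor_bank_change",
    "credential_reset",
}


@dataclass
class Request:
    action: str
    channel: str                          # where the request arrived, e.g. "email"
    verified_via: Optional[str] = None    # channel used for the confirmation, if any


def may_proceed(request: Request) -> bool:
    """Allow low-risk actions; require a second, different channel for high-risk ones."""
    if request.action not in HIGH_RISK_ACTIONS:
        return True
    return request.verified_via is not None and request.verified_via != request.channel
```

Note that replying to the same email thread does not count as verification here: `Request("wire_transfer", "email", "email")` is still refused, while a callback on a separate channel is accepted.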

| Threat | Characteristic | Best defense |
|---|---|---|
| Phishing | Localized, polished content | Simulate multilingual campaigns; require second approvals |
| Deepfake video | Convincing voice and visual traits | Out-of-band verification; video authentication checks |
| Cross-channel scams | Emails, chat, calls blended | Standardize escalation paths; limit public information |

For practical playbooks and simulation templates, consult a focused guide on LLM-enabled social engineering and enterprise scams.

Sector impacts: Finance, critical infrastructure, and defense

Sector leaders are confronting a new normal: scaled, multi-step fraud that targets core services and trust flows.

Financial services face layered exposure. Deepfake-enabled onboarding and synthetic identities strain compliance and push support teams to their limits.

Organizations must upgrade identity assurance and continuous authentication across services where access and data overlap. Anomaly detection should span payments, account creation, and customer support channels.

Critical infrastructure and supply chains

Attacks exploit vendor trust: a single compromise can cascade through networks and impact multiple organizations.

Examples include spoofed supplier changes and fake executive requests validated by voice or video. These patterns demand strict out-of-band verification and contractual security obligations for third parties.

| Sector | Primary impact | Recommended control |
|---|---|---|
| Financial services | Deepfakes, synthetic identities, large-scale scams | Stronger KYC; continuous auth; transaction monitoring |
| Critical infrastructure | Supply-chain compromise; cascading outages | Segmentation; supplier risk controls; shared telemetry |
| Defense & gov suppliers | Targeted theft and tooling for remote access | Harden remote management; vet vendor access; recovery tests |

“Reported theft volumes tied to nation-state actors show how strategic campaigns target high-value financial ecosystems.”

- Threats favor convincing narratives over novel exploits; process integrity and signal correlation are key defenses.

- Malware targeting remote management remains an enduring risk; monitor for misuse and unusual access patterns.

- Security leaders should prioritize segmentation, supplier controls, and recovery testing tuned to sector scenarios.

Detection and intelligence: Defenders’ AI versus attackers’ AI

Detection systems must blend content signals and behavioral telemetry to expose fluent, high-volume campaigns.

Safety filtering, model hardening, and adversarial testing

Model safety fallbacks and strict role controls reduce risky tool calls and limit escalation. GTIG recommends continuous hardening and telemetry on blocked prompts.

Apply adversarial testing to probes, prompt sequences, and tool invocation. That practice reveals gaps before attackers exploit them.
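Role controls on tool calls can be sketched as an allowlist check that runs before any tool is invoked on a model's behalf. The role names, tool names, and the three-way decision below are assumptions for illustration; they do not describe any specific vendor's agent framework.

```python
# Hypothetical roles and the tools each one may request.
ROLE_TOOLS = {
    "analyst": {"search_docs", "summarize"},
    "automation": {"search_docs", "summarize", "open_ticket", "send_email"},
}

# Tools that need a human sign-off even when the role is allowed to use them.
HIGH_RISK_TOOLS = {"execute_code", "send_email"}


def authorize_tool_call(role: str, tool: str, human_approved: bool = False) -> str:
    """Return "allow", "deny", or "needs_approval" for a requested tool call."""
    if tool not in ROLE_TOOLS.get(role, set()):
        return "deny"            # unknown role or tool outside the allowlist
    if tool in HIGH_RISK_TOOLS and not human_approved:
        return "needs_approval"  # permitted in principle, gated on a human
    return "allow"
```

Adversarial tests can then target this gate directly, for example by checking that no prompt sequence ever yields "allow" for a tool outside the caller's role, and every "deny" becomes a telemetry record.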

Behavioral analytics and anomaly detection across networks and media

Layered intelligence pairs content analysis with network signals to spot polished but abnormal behavior. TRM and IBM X-Force emphasize signatures that combine voice markers, JWT routing oddities, and device fingerprints.

- Use model-assisted triage to rank alerts and cut analyst fatigue.

- Correlate benign assistance artifacts with suspicious sequences to detect malware-adjacent activity.

- Share intelligence and align taxonomies to speed response to emerging threats.

Practical defenses: automated guardrails, continuous monitoring dashboards, and simulation exercises that validate end-to-end alerting.
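Alert triage of the kind described above can be approximated, for illustration, with a weighted sum over the signals attached to each alert. This is a fixed-weight stand-in for model-assisted ranking, not a model itself; the signal names and weights in `SIGNAL_WEIGHTS` are assumptions invented for the example.

```python
# Illustrative weights; a real deployment would learn or calibrate these.
SIGNAL_WEIGHTS = {
    "impossible_travel": 3.0,
    "payment_change_request": 3.0,
    "new_device": 2.0,
    "external_sender": 1.0,
    "urgent_language": 1.0,
}


def score_alert(alert: dict) -> float:
    """Sum the weights of the signals present on an alert; unknown signals score zero."""
    return sum(SIGNAL_WEIGHTS.get(signal, 0.0) for signal in alert["signals"])


def rank_alerts(alerts: list) -> list:
    """Return alerts ordered from highest to lowest combined score."""
    return sorted(alerts, key=score_alert, reverse=True)


alerts = [
    {"id": "A1", "signals": ["external_sender"]},
    {"id": "A2", "signals": ["external_sender", "urgent_language", "payment_change_request"]},
    {"id": "A3", "signals": ["new_device", "impossible_travel", "payment_change_request"]},
]
ranked_ids = [a["id"] for a in rank_alerts(alerts)]
```

The useful property is correlation: a polished email alone scores low, but the same email combined with a new device and a payment change rises to the top of the analyst queue.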

Vulnerabilities, tools, and techniques shaping current attacks

Many campaigns now pair public CVE research with rapid code tooling to shorten the path from discovery to access. Actors research WinRM flaws, IoT bugs, and EDR components, then translate findings into scripts that query event logs or adjust Active Directory.

Public CVEs remain central: models and automation help summarize exploit chains and rank vulnerabilities by likely impact. That focus lets attackers prioritize targets and move before defenders finish patching.

Tools for code conversion and encryption augmentation streamline repackaging of existing malware and complicate signature-based detection. Developers and defenders should watch how AES is added and which processes spawn unusual child tasks.

Recon leverages open-source person and org data to craft tailored pretexts and post‑access objectives. Scripting assistance often links reconnaissance to lateral steps—automating log queries, credential use, and bulk transfers.

Detection must track technique change: monitor telemetry for missing logs, unusual process trees, and sudden configuration edits. Cross-functional development of rapid patch maps and purple-team drills narrows exposure windows.
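Watching for unusual process trees often begins with a parent-child rule: some applications have little reason to launch a shell. The sketch below assumes process events arrive as dictionaries with `parent` and `child` keys, which is a simplification of what an EDR export would contain, and the `SUSPICIOUS_CHILDREN` pairs are common examples rather than a complete rule set.

```python
# Parent processes mapped to child processes that are rarely legitimate for them.
SUSPICIOUS_CHILDREN = {
    "winword.exe": {"powershell.exe", "cmd.exe", "wscript.exe"},
    "excel.exe": {"powershell.exe", "cmd.exe", "wscript.exe"},
    "outlook.exe": {"powershell.exe", "cmd.exe"},
}


def flag_process_events(events: list) -> list:
    """Return the events whose parent/child pair matches a suspicious combination."""
    flagged = []
    for event in events:
        parent = event["parent"].lower()
        child = event["child"].lower()
        if child in SUSPICIOUS_CHILDREN.get(parent, set()):
            flagged.append(event)
    return flagged


events = [
    {"host": "ws-01", "parent": "explorer.exe", "child": "winword.exe"},
    {"host": "ws-01", "parent": "WINWORD.EXE", "child": "powershell.exe"},
    {"host": "ws-02", "parent": "services.exe", "child": "svchost.exe"},
]
flagged = flag_process_events(events)
```

Because the rule keys on behavior rather than file hashes, repackaged or re-encrypted malware that still launches the same chain would be caught by the same check.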

| Focus | Observed use | Defender action |
|---|---|---|
| Public CVEs | Prioritization and exploit summaries | Integrate CVE feeds with asset inventories |
| Tools & scripting | Code conversion; AES addition to malware | Behavioral EDR rules; script signing |
| EDR evasion | Telemetry gaps; process tampering | Monitor child processes; log integrity checks |
| OSINT targeting | Personnel profiling; tailored phishing | Reduce public exposure; simulate campaigns |

“Speed matters: aligning CVE intelligence with fast remediation and purple-team practice closes the window attackers exploit.”
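Aligning CVE intelligence with remediation amounts to joining a vulnerability feed against an asset inventory and sorting by exploitability and exposure. The sketch below is a toy version of that join. The CVE identifiers are placeholders, the record fields are assumed, and the multipliers for active exploitation and internet exposure are arbitrary illustrative values, not an established scoring standard.

```python
def prioritize_patches(cve_feed: list, inventory: list) -> list:
    """Match CVEs to assets by product name and return (priority, cve_id, host), highest first."""
    findings = []
    for cve in cve_feed:
        for asset in inventory:
            if cve["product"] not in asset["software"]:
                continue
            priority = cve["cvss"]
            if cve["exploited"]:
                priority *= 2.0    # assumed boost for known exploitation
            if asset["internet_facing"]:
                priority *= 1.5    # assumed boost for exposed hosts
            findings.append((round(priority, 1), cve["id"], asset["host"]))
    return sorted(findings, reverse=True)


# Placeholder identifiers and products, not real advisories.
cve_feed = [
    {"id": "CVE-0000-0001", "product": "remote-mgmt-service", "cvss": 7.5, "exploited": True},
    {"id": "CVE-0000-0002", "product": "camera-firmware", "cvss": 9.8, "exploited": False},
]
inventory = [
    {"host": "gw-01", "software": {"remote-mgmt-service"}, "internet_facing": True},
    {"host": "cam-07", "software": {"camera-firmware"}, "internet_facing": False},
]
patch_order = prioritize_patches(cve_feed, inventory)
```

In this example the lower-severity flaw is patched first because it is both exploited and exposed, which mirrors how attackers themselves rank targets.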

Policy, regulation, and cross-sector collaboration

Cross-sector rules and shared playbooks offer a practical path to reduce fraud and media manipulation. Public bodies and industry groups now press for common standards that make risky model behavior auditable and accountable.

Risk management frameworks aligned to NIST and sector guidance

Organizations benefit from NIST-aligned risk frameworks that codify controls for model governance, data integrity, monitoring, and incident response.

Solutions should combine technical guardrails, human-in-the-loop approvals, and auditable decision pathways.
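An auditable decision pathway needs logs that are hard to alter quietly. One common pattern is a hash chain, where each entry commits to the one before it. The following is a minimal sketch using Python's standard `hashlib` and `json` modules; the entry fields (`actor`, `action`) are assumptions, and a real deployment would add timestamps, signing, and protected storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor


def _digest(body: dict) -> str:
    """Hash an entry body deterministically."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list, actor: str, action: str) -> None:
    """Append an entry that commits to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"actor": actor, "action": action, "prev": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify_chain(log: list) -> bool:
    """Recompute every hash and link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {"actor": entry["actor"], "action": entry["action"], "prev": entry["prev"]}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "svc-agent", "tool_call:search_docs")
append_entry(audit_log, "j.doe", "approved:send_email")
```

Editing any earlier entry after the fact changes its hash and invalidates every later link, so tampering is detectable as long as the latest hash is stored somewhere the editor cannot reach.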

Global cooperation to counter transnational threats

Global bodies—INTERPOL and UN forums—advocate harmonized regulation and shared alerts. FinCEN and sector reports stress coordinated responses to deepfake-enabled fraud.

“Harmonized standards and timely information sharing shorten windows of opportunity for cross-border campaigns.”

| Policy focus | Practical requirement | Benefit to company |
|---|---|---|
| Model governance | Provenance logs; usage audits | Faster incident triage; vendor accountability |
| Identity assurance | Minimum identity checks; anomaly detection | Lower onboarding fraud; safer services |
| Public education | Media literacy and awareness campaigns | Reduced success of social manipulation |

A balanced way forward preserves innovation while imposing clear accountability: regulators should require transparency for high-risk behaviors, fund joint exercises, and incentivize safe-by-design practices.

Operational playbook for organizations in the United States

Tactical routines—simple, rehearsed, and measurable—cut the window attackers have to exploit good prose and public data.

Harden email and identity layers; verify high-risk requests out-of-band

Prioritize layered defenses for emails and identity: phishing-resistant MFA, conditional access, and explicit out-of-band verification for wires, payroll, and vendor changes.

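Ensure SPF, DKIM, and DMARC records are published and that DMARC uses an enforcing policy (quarantine or reject), enable impersonation protection, and detonate links and files in a sandbox in real time.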
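A quick way to audit the email layer is to lint the DMARC TXT record a domain publishes. The sketch below only parses a record string that has already been retrieved; it performs no DNS lookup. The tags it checks (`v`, `p`, `rua`) are standard DMARC tags, while the function names and the wording of the findings are made up for this example.

```python
def parse_dmarc(record: str) -> dict:
    """Split a DMARC TXT record such as "v=DMARC1; p=reject" into a tag dictionary."""
    tags = {}
    for part in record.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            tags[key.strip().lower()] = value.strip()
    return tags


def dmarc_findings(record: str) -> list:
    """Return a list of human-readable weaknesses; an empty list means none were found."""
    tags = parse_dmarc(record)
    findings = []
    if tags.get("v") != "DMARC1":
        findings.append("missing or invalid v=DMARC1 tag")
    if tags.get("p", "").lower() not in {"quarantine", "reject"}:
        findings.append("policy is not enforcing (p should be quarantine or reject)")
    if "rua" not in tags:
        findings.append("no aggregate report address (rua)")
    return findings
```

For instance, `dmarc_findings("v=DMARC1; p=none")` reports a non-enforcing policy and a missing report address, which is a common state for domains that published DMARC but never moved past monitoring.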

Red team, purple team, and continuous security awareness training

Security teams should run frequent red and purple team exercises that simulate multilingual spear-phishing and deepfake-enabled pretexts.

Training must be continuous and context-rich—focus on urgency, authority, and emotional triggers rather than grammar alone.

Content governance: reduce oversharing in job posts and media

People are a key detection layer. Make it easy to report suspicious messages and reward early escalations that stop losses.

Limit public content: blur org charts, avoid badge images, and remove unnecessary tech-stack details from posts and social media.

“Measure outcomes: simulation failure rates, mean time to detect, and training cadence—then adjust quarterly.”

| Control | Expected outcome | Key metrics | Tools / owners |
|---|---|---|---|
| Phishing-resistant MFA & conditional access | Fewer credential takeovers; tighter access | Auth failures; blocked login rate | Identity team; conditional access tools |
| Red / purple team simulations | Realistic tests of people and systems | Simulation failure rate; MTTR | Red team; SOC; training program |
| Content governance & reporting | Less OSINT for attackers; faster escalation | Public post counts; reports submitted | HR; comms; security awareness tools |
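Two of the metrics named in this playbook, simulation failure rate and mean time to detect, are simple to compute once exercise results are recorded consistently. The sketch below assumes hypothetical record shapes (a `clicked` flag per simulated recipient, and `started` and `detected` timestamps per incident); adapt the field names to whatever the simulation or SOC tooling exports.

```python
from datetime import datetime


def simulation_failure_rate(results: list) -> float:
    """Fraction of simulated phishing recipients who clicked."""
    if not results:
        return 0.0
    return sum(1 for r in results if r["clicked"]) / len(results)


def mean_time_to_detect_minutes(incidents: list) -> float:
    """Average gap, in minutes, between incident start and detection."""
    if not incidents:
        return 0.0
    total_seconds = sum(
        (i["detected"] - i["started"]).total_seconds() for i in incidents
    )
    return total_seconds / len(incidents) / 60


results = [{"clicked": True}, {"clicked": False}, {"clicked": False}, {"clicked": True}]
incidents = [
    {"started": datetime(2025, 1, 1, 9, 0), "detected": datetime(2025, 1, 1, 9, 30)},
    {"started": datetime(2025, 1, 1, 10, 0), "detected": datetime(2025, 1, 1, 11, 30)},
]
failure_rate = simulation_failure_rate(results)
mttd = mean_time_to_detect_minutes(incidents)
```

Tracking both numbers per quarter makes the adjustment loop concrete: a falling failure rate with a flat detection time suggests training is working while monitoring is not.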

Conclusion

Across sectors, observed behavior shows that modest tooling gains translate to larger strategic risk. Research from GTIG, TRM, and IBM X-Force offers clear insights: actors use models to speed localization, polish, and campaign tempo. The practical result is higher-volume operations, not novel breakthroughs.

Defenders must shift to behavioral detection and intelligence-led controls. Attackers favor workflow acceleration; organizations should respond in kind by automating detection, triage, and response orchestration. Implement safeguards for tool access, permissions, and auditability now.

Practical way forward: break the chain early. Harden identity, email, and plugin controls; reduce the public information that feeds convincing pretexts; prioritize patching by exploitability; and watch for code transformation that hides malware. Move first, test often, and iterate defenses as the threat evolves.

FAQ

What does the report titled "When Hackers Use GPT: The Dark Side of AI" cover?

The report examines how generative models are being adopted across threat actor toolchains, from reconnaissance and payload development to delivery and post-compromise actions. It synthesizes findings from threat intelligence sources — including GTIG, TRM, and IBM X-Force — and maps where models accelerate campaigns, such as phishing, social engineering, malware development, and automation of repetitive tasks.

What are the key takeaways from recent threat intelligence about generative model–assisted attacks?

Recent reporting shows increased use of models for crafting convincing phishing emails, localized messaging, persona creation, and tooling assistance. While attackers have scaled capabilities, these techniques have not yet produced a single “breakthrough” capability that replaces skilled operators; instead, models amplify efficiency, reach, and speed across reconnaissance, weaponization, and delivery stages.

How are government-backed threat actors leveraging generative models differently than criminal groups?

Nation-state actors focus on research, high-fidelity reconnaissance, tailored payload development, and information operations that emphasize persona building, localization, and amplification. Patterns vary: Iran and China show heavier adoption for tooling and targeting; Russia has more limited use to date; North Korea pursues unique financial goals through automation and fraud. All exploit open-source intelligence, social media, and synthetic media when useful.

Where in the attack lifecycle do models provide the greatest advantage to adversaries?

Models accelerate multiple stages: reconnaissance (target profiling using OSINT), weaponization (scripting, CVE analysis, and payload refinement), delivery (high-quality phishing and messaging), and actions on objectives (automation of lateral movement, evasive scripting, and data exfiltration). The biggest measurable gains are speed, scale, and reduced operational friction.

What is the role of jailbreaks and prompt injection in adversary operations?

Jailbreaks and prompt injection attempts aim to cause models to produce disallowed outputs or reveal sensitive prompts and system instructions. Attackers test model boundaries to extract code snippets, exploit templates, or steps that aid intrusion, and they may combine these techniques with malware and C2 tooling to operationalize findings.

How should defenders prioritize detections against model-enhanced campaigns?

Prioritize behavioral analytics and anomaly detection across email, identity, and network layers. Harden model deployment with safety filtering and adversarial testing. Focus on high-risk vectors: credential theft, spear phishing, supply-chain signals, and rapid tooling that targets known CVEs. Combine detection with threat intelligence to spot emerging playbooks and infrastructure.

What practical mitigations should organizations implement immediately?

Harden email and identity controls (MFA, DMARC, DKIM, strong SSO policies), verify high-risk requests out-of-band, and limit exposure from public job posts and corporate content. Conduct red-team and purple-team exercises, continuous security awareness training focused on emotional triggers and sophisticated pretexts, and adopt content governance to reduce oversharing.

How do deepfakes and synthetic media factor into social engineering threats?

Synthetic voice and video increase believability and enable impersonation at scale. Threat actors use localized scripts and flawless prose to bypass intuition-based defenses. Defenders must expand training to recognize cadence and context cues, deploy media verification tools, and require multi-factor validation for sensitive requests.

What sectors are most at risk from model-enabled attacks?

Financial services face immediate pressure from fraud, synthetic identities, and compliance risks. Critical infrastructure and supply chains face systemic risk from targeted disruption and espionage. Defense and government entities are also high-value targets due to intelligence and operational impact.

How should policy makers and industry collaborate to reduce transnational threats?

Align risk management frameworks with NIST and sector guidance, share threat intelligence across public-private partnerships, and invest in global cooperation to trace infrastructure and disrupt laundering and monetization chains. Regulation should balance innovation with mandatory disclosure, resilience standards, and cross-border incident response playbooks.

Are autonomous, model-driven attacks a near-term existential threat?

Autonomous attacks are a plausible future risk but currently sit in the Horizon tier. Today’s greatest harms arise from scaled social engineering, enhanced fraud, and tooling that augments human operators. Organizations should prepare for the later maturity tiers by strengthening detection, governance, and operational resilience now.

What role do threat intelligence and red-teaming play against these threats?

Threat intelligence identifies emerging TTPs, infrastructure, and actor profiles; red and purple teams test controls against realistic, model-enhanced scenarios. Together they validate defenses, refine playbooks, and inform training that targets the nuanced social-engineering and technical tactics used by modern adversaries.

Which defensive technologies benefit most from model-aware enhancements?

Behavioral analysis platforms, email and identity protection, EDR/XDR solutions, and media verification tools improve most when augmented with model-aware features — such as adversarial testing, anomaly scoring for messaging cadence, and automated correlation of OSINT signals to detect targeting and toolchain reuse.

How can organizations balance innovation with the risk of enabling misuse?

Implement governance around model use, restrict sensitive prompt access, apply safety filters, and require adversarial evaluation before deployment. Encourage responsible research partnerships with vendors like OpenAI, Anthropic, and Google to harden models while enabling productive uses such as automation for coding, triage, and threat analysis.