In a world drowning in data, one mathematical innovation from 1901 remains strikingly relevant. Karl Pearson’s method for simplifying complex information, later developed further by Harold Hotelling in the 1930s and now known as principal component analysis (PCA), supports applications ranging from facial recognition to financial market analysis. The technique re-expresses large datasets in a small number of informative dimensions.

Modern professionals face a paradox: more data often means less clarity. Strategic dimensionality reduction cuts through the noise. By identifying orthogonal directions of maximum variance, this approach preserves critical patterns while discarding redundant details. The result? Clearer insights from messy, high-dimensional information.

We’ll explore how this method:

- Transforms unwieldy datasets into actionable intelligence

- Enhances machine learning efficiency

- Reveals hidden relationships in business metrics

Key Takeaways

- Reduces computational demands while preserving critical patterns

- Identifies the most informative directions in complex datasets

- Enhances visualization and model performance across industries

- Combines century-old math with modern machine learning needs

- Offers practical implementations through Python and other tools

From healthcare diagnostics to financial forecasting, this approach has become the cornerstone of intelligent data interpretation. Let’s uncover how to harness its power for smarter decision-making.

Transforming Data Overload into Strategic Clarity

In an era of information explosion, clarity becomes the ultimate competitive edge. Modern organizations wrestle with datasets containing hundreds of variables – sales figures, customer behaviors, operational metrics – where critical patterns often drown in numerical noise. A century-old mathematical approach provides the solution, reshaping how professionals extract meaning from chaos.

This technique works by constructing new composite variables that act as strategic summaries of original data points. These synthetic measures capture the most significant fluctuations in datasets while eliminating redundant details. The first synthetic variable accounts for the largest share of variability, with each subsequent one capturing remaining patterns in descending order of importance.

Consider these practical advantages:

| Aspect | Traditional Approach | Modern Solution |
|---|---|---|
| Variables Analyzed | 100+ columns | 3-5 key measures |
| Insight Detection | Buried in spreadsheets | Visualized in 2D plots |
| Decision Speed | Weeks of analysis | Real-time pattern spotting |

Financial institutions use this method to identify market trends across dozens of economic indicators. Healthcare researchers apply it to streamline patient data from multiple diagnostic tests. The approach proves particularly effective when handling correlated measurements – like housing prices and square footage – by merging related signals into unified indicators.
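To make the idea of merging correlated measurements concrete, here is a minimal NumPy sketch. The square-footage and price figures are synthetic and purely hypothetical; the point is only that two strongly correlated columns can be summarized by one composite indicator.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: square footage and a price that mostly tracks it.
sqft = rng.normal(1800, 400, size=500)
price = 150 * sqft + rng.normal(0, 20_000, size=500)
X = np.column_stack([sqft, price])

# Standardize so neither unit (square feet vs. dollars) dominates.
Z = (X - X.mean(axis=0)) / X.std(axis=0)

# Eigen-decompose the covariance matrix of the standardized data.
eigenvalues, eigenvectors = np.linalg.eigh(np.cov(Z, rowvar=False))
order = np.argsort(eigenvalues)[::-1]          # largest eigenvalue first
eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]

share = eigenvalues / eigenvalues.sum()        # variance share per component
combined_indicator = Z @ eigenvectors[:, 0]    # one column standing in for two

print("variance share per component:", share.round(3))
```

With data this strongly correlated, the first component carries nearly all of the variance, which is what justifies replacing the two columns with a single indicator.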

By converting overwhelming datasets into their most expressive forms, professionals gain actionable intelligence without sacrificing critical relationships.

Understanding the Dimensionality Reduction Process

Navigating complex datasets requires more than raw computing power—it demands strategic simplification. Modern analytical methods face a critical hurdle: as variables multiply, patterns become harder to detect. This is where intelligent compression shines, transforming tangled data into clear signals.

Benefits of Reducing Dimensions

Cutting variables creates leaner datasets that reveal hidden relationships. Consider these contrasts:

| Data Complexity Challenge | Reduction Strategy |
|---|---|
| 100+ overlapping metrics | 3-5 core indicators |
| Foggy correlations | Crisp 2D/3D visualizations |
| Slow model training | Faster pattern recognition |

Financial analysts use this approach to track 30+ market indicators through three key trends. Healthcare teams compress patient records into vital health markers. The secret lies in preserving what matters most.

“The art of data science isn’t collecting more points—it’s finding the right ones.”

Maintaining Key Information in Data

Effective simplification isn’t about loss—it’s about smart retention. By focusing on directions of maximum variance, the method keeps crucial patterns intact. Redundant features get merged, like combining housing size and price into a single value metric.

Machine learning models trained on dimensionality-reduced data can outperform those trained on the raw features. Why? The discarded low-variance directions often carry mostly noise, so the model sees a cleaner signal. Visualization tools can then make sense of what spreadsheets obscure.

Teams achieve this balance through calculated trade-offs. Remove 80% of dimensions while keeping 95% of the variance? For many applications, giving up 5% of the information for a dataset one-fifth the size is a worthwhile trade. The result: faster decisions without sacrificing depth.

The Step-by-Step Process of PCA

The journey from overwhelming datasets to actionable insights requires methodical preparation. Professionals follow a structured five-stage approach: standardize the data, compute the covariance matrix, decompose it into eigenvectors and eigenvalues, choose which components to keep, and project the data onto them. This section focuses on the crucial first phases that lay the groundwork for effective pattern discovery.

Standardization and Data Centering

Raw numbers mean little when scales vary wildly. A salary figure in thousands and an age value in double digits can’t speak the same language. Data centering creates common ground by shifting all values around a zero midpoint. This involves subtracting each variable’s average from every observation.

Standardization takes this further by adjusting for measurement differences. Dividing centered values by their standard deviation puts all variables on equal footing. This prevents naturally larger numbers from dominating the analysis.

| Data Type | Before Standardization | After Standardization |
|---|---|---|
| Income | $45,000 – $250,000 | -1.2 to 2.8 |
| Test Scores | 60-100 points | -0.5 to 1.5 |
| Temperature | 32-212°F | -1.8 to 1.3 |
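Centering and standardization each take one line in NumPy. The sketch below uses a tiny, made-up table of income, test score, and temperature readings (hypothetical values, not the ones behind the ranges above) and divides by the population standard deviation; using the sample form (`ddof=1`) is an equally common choice.

```python
import numpy as np

# Hypothetical observations: columns are income (USD), test score, temperature (°F).
X = np.array([
    [45_000.0, 60.0, 32.0],
    [80_000.0, 75.0, 68.0],
    [120_000.0, 88.0, 98.6],
    [250_000.0, 100.0, 212.0],
])

# Centering: subtract each column's mean so every variable averages to zero.
centered = X - X.mean(axis=0)

# Standardization: divide by each column's standard deviation (unit variance).
Z = centered / X.std(axis=0)

print(Z.round(2))
```

After this step every column has mean 0 and standard deviation 1, so no variable dominates simply because of its units.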

Computing the Covariance Matrix

With standardized data, relationships between variables become measurable. The covariance matrix acts as a relationship map, showing how pairs of variables move together; because every variable now has unit variance, its entries are the pairwise correlations. Positive values indicate that two variables tend to rise together, while negative values indicate that one tends to fall as the other rises.

This symmetric grid helps identify redundant metrics. Strong covariance between housing size and price suggests one could represent both. Analysts use these insights to streamline datasets before deeper analysis, preserving information while reducing complexity.

Financial teams might discover stock prices and trading volumes share high covariance. Healthcare researchers could find blood pressure and cholesterol levels move in tandem. These discoveries guide smarter data compression without losing critical insights.
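As a rough illustration of the relationship map described above, the following sketch builds a covariance matrix from its definition and compares it with NumPy's built-in `np.cov`. The three columns are synthetic: `b` is constructed to follow `a`, and `c` to move against it.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 200

# Hypothetical variables: b follows a, c moves against a.
a = rng.normal(size=n)
b = 0.8 * a + 0.2 * rng.normal(size=n)
c = -0.6 * a + 0.4 * rng.normal(size=n)
X = np.column_stack([a, b, c])

# Standardize with the sample standard deviation (ddof=1).
Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

# Covariance from the definition: Z^T Z / (n - 1) for centered data.
cov = Z.T @ Z / (n - 1)

print(cov.round(2))
print("matches np.cov:", np.allclose(cov, np.cov(Z, rowvar=False)))
```

The matrix is symmetric, its diagonal is all ones because the data were standardized, and the signs of the off-diagonal entries show which pairs move together or apart.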

Mathematical Foundations Behind PCA

Patterns hidden within complex datasets reveal themselves through precise mathematical relationships. At the heart of this process lies a powerful framework from linear algebra that transforms tangled variables into clear insights. This mathematical framework operates on two fundamental concepts: eigenvectors and eigenvalues.

Eigen Decomposition and Key Equations

The magic begins with the covariance matrix, a grid revealing how variables interact. Its eigenvectors act as directional guides, showing where the data spreads most, and the paired eigenvalues measure how much variance lies along each direction. The equation Ax = λx captures this relationship: when the covariance matrix A acts on an eigenvector x, it only scales x by the eigenvalue λ and leaves its direction unchanged.

| Aspect | Eigenvectors | Eigenvalues |
|---|---|---|
| Direction | Show maximum data spread | Measure spread intensity |
| Impact | Define transformation axes | Determine axis importance |
| Data Interpretation | Orthogonal (uncorrelated) directions | Sum equals total variance |
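The eigenvalue equation and the properties in the table can be checked numerically. This sketch uses a small, made-up symmetric matrix standing in for a covariance matrix, and `np.linalg.eigh`, NumPy's routine for symmetric matrices.

```python
import numpy as np

# A small symmetric matrix standing in for a covariance matrix (illustrative values).
A = np.array([
    [1.0, 0.8, 0.3],
    [0.8, 1.0, 0.5],
    [0.3, 0.5, 1.0],
])

# eigh returns eigenvalues in ascending order and eigenvectors as columns.
eigenvalues, eigenvectors = np.linalg.eigh(A)

# Check that A x equals lambda x for every eigenpair.
pair_ok = [np.allclose(A @ x, lam * x) for lam, x in zip(eigenvalues, eigenvectors.T)]
print("eigen equation holds:", all(pair_ok))

# The eigenvalues sum to the trace of A, which is the total variance.
print("sum of eigenvalues:", eigenvalues.sum(), "trace:", np.trace(A))

# Eigenvectors of a symmetric matrix are orthonormal.
print("orthonormal:", np.allclose(eigenvectors.T @ eigenvectors, np.eye(3)))
```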

Interpreting Variance and Eigenvectors

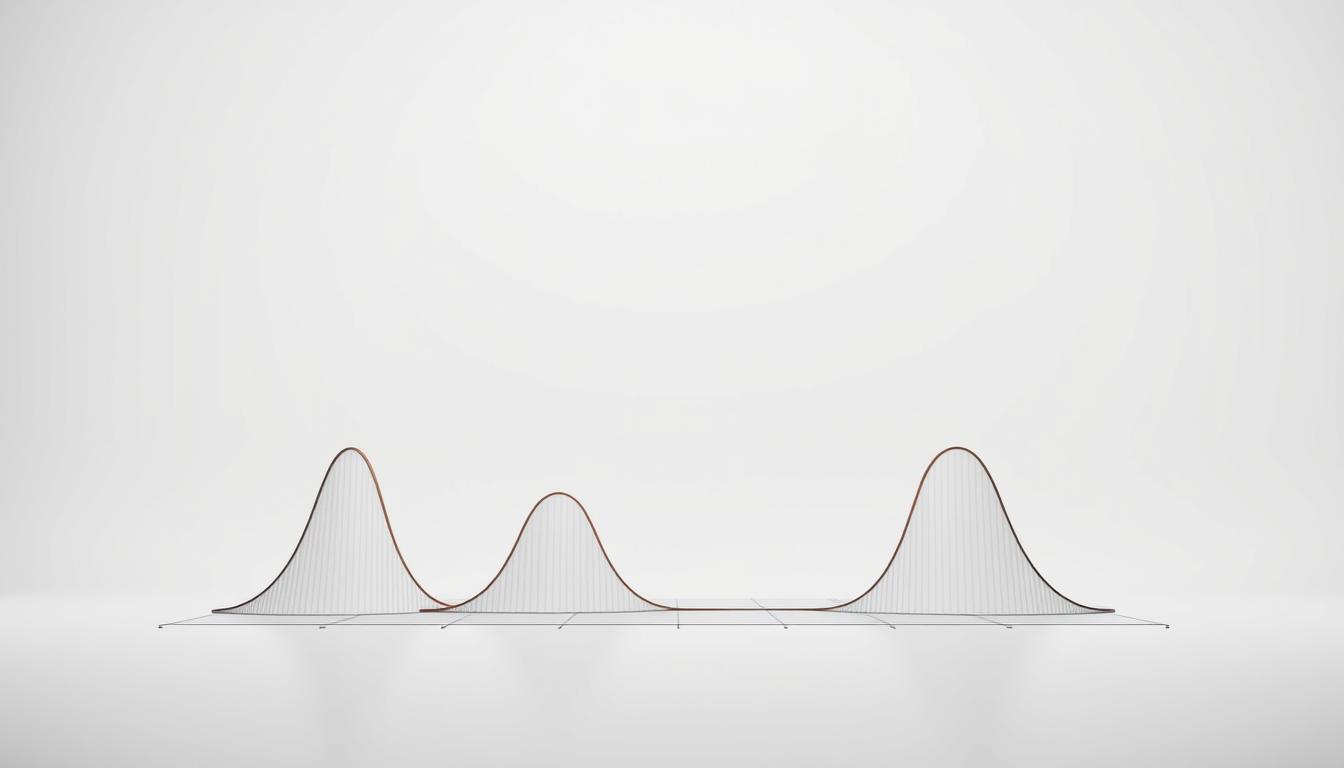

Larger eigenvalues signal more important patterns: a component capturing 40% of the variance matters more than one holding 5%. Analysts sort the eigenvalues in descending order, creating a priority list for data compression. When variables are strongly correlated, the first few components often account for most of the variance, though the exact share depends on the dataset.

Because the eigenvectors are orthogonal, the resulting components are uncorrelated, so each new direction captures variance the earlier ones did not. This mathematical elegance allows professionals to simplify data without losing crucial relationships. The same principles underlie classic facial recognition algorithms and analyses of stock trends, which shows how widely they apply in data science.
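The ranking step can be sketched in a few lines. The dataset here is synthetic (five columns generated from two hidden factors plus noise, an assumption made only for illustration); the code sorts the eigenvalues, converts them to variance shares, and confirms that the component scores are uncorrelated.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical dataset: 300 rows, 5 columns driven by 2 underlying factors plus noise.
factors = rng.normal(size=(300, 2))
mixing = rng.normal(size=(2, 5))
X = factors @ mixing + 0.1 * rng.normal(size=(300, 5))

Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
eigenvalues, eigenvectors = np.linalg.eigh(np.cov(Z, rowvar=False))

# Sort largest-first so component 1 is the direction of greatest variance.
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

explained_ratio = eigenvalues / eigenvalues.sum()
print("explained variance ratio:", explained_ratio.round(3))

# Orthogonal directions give uncorrelated scores: the covariance of the
# projected data is diagonal, with the eigenvalues on the diagonal.
scores = Z @ eigenvectors
score_cov = np.cov(scores, rowvar=False)
print("scores uncorrelated:", np.allclose(score_cov, np.diag(eigenvalues)))
```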

Strategic Compression: Choosing What Matters Most

Modern data mastery requires knowing what to keep – and what to leave behind. Analysts face critical choices when transforming complex datasets into streamlined insights. This phase determines whether models will drown in noise or surface actionable patterns.

Feature Selection and Data Projection

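Selecting which components to keep resembles choosing lenses for a microscope. Practitioners balance detail against simplicity. Note that each component is a new composite of all the original features rather than a subset of them. In datasets with strongly correlated variables, a handful of components can capture most of the variance while simplifying computation.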

| Selection Criteria | Technical Impact | Business Value |
|---|---|---|
| Variance Threshold | Preserves data spread | Maintains trend visibility |
| Resource Limits | Reduces processing time | Can lower compute and storage costs |
| Business Goals | Aligns with KPIs | Accelerates decision cycles |
| Data Integrity | Minimizes distortion | Ensures reliable insights |

The transformation matrix acts as a bridge between raw numbers and strategic insights. By multiplying standardized data with selected eigenvectors, analysts create compact representations. This mathematical reorientation preserves relationships while eliminating redundant dimensions.
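The projection itself is a single matrix product. In this sketch the data are synthetic and the choice of `k = 3` is an assumption made for illustration; `W` plays the role of the transformation matrix, and the reconstruction step shows how much variance the dropped components held.

```python
import numpy as np

rng = np.random.default_rng(3)

# Hypothetical data: 100 observations of 6 correlated features.
X = rng.normal(size=(100, 3)) @ rng.normal(size=(3, 6)) + 0.05 * rng.normal(size=(100, 6))
Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

eigenvalues, eigenvectors = np.linalg.eigh(np.cov(Z, rowvar=False))
order = np.argsort(eigenvalues)[::-1]

k = 3                                # number of components kept (an assumption here)
W = eigenvectors[:, order[:k]]       # transformation matrix, shape (6, k)

projected = Z @ W                    # compact representation, shape (100, k)
reconstructed = projected @ W.T      # mapped back into the original 6-feature space

# Fraction of total variance lost by dropping the remaining components.
lost = np.sum((Z - reconstructed) ** 2) / np.sum(Z ** 2)
print(projected.shape, round(float(lost), 4))
```

The lost fraction equals the sum of the discarded eigenvalues divided by the sum of all eigenvalues, which is why eigenvalues are a reliable guide to what can be dropped.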

Financial teams use these compressed views to track market movements across 50+ indicators through three composite metrics. Healthcare systems monitor patient health via four vital signatures instead of 30+ test results. The secret lies in keeping the signal, cutting the noise.

Effective projection shrinks the data while sharpening focus. Models trained on these streamlined datasets can train faster and sometimes match the accuracy of models using the full feature set, although the gain depends on the data and the model. The result? Decisions grounded in essence, not excess.

Applications in Machine Learning and Data Analysis

In the age of information abundance, extracting clarity from chaos defines success. Strategic simplification techniques bridge the gap between raw numbers and actionable insights across industries. These methods transform unwieldy datasets into tools for discovery.

Enhancing Data Visualization

Complex datasets become approachable through dimensional compression. By condensing hundreds of features into 2-3 core elements, analysts create intuitive plots that reveal hidden clusters. A single scatter plot can now show relationships previously buried in spreadsheets.

This visual clarity helps teams spot trends faster. Financial analysts track market shifts through simplified dashboards. Healthcare researchers identify patient subgroups using color-coded charts. The technique turns abstract numbers into stories anyone can understand.
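As a sketch of how plot-ready coordinates can be produced, the example below projects two synthetic groups of 10-dimensional points onto their first two principal directions. It uses the singular value decomposition of the centered data, an equivalent route to the covariance eigenvectors, and it only centers the data because all the hypothetical features share one scale. Drawing the scatter plot is left to whichever plotting tool is at hand.

```python
import numpy as np

rng = np.random.default_rng(5)

# Hypothetical data: two groups of 50 points in 10 dimensions, offset from each other.
group_a = rng.normal(0.0, 1.0, size=(50, 10))
group_b = rng.normal(5.0, 1.0, size=(50, 10))
X = np.vstack([group_a, group_b])
labels = np.array([0] * 50 + [1] * 50)

# SVD of the centered data: rows of Vt are the principal directions,
# already ordered from largest to smallest singular value.
Xc = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(Xc, full_matrices=False)

coords = Xc @ Vt[:2].T      # (100, 2) array of x/y coordinates for a scatter plot

# Distance between the two group centers along the first axis.
gap = abs(coords[labels == 0, 0].mean() - coords[labels == 1, 0].mean())
print(coords.shape, round(float(gap), 2))
```

Coloring the points in `coords` by `labels` would show the two groups as separate clusters along the horizontal axis.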

Optimizing Machine Learning Workflows

Smart preprocessing can transform model development. Removing redundant features can cut training time substantially, since the cost of most algorithms grows with the number of input features. Algorithms trained on streamlined data sometimes achieve better accuracy with less risk of overfitting.

When handling measurements like sensor readings or survey responses, the method merges highly correlated signals into unified indicators. This approach preserves critical patterns while eliminating noise – a game-changer for predictive analytics.

From fraud detection to customer segmentation, these applications prove that less data often means more insight. The future belongs to those who master strategic simplification.

FAQ

How does dimensionality reduction improve machine learning models?

By reducing the number of features, it minimizes noise and redundancy in datasets. This streamlines computational efficiency while preserving patterns, enabling algorithms to train faster and perform better with less risk of overfitting.

Why is standardization critical before applying PCA?

Features measured on different scales distort the covariance matrix. Centering data (mean zero) and scaling (unit variance) ensure each variable contributes equally, allowing eigenvectors to capture true relationships rather than measurement biases.
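A quick experiment shows the distortion. The salary and age columns below are synthetic and generated independently, so neither is inherently more informative; the helper `top_component` is a hypothetical name introduced for this sketch.

```python
import numpy as np

rng = np.random.default_rng(4)

# Hypothetical, independently generated features on very different scales.
salary = rng.normal(60_000, 15_000, size=1_000)   # dollars
age = rng.normal(40, 10, size=1_000)              # years
X = np.column_stack([salary, age])

def top_component(data):
    """Return the covariance eigenvector with the largest eigenvalue."""
    eigenvalues, eigenvectors = np.linalg.eigh(np.cov(data, rowvar=False))
    return eigenvectors[:, np.argmax(eigenvalues)]

raw_direction = top_component(X)

Z = (X - X.mean(axis=0)) / X.std(axis=0)
scaled_direction = top_component(Z)

print("raw weights:   ", np.abs(raw_direction).round(3))     # almost entirely salary
print("scaled weights:", np.abs(scaled_direction).round(3))  # equal weight on both
```

On the raw data the first component points almost exactly along the salary axis, purely because dollars produce bigger numbers than years. After standardization both variables carry equal weight.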

What role do eigenvalues play in interpreting results?

Eigenvalues quantify the variance explained by each principal component. Larger values indicate directions where data spreads most, guiding decisions on how many components to retain without losing significant information.

Can PCA handle non-linear relationships in data?

Traditional PCA focuses on linear correlations. For non-linear patterns, techniques like Kernel PCA transform data into higher-dimensional spaces where linear separation becomes feasible, though this adds computational complexity.
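For readers curious what Kernel PCA involves, here is a minimal sketch using an RBF (Gaussian) kernel on two synthetic concentric rings. The function name `rbf_kernel_pca`, the ring data, and the value of `gamma` are all assumptions made for illustration; how well the rings separate in the output depends on `gamma`, which normally needs tuning.

```python
import numpy as np

def rbf_kernel_pca(X, gamma, n_components):
    """Project X onto the leading components of an RBF-kernel PCA."""
    # Pairwise squared Euclidean distances, then the RBF kernel matrix.
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2 * X @ X.T
    K = np.exp(-gamma * sq_dists)

    # Center the kernel matrix: the feature-space analogue of subtracting the mean.
    n = K.shape[0]
    ones = np.full((n, n), 1.0 / n)
    K_centered = K - ones @ K - K @ ones + ones @ K @ ones

    # Eigen-decompose and keep the directions with the largest eigenvalues.
    eigenvalues, eigenvectors = np.linalg.eigh(K_centered)
    top = np.argsort(eigenvalues)[::-1][:n_components]

    # Scaling each eigenvector by sqrt(eigenvalue) gives the projected coordinates.
    return eigenvectors[:, top] * np.sqrt(np.maximum(eigenvalues[top], 0.0))

# Hypothetical data: two noisy concentric rings, which no straight line can split.
rng = np.random.default_rng(6)
angles = rng.uniform(0, 2 * np.pi, size=200)
radii = np.repeat([1.0, 3.0], 100)
X = np.column_stack([radii * np.cos(angles), radii * np.sin(angles)])
X += 0.05 * rng.normal(size=X.shape)

Y = rbf_kernel_pca(X, gamma=0.5, n_components=2)
print(Y.shape)
```

The extra cost mentioned above is visible in the code: the kernel matrix has one row and one column per observation, so memory and computation grow with the square of the dataset size or worse.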

How does PCA enhance data visualization?

By projecting high-dimensional data onto 2D or 3D plots using the top components, clusters or trends become visible. This simplifies exploratory analysis, helping identify outliers or groupings that inform strategic decisions.

When should you avoid using PCA?

If original feature interpretability is crucial—like in medical or financial contexts—or when variables are already uncorrelated. PCA prioritizes variance, not domain relevance, which might obscure critical insights.

What’s the connection between eigenvectors and principal components?

The eigenvectors of the covariance matrix define the directions of maximum variance in the data. Each principal component lies along one of these directions, and the components are ordered by their corresponding eigenvalues, from the largest share of variance to the smallest.

How do you determine the optimal number of components?

Scree plots or cumulative variance thresholds (e.g., 95%) are common strategies. Balancing model simplicity with retained information ensures workflows remain efficient without sacrificing predictive accuracy.
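The cumulative variance rule is easy to sketch. The helper name `components_for_threshold` and the eigenvalues below are hypothetical, chosen only to show the mechanics.

```python
import numpy as np

def components_for_threshold(eigenvalues, threshold=0.95):
    """Smallest number of components whose cumulative variance share reaches threshold."""
    ordered = np.sort(np.asarray(eigenvalues, dtype=float))[::-1]
    cumulative = np.cumsum(ordered) / ordered.sum()
    # searchsorted finds the first position where cumulative >= threshold.
    count = int(np.searchsorted(cumulative, threshold)) + 1
    return min(count, ordered.size)

# Illustrative eigenvalues (not from a real dataset).
eigenvalues = [4.0, 2.5, 1.5, 1.0, 0.6, 0.25, 0.15]
k = components_for_threshold(eigenvalues, threshold=0.95)
print(k)  # cumulative shares: 0.40, 0.65, 0.80, 0.90, 0.96, ... so k is 5
```

A scree plot shows the same information graphically: plot the sorted eigenvalues and look for the point where the curve flattens.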

Can PCA be applied to image processing tasks?

Yes. It compresses pixel data by identifying patterns in brightness and contrast. Applications include facial recognition and noise reduction, though deep learning methods now often supplement or replace it for complex tasks.

Does PCA guarantee improved model performance?

Not always. While it reduces dimensionality, aggressive truncation may discard meaningful signals. Testing with and without dimensionality reduction helps assess its impact on specific datasets and objectives.