Did you know 83% of predictive models in healthcare and finance rely on a foundational algorithm for binary decisions? This unsung hero powers everything from fraud detection to medical diagnoses—yet most professionals only scratch its surface.

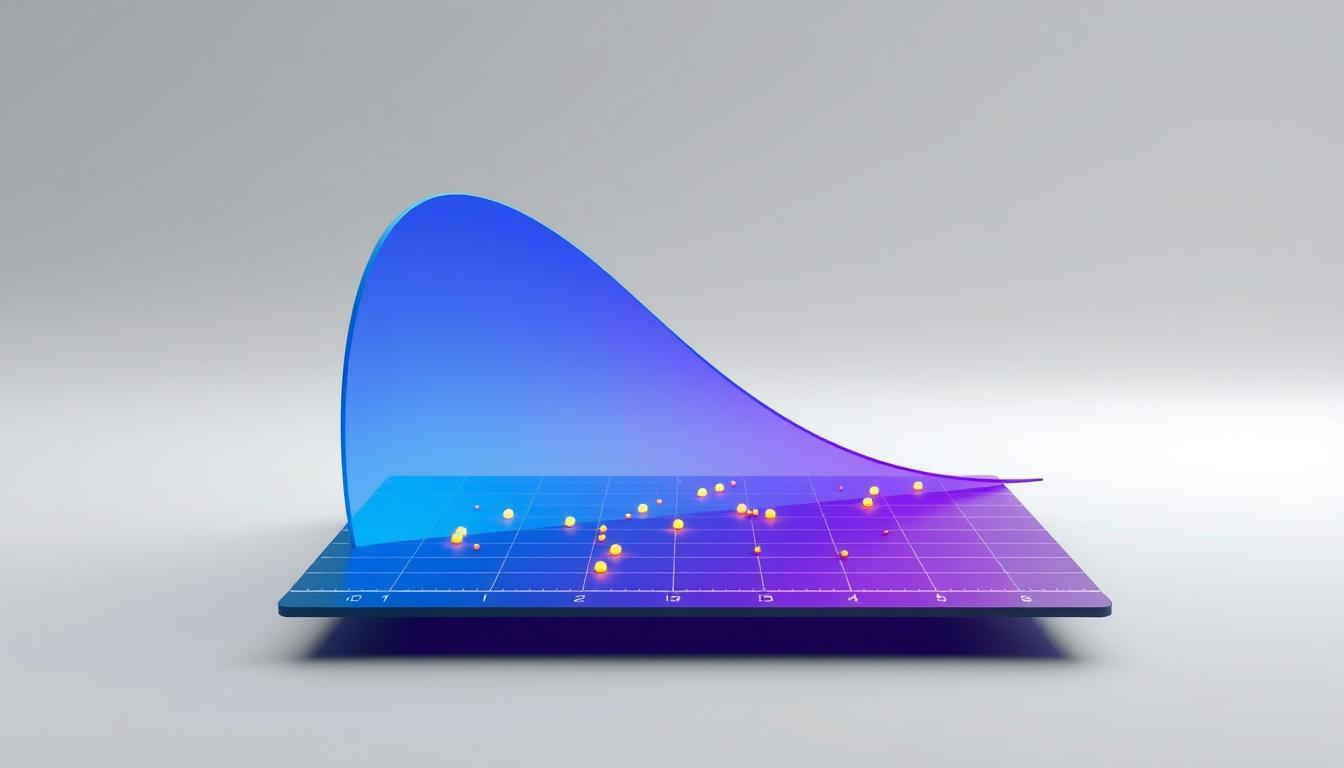

At its core, this classification method transforms linear relationships into probability scores. Unlike traditional approaches that predict numerical values, it answers yes/no questions with calculated certainty. The secret lies in its s-shaped curve, which converts raw data into actionable insights.

Why does this matter? Modern industries demand solutions that balance simplicity with precision. By mastering both the theory and implementation of this technique, professionals gain a versatile tool for countless scenarios. From email filtering to credit scoring, its applications redefine decision-making processes.

This guide demystifies the algorithm’s inner workings through three critical lenses:

- The mathematical magic behind probability conversion

- Practical coding strategies for real-world deployment

- Optimization techniques that bridge theory and practice

Key Takeaways

- Converts linear outputs into probabilities using advanced mathematics

- Essential for binary classification tasks across industries

- Sigmoid function acts as the probability translator

- Combines theoretical depth with coding practicality

- Serves as foundation for more complex algorithms

- Requires simultaneous mastery of concepts and implementation

Introduction to Logistic Regression and Its Mathematical Foundations

Behind every ‘yes/no’ decision in AI lies a mathematical engine that converts data into confident choices. This method excels where outcomes have only two possibilities—approve or deny, positive or negative, success or failure. Unlike standard approaches that predict exact values, it calculates likelihood percentages between 0% and 100%.

The secret weapon? A unique s-shaped curve called the sigmoid function. This mathematical tool takes any input number and squeezes it into a 0-1 range. When the output crosses 50%, the model shifts its prediction from one category to the other with calculated certainty.

Consider how this compares to traditional approaches:

| Feature | Linear Method | Logistic Approach |
|---|---|---|
| Output Type | Continuous values | Probability scores |
| Function Used | Straight line | S-shaped curve |
| Outcome Interpretation | Direct relationship | Threshold-based decision |
| Common Use Cases | Sales forecasting | Risk assessment, classification |

This dual nature makes the technique invaluable across sectors. Banks use it to flag suspicious transactions. Healthcare systems employ it to predict patient outcomes. Marketing teams leverage it for customer conversion predictions.

The true power emerges from its interpretability. Each variable’s impact appears as adjustable weights in the equation—a feature lacking in more complex machine learning models. Professionals can literally see how changing an input value affects the final probability score.

Fundamentals of Logistic Regression

Behind every successful binary decision model lies a framework of critical assumptions. These rules govern how predictors interact with outcomes, transforming raw numbers into actionable insights. Professionals who master these principles unlock consistent results across applications like risk assessment and medical diagnosis.

Core Concepts and Assumptions

The model demands independent observations – each data point must offer unique information. Imagine analyzing loan applications: duplicate records or related borrowers would violate this requirement.

Binary outcomes form the heartbeat of this approach. The dependent variable can only have two states, like “approved” or “denied.” This constraint shapes data collection strategies and validation processes.

The model assumes a linear relationship between the independent variables and the log-odds of the outcome. Credit scoring models illustrate this: each additional unit of income shifts the log-odds of approval by a fixed amount, while higher debt shifts it in the opposite direction.

Outliers act as silent saboteurs. A single extreme value in medical test results could skew predictions for an entire patient group. Robust data cleaning becomes essential.

Key Terminologies and Definitions

Odds represent success-to-failure ratios. In marketing campaigns, 3:1 odds mean three conversions per non-conversion.

Log-odds (logits) linearize probability relationships. This transformation allows standard regression techniques to handle binary outcomes effectively.

Maximum likelihood estimation identifies the coefficients that make the observed outcomes most probable under the model. It’s the engine powering parameter optimization.

Sample size requirements ensure stable estimates. Models analyzing rare events often need thousands of observations so that enough positive cases are present, a key consideration for fraud detection systems.

Mathematical Derivation of the Logistic Regression Model

The journey from linear predictors to probability scores is paved with elegant mathematical transformations. These steps convert raw numerical inputs into bounded values that guide critical decisions in machine learning systems.

Derivation of the Logistic Equation

Start with the odds: success probability divided by failure probability, p/(1-p). Taking the natural logarithm of the odds gives the logit function, the bridge between probabilities and linear predictors. Setting the logit equal to a linear combination of the inputs, w·X + b, lets the model be fitted with regression-style optimization.

Algebraic rearrangement reveals the final probability equation: $p(X) = \frac{1}{1 + e^{-(w \cdot X + b)}}$. This formula maps any real-number input to the 0-1 range, creating the distinctive S-curve that powers classification models.
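The rearrangement can be written out step by step. The following derivation is a sketch that uses the same symbols as the formula above (w for the weights, b for the intercept, p for the success probability):

$$
\begin{aligned}
\ln\frac{p}{1-p} &= w \cdot X + b \\
\frac{p}{1-p} &= e^{w \cdot X + b} \\
p &= (1 - p)\, e^{w \cdot X + b} \\
p \left(1 + e^{w \cdot X + b}\right) &= e^{w \cdot X + b} \\
p &= \frac{e^{w \cdot X + b}}{1 + e^{w \cdot X + b}} = \frac{1}{1 + e^{-(w \cdot X + b)}}
\end{aligned}
$$

The last step divides the numerator and denominator by $e^{w \cdot X + b}$.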

Understanding the Log-Likelihood Function

Maximum likelihood estimation drives parameter optimization. The likelihood function combines the observed outcomes into a product of per-observation probabilities. However, multiplying many tiny values risks numerical underflow.

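Applying logarithmic conversion solves this issue. The log-likelihood formula transforms products into sums: $\sum_i \left[ y_i \log(p_i) + (1 - y_i) \log(1 - p_i) \right]$, where $y_i$ is the observed outcome (0 or 1) and $p_i$ is the predicted probability for observation i. This stable expression allows efficient evaluation of model fit during training.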

Three critical advantages emerge:

- Preserves the best-fitting coefficients, because the logarithm is monotonic

- Enables gradient-based optimization methods

- Provides measurable improvement metrics
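To make the sum concrete, here is a minimal sketch of the log-likelihood in plain Python. The labels, the predicted probabilities, and the clipping tolerance `eps` are illustrative assumptions, not values from a real model.

```python
import math

def log_likelihood(y_true, p_pred, eps=1e-12):
    """Sum of y*log(p) + (1-y)*log(1-p) over all observations."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        # Clip p away from 0 and 1 so log() never receives 0 (eps is an assumed tolerance).
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total

# Hypothetical labels and predicted probabilities, for illustration only.
y_true = [1, 0, 1, 1]
good_fit = [0.9, 0.1, 0.8, 0.7]   # probabilities that lean toward the true labels
poor_fit = [0.5, 0.5, 0.5, 0.5]   # an uninformative model

ll_good = log_likelihood(y_true, good_fit)
ll_poor = log_likelihood(y_true, poor_fit)
print(ll_good, ll_poor)  # the better predictions score closer to 0
```

Because every term is the logarithm of a probability, the log-likelihood is never positive, and values closer to zero indicate a better fit.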

Types of Logistic Regression

Not all classification challenges are created equal. Three specialized approaches adapt the core algorithm to different data scenarios while maintaining predictive accuracy. Each variant addresses specific patterns in the dependent variable, from simple yes/no decisions to complex multi-tiered rankings.

Binomial Logistic Regression

This foundational method tackles binary classification problems with two possible outcomes. Banks use it to approve/deny loans—where each application becomes a probability score. Medical teams apply it to predict disease presence based on test results.

Key features include:

- Single decision boundary, placed at 50% probability by default

- Clear interpretation of variable impacts

- Compatibility with statistical assumptions for reliable results

Multinomial and Ordinal Approaches

When outcomes exceed two classes, multinomial regression handles unordered categories like product types. Marketing teams might predict which of five new items customers will prefer. The model calculates a probability for every option, and these probabilities sum to 1.
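In the common softmax formulation of multinomial logistic regression, each class gets its own linear score and the scores are normalized together into class probabilities. The sketch below shows only that normalization step; the five scores are hypothetical stand-ins for the linear outputs of a fitted model.

```python
import math

def softmax(scores):
    """Convert one linear score per class into probabilities that sum to 1."""
    # Subtracting the largest score avoids overflow in exp() without changing the result.
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical linear scores for five products.
scores = [2.0, 1.0, 0.1, -1.0, 0.5]
probs = softmax(scores)
predicted = probs.index(max(probs))  # index of the most likely product
print(probs, predicted)
```

The predicted class is simply the one with the highest score, but the full probability vector shows how close the alternatives are.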

Ordinal methods preserve natural sequences in data. Customer satisfaction surveys (poor → excellent) benefit from this approach. It respects the fact that “good” ranks above “neutral” by modeling cumulative probabilities across ordered thresholds rather than treating the categories as unrelated labels.
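One widely used ordinal formulation is the proportional-odds (cumulative logit) model, in which the probability of falling at or below category k is a sigmoid of a cutpoint minus the linear predictor. The sketch below assumes that formulation; the cutpoints and linear predictor values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def ordinal_probs(eta, cutpoints):
    """Category probabilities under a proportional-odds model.

    eta: linear predictor (w·x) for one observation.
    cutpoints: increasing thresholds, one fewer than the number of categories.
    """
    # P(Y <= k) = sigmoid(cutpoint_k - eta); the final category closes the range at 1.
    cumulative = [sigmoid(c - eta) for c in cutpoints] + [1.0]
    probs = []
    previous = 0.0
    for c in cumulative:
        probs.append(c - previous)  # P(Y = k) = P(Y <= k) - P(Y <= k-1)
        previous = c
    return probs

# Hypothetical cutpoints for four ordered ratings: poor < neutral < good < excellent.
cutpoints = [-1.0, 0.5, 2.0]
low = ordinal_probs(-2.0, cutpoints)   # a low linear predictor favors "poor"
high = ordinal_probs(3.0, cutpoints)   # a high linear predictor favors "excellent"
print(low, high)
```

A single set of weights moves probability mass up or down the ordered scale, which is how the ranking information is retained.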

Three critical selection factors:

- Number of possible outcomes

- Presence of ranking in categories

- Sample size per class

The Sigmoid Function and Probability Mapping

What transforms raw numerical scores into decisive yes/no predictions? The answer lies in a mathematical workhorse that shapes countless AI decisions daily. This critical component converts limitless values into clear probabilities—like turning a credit score into an approval likelihood.

Transforming Linear Outputs into an S-Curve

The sigmoid function is the model’s probability calculator. Defined as σ(z) = 1/(1+e⁻ᶻ), it takes any real number and outputs a value between 0 and 1. Extreme inputs approach these limits asymptotically, so the output never leaves the valid probability range.

Three unique properties make it indispensable:

- Smooth differentiability enables precise model adjustments during training

- Symmetry about the point (0, 0.5), since σ(−z) = 1 − σ(z), creates balanced decision thresholds

- Bounded outputs align perfectly with probability rules
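These properties are easy to check numerically. The following is a minimal sketch of the sigmoid in plain Python; splitting the computation by the sign of z is a common implementation choice (an assumption here, not something the formula requires) that avoids overflow for large negative inputs.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use the equivalent form e^z / (1 + e^z) so exp() cannot overflow.
    ez = math.exp(z)
    return ez / (1.0 + ez)

for z in (-1000, -2, 0, 2, 1000):
    print(z, sigmoid(z))

# Symmetry check: sigmoid(-z) should equal 1 - sigmoid(z).
symmetry_gap = abs(sigmoid(-2) - (1.0 - sigmoid(2)))
```

In floating-point arithmetic the outputs for very large inputs round to exactly 0.0 or 1.0, even though the mathematical function only approaches those limits.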

Consider loan approvals: A score of 0.73 doesn’t just mean “likely.” It quantifies risk, allowing banks to set custom thresholds. The S-curve is steepest near the midpoint, where the model is least certain, and flattens toward the extremes, so changes to an already extreme score barely move the predicted probability.

This function also solves optimization challenges. Its derivative simplifies to σ(z)(1-σ(z))—a computational gift that accelerates gradient calculations. Engineers leverage this property to build efficient algorithms that scale across industries.
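The derivative identity can be verified against a finite-difference approximation. This is a small sanity check rather than a training routine; the step size `h` and the test points are arbitrary choices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Analytic derivative: sigmoid(z) * (1 - sigmoid(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)

def numeric_grad(f, z, h=1e-6):
    """Central finite-difference approximation of f'(z)."""
    return (f(z + h) - f(z - h)) / (2.0 * h)

test_points = (-3.0, -1.0, 0.0, 0.5, 4.0)
max_gap = max(abs(sigmoid_grad(z) - numeric_grad(sigmoid, z)) for z in test_points)
print(max_gap)            # tiny: the two derivatives agree
print(sigmoid_grad(0.0))  # 0.25, the steepest point of the curve
```

Because the gradient reuses the already computed sigmoid value, no extra exponential is needed during training.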

From medical diagnostics to spam filters, the sigmoid’s probability mapping turns abstract math into real-world decisions. Its elegant design remains unmatched for binary classification tasks requiring both precision and interpretability.

From Odds to Log-Odds: Interpreting the Logistic Equation

Imagine translating “maybe” into precise numbers that drive business decisions. This alchemy happens through three connected concepts: probability, odds, and log-odds. Probability measures chance (20% rain), while odds compare success to failure (1:4 ratio).

The logit function completes this chain by applying the natural logarithm to odds. This transforms bounded values into an unbounded scale, which is crucial for modeling real-world relationships. A marketing campaign with 3:1 conversion odds becomes ln(3) ≈ 1.10 log-odds.

| Metric | Range | Interpretation |
|---|---|---|
| Probability | 0 to 1 | Direct likelihood estimate |
| Odds | 0 to ∞ | Success/failure ratio |
| Log-Odds | −∞ to ∞ | Unbounded linear scale |
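The three scales convert into one another with a few lines of arithmetic. The sketch below reuses the rain and marketing examples from the text; the function names are illustrative.

```python
import math

def prob_to_odds(p):
    return p / (1.0 - p)

def odds_to_log_odds(odds):
    return math.log(odds)

def log_odds_to_prob(z):
    # Inverse of the logit: the sigmoid function.
    return 1.0 / (1.0 + math.exp(-z))

rain_odds = prob_to_odds(0.20)                       # 0.25, i.e. 1:4
campaign_log_odds = odds_to_log_odds(3.0)            # about 1.0986
campaign_prob = log_odds_to_prob(campaign_log_odds)  # about 0.75
print(rain_odds, campaign_log_odds, campaign_prob)
```

Going from log-odds back to probability is exactly the sigmoid, which is why the two functions are described as inverses.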

Coefficients reveal each variable’s impact in this equation. A 0.5 weight means: “One unit increase in X adds 0.5 log-odds.” Financial analysts use this to quantify how credit scores affect approval chances.

Exponentiating coefficients creates odds ratios, powerful tools for stakeholders. An odds ratio of 2.0 translates to “double the odds of success per unit change,” which is not the same as doubling the probability. This bridges complex math with actionable business insights.
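An odds ratio acts on odds, so its effect on probability depends on the starting point. The sketch below applies a hypothetical coefficient of ln(2), chosen so the odds ratio is 2, to three baseline probabilities.

```python
import math

def apply_odds_ratio(p, odds_ratio):
    """Return the new probability after multiplying the odds of p by odds_ratio."""
    odds = p / (1.0 - p)
    new_odds = odds * odds_ratio
    return new_odds / (1.0 + new_odds)

beta = math.log(2.0)          # hypothetical coefficient chosen so that exp(beta) is 2
odds_ratio = math.exp(beta)

for baseline in (0.10, 0.50, 0.90):
    updated = apply_odds_ratio(baseline, odds_ratio)
    print(f"p = {baseline:.2f} -> {updated:.3f}")
```

The baselines move to roughly 0.18, 0.67 and 0.95: the odds double in every case, but the change in probability shrinks as the baseline approaches 1.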

The equation log(p/(1-p)) = β₀ + β₁X₁ becomes a universal translator. It converts raw data into decision-ready formats while maintaining interpretability – a rare combination in predictive modeling.

Maximum Likelihood Estimation and Cost Functions in Logistic Regression

What if algorithms could learn decision patterns by reverse-engineering success? This is the power of maximum likelihood estimation – the mathematical compass guiding classification models toward optimal performance. Unlike trial-and-error approaches, MLE systematically identifies coefficients that make observed data most probable.

The likelihood function acts as a truth detector. It multiplies individual outcome probabilities, creating a single measure of how well the model explains the data. But multiplying many small decimals risks numerical underflow to zero, a problem avoided by converting to log-likelihood. This transformation turns fragile products into manageable sums without changing which coefficients are optimal.

Three key advantages emerge:

- Enables gradient-based optimization for efficient training

- Quantifies improvement through measurable score changes

- Maintains stability with large datasets
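To show how gradient-based optimization uses the log-likelihood, here is a minimal single-feature sketch trained with plain gradient ascent. The toy data, learning rate, and step count are assumptions chosen for illustration; production libraries typically use more sophisticated solvers and regularization.

```python
import math

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def log_likelihood(w, b, xs, ys):
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total

def fit_logistic(xs, ys, lr=0.1, steps=5000):
    """Fit p = sigmoid(w*x + b) by gradient ascent on the mean log-likelihood."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals (y - p) drive both gradients.
        residuals = [y - sigmoid(w * x + b) for x, y in zip(xs, ys)]
        grad_w = sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = sum(residuals) / n
        w += lr * grad_w
        b += lr * grad_b
    return w, b

# Hypothetical toy data: larger x makes the positive class more likely, with some overlap.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = fit_logistic(xs, ys)
start_ll = log_likelihood(0.0, 0.0, xs, ys)
final_ll = log_likelihood(w, b, xs, ys)
print(w, b, start_ll, final_ll)
```

The overlap in the toy data matters: if the classes were perfectly separable, the likelihood would keep improving as the weights grow without bound.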

Real-world applications range from credit risk models to medical diagnostics. Sports analysts employ similar principles when predicting game outcomes, demonstrating how probabilistic frameworks transcend industries. The function’s design ensures predictions align with actual event frequencies.

Mastering these concepts transforms practitioners from model users to strategic architects. By understanding how algorithms learn rather than just calculate, professionals unlock deeper insights into their data – turning theoretical machinery into decision-making superpowers.

FAQ

How does logistic regression differ from linear regression?

While both methods model relationships between variables, linear regression predicts continuous outcomes, whereas logistic regression estimates probabilities for binary or categorical classification. The latter uses a sigmoid function to constrain outputs between 0 and 1, making it ideal for classification problems like spam detection.

Why is the sigmoid function critical in this model?

The sigmoid function maps linear combinations of inputs into probabilities ranging from 0 to 1. This S-shaped curve converts raw scores into interpretable likelihoods, enabling clear decision boundaries for classification tasks such as medical diagnosis or customer churn prediction.

What role does the log-likelihood function play?

Maximum likelihood estimation optimizes model parameters by maximizing the log-likelihood function. This method quantifies how well predicted probabilities align with observed outcomes, ensuring the model accurately separates classes in datasets like credit risk assessments.

When should multinomial logistic regression be used?

Multinomial approaches handle scenarios with three or more unordered categories, such as predicting product categories or election outcomes. Ordinal regression applies when target classes follow a natural order, like rating scales in customer feedback analysis.

How do coefficients relate to odds ratios?

Coefficients represent log-odds changes per unit increase in predictors. Exponentiating them yields odds ratios, simplifying interpretation. For example, a coefficient of 0.5 in marketing analytics implies about 64.9% higher odds of conversion for each additional ad impression, because e^0.5 ≈ 1.649.

What assumptions must hold for valid results?

Key assumptions include linearity between log-odds and features, independence of observations, and minimal multicollinearity. In Python, scikit-learn fits the model but does not itself provide these diagnostics; libraries such as statsmodels offer variance inflation factor checks (`variance_inflation_factor`), and residual analysis helps validate the remaining conditions.
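For intuition, the variance inflation factor of predictor j is 1 / (1 − R²), where R² comes from regressing predictor j on the other predictors. With exactly two predictors this reduces to 1 / (1 − r²), with r the Pearson correlation between them. The sketch below covers only that two-predictor special case, on made-up data.

```python
import math

def pearson_r(a, b):
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def vif_two_predictors(a, b):
    """VIF for a model with exactly two predictors: 1 / (1 - r^2)."""
    r = pearson_r(a, b)
    return 1.0 / (1.0 - r ** 2)

# Hypothetical predictors for six loan applicants.
income = [30, 45, 50, 62, 75, 90]
credit_limit = [3.1, 4.4, 5.2, 6.0, 7.7, 8.8]  # nearly proportional to income
age = [41, 23, 35, 52, 29, 47]                 # only weakly related to income

vif_collinear = vif_two_predictors(income, credit_limit)
vif_independent = vif_two_predictors(income, age)
print(vif_collinear, vif_independent)
```

A VIF near 1 indicates little shared information, while a very large value suggests the two predictors are close to redundant; common rules of thumb flag values above roughly 5 or 10.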

Why avoid ordinary least squares here?

Ordinary least squares assumes normally distributed errors with constant variance, which binary outcomes violate, and its predictions can fall outside the 0 to 1 range. Maximum likelihood estimation better handles the binomial distribution of classification tasks, improving reliability in applications like fraud detection systems.
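One practical symptom is easy to reproduce: a straight line fitted by least squares to 0/1 outcomes can produce "probabilities" below 0 or above 1. The sketch below uses a hypothetical eight-point dataset and the closed-form solution for a single predictor.

```python
def ols_fit(xs, ys):
    """Closed-form simple linear regression: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x

# Hypothetical binary outcomes that switch from 0 to 1 as x grows.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

slope, intercept = ols_fit(xs, ys)
pred_low = slope * 0 + intercept    # prediction at x = 0
pred_high = slope * 10 + intercept  # prediction at x = 10
print(pred_low, pred_high)          # one falls below 0, the other above 1
```

A logistic model fitted to the same data would keep every prediction strictly between 0 and 1.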