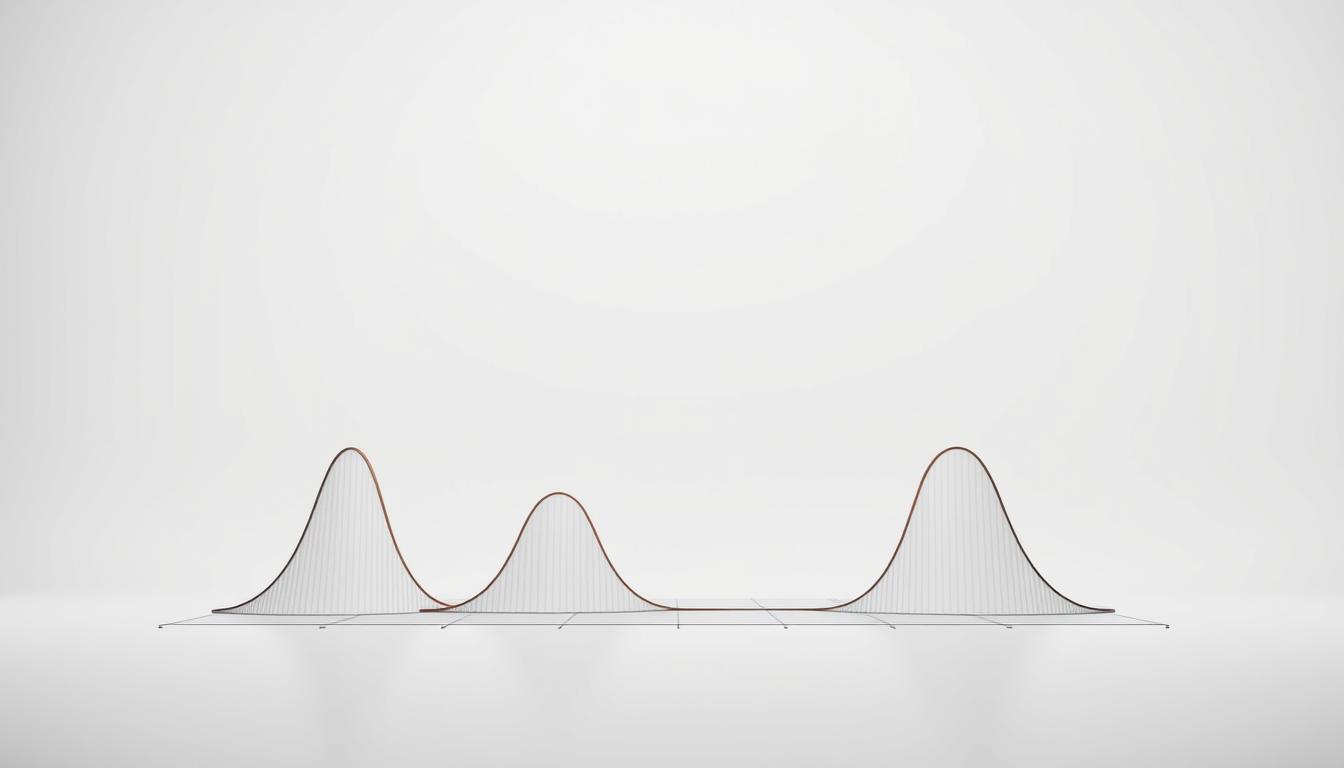

Much of modern statistical analysis rests on a concept developed in 1937, yet many professionals still underestimate its power. Jerzy Neyman’s introduction of range-based estimation, the confidence interval, transformed how we interpret data, replacing oversimplified single-number guesses with ranges that state how uncertain an estimate is.

Traditional point estimates often mislead decision-makers by ignoring natural variability. Imagine predicting election results using only one poll or assessing drug efficacy through a single metric. This approach collapses complex realities into dangerously simplistic conclusions.

Neyman’s method instead asks: what range of values is consistent with our evidence? By accounting for sampling variability, this strategy lets professionals quantify uncertainty. From pharmaceutical trials to market trend forecasts, it bridges the gap between theoretical models and real-world applications.

Key Takeaways

- Range-based estimates convey the uncertainty that single-point guesses hide

- Sampling variability significantly impacts data interpretation

- Modern industries demand reliability metrics alongside projections

- 1937 innovation remains foundational in analytics

- Decision-making improves when embracing uncertainty

Introduction to Confidence Intervals and Estimation

Every day, crucial decisions are made from incomplete snapshots of data, often by people unaware of a more robust statistical framework. Researchers and analysts face a critical challenge: how to translate sample observations into reliable conclusions about entire groups. This is where range-based estimation shines, offering clarity in uncertainty.

Overview of Key Concepts

Statistical estimation takes two forms. A point estimate gives a single value, like guessing a city’s average temperature from one weather station. An interval estimate creates a range around that value, acknowledging natural fluctuations in data. In drug trials, for example, a medication might show a 15% improvement, but the true effect could reasonably fall between 12% and 18%.

Biomedical teams rarely study whole populations due to cost and logistics. Instead, they analyze samples and use intervals to gauge how closely their results mirror reality. This approach prevents overconfidence in findings that might shift with different participant groups.

Importance in Statistical Analysis

Why do so many peer-reviewed journals require interval reporting? Because single numbers often hide variability. Imagine two blood pressure drugs with identical average results, but one produces tightly clustered outcomes while the other swings wildly. Intervals expose these critical differences.
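To make the two-drug comparison concrete, here is a minimal Python sketch with invented data: both hypothetical drugs average a 10 mmHg reduction, but their outcomes are spread very differently. The helper `mean_ci` is a name introduced here for illustration, and it uses the large-sample z formula for simplicity.

```python
import math
import statistics
from statistics import NormalDist

def mean_ci(values, confidence=0.95):
    """Large-sample z-based confidence interval for a mean."""
    n = len(values)
    mean = statistics.fmean(values)
    se = statistics.stdev(values) / math.sqrt(n)      # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)    # two-sided critical value
    return mean - z * se, mean + z * se

# Hypothetical blood pressure reductions (mmHg), 200 patients per drug.
drug_a = [8, 9, 10, 11, 12] * 40      # tightly clustered outcomes
drug_b = [-5, 2, 10, 18, 25] * 40     # widely scattered outcomes

for name, data in (("Drug A", drug_a), ("Drug B", drug_b)):
    low, high = mean_ci(data)
    print(f"{name}: mean {statistics.fmean(data):.1f}, 95% CI {low:.2f} to {high:.2f}")
```

Both means are exactly 10, yet Drug B's interval comes out about 7.6 times wider than Drug A's, which is the difference a single average would hide.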

Data scientists increasingly prioritize this method because it aligns with real-world complexity. As one researcher notes: “A forecast without a margin of error is like a map without scale—it might look precise, but it’s dangerously incomplete.” Modern analytics demands tools that separate measurable trends from random noise.

Fundamental Concepts and Terminology

Behind every data-driven decision lies a critical choice: trust a single number or embrace a range of possibilities. This distinction separates basic data interpretation from meaningful statistical analysis.

Understanding Population Parameters and Sample Data

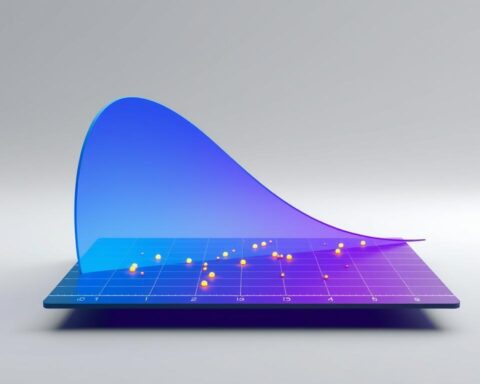

Population parameters represent true values for entire groups—like the average income of all U.S. software engineers. Since studying every individual is impractical, analysts use sample data to estimate these hidden truths. A survey of 1,000 tech workers might reveal a $120,000 average salary, but this remains an approximation of the real parameter.
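The gap between a parameter and its estimate can be simulated. The sketch below invents a population of salaries (the lognormal shape and its parameters are assumptions for illustration, not real salary data), computes the true mean that a real analyst could never see, and compares it with the mean of one random sample of 1,000.

```python
import random
import statistics

# Hypothetical population of 200,000 salaries. The right-skewed lognormal
# shape and its parameters are assumptions chosen only for illustration.
rng = random.Random(42)
population = [rng.lognormvariate(11.7, 0.25) for _ in range(200_000)]
true_mean = statistics.fmean(population)    # the parameter: unknowable in practice

# A survey only ever sees a random sample, here 1,000 people.
sample = rng.sample(population, 1_000)
estimate = statistics.fmean(sample)         # the point estimate of that parameter

print(f"population mean: ${true_mean:,.0f}")
print(f"sample estimate: ${estimate:,.0f}")
print(f"sampling error:  {estimate - true_mean:+,.0f}")
```

Rerunning with a different seed gives a different estimate each time; that sample-to-sample spread is what an interval is designed to quantify.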

Point Estimates vs Range-Based Estimates

A point estimate acts like a spotlight—focusing on one value while leaving surrounding areas dark. Consider these examples:

| Approach | Tech Salary Example | Clinical Trial Result |
|---|---|---|
| Point Estimate | $122,500 | 18% improvement |
| Range-Based | $115k-$130k | 15%-21% improvement |

The table reveals why professionals prefer ranges. While point estimates appear precise, they ignore natural data fluctuations. A pharmaceutical researcher explains: “Reporting only average results is like describing a storm with just rainfall totals—you miss the wind speeds and duration.”

Modern analysis prioritizes transparency. By showing plausible value ranges, teams acknowledge sampling limitations while providing actionable insights. This approach transforms raw numbers into strategic tools for decision-making.

Step-by-Step Guide to Calculating Confidence Intervals

Mastering confidence interval calculations transforms raw data into strategic insights—here’s how professionals achieve precision. The process balances mathematical rigor with practical decision-making, requiring careful formula selection and clear understanding of variability.

Choosing the Appropriate Formula

Three factors dictate formula selection: data type, sample size, and population characteristics. For means with large samples (n ≥30), analysts use: Sample mean ± z × (Standard deviation/√n). Critical z-values define range widths:

| Confidence Level | Z-Value | Range Width |
|---|---|---|
| 90% | 1.65 | Narrowest |
| 95% | 1.96 | Balanced |
| 99% | 2.58 | Widest |
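The large-sample formula and its critical values can be reproduced with Python's standard-library `statistics.NormalDist`. The helper names `z_critical` and `z_interval` are illustrative, and the example inputs (mean 50, standard deviation 10, n = 100) are hypothetical.

```python
import math
from statistics import NormalDist

def z_critical(confidence):
    """Two-sided critical value: the z with `confidence` probability between -z and z."""
    return NormalDist().inv_cdf(0.5 + confidence / 2)

def z_interval(sample_mean, sd, n, confidence=0.95):
    """Sample mean ± z × (sd / √n), the large-sample formula."""
    margin = z_critical(confidence) * sd / math.sqrt(n)
    return sample_mean - margin, sample_mean + margin

for level in (0.90, 0.95, 0.99):
    print(f"{level:.0%}: z = {z_critical(level):.3f}")

# Hypothetical sample: mean 50, standard deviation 10, n = 100.
low, high = z_interval(50.0, 10.0, 100)
print(f"95% interval: {low:.2f} to {high:.2f}")
```

The loop prints 1.645, 1.960 and 2.576, the unrounded versions of the values in the table.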

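When the population standard deviation is unknown and must be estimated from a small sample, use the t-distribution instead. Its critical values are larger than the z-values, widening the interval to reflect the extra uncertainty, and they approach the z-values as the sample grows. Ignoring this distinction leads to overconfident conclusions, like using the wrong tool for an engine repair.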
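To see how much the t-distribution widens small-sample intervals, this sketch computes two-sided 95% t critical values numerically from the t density (Simpson's rule plus bisection) so that it needs only the standard library. This is an illustrative approach, not how statistical packages compute it; in practice a call such as SciPy's `scipy.stats.t.ppf` returns these quantiles directly.

```python
import math

def t_cdf(x, df, steps=2000):
    """CDF of Student's t at x >= 0, by Simpson's rule on the density over [0, x]."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    c = math.exp(log_c)

    def density(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = x / steps
    total = density(0.0) + density(x)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * density(i * h)   # Simpson weights 4, 2, 4, ...
    return 0.5 + total * h / 3   # the density is symmetric, so P(T <= 0) = 0.5

def t_critical(confidence, df):
    """Two-sided critical value, found by bisection on t_cdf."""
    target = 0.5 + confidence / 2
    lo, hi = 0.0, 100.0
    for _ in range(50):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

for n in (5, 10, 30, 100):
    print(f"n = {n:>3}: t = {t_critical(0.95, n - 1):.3f}   (z = 1.960)")
```

For a one-sample mean the degrees of freedom are n − 1. The printed values fall from about 2.78 at n = 5 to about 1.98 at n = 100, approaching z = 1.96 as the sample grows.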

Defining Margin of Error and Confidence Level

The margin of error acts as a precision gauge. Consider measuring average website load times: with 500 samples and standard deviation 0.8s, the error margin shrinks to ±0.07s at 95% confidence. Larger samples compress this range, while higher confidence levels expand it.
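The load-time figures can be checked with the margin-of-error formula z × sd / √n. The sketch uses the text's 500 samples and 0.8 s standard deviation; the extra lines with 2,000 samples and 99% confidence are added only to illustrate the two trade-offs the paragraph describes.

```python
import math
from statistics import NormalDist

def margin_of_error(sd, n, confidence=0.95):
    """Half-width of a z-based interval: z × sd / √n."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return z * sd / math.sqrt(n)

# Website load times from the text: 500 samples, standard deviation 0.8 s.
print(f"{margin_of_error(0.8, 500):.3f} s")          # 95% confidence
print(f"{margin_of_error(0.8, 2000):.3f} s")         # four times the data
print(f"{margin_of_error(0.8, 500, 0.99):.3f} s")    # higher confidence
```

The 95% margin comes out near ±0.070 s; quadrupling the sample halves it, and moving to 99% confidence widens it to about ±0.092 s.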

Researchers often reverse-engineer sample sizes based on desired precision. Because the required sample size grows with the square of the critical value, a clinical trial aiming for ±2% error at 99% confidence needs roughly 2.5 times the participants of a 90% confidence study, since (2.576 / 1.645)² ≈ 2.45. This mathematical trade-off ensures resources align with accuracy needs.
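The sample-size arithmetic can be checked directly by inverting the margin-of-error formula. This sketch assumes the ±2% is an absolute margin on a proportion and uses p = 0.5, the conservative worst case; both are assumptions, since the text does not specify the outcome type, and `sample_size_for_proportion` is an illustrative name.

```python
import math
from statistics import NormalDist

def sample_size_for_proportion(margin, confidence, p=0.5):
    """Smallest n with z * sqrt(p(1 - p) / n) <= margin; p = 0.5 is the worst case."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)

n90 = sample_size_for_proportion(0.02, 0.90)
n99 = sample_size_for_proportion(0.02, 0.99)
print(n90, n99, round(n99 / n90, 2))
```

This gives 1,691 participants at 90% and 4,147 at 99%, a ratio of about 2.45, because the required sample size scales with the square of the critical value.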

Interpreting Confidence Levels and Estimation Results

What does a 95% confidence level truly guarantee? Misreading the answer is one reason research findings get overstated and later fail to replicate. Statistical confidence works like a forecaster’s track record: a 70% rain prediction means that days with similar atmospheric conditions see precipitation about 7 times out of 10, rather than describing one particular storm.

Assessing Variability and Estimation Accuracy

A common misunderstanding persists: many assume a specific interval has X% probability of containing the true population parameter. In reality, the confidence level describes the method’s track record across infinite samples. Once calculated, your range either captures the hidden value or doesn’t—like a net that either caught fish or remained empty.

| Confidence Level | Z-Score | Practical Implication |
|---|---|---|
| 90% | 1.645 | Tighter range, higher risk of missing truth |
| 95% | 1.960 | Balanced precision/reliability |
| 99% | 2.576 | Wider net, lower error risk |
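The track-record interpretation can be demonstrated by simulation: fix a true mean, draw many samples, build an interval from each, and count how often the intervals contain the truth. The population settings below (normal data, mean 100, standard deviation 15, n = 50) are arbitrary assumptions for illustration.

```python
import math
import random
from statistics import NormalDist

def coverage_rate(confidence, true_mean=100.0, sd=15.0, n=50, trials=5_000, seed=1):
    """Share of repeated-sample z-intervals that contain the fixed true mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sd) for _ in range(n)]
        mean = sum(sample) / n
        sample_sd = math.sqrt(sum((x - mean) ** 2 for x in sample) / (n - 1))
        margin = z * sample_sd / math.sqrt(n)
        if mean - margin <= true_mean <= mean + margin:   # did this net catch the fish?
            hits += 1
    return hits / trials

for level in (0.90, 0.95, 0.99):
    print(f"{level:.0%} intervals contained the true mean in {coverage_rate(level):.1%} of samples")
```

Each individual interval either contains 100 or does not; only the long-run hit rate approaches the stated level. Because these intervals use z with an estimated standard deviation, the rates may land slightly below nominal at n = 50.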

Pharmaceutical teams face this trade-off daily. A drug trial using 99% confidence might report effectiveness between 12% and 28%, too broad for clear decisions. Dropping to 90% narrows the same data’s range to roughly 15% to 25%, but the method now misses the true effect in about 1 of every 10 repeated studies instead of 1 in 100.
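The 99%-to-90% comparison follows from backing out the standard error implied by the wider interval and rebuilding it with a smaller critical value. This minimal sketch assumes the trial reported a symmetric, normal-based interval; `rescale_interval` is a hypothetical helper, not a standard function.

```python
from statistics import NormalDist

def rescale_interval(low, high, from_level, to_level):
    """Re-express a symmetric z-based interval at a different confidence level."""
    center = (low + high) / 2
    z_from = NormalDist().inv_cdf(0.5 + from_level / 2)
    z_to = NormalDist().inv_cdf(0.5 + to_level / 2)
    se = (high - low) / 2 / z_from      # back out the standard error
    margin = z_to * se
    return center - margin, center + margin

low, high = rescale_interval(12, 28, 0.99, 0.90)
print(f"90% interval: {low:.1f}% to {high:.1f}%")
```

Starting from 12% to 28% at 99%, the 90% interval comes out at about 14.9% to 25.1%, consistent with the rounded 15% to 25% in the example.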

Variability analysis through intervals acts as a quality control measure. Manufacturing engineers use similar principles: if 95% of product dimensions fall within tolerance ranges, the production method proves reliable. The true value remains fixed—our measurements dance around it.

Practical Examples and Real-World Applications

From drug development to streaming analytics, statistical ranges shape decisions where precision matters. These scenarios show how professionals convert raw data into actionable insights while respecting inherent variability.

Biomedical Breakthroughs Through Data Ranges

A study of 72 physicians revealed systolic blood pressure averages of 134 mmHg (SD=5.2). Using the formula:

134 ± 1.96 × (5.2/√72)

Researchers calculated a 95% range of 132.8–135.2 mmHg. This tight span helped cardiologists assess hypertension risks without overstating results.
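The blood pressure calculation takes only a few lines to reproduce, using the mean, standard deviation and sample size given in the text.

```python
import math

mean_bp, sd_bp, n = 134, 5.2, 72    # values reported in the text
se = sd_bp / math.sqrt(n)           # standard error, about 0.61 mmHg
margin = 1.96 * se                  # 95% margin of error, about 1.20 mmHg
print(f"95% CI: {mean_bp - margin:.1f} to {mean_bp + margin:.1f} mmHg")
```

Using a t critical value for 71 degrees of freedom (about 1.99) instead of 1.96 gives the same rounded range here, since the sample is moderately large.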

From Music Downloads to Pizza Deliveries

Consider these diverse cases:

| Industry | Sample Data | Calculated Range |
|---|---|---|
| Digital Music | 100 users, 2 avg downloads | 1.8–2.2 (95% CI) |
| Food Service | 28 deliveries, 36-min avg | 34.13–37.87 mins (90% CI) |

Streaming platforms use such ranges to predict server loads. Pizza chains set delivery guarantees while accounting for traffic variables. Both examples demonstrate how calculated uncertainty improves operational planning.
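The table does not report standard deviations, so this sketch back-solves them as assumptions: a standard deviation of 1 download and of 6 minutes, fed into the z-based formula, reproduce the listed ranges. Treat those two values as hypothetical, not as the original data.

```python
import math
from statistics import NormalDist

def z_interval(mean, sd, n, confidence):
    """Mean ± z × sd / √n."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * sd / math.sqrt(n)
    return mean - margin, mean + margin

# Standard deviations are NOT given in the text; these are assumed values
# back-solved so that the z-based formula reproduces the table's ranges.
cases = {
    "Digital Music (downloads)": (2, 1.0, 100, 0.95),   # assumed sd = 1 download
    "Food Service (minutes)": (36, 6.0, 28, 0.90),      # assumed sd = 6 minutes
}
for label, (mean, sd, n, conf) in cases.items():
    low, high = z_interval(mean, sd, n, conf)
    print(f"{label}: {low:.2f} to {high:.2f} ({conf:.0%} CI)")
```

Note that the pizza example uses a 90% level, so its critical value is 1.645 rather than 1.96.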

Data scientists emphasize: “Ranges aren’t flaws—they’re fidelity markers.” By quantifying what we don’t know, teams make informed choices amidst real-world complexity.

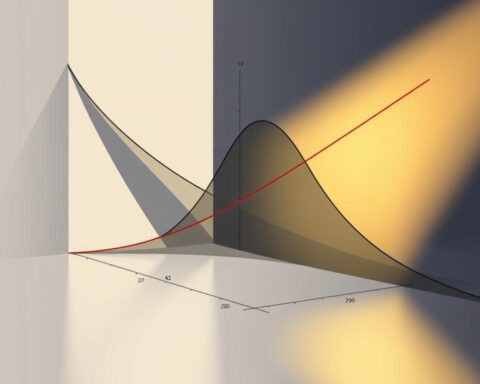

Advanced Considerations in Confidence Interval Estimation

Real-world data rarely fits textbook models, a truth that separates novice analysts from seasoned professionals. When distributions are skewed or clearly non-normal, standard methods can produce misleading intervals, especially with small samples. This demands adaptive strategies that preserve accuracy without sacrificing interpretability.

Handling Skewed Distributions and Non-Normal Data

Biomedical researchers analyzing odds ratios face a common challenge: a ratio cannot fall below zero but can stretch far above one, so its sampling distribution is skewed. The solution? Logarithmic transformations. By converting the ratio to its natural log, calculating the standard error and the interval on that scale, then exponentiating the endpoints, teams maintain interpretable ranges. The resulting interval is symmetric on the log scale but asymmetric on the original scale, extending further above the point estimate than below it, which matches how ratios actually behave.
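The log-transform steps can be sketched for an odds ratio from a 2x2 table. The standard error used here is Woolf's formula, √(1/a + 1/b + 1/c + 1/d), a common choice but an assumption, since the text names no formula; the counts are invented for illustration.

```python
import math
from statistics import NormalDist

def odds_ratio_ci(a, b, c, d, confidence=0.95):
    """Log-scale (Woolf) interval for the odds ratio of a 2x2 table.

    a, b: exposed group with / without the outcome
    c, d: unexposed group with / without the outcome
    """
    log_or = math.log((a * d) / (b * c))              # step 1: move to the log scale
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)     # step 2: standard error of log(OR)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    # step 3: symmetric interval on the log scale, then exponentiate back
    return math.exp(log_or), math.exp(log_or - z * se), math.exp(log_or + z * se)

# Hypothetical counts, for illustration only.
or_hat, low, high = odds_ratio_ci(30, 70, 15, 85)
print(f"OR = {or_hat:.2f}, 95% CI {low:.2f} to {high:.2f}")
```

The resulting interval (about 1.21 to 4.87 around an odds ratio of about 2.43) stretches further above the estimate than below it, because it is symmetric only on the log scale.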

Non-parametric methods shine when traditional assumptions fail. For a sample of 100 home prices, compare a mean-based parametric interval with a rank-based interval for the median:

| Method | Calculation | 95% Range |
|---|---|---|
| Parametric | Mean ± 1.96×SE | $285k-$315k |
| Rank-Based | 40th-61st values | $272k-$329k |

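The parametric interval spans $30k while the rank-based interval spans $57k, suggesting the parametric method is overconfident for this data. Strictly speaking, the two intervals target different quantities: the mean and the median. Financial analysts often prefer rank-based approaches for housing markets, where price distributions tend to be right-skewed rather than bell-shaped.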
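The 40th and 61st ranks come from the binomial distribution: the number of observations below the true median follows Binomial(n, 0.5). This sketch finds those ranks exactly with the standard library; `median_ci_ranks` and `median_ci` are illustrative names.

```python
from math import comb

def median_ci_ranks(n, confidence=0.95):
    """1-based ranks (j, k) so that [x_(j), x_(k)] covers the median with at least `confidence`.

    Uses the exact Binomial(n, 0.5) count of observations falling below the median.
    """
    alpha = 1 - confidence
    cdf, j = 0.0, 0
    for i in range(n + 1):
        cdf += comb(n, i) / 2 ** n        # running P(B <= i), B ~ Binomial(n, 0.5)
        if cdf > alpha / 2:
            break
        j = i + 1
    if j == 0:
        raise ValueError("sample too small for this confidence level")
    return j, n - j + 1

def median_ci(values, confidence=0.95):
    """Read the interval endpoints off the sorted data at those ranks."""
    ordered = sorted(values)
    j, k = median_ci_ranks(len(ordered), confidence)
    return ordered[j - 1], ordered[k - 1]

print(median_ci_ranks(100))
```

For n = 100 it returns ranks 40 and 61, giving an actual coverage of about 96.5%, slightly above 95% because ranks are discrete.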

These techniques aren’t mere statistical acrobatics. As one FDA reviewer notes: “Transformed intervals prevent life-saving drugs from being mislabeled as dangerous—or vice versa.” The key lies in matching method to data reality, not textbook ideals.

Confidence Intervals and Estimation Best Practices

In data-driven fields, precision begins long before calculations—it starts with how we collect information. Effective sampling strategies form the bedrock of reliable interval estimates, transforming raw numbers into trustworthy insights.

Mastering Data Collection Fundamentals

Random sampling remains the gold standard for minimizing bias. When studying a population, ensure every member has an equal chance of selection. This guards against hidden selection patterns that distort results, such as surveying only morning shoppers to estimate overall retail trends.

Sample size directly impacts accuracy. A survey of 400 households might reveal voting preferences within ±5% error margins. Doubling the number tightens this range to ±3.5%, while cutting it to 100 widens to ±10%. These trade-offs guide resource allocation across clinical trials and market research alike.
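The quoted margins match the standard formula for a proportion, assuming 95% confidence and a 50/50 split (the worst case). Those two assumptions are not stated in the text, and `proportion_margin` is an illustrative name.

```python
import math
from statistics import NormalDist

def proportion_margin(n, p=0.5, confidence=0.95):
    """Margin of error for a sample proportion: z * sqrt(p(1 - p) / n)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return z * math.sqrt(p * (1 - p) / n)

for n in (100, 400, 800):
    print(f"n = {n}: ±{proportion_margin(n):.1%}")
```

The printed margins are ±9.8%, ±4.9% and ±3.5%. Doubling from 400 to 800 cuts the margin by 1 − 1/√2, about 29%.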

Seasoned analysts match methods to population characteristics. Stratified sampling proves invaluable when subgroups behave differently—like separating urban/rural respondents in policy studies. This technique sharpens interval estimates while maintaining manageable sample sizes.
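Stratification helps because it removes the between-group spread from the standard error. This sketch compares a stratified estimate with an approximate simple-random-sample standard error for the same 400 respondents. All numbers are hypothetical, allocation is assumed proportional to each stratum's population share, and the finite-population correction is ignored.

```python
import math

def stratified_estimate(strata):
    """Stratified mean and its standard error.

    Each stratum is (weight, n, sample_mean, sample_sd), where weight is the
    stratum's share of the population. Ignores the finite-population correction.
    """
    mean = sum(w * m for w, _, m, _ in strata)
    variance = sum(w ** 2 * s ** 2 / n for w, n, _, s in strata)
    return mean, math.sqrt(variance)

def srs_standard_error(strata):
    """Approximate SE of a simple random sample of the same total size.

    Adds back the between-stratum spread that stratification removes.
    """
    total_n = sum(n for _, n, _, _ in strata)
    overall = sum(w * m for w, _, m, _ in strata)
    within = sum(w * s ** 2 for w, _, _, s in strata)
    between = sum(w * (m - overall) ** 2 for w, _, m, _ in strata)
    return math.sqrt((within + between) / total_n)

# Hypothetical urban/rural survey with proportional allocation (400 respondents).
strata = [(0.8, 320, 50.0, 10.0),   # urban
          (0.2, 80, 30.0, 8.0)]     # rural
mean, se = stratified_estimate(strata)
print(f"stratified: {mean:.1f} ± {1.96 * se:.2f}")
print(f"simple random (approx.): {mean:.1f} ± {1.96 * srs_standard_error(strata):.2f}")
```

With these made-up urban and rural figures, the stratified margin is about ±0.94 versus about ±1.23 without stratification; the gain grows as the strata means move further apart.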

Technology now enables dynamic adjustments. Adaptive designs let researchers modify aspects of a study, such as sample size or enrollment criteria, based on interim results under pre-specified rules, a practice increasingly used in pharmaceutical development. As one data strategist notes: “Smart collection beats complex correction.”

FAQ

How does a confidence interval differ from a single point estimate?

A point estimate provides one specific value as an approximation of a population parameter, like using a sample mean to estimate the true average. A confidence interval, however, creates a range around that estimate—factoring in variability and uncertainty—to show where the true population value likely lies with a defined confidence level.

Why is the margin of error critical in interpreting results?

The margin of error quantifies the expected variability in an estimate due to random sampling. A smaller margin indicates higher precision, while a larger one reflects greater uncertainty. For example, a 95% confidence interval with a ±3% margin of error means the procedure that produced it captures the true population parameter in about 95% of repeated samples. Any single interval either contains the parameter or does not.

Can confidence intervals be applied to non-normal data distributions?

Yes. While traditional methods assume normality, techniques like bootstrapping or transformations (e.g., log adjustments) allow statisticians to calculate reliable intervals for skewed data. The central limit theorem also means that the sampling distribution of the mean is approximately normal for large samples, even when the underlying data are not.
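A percentile bootstrap, one of the techniques mentioned above, can be sketched in a few lines: resample the data with replacement, recompute the statistic each time, and read off the middle 95% of those estimates. The exponential data here are synthetic, 5,000 resamples is a conventional but arbitrary choice, and `bootstrap_percentile_ci` is an illustrative name.

```python
import random
import statistics

def bootstrap_percentile_ci(data, stat=statistics.median, confidence=0.95, resamples=5_000, seed=0):
    """Percentile bootstrap: recompute `stat` on resamples drawn with replacement,
    then keep the middle `confidence` share of those estimates."""
    rng = random.Random(seed)
    estimates = sorted(stat(rng.choices(data, k=len(data))) for _ in range(resamples))
    alpha = 1 - confidence
    low_idx = round(alpha / 2 * (resamples - 1))
    high_idx = round((1 - alpha / 2) * (resamples - 1))
    return estimates[low_idx], estimates[high_idx]

# Hypothetical right-skewed data: 200 draws from an exponential distribution.
rng = random.Random(7)
skewed = [rng.expovariate(1 / 300_000) for _ in range(200)]
low, high = bootstrap_percentile_ci(skewed)
print(f"median {statistics.median(skewed):,.0f}; 95% bootstrap CI {low:,.0f} to {high:,.0f}")
```

Because the interval comes from the resampled estimates themselves, it can be asymmetric around the median, following the skew in the data.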

How does sample size influence the width of a confidence interval?

Larger samples reduce standard error, narrowing the interval and improving precision. For instance, doubling the sample size typically shrinks the margin of error by about 30%. Smaller samples produce wider intervals, reflecting greater uncertainty about the population parameter.

What role do confidence intervals play in biomedical research?

Researchers use them to assess treatment effects or risk factors. For example, a study might report a 10% reduction in disease recurrence with a 95% confidence interval of 5%–15%. This range helps policymakers evaluate whether the observed effect is statistically and practically significant.

How do skewed datasets affect confidence interval accuracy?

Skewness can distort traditional interval calculations, especially in small samples. Analysts often use robust methods like percentile bootstrapping or rank-based (nonparametric) intervals to handle the asymmetry. Software such as SPSS or R (for example, R’s boot package) provides procedures for these scenarios while maintaining reliable coverage probabilities.

What best practices ensure valid confidence intervals?

Prioritize random sampling to minimize bias, verify assumptions (e.g., normality, independence), and align confidence levels with study goals: 95% for general use, 99% for high-stakes decisions. Tools like StatCrunch or Excel’s Analysis ToolPak automate calculations while reducing human error.