There are nights when a teacher keeps grading into the early hours, wondering which comments will actually reach a student. That quiet frustration is where this guide starts.

The aim is clear: explain what automated grading can do well today and what still needs a teacher’s judgment. CoGrader already helps over 1,000 schools by importing prompts and submissions from Google Classroom, generating rubric-aligned scores with detailed justifications, and exporting results back after a teacher reviews them.

Educators retain final control: platforms speed routine assessment and return fast feedback on assignments and student work, while teachers keep the final say on grades and comments.

This introduction previews a practical, data-informed view: where technology saves time and where human insight protects trust and learning. Read on to answer the tough questions with confidence—what to automate, what to keep human, and how to measure impact across a class or district.

Key Takeaways

- Automated tools streamline routine grading and produce consistent feedback.

- Teachers remain the final authority on scores and meaningful comments.

- CoGrader connects with Google Classroom and supports major standards.

- Use tech to free time for instruction, conferencing, and deeper learning.

- Pilot with clear rubrics, audit outputs, and track changes in student work.

Understanding AI for Grading in Today’s Classrooms

Large classes expose where automated scoring shines and where it still needs human oversight. In high-enrollment settings, systems handle numeric responses and problem sets with speed and consistent assessment. Instant feedback helps students correct errors quickly and keeps pacing steady across a class.

What these systems can and cannot do right now

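Many tools analyze grammar, sentence structure, and coherence in early drafts of writing. That surface-level feedback frees teachers to target argument, evidence, and higher-order concerns. What these tools cannot yet do reliably is judge originality, purpose, or real-world context, so those calls stay with the teacher.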

Matching capabilities to assignment types

Use automated scoring on structured assignments and initial drafts; reserve teacher judgment for open-ended projects where originality and context matter. Educator-first platforms like CoGrader align to teacher rubrics and produce justification reports teachers review. Student-facing products predict grades and guide revision before submission.

Practical step: pilot features in one unit, observe impact on student work and workflow, then scale. Thoughtful matching of features to tasks preserves trust while boosting efficiency.

When AI Elevates the Grading Process—and When It Falls Short

Quick, consistent scoring tools shine on closed-response tasks while leaving gray areas elsewhere.

Fast, consistent feedback on problem sets and drafts

Strength: Systems return instant feedback on numeric items and basic grammar. That speed helps students iterate and reduces turnaround time for teachers.

Early draft review highlights coherence and surface errors. Use that guidance to focus teacher time on deeper revision and argument strength.

Limits with creativity and real-world context

Weakness: Strategic projects and open-ended writing expose gaps. Systems can miss originality, purpose, and feasibility in applied tasks.

Overreliance risks formulaic comments and missed insights. Teachers must interpret nuance and watch for inequitable results, especially when the datasets behind a tool lack diversity.

Protecting trust and credibility

Best practice: let tools surface patterns in student work while teachers issue final scores and rationale. Clear communication answers student questions and preserves classroom trust.

- Use tools for routine assessment and pattern detection.

- Reserve judgment to evaluate originality and applied thinking.

AI for Grading

When rubrics are encoded precisely, assessment becomes more consistent and transparent.

Core features include mapping rubrics and criteria to each task, producing detailed feedback tied to evidence, and generating justification reports teachers can audit. CoGrader supports teacher-provided rubrics and major state standards (CCSS, TEKS, B.E.S.T., STAAR, Regents, AP/IB).

Rubrics, criteria alignment, and detailed feedback

The strongest platforms reduce ambiguity by aligning each criterion tightly to the assessment item it measures. Detailed feedback cites rubric sections and quotes from student writing, so learners see precise improvement steps.

Personalized feedback vs. formulaic responses

Personalized feedback must reflect a student’s strengths and needs. If comments feel templated, teachers can refine the criteria and add anchor papers to nudge the tool toward specificity.

- Teachers remain final quality control—they adjust grades and comments before export.

- Platforms that score against trusted benchmarks—such as AP essays—build educator confidence.

- The goal: feedback that drives practice, not just faster scoring.

How to Implement AI Grading in Your School Workflow

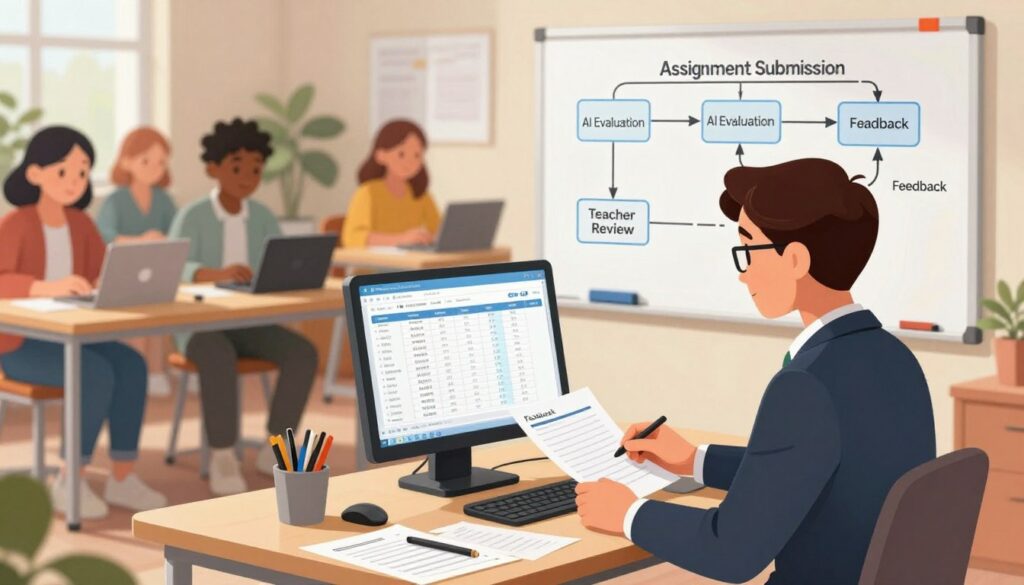

A clear onboarding path makes integration with existing systems practical and fast. Start small, map stages, and agree on decision points so teachers keep control over final scores and comments.

Integrate with your LMS

Connect the platform to Google Classroom first for quick imports and one-click export after teacher review. Districts can add Canvas and Schoology to standardize systems across departments.

Import, review, and export

Establish a process with clear review gates: the system drafts evaluations, teachers verify alignment to the rubric, then results move back to the gradebook.
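One way to picture the review gate is as a small state machine in which export is refused until a teacher has approved the draft. The sketch below is illustrative only: the `Evaluation` class, its field names, and the `Status` values are hypothetical and are not CoGrader's or any LMS's actual API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    DRAFTED = "drafted"    # the system produced a suggested score
    APPROVED = "approved"  # a teacher verified or adjusted it
    EXPORTED = "exported"  # the result was sent back to the gradebook


@dataclass
class Evaluation:
    """Hypothetical record for one student's evaluation."""
    student_id: str
    suggested_score: float
    final_score: Optional[float] = None
    reviewer: Optional[str] = None
    status: Status = Status.DRAFTED

    def approve(self, reviewer: str, final_score: Optional[float] = None) -> None:
        """Teacher accepts the suggestion or overrides it with their own score."""
        if self.status is not Status.DRAFTED:
            raise ValueError("only drafted evaluations can be approved")
        self.final_score = self.suggested_score if final_score is None else final_score
        self.reviewer = reviewer
        self.status = Status.APPROVED

    def export(self) -> dict:
        """Return the gradebook row; blocked until a teacher has approved."""
        if self.status is not Status.APPROVED:
            raise PermissionError("teacher review is required before export")
        self.status = Status.EXPORTED
        return {
            "student_id": self.student_id,
            "score": self.final_score,
            "reviewed_by": self.reviewer,
        }
```

Calling `export()` on a freshly drafted evaluation raises `PermissionError`. After `approve(reviewer="t-42", final_score=88.0)`, it returns the row with the teacher's score rather than the system's suggestion.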

Align rubrics and define roles

Load rubrics tied to CCSS, TEKS, Florida B.E.S.T., STAAR, Regents, and AP/IB to ensure consistent assessment of assignments and writing.

Prioritize time savings on high-volume work—problem sets and early drafts—so educators can focus on conferences and targeted instruction.

- Use features that import prompts and student work cleanly to preserve context.

- Calibrate feedback tone and actionability so students receive clear next steps.

- Train teams on rubrics, reading justifications, and handling edge cases.

Designing Effective Rubrics and Criteria for Reliable Results

Designing rubrics is the practical step that converts standards into day-to-day feedback. Clear descriptors make expectations visible and reduce guesswork when teachers evaluate student work.

Using state standards or custom rubrics for clarity and consistency

Anchor rubrics to recognized standards—CCSS, TEKS, Florida B.E.S.T., Smarter Balanced, STAAR, Regents, AP/IB—or to vetted custom frameworks. That alignment makes assessment decisions defensible and repeatable across sections.

Tip: include exemplars and anchor papers so expectations match practice. CoGrader accepts teacher-uploaded rubrics and produces justification reports that show how descriptors map to a performance level. Teachers should review those reports to confirm evidence and model high-quality feedback.

Calibrating weightings and descriptors to reduce variance

Set weightings to reflect learning priorities: in argumentative writing, thesis and evidence may outweigh mechanics. Use concise descriptors that describe observable actions at each level.
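As a rough illustration of how weightings change a final score, the sketch below converts per-criterion rubric levels into a 0 to 100 score. The criterion names, the weights, and the four-level scale are assumptions chosen for the example, not values taken from any standard or platform.

```python
from typing import Dict


def weighted_score(levels: Dict[str, int],
                   weights: Dict[str, float],
                   max_level: int = 4) -> float:
    """Combine per-criterion rubric levels into a 0-100 score."""
    if set(levels) != set(weights):
        raise ValueError("levels and weights must cover the same criteria")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # Each criterion contributes its weight times the fraction of levels earned.
    earned = sum(weights[c] * levels[c] / max_level for c in levels)
    return round(100 * earned / total_weight, 1)


# Hypothetical argumentative-writing rubric: thesis and evidence outweigh mechanics.
weights = {"thesis": 40, "evidence": 30, "organization": 20, "mechanics": 10}
levels = {"thesis": 3, "evidence": 4, "organization": 3, "mechanics": 2}
score = weighted_score(levels, weights)  # 80.0
```

With these weights, a weak mechanics level costs far fewer points than a weak thesis would, which is the intended effect of weighting toward learning priorities.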

- Iterate language when feedback reveals blind spots; unclear descriptors invite student confusion.

- Analyze patterns by rubric area to target instruction where it moves results most.

- Keep rubrics living documents—refresh them each term to mirror curriculum shifts.

“Small changes in criteria language and weights often yield big gains in consistency.”

Outcome: a transparent process with shared criteria, exemplars, and student-facing explanations builds confidence in fair grading and makes the review process efficient and instructive.

Responsible Use: Human-in-the-Loop, Transparency, and Auditing

Responsible use means keeping teachers at the center of assessment while systems supply evidence and scale.

Keep humans in the loop: let tools inform recommendations; let teachers make final calls. This stance preserves professional standards and protects student trust.

Complement, don’t replace, teacher judgment

Teachers review suggested marks and comments before any final entry into the grading process. This preserves nuance, equity, and classroom context.

Be transparent with students about tool involvement

Communicate what the system did and what the teacher reviewed. Clear notes on feedback scope reduce confusion and support learning.

Regular audits and privacy-protecting practices

Protect privacy by anonymizing submissions and choosing platforms that secure data and log decision trails. Schedule audits that sample work across classes to check accuracy and bias.
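An audit that samples work across classes can be as simple as drawing a few submissions from each class at random. The following is a minimal sketch, assuming each submission is a dictionary with a hypothetical `class_id` key. The per-class count and the fixed seed are arbitrary choices for reproducibility.

```python
import random
from collections import defaultdict
from typing import Dict, List


def sample_for_audit(submissions: List[Dict],
                     per_class: int = 2,
                     seed: int = 0) -> List[Dict]:
    """Pick up to `per_class` submissions from every class for manual review."""
    rng = random.Random(seed)  # a fixed seed makes the audit sample repeatable
    by_class = defaultdict(list)
    for sub in submissions:
        by_class[sub["class_id"]].append(sub)

    sample = []
    for class_id in sorted(by_class):
        group = by_class[class_id]
        # Small classes contribute everything they have.
        sample.extend(rng.sample(group, min(per_class, len(group))))
    return sample
```

Sampling within each class, rather than across the whole pool, keeps small sections from being skipped, which matters when the audit is meant to surface bias.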

Iterate based on outcomes

Analyze outcome data to spot trends and tune rubrics. Share audit findings with faculty and students so practices improve and trust grows. When teachers, students, and telemetry inform change, assessment becomes fairer and more reliable.

Academic Integrity and AI Detection Without Overreach

Detection tools raise questions, not judgments. When artificial intelligence flags unusual patterns in text, schools should treat that signal as the start of a conversation.

Flagging potential AI-generated content for follow-up conversations

Use flags as triage. CoGrader’s detection feature and student-facing detectors like Grammarly help teachers spot possible concerns. These tools prompt a review and a discussion with the student.

Why detection isn’t definitive—and how to apply policy fairly

Detection scores are probabilistic. They can miss context and produce false positives.

Combine a flag with other indicators: abrupt style shifts, missing drafts, or absent process artifacts. Then gather evidence and document the review.

- Ask open questions and request drafts or notes.

- Offer restorative responses such as coaching or resubmission.

- Use platform logs and version history to corroborate authorship.

| Flag | Next Step | Evidence to Collect |
|---|---|---|
| High detection score | Private teacher-student review | Drafts, outlines, timestamped versions |
| Style shift | Request process artifacts | Previous work, notes, class participation |
| No drafts submitted | Ask for rewrite with sources | Annotated bibliography, research notes |
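The triage table can be expressed as a small lookup that returns follow-up steps and never a penalty. This is a sketch under stated assumptions: the `IntegritySignals` fields and the 0.8 cutoff for a "high" detection score are hypothetical, and a real threshold would need to be set by department policy.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class IntegritySignals:
    """Hypothetical signals gathered for one submission."""
    detection_score: float  # probabilistic, 0.0 to 1.0; not proof of anything
    style_shift: bool       # abrupt change from the student's earlier work
    drafts_submitted: bool  # whether process drafts exist


def next_steps(signals: IntegritySignals, high_score: float = 0.8) -> List[str]:
    """Map signals to follow-up conversations, mirroring the table rows."""
    steps = []
    if signals.detection_score >= high_score:
        steps.append("Private teacher-student review")
    if signals.style_shift:
        steps.append("Request process artifacts")
    if not signals.drafts_submitted:
        steps.append("Ask for rewrite with sources")
    return steps
```

An empty list means no follow-up is suggested. A non-empty list is a prompt for a documented conversation, consistent with treating flags as triage rather than judgment.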

Policy guidance: avoid automatic penalties. Align department practices, document decisions, and keep the focus on learning through clear feedback.

Privacy, Security, and Data Governance Schools Can Trust

Schools must treat privacy and data governance as non-negotiable elements of any assessment platform. Clear controls reduce risk and keep teachers focused on instruction. Vendors should prove their claims with documentation and audits.

Certifications and controls matter. Choose platforms that hold a SOC 2 Type 1 report, meet FERPA obligations, and map their controls to the NIST Cybersecurity Framework (CSF) 1.1. These attestations give schools documented evidence of governance and reduce vendor risk.

- Authenticate safely: use OAuth 2.0 to unify access and cut credential exposure.

- Minimize exposure: collect only essential data and store anonymized identifiers.

- Protect content: encrypt text, feedback, and student work at rest and in transit.
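For the "anonymized identifiers" point, one common approach is a keyed hash that replaces the real student ID with a stable pseudonym. The sketch below uses Python's standard `hmac` and `hashlib` modules. The function name, the 16-character truncation, and the key handling are assumptions for illustration, and keyed hashing is pseudonymization rather than full anonymization.

```python
import hashlib
import hmac


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Return a stable pseudonym for a student ID using HMAC-SHA256.

    The same ID and key always give the same pseudonym, so records can be
    joined for analysis without exposing the original identifier.
    """
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # shortened for readability (assumption)


# The key below is a placeholder; a real key would live in a secrets manager,
# never alongside the exported data.
key = b"replace-with-a-secret-key"
alias = pseudonymize("student-12345", key)
```

Whoever holds the key can recompute a pseudonym from a known ID, so access to the key should be limited to the staff who are authorized to re-identify records.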

Confirm that third-party contracts prohibit training artificial intelligence models on student PII. Require a privacy policy, terms of use, an AI transparency note, and an independent cybersecurity assessment.

Set retention limits that match instructional needs. Train teachers and admins on data handling. Finally, verify language capabilities if multilingual support is needed—without expanding data sharing.

Choosing the Right Tools for Your Needs: Educators vs. Students

Not every tool suits every classroom; choices should map to teacher workflows and student goals.

Educator-first platforms

CoGrader focuses on rubric-based grading, progress tracking, multilingual assessment, LMS integration, and district dashboards. These features support consistent scoring and administrative oversight.

Teachers get clear evidence trails and exportable justifications that help audit decisions and save time.

Student-first tools

Student-facing options emphasize predicted grades, revision guidance, citation help, and expert-style feedback. These tools help students improve writing before submission and build revision habits.

What’s included—and what isn’t

Note: plagiarism checks may be separate from AI-detection tools. AI detection is not the same as plagiarism detection; treat each as a distinct signal during review.

Match features to instructional impact: consider language needs, volume of work, and teacher support. Pilot educator and student tools in parallel and refine adoption based on outcomes and trust.

Scaling Across Classes and Districts

District leaders need clear visibility to compare performance across classes and schools at scale. CoGrader’s district dashboards consolidate data so administrators see trends by school and grade quickly.

Dashboards, analytics, and trend identification

Use analytics to track writing performance and grading consistency over time. Dashboards highlight rubric categories where student work needs support.

Actionable insight: flag areas with persistent low scores and target coaching or curriculum changes. Consolidated reporting shortens review time and drives clearer results.
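To make "persistent low scores" concrete, the sketch below flags rubric categories whose average level falls under a cutoff in at least two terms. The record layout `(term, category, level)`, the 2.5 cutoff, and the two-term rule are all assumptions for illustration and do not describe any dashboard's real logic.

```python
from collections import defaultdict
from statistics import mean
from typing import Iterable, List, Tuple


def low_scoring_categories(records: Iterable[Tuple[str, str, float]],
                           threshold: float = 2.5,
                           min_terms: int = 2) -> List[str]:
    """Return rubric categories whose mean level is below `threshold`
    in at least `min_terms` distinct terms."""
    by_category = defaultdict(lambda: defaultdict(list))
    for term, category, level in records:
        by_category[category][term].append(level)

    flagged = []
    for category, terms in by_category.items():
        low_terms = [t for t, levels in terms.items() if mean(levels) < threshold]
        if len(low_terms) >= min_terms:
            flagged.append(category)
    return sorted(flagged)
```

Requiring more than one low term filters out a single rough unit, so coaching and curriculum changes target patterns rather than one-off dips.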

Policy alignment, PD/training, and role-based control

Align policies so teachers across the district apply consistent practices. Build PD that centers on rubric design, tool configuration, and feedback strategies.

- Implement role-based controls so administrators oversee adoption while teachers keep day-to-day autonomy.

- Standardize platforms and tools to reduce friction and speed support.

- Plan procurement early: confirm the vendor accepts purchase orders (POs), run privacy reviews, and schedule onboarding before full scale-up.

“Start with a pilot, use analytics to guide each expansion phase, and monitor learning outcomes—not just usage.”

Conclusion

Real progress depends on pairing fast scoring with clear teacher review and coaching. Tools accelerate feedback and consistency, but teachers keep final control of the grading process and the classroom judgment that matters most.

Use saved time to run targeted mini-lessons, conferences, and interventions in areas where students need help. Personalized, detailed feedback strengthens both writing and revision, so student work improves faster.

Districts should align policy, protect privacy, and scale tools that show measurable gains in student learning. Keep asking the right questions about what to automate and what to leave human.

FAQ

Can AI grade better than teachers? What are the pros and cons for schools?

Automated grading tools excel at speed and consistency for objective tasks such as multiple-choice, math problems, and basic rubric-aligned drafts. They save teachers time, provide rapid feedback to students, and surface trends across classes. However, they struggle with nuance: evaluating creativity, real-world reasoning, and complex argumentation still requires a teacher’s judgment. The best approach pairs automated scoring with teacher review — technology to scale routine work, educators to finalize grades and mentor students.

What can current automated grading systems do well, and what can’t they do?

These systems reliably score structured responses, check alignment to rubrics, and generate consistent feedback on mechanics and organization. They cannot fully judge originality, deep conceptual insight, or cultural context; nor can they replace professional judgment when a student’s intent or process matters. Expect accurate scoring for objective items and draft-level guidance for writing, but reserve final evaluation for teachers.

How should schools match grading tools to assignment types?

Use automated tools for objective assessments, iterative drafts, and criterion-referenced rubrics. Reserve subjective, open-ended, or performance tasks — like project-based work, creative writing, or oral presentations — for teacher assessment or a hybrid workflow. Align selection of tools to the task’s learning targets and the level of human interpretation required.

When does technology truly elevate the grading process?

Technology adds value when it speeds turnaround, enforces rubric consistency, and delivers formative feedback students can act on. It’s especially effective for problem sets, grammar and structure checks in drafts, and spotting class-wide misconceptions. These gains free teachers to focus on coaching, differentiation, and higher-order feedback.

Where do these systems fall short in assessing learning?

The main limits are assessing originality, nuanced reasoning, and applied, contextual work. Automated feedback can feel formulaic and may miss subtle student thinking or alternative valid approaches. Relying solely on machine output risks undermining trust and neglecting the teacher-student relationship that supports growth.

How should schools protect teacher-student trust and credibility when using these tools?

Make teacher review a required step for final grades, be transparent with students about tool use, and explain what feedback is automated versus human. Use systems as a support — not a replacement — and ensure teachers maintain authority over judgments, comments, and learning conversations.

What core features should educators expect from grading platforms?

Look for rubric-building tools, criteria alignment, inline comments, batch grading, and exportable reports. High-value features include calibrated scoring, revision-tracking, multilingual support, and dashboards that surface trends and gaps at student and class levels.

Can automated systems provide personalized feedback without sounding formulaic?

They can offer tailored comments based on rubric criteria, error patterns, and student history, but personalization improves when teachers edit or augment machine-generated feedback. Hybrid workflows that let educators customize suggestions yield the most meaningful student guidance.

How do schools integrate grading tools with existing LMS platforms?

Choose tools with native integrations for Google Classroom, Canvas, Schoology, or similar systems. Ensure smooth import of assignments and student submissions, and confirm export options for scores and comments. Robust integrations reduce manual work and preserve gradebook integrity.

What workflow controls should schools require when importing and exporting student work?

Require review gates so teachers can inspect and approve automated scores before they sync to gradebooks. Enable audit logs, anonymized exports for analysis, and configurable permissions so only authorized staff can finalize grades or alter comments.

How should rubrics be set up to align with state standards and assessments?

Map rubric descriptors to specific standards such as CCSS, TEKS, Regents, AP, or IB objectives. Use clear performance levels, sample evidence, and weighted criteria. This clarity helps tools produce consistent scores and gives students transparent targets for improvement.

How can schools calibrate weightings and descriptors to reduce variance?

Run blind scoring exercises, compare teacher scores to system outputs, and adjust descriptors for clarity. Use anchor samples and inter-rater calibration sessions to align human scoring with automated criteria and minimize discrepancy.
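Comparing teacher scores to system outputs can start with three simple numbers: how often the two match exactly, how often they land within one rubric level, and the average gap. The sketch below assumes both lists hold rubric levels for the same submissions in the same order. The function name and the one-level "adjacent" tolerance are illustrative choices.

```python
from typing import Dict, Sequence


def agreement(teacher: Sequence[int], system: Sequence[int]) -> Dict[str, float]:
    """Summarize agreement between paired teacher and system rubric levels."""
    if len(teacher) != len(system) or not teacher:
        raise ValueError("score lists must be non-empty and the same length")
    diffs = [abs(t - s) for t, s in zip(teacher, system)]
    n = len(diffs)
    return {
        "exact": sum(d == 0 for d in diffs) / n,     # identical levels
        "adjacent": sum(d <= 1 for d in diffs) / n,  # within one level
        "mean_abs_diff": sum(diffs) / n,             # average gap in levels
    }


# Hypothetical calibration set of four essays scored on a 1-4 scale.
stats = agreement(teacher=[4, 3, 2, 4], system=[4, 2, 2, 1])
# stats == {"exact": 0.5, "adjacent": 0.75, "mean_abs_diff": 1.0}
```

A low exact rate with a high adjacent rate usually points to descriptor wording at level boundaries, while large gaps on individual papers are worth reading as anchor-sample candidates.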

What does responsible use look like — human oversight, transparency, and auditing?

Responsible use mandates human-in-the-loop processes, clear communication to students about tool scope, and regular audits for accuracy and fairness. Schools should monitor outcomes, document changes, and iterate on settings based on evidence and stakeholder feedback.

How should schools handle detection of potentially generated work without overreaching?

Use detection flags as conversation starters, not definitive proof. Follow up with targeted interviews, process-based evidence requests, or revisions. Apply policy consistently and focus on learning implications rather than punitive measures by default.

Which privacy and security controls should schools demand from vendors?

Require SOC 2 alignment, FERPA compliance, and NIST-informed controls. Insist on OAuth 2.0 or equivalent for authentication, minimal data retention, anonymized identifiers for analysis, and contractual assurances that student data won’t be used to train external models.

How can schools ensure third-party providers do not train models on student PII?

Include explicit contractual clauses prohibiting model training on student data, require vendor transparency about training data sources, and seek technical attestations or independent audits. Prefer vendors that document data handling and provide options for on-prem or private-cloud deployments.

How do educator-first and student-first tools differ?

Educator-first platforms prioritize rubric-based grading, progress tracking, and role-based controls to support instruction and reporting. Student-first tools emphasize predicted grades, revision suggestions, citation help, and learner-facing explanations. Choose products that match your primary user needs and workflow.

What features might be excluded from a grading platform and require separate tools?

Some platforms omit robust plagiarism suites, full AI-detection, or advanced analytics; these may require dedicated vendors. Verify feature lists up front—especially for plagiarism detection, identity verification, and deep psychometric analysis.

How can districts scale grading solutions across schools?

Scale through centralized dashboards, shared rubric libraries, and role-based access. Invest in professional development, policy alignment, and designated admins to manage settings. Use analytics to monitor equity and impact as deployment expands.

What role do dashboards and analytics play in district-wide adoption?

Dashboards reveal trends, flag learning gaps, and support data-driven decisions for curriculum and intervention. Aggregated analytics help district leaders measure equity, usage, and outcomes across classrooms and schools.

Why is professional development important when adopting automated grading tools?

Training ensures teachers know how to calibrate rubrics, interpret system outputs, and maintain assessment integrity. Ongoing PD fosters trust, improves consistency, and helps staff use technology to amplify instructional impact rather than replace professional expertise.