There are moments when a tax notice feels less like paperwork and more like a judgment on a life built by hard work. That unease drives this exploration: how modern systems can protect honest taxpayers and strengthen trust in public finance.

Tax agencies worldwide are testing artificial intelligence to spot fraud, smooth taxpayer experiences, and boost operational efficiency. Early pilots include predictive models that flag odd seasonal payments and gradient-boosting models for GST fraud in Australia.

The narrative balances promise and accountability: scalable models and algorithms can surface insights from vast data, while governance prevents harm. We draw on international benchmarks, including examples highlighted in an international review, and on practical benefits described in Miloriano's reporting on modern tax preparation.

Key Takeaways

- Well-designed systems help agencies detect tax fraud with higher accuracy and fairness.

- End-to-end processes — from intake to decisioning — turn raw data into actionable outcomes.

- Models and tools scale reviews, but governance and explainability are essential to protect taxpayers.

- Real-world pilots show measurable revenue gains and fewer false positives when done responsibly.

- Leaders should build secure data foundations and audit-ready processes before broad deployment.

Context: Why Tax Agencies Need AI-Driven Pattern Recognition Now

Modern tax authorities face a turning point: better data and faster systems can reshape enforcement and service.

Agencies must build secure foundations that store taxpayer information in governed, auditable formats, covering every stage from data architecture through decisioning. Trusted data lets authorities act quickly and defend outcomes.

From data architecture to decisioning: building a secure foundation for taxpayer information

Effective pattern work begins with a governed, integrated system that standardizes returns, payments, registries, and third-party inputs.

Real-time access to clean data gives analysts and models consistent inputs and reduces manual errors.

Three core objectives for authorities

- Fraud detection: algorithms and models flag irregular filings and help identify potential tax gaps early.

- Improved taxpayer experience: streamlined processes shorten cycle times and deliver clearer outcomes.

- Operational efficiency: predictive insights let agencies allocate resources where they matter most.

U.S. focus: IRS modernization and targeted tools

The U.S. revenue service is modernizing e-file and piloting automated tools to triage returns, surface anomalies, and concentrate reviews on high-risk taxpayers.

Policy and privacy must guide technology choices; systems should be auditable end to end to maintain public trust.

AI Use Case – Tax-Evasion Pattern Recognition

Agencies now blend ledger records, registries, and transaction feeds to build a single picture of taxpayer activity. This fused data fabric—tax returns, bank and card payments, public registries, and e-invoice/VAT streams such as Greece’s MyDATA—creates reliable signals for risk scoring.
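One simple risk signal that such fused data makes possible is the gap between what a taxpayer declares and what third parties report about them. The sketch below is illustrative only: the `TaxpayerRecord` fields, the `mismatch_ratio` function, and the 0.25 threshold are assumptions made for this example, not any agency's actual scoring rule.

```python
from dataclasses import dataclass


@dataclass
class TaxpayerRecord:
    taxpayer_id: str
    declared_income: float     # taken from the filed return
    third_party_income: float  # e.g. summed bank, card, or e-invoice feeds


def mismatch_ratio(rec: TaxpayerRecord) -> float:
    """Share of third-party-reported income that is missing from the return."""
    if rec.third_party_income <= 0:
        return 0.0
    gap = rec.third_party_income - rec.declared_income
    return max(gap, 0.0) / rec.third_party_income


def flag_for_review(records, threshold=0.25):
    """Return IDs whose unexplained gap exceeds the threshold, largest gap first."""
    scored = [(mismatch_ratio(r), r.taxpayer_id) for r in records]
    return [tid for score, tid in sorted(scored, reverse=True) if score > threshold]


records = [
    TaxpayerRecord("A", declared_income=100.0, third_party_income=100.0),
    TaxpayerRecord("B", declared_income=50.0, third_party_income=100.0),
    TaxpayerRecord("C", declared_income=70.0, third_party_income=100.0),
]
print(flag_for_review(records))
```

In practice a ratio like this would be one feature among many feeding a risk model, and a flag would route the case to a human reviewer rather than trigger an automatic decision.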

Models and signals in practice

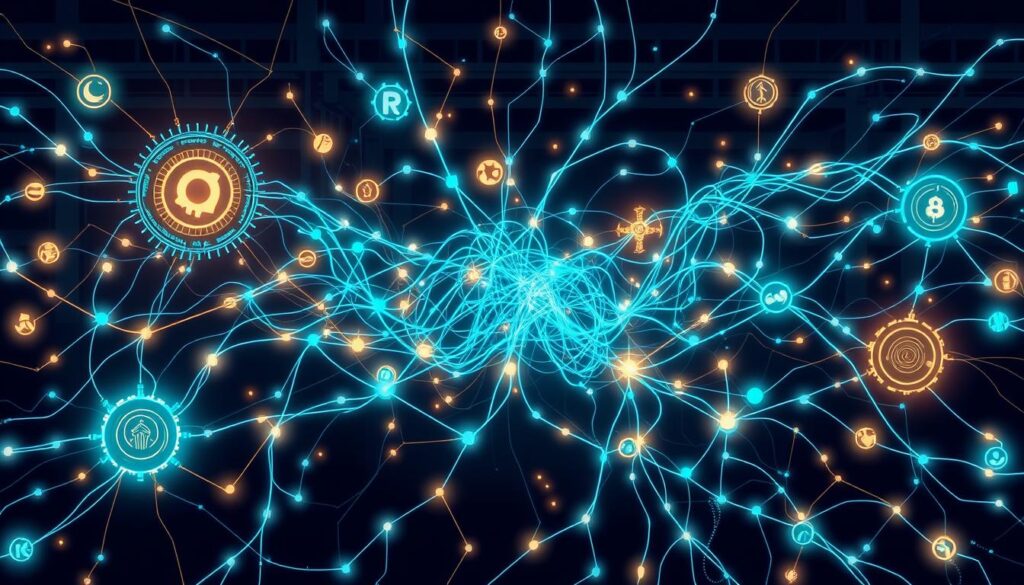

Predictive analytics pairs gradient boosting and neural networks with graph algorithms to link activity across systems. Australia’s ATO and Italy’s VeRa show how these models surface discrepancies across filings, earnings, property, and accounts.

Natural language and entity analysis

Natural language methods mine annual reports for cues of avoidance—terms like “manufacturing” and “importing” can flag hidden operations, a finding supported by Lillian Mills’ research. Entity resolution then stitches names and companies into network graphs to reveal multi-layered relationships.
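As a rough illustration of the text-mining idea, the sketch below counts cue terms in a report and normalizes by length. It is a deliberately naive keyword count, not the method used in the cited research; the `CUE_TERMS` set (seeded with the two terms mentioned above) and both function names are assumptions for this example.

```python
import re
from collections import Counter

# Hypothetical cue list; the two terms are the ones named in the text above.
CUE_TERMS = {"manufacturing", "importing"}


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def cue_counts(report_text: str, cues=CUE_TERMS) -> Counter:
    """Count whole-word, case-insensitive occurrences of each cue term."""
    return Counter(w for w in tokenize(report_text) if w in cues)


def cue_density(report_text: str, cues=CUE_TERMS) -> float:
    """Cue mentions per 1,000 words, so long and short reports are comparable."""
    words = tokenize(report_text)
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in cues)
    return 1000.0 * hits / len(words)


sample = "Manufacturing moved abroad; importing and manufacturing costs rose."
print(cue_counts(sample), cue_density(sample))
```

A production system would more likely use trained language models and compare each report against sector peers, since a term that is ordinary in one industry may be unusual in another.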

Real-time detection and governance

Real-time anomaly detection watches amounts, timing, and seasonal spikes to catch irregular flows or fabricated claims. Graph scoring points auditors to central taxpayers in suspicious clusters.

- Start with comprehensive intake: returns, transaction data, registries, and VAT streams.

- Combine models: gradient boosting, neural networks, and graph analysis for robust signals.

- Maintain oversight: privacy-by-design, bias testing, and human-in-the-loop review keep outcomes fair.
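Graph scoring, mentioned above as a way to point auditors to central taxpayers, can be sketched with plain degree centrality: the share of other nodes each node is directly linked to. This is a minimal stand-in under the assumption that links (shared accounts, transactions, ownership) have already been extracted as pairs; real systems may well use richer measures, and the function names here are hypothetical.

```python
from collections import defaultdict


def degree_centrality(edges):
    """Map each node to its count of distinct neighbours, divided by (n - 1)."""
    neighbours = defaultdict(set)
    for a, b in edges:
        if a != b:  # ignore self-links
            neighbours[a].add(b)
            neighbours[b].add(a)
    n = len(neighbours)
    if n < 2:
        return {node: 0.0 for node in neighbours}
    return {node: len(adj) / (n - 1) for node, adj in neighbours.items()}


def most_central(edges, top_k=3):
    """Return the top_k nodes by centrality, breaking ties alphabetically."""
    scores = degree_centrality(edges)
    return sorted(scores, key=lambda node: (-scores[node], node))[:top_k]


# Hypothetical links between entities in one suspicious cluster.
links = [("hub", "a"), ("hub", "b"), ("hub", "c"), ("a", "b")]
print(most_central(links, top_k=2))
```

A high score only says that an entity is well connected inside the cluster; whether that connection is suspicious remains a question for human review.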

For practical guidance on responsible deployment and emerging methods in language analysis, see research on natural language processing and reporting on how authorities adopt advanced techniques for tax fraud detection and efficiency.

Results, Benchmarks, and Global Signals of Effectiveness

Real-world pilots have generated concrete revenue gains while improving audit precision. Documented outcomes span recovered unpaid amounts, prevented fraudulent claims, and a lift in VAT receipts.

Documented outcomes and operational wins

Australia identified over $530 million in unpaid taxes and prevented $2.5 billion in fraudulent claims using deep learning and gradient-boosting approaches. Italy’s VeRa flagged more than one million high-risk cases by cross-referencing filings, property, bank accounts, and e-payments.

Poland’s STIR ingests daily bank and clearinghouse feeds to catch carousel fraud in days, not months. The IRS and HMRC are expanding data-driven tools to target high-income non-compliance and complex VAT avoidance.

Risk management and governance

Operational gains matter most when paired with oversight. The Dutch childcare benefits scandal and recent privacy actions remind agencies that explainability, bias checks, and audit trails protect taxpayers and sustain trust.

- Key outcomes: stronger revenue recovery, fewer manual errors, and faster, targeted audits.

- Best practice: combine robust data, transparent models, and human review to keep systems fair and effective.

Conclusion

Strong data foundations let agencies turn signals into swift, defensible action. Clean, governed data and transparent models support real-time decisions that improve compliance and revenue collection.

NLP research and graph analysis help surface hidden activity in public filings; combined with anomaly detection, these tools let auditors focus on high-value investigations while protecting taxpayer rights.

Leaders should institutionalize governance—model documentation, bias testing, and audit trails—and train analysts to interpret outputs and refine processes over time.

For architects and practitioners seeking practical guidance on modeling and deployment, see this architect’s guide to modeling detection. A balanced approach—intelligence plus accountability—keeps revenue strong and public trust intact.

FAQ

What is the objective of the AI use case described in "AI Use Case – Tax-Evasion Pattern Recognition"?

The objective is to help tax authorities detect noncompliance and fraud by analyzing tax returns, payments, and public registries with advanced analytics. It focuses on finding anomalous behavior, uncovering hidden relationships among taxpayers and entities, and prioritizing cases for investigation to boost recovery and compliance.

Which data sources are most valuable for building these detection systems?

High-value sources include filed tax returns, bank and payments records, VAT transaction streams (MyDATA-style feeds), corporate filings, and public registries. Combining those with third-party commercial data and behavioral signals strengthens detection and reduces false positives.

What kinds of models and techniques are used in practice?

Common approaches include predictive analytics, gradient boosting, neural networks, graph analysis for network effects, and natural language processing on public filings. Ensemble methods and explainable models help balance accuracy with auditability and legal defensibility.

How does entity resolution and graph analysis improve investigations?

Entity resolution links records that refer to the same person or organization across datasets. Graph analysis then maps relationships—ownership, transactions, shared addresses—revealing layered networks that indicate coordinated avoidance or fraud schemes.
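A minimal sketch of the linking step, assuming records match when they share a normalized name or a tax ID, and using a union-find structure to merge them into clusters. The record fields, the `SUFFIXES` list, and the matching rule are assumptions for illustration; production entity resolution typically adds fuzzy matching and confidence scores.

```python
import re

# Hypothetical legal-form suffixes to ignore when comparing names.
SUFFIXES = {"ltd", "limited", "inc", "llc", "gmbh", "srl"}


def normalise_name(name: str) -> str:
    """Lowercase, strip punctuation, and drop legal-form suffixes."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    return " ".join(t for t in tokens if t not in SUFFIXES)


def resolve_entities(records):
    """Cluster record IDs that share a normalised name or a tax ID."""
    parent = {r["id"]: r["id"] for r in records}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path compression
            x = parent[x]
        return x

    first_seen = {}  # matching key -> first record ID that carried it
    for r in records:
        keys = []
        name = normalise_name(r["name"])
        if name:
            keys.append(("name", name))
        if r.get("tax_id"):
            keys.append(("tax_id", r["tax_id"]))
        for key in keys:
            if key in first_seen:
                parent[find(r["id"])] = find(first_seen[key])
            else:
                first_seen[key] = r["id"]

    clusters = {}
    for r in records:
        clusters.setdefault(find(r["id"]), []).append(r["id"])
    return sorted(sorted(c) for c in clusters.values())


filings = [
    {"id": 1, "name": "Acme Ltd.", "tax_id": "T1"},
    {"id": 2, "name": "ACME Limited", "tax_id": None},
    {"id": 3, "name": "Borealis GmbH", "tax_id": "T1"},
    {"id": 4, "name": "Cedar Inc", "tax_id": "T9"},
]
print(resolve_entities(filings))
```

Here records 1 and 2 merge on the name, and record 3 joins them through the shared tax ID, which is the kind of indirect link that graph analysis then builds on.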

Can these systems detect fraud in real time?

Yes—real-time anomaly detection flags irregular fund flows, mismatches in declared amounts, and atypical seasonal patterns. Streaming architectures and thresholding allow near-instant alerts while batching supports deeper offline analysis.
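The thresholding idea can be sketched as a rolling z-score over a taxpayer's recent payments: each new amount is compared with the mean and spread of a sliding window. The class name, window size, and threshold of 3.0 are assumptions chosen for the example, not values reported by any agency.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag amounts that deviate strongly from a sliding window of recent values."""

    def __init__(self, window=12, z_threshold=3.0):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold

    def observe(self, amount: float) -> bool:
        """Return True if `amount` looks anomalous, then add it to the window."""
        is_anomaly = False
        if len(self.history) >= 3:  # need a few points before judging
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0:
                is_anomaly = abs(amount - mu) / sigma > self.z_threshold
            else:
                is_anomaly = amount != mu
        self.history.append(amount)
        return is_anomaly


detector = RollingAnomalyDetector(window=5, z_threshold=3.0)
payments = [100, 102, 98, 101, 99, 500]
flags = [detector.observe(p) for p in payments]
print(flags)
```

Note that this sketch adds flagged values to the window as well, so a run of extreme payments would gradually look normal; a real deployment would need to decide how to handle that, and would account for genuine seasonality before alerting.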

What governance and privacy safeguards are recommended?

Implement privacy-by-design, rigorous access controls, audit logs, model explainability, and bias monitoring. Legal reviews and impact assessments should guide data retention, purpose limitation, and transparency toward taxpayers.

How do authorities measure effectiveness and return on investment?

Key metrics include unpaid tax identified, fraudulent claims prevented, VAT recovery, audit hit rates, processing time reduction, and lowered manual review overhead. Benchmarks from agencies such as the IRS, HMRC, and national programs provide comparative signals.
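Some of these metrics reduce to simple ratios over flagged and confirmed cases. The sketch below assumes that confirmed non-compliance is known for the whole population, which in practice is only true for audited cases; the function name and inputs are hypothetical.

```python
def audit_metrics(flagged, confirmed, population_size):
    """Compute audit hit rate, recall, and false-positive rate from case IDs."""
    flagged, confirmed = set(flagged), set(confirmed)
    true_pos = len(flagged & confirmed)   # flagged and actually non-compliant
    false_pos = len(flagged - confirmed)  # flagged but compliant
    negatives = population_size - len(confirmed)
    return {
        "hit_rate": true_pos / len(flagged) if flagged else 0.0,
        "recall": true_pos / len(confirmed) if confirmed else 0.0,
        "false_positive_rate": false_pos / negatives if negatives > 0 else 0.0,
    }


metrics = audit_metrics(flagged={1, 2, 3, 4}, confirmed={2, 3, 5}, population_size=13)
print(metrics)
```

Tracking the false-positive rate alongside the hit rate matters because every false positive is an honest taxpayer drawn into a review.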

Are there examples of successful deployments globally?

Several agencies have reported results: the IRS has modernized enforcement with data-driven tools; HM Revenue & Customs is piloting large language models for case work; Italy, Australia, and Poland have publicized analytic programs that improved detection and collections.

What risks should program leaders watch for?

Risks include model bias, privacy breaches, overreliance on automated decisions, and reputational harm from false positives. Lessons from high-profile incidents, such as the Dutch childcare benefits scandal, underscore the need for strong oversight and corrective processes.

How can organizations start a secure, effective program?

Start with a clear problem statement and pilot on high-quality, well-governed data. Define success metrics, deploy interpretable models, build cross-functional teams (data science, legal, audit), and iterate with continuous monitoring and stakeholder feedback.