Behind every major business pivot and scientific breakthrough lies a powerful truth-finding tool: statistical analysis. Like digital detectives, analysts use structured methods to separate coincidence from meaningful patterns – a process that determines everything from drug approval to stock market predictions.

Modern professionals use Python’s libraries to implement these analytical techniques efficiently. The language’s scipy.stats and pandas packages turn complex calculations into manageable code blocks that can be applied step by step to real-world scenarios.

This approach traces its roots to 18th-century probability theory but gained prominence through 20th-century quality control innovations. Today, it forms the backbone of machine learning validation and data science workflows, helping teams minimize guesswork in high-stakes environments.

Key Takeaways

- Core statistical method for transforming raw data into reliable conclusions

- Python’s simplicity and powerful libraries streamline implementation

- Historical foundation dating back to early scientific methodology

- Systematic evidence evaluation process resembling investigative work

- Critical skill for tech professionals and business strategists alike

Introduction to Hypothesis Testing with Python

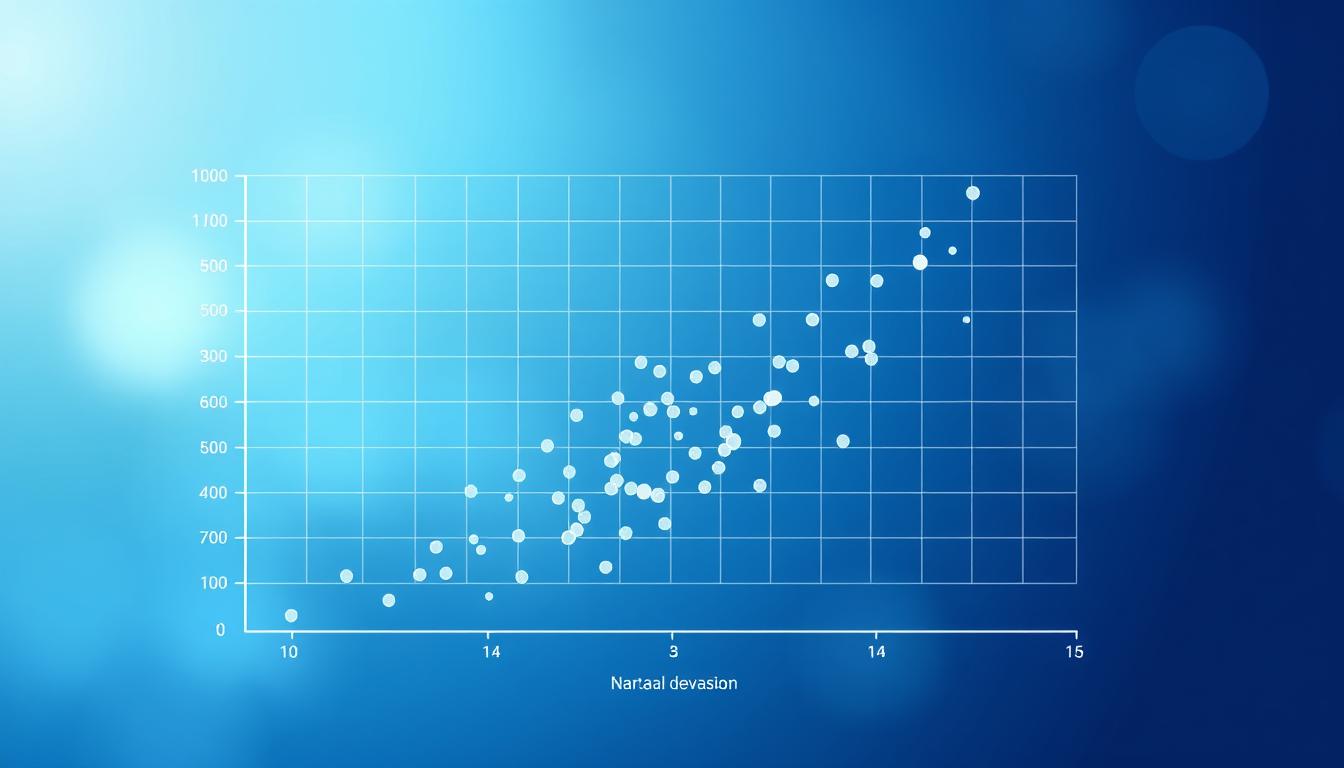

In uncertain environments where numbers speak louder than opinions, analysts wield statistical methods like precision tools. These techniques separate meaningful patterns from random noise—transforming ambiguous data into clear action plans.

The Science of Certainty

At its core, this method evaluates claims through systematic evidence review. Analysts compare sample observations against baseline assumptions—called null hypotheses—to determine whether results show real trends or chance occurrences. Financial institutions use this approach daily to validate market predictions, while healthcare researchers apply it to drug efficacy studies.

Code-Powered Insights

Python’s ecosystem simplifies complex calculations through libraries like SciPy and NumPy. A few lines of code can now calculate p-values that once required manual computation. This accessibility allows marketers to test campaign variations and engineers to verify product improvements with equal precision.

Modern teams leverage these tools to:

- Convert raw metrics into boardroom-ready conclusions

- Validate machine learning model performance

- Optimize operational processes using empirical evidence

By mastering these techniques, professionals across industries reduce reliance on intuition—making choices backed by mathematical rigor rather than guesswork.

Understanding Statistical Hypotheses

Statistical analysis thrives on structured inquiry—researchers frame competing claims to separate fact from chance. These opposing statements form the foundation of decision-making across industries, from clinical trials to market research.

Null vs Alternative Hypotheses

The null hypothesis (H₀) represents the existing assumption, like “Our new marketing campaign doesn’t increase sales.” Its counterpart, the alternative hypothesis (H₁), states the anticipated change: “The campaign increases sales.” The two claims must be mutually exclusive and together cover every possibility, so that rejecting one points unambiguously to the other.

Mathematically, H₀ always includes equality (=, ≤, ≥), while H₁ uses strict inequalities (>, <, ≠). Stating the claim numerically, as in “> 10% improvement”, creates a framework for precise evaluation.

Types of Errors: Type I and Type II

Two risks haunt every analysis. Type I errors occur when rejecting a true H₀—like falsely claiming a useless fertilizer improves crop yields. This “false alarm” wastes resources on nonexistent effects. Type II errors involve missing real patterns, such as overlooking a genuine sales boost from a redesigned website.

Consider these impacts:

- Medical research prioritizes minimizing Type I errors to avoid unsafe drug approvals

- Tech startups focus on reducing Type II errors to catch growth opportunities

Balancing these risks requires understanding each mistake’s consequences. A 5% significance level caps the Type I error rate at 5%, while larger sample sizes raise statistical power and so reduce Type II errors.
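The trade-off can be made concrete with a small simulation. The sketch below is illustrative only: the effect size (0.5 standard deviations), the sample sizes, and the number of simulated experiments are assumptions chosen for the demo, not values from any real study.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)  # fixed seed so the sketch is repeatable
ALPHA = 0.05
N_TRIALS = 2000  # number of simulated experiments (assumed for the demo)

def rejection_rate(true_shift, n):
    """Fraction of simulated two-sample t-tests that reject H0 at ALPHA."""
    control = rng.normal(0.0, 1.0, size=(N_TRIALS, n))
    treated = rng.normal(true_shift, 1.0, size=(N_TRIALS, n))
    # One t-test per row: each row is an independent simulated experiment
    p_values = stats.ttest_ind(control, treated, axis=1).pvalue
    return float(np.mean(p_values <= ALPHA))

# H0 is true (no shift): every rejection is a Type I error
type_i_rate = rejection_rate(true_shift=0.0, n=30)

# H0 is false (shift of 0.5 SD): the rejection rate is the power,
# and 1 - power is the Type II error rate
power_small = rejection_rate(true_shift=0.5, n=20)
power_large = rejection_rate(true_shift=0.5, n=100)

print(f"Type I error rate (n=30):  {type_i_rate:.3f}")
print(f"Power with n=20 per group:  {power_small:.3f}")
print(f"Power with n=100 per group: {power_large:.3f}")
```

The Type I rate should land near α regardless of sample size, while the power rises as the groups grow, which is the pattern the paragraph above describes.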

Key Concepts in Hypothesis Testing

In the realm of data-driven decisions, two statistical gatekeepers determine truth from coincidence. These tools help analysts quantify uncertainty and make choices backed by measurable evidence rather than intuition.

Significance Level and P-value

The significance level (α) acts as a risk management tool. Set before analysis, it defines the maximum probability of falsely rejecting a true null hypothesis. Most industries use α = 0.05, accepting a 5% chance of false alarms. Pharmaceutical studies often tighten this to α = 0.01 when lives are at stake.

P-values measure the strength of evidence against the null hypothesis. A result of p = 0.03 means that, if no real difference existed, an effect at least as extreme as the one observed would appear about 3% of the time. It is not the probability that the null hypothesis is true. Lower values signal stronger evidence against H₀.

| Factor | Significance Level (α) | P-value |
|---|---|---|
| Purpose | Predefined risk threshold | Observed evidence strength |
| Typical Values | 0.05, 0.01 | Any value from 0 to 1 |
| Decision Rule | Reject H₀ if p ≤ α | Compared against α |

Marketing teams might accept α = 0.10 for preliminary campaign tests, prioritizing speed over precision. Conversely, safety engineers validating aircraft components use stricter thresholds to minimize risks. This balance between discovery and reliability shapes modern data strategies across sectors.
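To see how the same evidence leads to different decisions under different thresholds, here is a minimal sketch. The response-time data are synthetic, and the benchmark of 2.0 minutes and the helper name `decide` are assumptions made for illustration.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)

# Synthetic sample: 40 response times in minutes (illustrative only)
sample = rng.normal(loc=2.3, scale=0.8, size=40)

# H0: mean response time = 2.0 minutes; H1: mean != 2.0 minutes
result = stats.ttest_1samp(sample, popmean=2.0)

def decide(p_value, alpha):
    """Apply the decision rule: reject H0 when p <= alpha."""
    return "reject H0" if p_value <= alpha else "fail to reject H0"

print(f"t = {result.statistic:.3f}, p = {result.pvalue:.4f}")
for alpha in (0.10, 0.05, 0.01):
    print(f"alpha = {alpha:.2f}: {decide(result.pvalue, alpha)}")
```

The threshold should be fixed before looking at the data; looping over several values here is only meant to show how the rule behaves.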

Setting Up Your Python Environment

A well-prepared workspace is the unsung hero of effective statistical analysis. Before diving into complex calculations, professionals establish reliable coding environments that ensure consistent results across teams and projects.

Installing Required Libraries

Modern data analysis relies on four core tools. NumPy handles numerical operations, SciPy provides statistical functions, pandas manages datasets, and matplotlib visualizes trends.

Install these using pip or conda:

pip install numpy scipy pandas matplotlib

Environment management prevents version conflicts. We recommend virtual environments for isolating project dependencies. For team projects, share a requirements.txt file listing exact package versions.
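One way to produce the pinned lines for a requirements.txt file is to read the installed versions from the active environment. This is a small sketch using the standard library’s `importlib.metadata`; the helper name `pinned_requirements` is hypothetical, and running `pip freeze` would give a more complete listing.

```python
from importlib.metadata import PackageNotFoundError, version

PACKAGES = ("numpy", "scipy", "pandas", "matplotlib")

def pinned_requirements(packages=PACKAGES):
    """Return one 'name==version' line per installed package."""
    lines = []
    for name in packages:
        try:
            lines.append(f"{name}=={version(name)}")
        except PackageNotFoundError:
            # Flag anything missing instead of failing the whole check
            lines.append(f"# {name} is not installed")
    return lines

print("\n".join(pinned_requirements()))
```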

| Library | Core Function | Import Syntax |
|---|---|---|
| NumPy | Array mathematics | import numpy as np |
| SciPy | Hypothesis tests | from scipy import stats |
| pandas | Data manipulation | import pandas as pd |
| matplotlib | Visual exploration | import matplotlib.pyplot as plt |

Choose tools that match your workflow. Jupyter Notebooks allow interactive exploration, while IDEs like PyCharm streamline larger projects. Consistent formatting using PEP8 standards improves collaboration.

This setup creates repeatable processes. Clear documentation transforms individual experiments into scalable solutions—whether evaluating marketing strategies or optimizing manufacturing workflows.

Formulating Your Research Questions and Hypotheses

Clear research begins with razor-sharp questions. Analysts convert vague inquiries like “Does this work?” into measurable statements that guide precise investigations. This translation process separates impactful studies from aimless data dives.

Defining the Null Hypothesis

The null hypothesis (H₀) acts as an anchor for comparison. It represents default assumptions or existing knowledge – “Our chatbot response time averages 2 minutes” or “Feature X doesn’t affect user retention.” These statements always use equality operators (=, ≤, ≥) to establish testable baselines.

Effective H₀ construction requires three elements:

- Specific population parameter (mean, proportion)

- Clear comparison value or condition

- Mathematically precise formulation

Crafting the Alternative Hypothesis

Contrasting H₀, the alternative hypothesis (H₁) states expected changes. It answers: “What effect are we trying to prove?” Direction matters – “Customer satisfaction exceeds 80%” uses >, while “Conversion rates differ” employs ≠ for two-tailed tests.

| Characteristic | Null Hypothesis | Alternative Hypothesis |
|---|---|---|
| Purpose | Status quo assumption | Research claim |
| Mathematical Form | μ = 15, p ≤ 0.1 | μ ≠ 15, p > 0.1 |
| Equality Condition | Always present | Never present |
| Example | Drug has no effect | Reduces symptoms by 20% |

Manufacturing teams might test H₀: “Defect rate ≤ 3%” against H₁: “Defect rate > 3%” when evaluating production changes. This structured approach prevents confirmation bias – letting data confirm or challenge theories objectively.
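The defect-rate example translates fairly directly into a one-sided exact binomial test. The counts below (21 defects in 500 inspected units) are invented for illustration, and `scipy.stats.binomtest` assumes a reasonably recent SciPy release (1.7 or later).

```python
from scipy import stats

# Hypothetical inspection result: 21 defective units out of 500
defects, inspected = 21, 500

# H0: defect rate <= 3%, tested at the boundary value 0.03
# H1: defect rate > 3%, hence alternative="greater"
H0_RATE = 0.03

result = stats.binomtest(defects, n=inspected, p=H0_RATE, alternative="greater")
observed_rate = defects / inspected

print(f"Observed defect rate: {observed_rate:.3%}")
print(f"One-sided p-value:    {result.pvalue:.4f}")
```

Note how the direction in H₁ maps onto the `alternative` argument: a claim of “> 3%” uses "greater", while a “differs from” claim would use "two-sided".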

Data Preprocessing and Assumption Checking

Before analyzing patterns, analysts verify their tools. Data validation acts as quality control for statistical methods—ensuring results reflect reality rather than flawed inputs. Three pillars support reliable analysis: independent observations, normal distribution, and consistent variance across groups.

Normality Tests (Shapiro-Wilk)

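The Shapiro-Wilk test evaluates whether a sample is consistent with a normal distribution. It is typically applied to small and moderately sized samples: a p-value above α gives no evidence against normality, while a p-value at or below α suggests the data depart from it.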

from scipy.stats import shapiro

stat, p = shapiro(sample_data)

print(f'P-value: {p:.4f}')
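The snippet above assumes that `sample_data` already exists. A self-contained version might look like the sketch below, where both samples are synthetic (one drawn from a normal distribution, one from a skewed exponential distribution) purely to contrast the two outcomes.

```python
import numpy as np
from scipy.stats import shapiro

rng = np.random.default_rng(7)
ALPHA = 0.05

# Synthetic samples for illustration only
samples = {
    "normal": rng.normal(loc=50, scale=5, size=40),
    "skewed": rng.exponential(scale=5, size=40),
}

p_values = {}
for label, data in samples.items():
    stat, p = shapiro(data)  # H0: the data come from a normal distribution
    p_values[label] = p
    verdict = "no evidence against normality" if p > ALPHA else "departs from normality"
    print(f"{label}: W = {stat:.3f}, p = {p:.4f} ({verdict})")
```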

Variance Homogeneity (Levene Test)

Group comparisons often assume equal variance across groups. Levene’s test checks this assumption for two or more datasets. Unlike Bartlett’s test, it remains reasonably reliable with non-normal data.

from scipy.stats import levene

stat, p = levene(group1, group2)

print(f'P-value: {p:.4f}')

Teams use these diagnostics to choose analytical paths. Classical parametric tests such as Student’s t-test assume both normality and equal variance. When normality fails, nonparametric methods like Mann-Whitney U keep the results credible; when only the variances differ, Welch’s t-test is the usual substitute. Proper validation separates professional analysis from guesswork.
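That decision path can be sketched as a small helper. The function name `compare_two_groups` and the demo data are assumptions for illustration, and choosing a test from preliminary checks is only one common convention, not a universal rule.

```python
import numpy as np
from scipy import stats

def compare_two_groups(group1, group2, alpha=0.05):
    """Pick a two-sample test from assumption checks.

    Returns (test_name, p_value).
    """
    # Step 1: normality of each group (Shapiro-Wilk)
    normal = all(stats.shapiro(g).pvalue > alpha for g in (group1, group2))
    if not normal:
        res = stats.mannwhitneyu(group1, group2, alternative="two-sided")
        return "Mann-Whitney U", res.pvalue

    # Step 2: equal variances (Levene) decides Student vs Welch
    equal_var = stats.levene(group1, group2).pvalue > alpha
    res = stats.ttest_ind(group1, group2, equal_var=equal_var)
    return ("Student t-test" if equal_var else "Welch t-test"), res.pvalue

rng = np.random.default_rng(11)
group_a = rng.normal(10.0, 2.0, size=40)   # synthetic demo data
group_b = rng.normal(11.0, 2.0, size=40)
name, p = compare_two_groups(group_a, group_b)
print(f"{name}: p = {p:.4f}")
```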

Parametric Testing Methods in Python

In data-driven decision-making, certain tools stand out for their precision. Parametric methods offer structured approaches for comparing numerical data when key assumptions hold true. These techniques shine in scenarios requiring rigorous comparisons of group differences or relationships between variables.

T-Test Applications

The t-test family tackles three common challenges. One-sample versions compare experimental results to known benchmarks—like testing if customer satisfaction scores exceed industry standards. Paired tests analyze related measurements, such as pre-and-post campaign performance.

Independent t-tests evaluate distinct groups, like comparing conversion rates between website versions. Python’s scipy.stats.ttest_ind() handles these comparisons efficiently, returning both test statistics and probability values.
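The three variants map onto three SciPy functions. The sketch below uses synthetic data throughout; the benchmark of 80, the group sizes, and the variable names are illustrative assumptions.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)

# One-sample: do satisfaction scores exceed a benchmark of 80?
scores = rng.normal(82, 6, size=35)
one_sample = stats.ttest_1samp(scores, popmean=80, alternative="greater")

# Paired: the same 25 units measured before and after a campaign
before = rng.normal(100, 15, size=25)
after = before + rng.normal(4, 5, size=25)
paired = stats.ttest_rel(after, before)

# Independent: two separate groups, e.g. two website versions
version_a = rng.normal(3.1, 0.9, size=60)
version_b = rng.normal(3.4, 0.9, size=60)
independent = stats.ttest_ind(version_a, version_b)

for label, res in [("one-sample", one_sample),
                   ("paired", paired),
                   ("independent", independent)]:
    print(f"{label:<12} t = {res.statistic:7.3f}, p = {res.pvalue:.4f}")
```

A paired test is equivalent to a one-sample test on the within-pair differences, which is why it needs both arrays in the same order.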

Interpreting Parametric Results

Effective analysis requires understanding outputs. The t-statistic measures difference magnitude relative to data variability. P-values indicate result rarity under the null assumption—values below 0.05 typically signal significant findings.

Consider confidence intervals: a 95% range excluding zero strengthens evidence for real differences. Teams use these metrics to make critical choices, from launching new features to optimizing manufacturing processes. Proper interpretation transforms raw outputs into strategic insights.
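A confidence interval for the difference between two group means can be computed by hand from the t distribution. The sketch below uses the Welch (unequal-variance) form; the function name `welch_confidence_interval` and the data are assumptions for illustration.

```python
import numpy as np
from scipy import stats

def welch_confidence_interval(a, b, confidence=0.95):
    """Confidence interval for mean(a) - mean(b) without assuming equal variances."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    # Squared standard error contributed by each group
    var_a, var_b = a.var(ddof=1) / a.size, b.var(ddof=1) / b.size
    diff = a.mean() - b.mean()
    se = np.sqrt(var_a + var_b)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (var_a + var_b) ** 2 / (var_a**2 / (a.size - 1) + var_b**2 / (b.size - 1))
    margin = stats.t.ppf((1 + confidence) / 2, df) * se
    return diff - margin, diff + margin

rng = np.random.default_rng(3)
new_design = rng.normal(5.4, 1.0, size=50)   # synthetic demo data
old_design = rng.normal(5.0, 1.2, size=45)

low, high = welch_confidence_interval(new_design, old_design)
p = stats.ttest_ind(new_design, old_design, equal_var=False).pvalue
print(f"95% CI for the difference: [{low:.3f}, {high:.3f}], Welch p = {p:.4f}")
```

The interval and the two-sided Welch test always agree: the 95% interval excludes zero exactly when p falls below 0.05.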

Mastering these methods equips professionals to validate theories with mathematical rigor. When data meets parametric assumptions, these tests become powerful allies in separating signal from noise.

FAQ

How does Python simplify statistical analysis for hypothesis testing?

Python offers libraries like SciPy, statsmodels, and NumPy, which automate complex calculations for significance levels, p-values, and test statistics. These tools enable efficient data processing, reducing manual errors and accelerating decision-making in research.

What’s the difference between Type I and Type II errors?

A Type I error occurs when rejecting a true null hypothesis (false positive), while a Type II error involves failing to reject a false null hypothesis (false negative). Balancing these risks depends on context—like prioritizing sensitivity in medical trials.

When should I use a Shapiro-Wilk test in data preprocessing?

Apply the Shapiro-Wilk test to check if a dataset follows a normal distribution. This step is critical before using parametric methods like t-tests, which assume normality. Non-normal data may require non-parametric alternatives like the Mann-Whitney U test.

How do I choose between a one-tailed and two-tailed test?

Use a one-tailed test when predicting a specific direction of effect (e.g., “Group A will score higher”). A two-tailed test is appropriate for detecting a difference in either direction; it makes no directional assumption, at the cost of somewhat less power in any single direction. Decide which to use before looking at the data.
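In SciPy the choice is expressed through the `alternative` argument. The groups below are synthetic and only illustrate how the two p-values relate.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(5)
group_a = rng.normal(75, 10, size=40)  # synthetic scores
group_b = rng.normal(70, 10, size=40)

# Two-tailed: H1 is "the means differ"
two_sided = stats.ttest_ind(group_a, group_b, alternative="two-sided")

# One-tailed: H1 is "Group A scores higher than Group B"
one_sided = stats.ttest_ind(group_a, group_b, alternative="greater")

print(f"t = {two_sided.statistic:.3f}")
print(f"two-sided p = {two_sided.pvalue:.4f}")
print(f"one-sided p = {one_sided.pvalue:.4f}")
```

When the observed difference points in the predicted direction, the one-sided p-value is half the two-sided one; when it points the other way, the one-sided p-value is large.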

What’s the purpose of the Levene test in hypothesis testing?

The Levene test assesses whether groups have equal variances—a key assumption for ANOVA and t-tests. If variances differ significantly, consider using Welch’s t-test or transforming the data to meet homogeneity requirements.

Can I perform hypothesis tests on small sample sizes in Python?

Yes, but small samples reduce statistical power, increasing the risk of Type II errors. Non-parametric tests like the Wilcoxon signed-rank test are more robust here, as they don’t rely on strict distributional assumptions.

How do I interpret a p-value of 0.06 at a 5% significance level?

A p-value of 0.06 suggests insufficient evidence to reject the null hypothesis at the 5% threshold. However, context matters—this result might still warrant further investigation, especially in exploratory research or with high-stakes outcomes.

Which Python library is best for running t-tests?

SciPy’s ttest_ind (independent samples) and ttest_rel (paired samples) functions are widely used. They return t-statistics and p-values, while pandas helps organize data for seamless integration with these tests.

Why is defining the null hypothesis crucial in research?

The null hypothesis establishes a baseline for comparison, framing the default assumption (e.g., “no effect”). Clearly defining it ensures tests target specific questions, reducing ambiguity and anchoring results in measurable outcomes.

What steps ensure reproducibility in hypothesis testing workflows?

Use Jupyter Notebooks for documenting code, set random seeds with NumPy, and share raw data alongside cleaned versions. Version control tools like Git enhance transparency, allowing others to validate or replicate your analysis.
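For the random-seed step, a minimal sketch with NumPy’s `default_rng` is shown below. The seed value and the helper name `simulate_sample` are arbitrary choices for illustration.

```python
import numpy as np

SEED = 2024  # arbitrary value; record it alongside the analysis

def simulate_sample(seed=SEED, size=5):
    """Draw a sample from a generator created with a fixed seed."""
    rng = np.random.default_rng(seed)
    return rng.normal(size=size)

first = simulate_sample()
second = simulate_sample()

# Same seed, same draws: the two runs are identical
print(np.array_equal(first, second))  # prints True
```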