Behind many major decisions, from healthcare research to marketing strategy, lies a simple yet powerful statistical tool. Developed over a century ago, the chi-square test remains one of the most widely used techniques for detecting relationships in categorical data.

Analysts use these calculations to answer critical questions: Do vaccination rates vary by region? Are customer preferences linked to age groups? By comparing observed results with expected outcomes, professionals gain evidence-based clarity in uncertain scenarios.

The methodology’s endurance stems from its adaptability. Karl Pearson’s original 1900 framework now supports AI algorithms, clinical trials, and social research. Modern applications range from optimizing e-commerce product categories to validating machine learning models.

Key Takeaways

- Tests whether categorical variables are related, using a formal statistical criterion

- Forms the backbone of hypothesis validation across industries

- Transforms raw survey results and demographic data into strategic insights

- Requires samples large enough that the expected count in each category is adequate (a common rule of thumb is at least five)

- Empowers data-driven decisions without advanced statistical training

This guide demystifies the process through real-world examples, showing how teams implement these analyses efficiently. Readers will learn to interpret results confidently – separating random fluctuations from meaningful patterns.

Introduction to Chi-Square Tests

In data-driven industries, uncovering hidden patterns between qualitative factors often separates strategic wins from missed opportunities. These analytical methods shine when working with categorical variables like survey responses, demographic traits, or product preferences – data points sorted into clear groups rather than numerical scales.

Imagine a beverage company comparing regional soda preferences. By applying this approach, analysts could determine if flavor choices correlate with climate zones – transforming raw sales data into targeted marketing plans. The core principle measures gaps between actual observations and theoretical expectations if no connection existed.

Practitioners across fields rely on these techniques to validate hypotheses about relationships in non-numerical data. Healthcare researchers might examine vaccine effectiveness across age brackets, while retailers analyze how packaging colors influence buying habits. The process requires comparing frequency distributions – counting how often specific combinations occur versus predicted outcomes.

What makes this methodology indispensable? Its ability to handle real-world categorical data without complex calculations. From political polling to clinical trials, professionals use it to confirm or challenge assumptions about group interactions. A social media team might test whether post engagement varies by weekday, while biologists study habitat preferences in wildlife populations.

By focusing on distinct categories rather than continuous metrics, these tests reveal connections that other analyses miss. They empower teams to make evidence-based decisions about customer segmentation, policy effectiveness, or product feature prioritization – all through systematic comparison of what’s observed versus what’s statistically expected.

History and Development of Chi-Square Tests

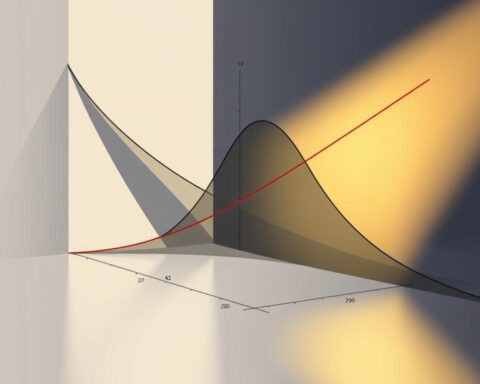

The birth of modern data analysis came through a statistical revolution at the turn of the 20th century. Before 1900, researchers relied on methods designed for biological studies, assuming all data followed normal distribution patterns. This approach often failed to explain real-world complexities in agricultural yields, genetic traits, and population studies.

Karl Pearson changed this paradigm through meticulous observation. While analyzing biological datasets, he noticed frequent skewness – results clustering asymmetrically rather than forming perfect bell curves. His 1900 paper introduced a groundbreaking framework for comparing observed frequencies against theoretical expectations.

| Aspect | 19th-Century Approach | Pearson’s Innovation |
|---|---|---|
| Data Handling | Assumed normal distribution | Allowed for skewed distributions |
| Analysis Focus | Continuous numerical data | Categorical frequency comparisons |
| Validation Method | Visual pattern matching | Mathematical hypothesis testing |

Pearson’s work proved that under specific conditions, calculated values would follow a predictable distribution pattern as sample sizes grew. This discovery enabled researchers to mathematically validate whether deviations in their data occurred by chance or reflected meaningful relationships.

The methodology’s adaptability fueled its rapid adoption. Early applications in genetics and sociology paved the way for modern uses in machine learning validation and market research. By transforming categorical analysis from guesswork to rigorous science, Pearson’s framework remains essential in data-driven decision-making today.

Understanding Chi-Square Test Concepts and Terminology

What determines whether a new product succeeds or collects dust on shelves? The answer often lies in how well teams analyze categorical relationships. These non-numerical groupings – like customer age brackets or regional dialects – form the building blocks of pattern detection.

Categorical variables sort information into clear buckets. Think survey responses (yes/no/maybe) or demographic traits (urban/rural). Unlike temperature readings or revenue figures, these variables take a fixed set of labels rather than measured quantities. Some have a natural order (satisfaction ratings, for example), but the chi-square test treats every category as an unordered label. A streaming service might use them to compare genre preferences across subscription tiers.

Three core concepts drive this methodology:

| Concept | Real-World Example | Purpose |
|---|---|---|
| Observed Frequency | Actual votes for political candidates | Documents real-world occurrences |
| Expected Frequency | Theoretical equal voter distribution | Establishes comparison baseline |
| Contingency Table | Marketing channel vs. age group matrix | Visualizes variable relationships |

When analyzing two categorical variables, statistical independence becomes crucial. Imagine a bookstore checking if genre preferences differ between ebook and print readers. If no connection exists (independence), fantasy novel choices wouldn’t predict format selection.

The test statistic quantifies gaps between actual and projected results. Larger values indicate stronger evidence against independence, though not necessarily a stronger relationship, because the statistic also grows with sample size. Analysts combine this value with degrees of freedom, calculated from category counts, to obtain a p-value and judge significance.

Mastering these terms transforms raw data into strategic insights. Teams can objectively assess whether apparent patterns reflect meaningful connections or random noise, creating clearer paths for data-driven decisions.

Statistical Foundations and Formulae

At the heart of pattern recognition lies a mathematical framework that converts raw counts into actionable insights. This approach measures gaps between reality and theoretical expectations through precise calculations.

Key Components of the Formula

The equation χ² = Σ (Oᵢ – Eᵢ)² / Eᵢ acts as a magnifying glass for data discrepancies. Each symbol plays a critical role:

- Oᵢ: Observed count in category i, taken from surveys or experiments

- Eᵢ: Expected count in category i, assuming no relationship between the variables

- Σ: Summation across all data categories

Consider a political poll comparing voter preferences across regions. Observed frequencies show real voting patterns, while expected frequencies reveal what would happen if geography didn’t influence choices. For each cell of the table, analysts calculate the expected count by multiplying that cell’s row total by its column total, then dividing by the overall sample size.
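The calculation described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production routine: the function names and the poll counts are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def expected_counts(observed):
    """Expected cell counts under independence: row total * column total / grand total."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)
    return [[r * c / grand_total for c in col_totals] for r in row_totals]


def chi_square_statistic(observed, expected):
    """Sum of (O - E)^2 / E over every cell of the table."""
    return sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(observed, expected)
        for o, e in zip(obs_row, exp_row)
    )


# Hypothetical poll: rows are regions, columns are candidates A and B.
observed = [
    [30, 20],  # North
    [20, 30],  # South
]

expected = expected_counts(observed)
statistic = chi_square_statistic(observed, expected)

print(expected)   # [[25.0, 25.0], [25.0, 25.0]]
print(statistic)  # 4.0
```

Every cell here differs from its expected count by 5, so each contributes 25 / 25 = 1 to the total, giving a statistic of 4.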

Understanding Degrees of Freedom

Degrees of freedom count how many cell values can vary freely once the row and column totals are fixed. For contingency tables, the value is calculated as:

(Rows – 1) × (Columns – 1)

A 3×4 table analyzing product preferences across age groups would have (3-1)(4-1) = 6 degrees of freedom. This value selects the chi-square distribution against which the test statistic is compared, so the significance threshold matches the size of the table.
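To make the link between degrees of freedom and significance concrete, here is a small sketch using only the Python standard library. The helper names are hypothetical, and the tail probability is computed with a standard recurrence for integer degrees of freedom; in practice a statistics package would normally supply this value.

```python
import math


def degrees_of_freedom(n_rows, n_cols):
    """Degrees of freedom for an r x c contingency table: (r - 1) * (c - 1)."""
    return (n_rows - 1) * (n_cols - 1)


def chi2_upper_tail(x, dof):
    """P(X >= x) for a chi-square variable with integer dof >= 1."""
    if dof < 1:
        raise ValueError("dof must be a positive integer")
    if x <= 0:
        return 1.0
    half_x = x / 2.0
    # Closed-form starting points: dof = 1 (odd case) or dof = 2 (even case).
    if dof % 2 == 1:
        k, prob = 1, math.erfc(math.sqrt(half_x))
    else:
        k, prob = 2, math.exp(-half_x)
    # Each step adds the term that moves from k to k + 2 degrees of freedom.
    while k < dof:
        prob += half_x ** (k / 2.0) * math.exp(-half_x) / math.gamma(k / 2.0 + 1.0)
        k += 2
    return prob


dof = degrees_of_freedom(3, 4)
print(dof)  # 6

# 12.592 is the tabulated 5% critical value for 6 degrees of freedom,
# so the tail probability at that point should be close to 0.05.
print(round(chi2_upper_tail(12.592, dof), 3))  # 0.05
```

The same statistic of 12.592 would be far more surprising in a 2×2 table (1 degree of freedom) than in a 3×4 table, which is why the degrees of freedom must be right before a result is interpreted.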

These calculations transform subjective observations into objective evidence. By standardizing differences across scales, professionals can confidently distinguish random noise from meaningful patterns – whether evaluating marketing campaigns or medical trial outcomes.

Types of Chi-Square Tests

Organizations face countless decisions daily that depend on understanding group-based patterns. Two distinct methods help analysts navigate these scenarios while maintaining statistical rigor.

Validating Single-Variable Distributions

The goodness-of-fit test examines whether observed data matches theoretical predictions. Analysts use this approach when working with one variable divided into categories. For example:

- Quality control teams testing if defective product rates match industry standards

- Biologists verifying whether plant species distribution follows climate models

This method answers critical questions about alignment between expectations and reality. Retailers might apply it to check if actual sales proportions across stores match projected figures.
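As a rough sketch of the retail case, the snippet below compares sales counts at four stores with projected shares. The figures and the function name are invented for illustration. The degrees of freedom for a goodness-of-fit test are the number of categories minus one, and 7.815 is the standard tabulated 5% critical value for 3 degrees of freedom.

```python
def goodness_of_fit(observed, expected_proportions):
    """Chi-square goodness-of-fit statistic for one categorical variable."""
    total = sum(observed)
    expected = [p * total for p in expected_proportions]
    statistic = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    dof = len(observed) - 1  # categories minus one
    return statistic, dof, expected


# Hypothetical data: units sold at four stores versus the projected share of sales.
observed_sales = [130, 70, 110, 90]
projected_share = [0.30, 0.20, 0.25, 0.25]

statistic, dof, expected = goodness_of_fit(observed_sales, projected_share)

# Tabulated critical value for a 5% significance level with 3 degrees of freedom.
CRITICAL_5_PERCENT_DOF_3 = 7.815
reject = statistic > CRITICAL_5_PERCENT_DOF_3

print(round(statistic, 3), dof, reject)
```

With these made-up numbers the statistic stays below the critical value, so the data give no grounds to say that actual sales depart from the projection.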

Exploring Variable Relationships

When investigating connections between two variables, the test of independence becomes essential. Marketing teams frequently use this to determine if ad campaigns perform differently across age groups. Key applications include:

- Healthcare researchers analyzing treatment effectiveness by patient demographics

- Educators studying test score patterns across different teaching methods

This approach reveals whether variables operate independently or show measurable interactions. Financial institutions might use it to assess if loan approval rates correlate with applicant regions.
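For the loan example, a library routine can do the whole computation. The sketch below assumes SciPy is installed and uses `scipy.stats.chi2_contingency`; the approval counts are hypothetical.

```python
from scipy.stats import chi2_contingency

# Hypothetical loan decisions: rows are regions, columns are approved / denied.
observed = [
    [90, 60],  # Region A
    [75, 75],  # Region B
    [60, 90],  # Region C
]

# Returns the test statistic, the p-value, the degrees of freedom,
# and the table of expected counts under independence.
statistic, p_value, dof, expected = chi2_contingency(observed)

print(f"chi-square = {statistic:.2f}, dof = {dof}, p = {p_value:.4f}")
```

A p-value below the chosen significance level (0.05 is common) would suggest that approval rates differ by region. It would not explain why they differ.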

Choosing between these methods depends on research goals. Single-variable analysis suits distribution validation, while two-variable examination uncovers hidden relationships. Both methods transform categorical data into strategic insights through systematic comparison of observed versus expected outcomes.

When and Why to Use Chi-Square Tests

Decoding patterns in categorical data requires strategic analytical tools that transform hunches into validated insights. Professionals use chi-square tests when working with distinct groups like customer segments, survey responses, or biological classifications – scenarios where traditional numerical analysis falls short.

Marketing teams frequently apply this method to determine whether two variables – like age brackets and product preferences – show meaningful connections. A beverage company might analyze if regional climate zones correlate with flavor choices, turning raw sales data into targeted campaigns. Healthcare researchers similarly rely on statistical validation to compare treatment outcomes across patient demographics.

Three key scenarios demand this approach:

- Confirming if observed data matches theoretical expectations

- Investigating relationships between non-numerical categories

- Making decisions from samples large enough that every category has an expected count of about five or more

Social scientists use these techniques to explore connections between education levels and voting behaviors, while manufacturers test whether defect rates align with quality standards. The method’s strength lies in handling categorical variables, such as yes/no survey answers or demographic labels, that methods built for numerical data are not designed for.

When teams need objective proof to support strategic choices, this analysis provides clarity. It answers critical questions: Do packaging colors influence purchase decisions? Is employee turnover linked to departments? By comparing real-world observations against expected outcomes, organizations base decisions on evidence rather than intuition.

Step-by-Step Guide to Performing Chi-Square Tests

What transforms raw survey data into actionable business strategies? A systematic approach to validating categorical relationships. Professionals follow a clear process to perform chi-square analyses that turn hunches into evidence-based decisions.

Defining Hypotheses and Organizing Data

Every analysis begins with a null hypothesis – the assumption that no relationship exists between variables. For example, a retailer might hypothesize that packaging color doesn’t influence purchase rates. Data gets organized into a contingency table that counts responses by both variables (like color option and whether the shopper purchased).
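One way to picture this step is to tally raw records into a table of counts. The sketch below uses only the standard library. The records and the `contingency_table` helper are hypothetical, and the sample is far too small for a real test; it only shows the layout.

```python
from collections import Counter

# Hypothetical raw records: (packaging color, purchase outcome).
records = [
    ("red", "purchased"), ("red", "purchased"), ("red", "not purchased"),
    ("blue", "purchased"), ("blue", "not purchased"), ("blue", "not purchased"),
    ("green", "purchased"), ("green", "not purchased"),
]


def contingency_table(records):
    """Count how often each (row category, column category) pair occurs."""
    counts = Counter(records)
    row_labels = sorted({row for row, _ in records})
    col_labels = sorted({col for _, col in records})
    table = [[counts[(r, c)] for c in col_labels] for r in row_labels]
    return row_labels, col_labels, table


rows, cols, table = contingency_table(records)

print(cols)
for label, counts_row in zip(rows, table):
    print(label, counts_row)
```

Each row of the result is a packaging color and each column a purchase outcome, which is the shape the test statistic calculation expects.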

Calculating the Test Statistic

Analysts compare observed counts with expected values using the formula:

χ² = Σ [(O – E)² / E]

Expected frequencies derive from row and column totals: each cell’s expected count is its row total multiplied by its column total, divided by the grand total. These values show what the table would look like if the variables were independent.

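Degrees of freedom adjust for table complexity, and together with the test statistic they yield a p-value: the probability of seeing a statistic at least this large if the null hypothesis were true. Analysts compare the p-value with a significance level chosen in advance (commonly 0.05). By mastering these steps, professionals can separate random noise from meaningful patterns, whether optimizing ad campaigns or validating clinical trial outcomes.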

FAQ

What’s the difference between a goodness-of-fit test and a test of independence?

A goodness-of-fit test evaluates whether observed data aligns with a hypothesized distribution for a single categorical variable. A test of independence assesses whether two categorical variables are related, using contingency tables to analyze their association.

When should someone avoid using this statistical method?

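Avoid relying on it when expected frequencies are small. A common rule of thumb asks for an expected count of at least five in every category, because the chi-square approximation becomes unreliable below that. It’s also unsuitable for continuous data unless the values are first grouped into categories, and it does not directly handle more than two variables without advanced adjustments.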
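A quick check along these lines might look like the following sketch. The helper name and the threshold default are assumptions based on the rule of thumb above, and the expected counts are hypothetical.

```python
def cells_below_minimum(expected, minimum=5.0):
    """Return (row index, column index, value) for each expected count below the minimum."""
    return [
        (i, j, value)
        for i, row in enumerate(expected)
        for j, value in enumerate(row)
        if value < minimum
    ]


# Hypothetical expected counts for a 2 x 2 table with 40 responses in total.
expected = [
    [18.0, 12.0],
    [6.0, 4.0],
]

problem_cells = cells_below_minimum(expected)
print(problem_cells)  # [(1, 1, 4.0)]
```

An empty list means the rule of thumb is satisfied. Any flagged cell is a signal to collect more data, merge sparse categories, or switch to an exact method.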

How do degrees of freedom impact the results?

Degrees of freedom depend on the number of categories analyzed: categories minus one for a goodness-of-fit test, and (rows – 1) × (columns – 1) for a test of independence. They determine the critical value from the distribution table, influencing whether the null hypothesis is rejected. Incorrect calculations here can skew conclusions.

What real-world scenarios benefit most from these analyses?

Market researchers use them to study consumer preferences across demographics. Biologists apply them to genetic inheritance patterns, while social scientists analyze survey responses to identify trends in voter behavior or policy opinions.

Why is the null hypothesis critical in these evaluations?

The null hypothesis assumes no relationship or difference between variables. Rejecting it, when the test statistic is large enough that the p-value falls below the chosen significance level, provides evidence for the alternative hypothesis and drives actionable insights from observed patterns.

Can this approach handle small sample sizes effectively?

Small samples risk unreliable results due to low expected frequencies. Tools like Fisher’s exact test often replace the standard method in such cases, ensuring more accurate interpretations of sparse data.
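For a 2×2 table, Fisher’s exact test can be sketched directly from the hypergeometric distribution. The version below is a minimal standard-library illustration with a hypothetical function name and made-up counts. It uses one common definition of the two-sided p-value: the total probability of all tables, with the same row and column totals, that are no more likely than the observed one.

```python
from math import comb


def fisher_exact_two_sided(table):
    """Two-sided Fisher's exact p-value for a 2 x 2 table [[a, b], [c, d]]."""
    (a, b), (c, d) = table
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x
        # when all row and column totals are held fixed.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_observed = prob(a)
    # Sum every table that is no more probable than the observed one
    # (small tolerance guards against floating-point ties).
    return sum(
        p
        for p in (prob(x) for x in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-9)
    )


# Hypothetical sparse data: rows are two groups, columns are outcome yes / no.
p_value = fisher_exact_two_sided([[3, 1], [1, 3]])
print(round(p_value, 4))  # 0.4857
```

Because the probabilities are computed exactly rather than approximated, the result remains valid even when every expected count is well below five.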

What software simplifies performing these evaluations?

Programs like SPSS and R automate these calculations, and Excel offers the CHISQ.TEST worksheet function. Depending on the tool, they produce contingency tables, test statistics, and p-values, reducing manual errors and streamlining hypothesis testing workflows.