It starts with a single message that looks urgent but feels off. Many professionals remember the moment they realized a trusted sender had been spoofed. That small doubt can ripple into lost hours, breached accounts, and shaken confidence.

This introduction frames why content-aware defenses matter now: industry data shows phishing volume rose 58.2% in 2023, with credential-focused attempts rising 967%. Static lists still help, yet they fail against fast, novel attacks that change by the hour.

Readers will find a practical approach rooted in real data: lexical signals like TF‑IDF often outperform some semantic methods on certain corpora, while embeddings add context where words diverge. We present methods that keep humans in the loop, measure outcomes, and integrate with existing security tools without disrupting users.

Key Takeaways

- Phishing volumes surged in 2023; credential-targeting rose dramatically.

- Static lists help but do not stop zero-hour attacks; content analysis fills gaps.

- TF‑IDF and clustering offer pragmatic, explainable signals for fast triage.

- Semantic embeddings add depth—trade-offs exist between lexical and semantic models.

- Operational design must include thresholds, drift monitoring, and analyst feedback.

Executive Summary: Elevating Email Security with NLP in the Present Threat Landscape

The current threat landscape demands content-aware defenses that read beyond headers and signatures.

Context: Reported phishing volume rose 58.2% in 2023, while credential-harvesting attempts jumped 967%. Bypasses of Secure Email Gateways (SEGs) increased 52.2% in early 2024. APWG logged 1,286,208 attacks in Q2 2023, with 23.5% targeting financial firms.

This summary outlines a pragmatic approach: extract lexical and semantic features, apply thresholded scoring, and route high-confidence cases to quarantine or enrichment workflows. The goal is measurable improvement in detection without disrupting users.

An academic baseline shows practical gains: 79.8% accuracy via TF‑IDF and 67.2% for semantic similarity on benchmark data. Those figures illustrate that simple, explainable techniques deliver fast value alongside deeper models.

- Integrates with Secure Email Gateways and SOC pipelines to add a content decision layer.

- Prioritizes precision/recall trade-offs, drift monitoring, and human validation.

- Scales across departments and meets governance needs while improving analyst efficiency.

| Metric | 2023 | Early 2024 |
|---|---|---|
| Volume change | +58.2% | n/a |
| Credential attempts | +967% | n/a |
| SEG bypass rate | n/a | +52.2% |

Key takeaway: Organizations can deploy content-focused solutions incrementally, measure gains with clear metrics, and adapt thresholds as the threat evolves.

Why Phishing Persists and Evolves: Context for the United States

Criminal groups now deploy rapid, multi-channel campaigns that exploit routine trust signals.

The United States faces a heightened risk profile after a 58.2% surge in 2023. Rapid tool adoption lowered the cost of crafting convincing message templates. As a result, credential-harvesting attempts rose 967% and now yield quick monetization for attackers.

Multi-channel vectors compound the problem. Traditional emails remain common, but QR-based quishing, SMS (smishing), and voice (vishing) add fast infection paths. Deepfake-enabled impersonation also increases success rates.

Secure Email Gateway detections are losing ground; bypasses grew 52.2% in early 2024. Consumers and external customers often lack corporate filters, leaving them more exposed than employees.

- Attackers exploit urgency, brand trust, and authority to convert routine contact into fraud.

- Credential-focused attacks scale easily, shifting defenders to continuous verification and identity controls.

- Effective defense requires correlated signals across emails, mobile, and web to stop blended attacks.

Conclusion: Content-focused analysis and faster automation are essential to keep pace. Rapid, explainable classification plus human review closes gaps that lists and static checks cannot.

Limits of Traditional Defenses: Blacklists, Whitelists, and Secure Email Gateways

Static defenses still win rapid skirmishes, but they lose ground in fast-moving campaigns.

Blacklists and whitelists deliver speed and low friction. They block known threats quickly and keep operations smooth. However, they react to indicators after attacks are already underway.

Attackers mutate domains, URLs, and sender identities faster than lists update. Zero-hour attacks exploit that window, leading to missed detection and increased successful attacks.

SEG Blind Spots and Where Lists Fall Short

Secure Email Gateways provide coarse control but show blind spots. SEGs miss QR redirects and links delivered through social channels or SMS. Bypass rates rose 52.2% in early 2024, highlighting operational gaps.

Manual list curation is resource-heavy. Policies based only on allow/deny lists risk over-blocking legitimate information or under-blocking sophisticated threats.

- List methods are reactive; zero-hour threats find short-lived openings.

- SEGs need content inspection to detect semantic intent beyond metadata.

- Automated learning reduces dependence on reports and scales curation.

Practical step: Integrate content-based scoring into SEG pipelines to prioritize review and enforce adaptive controls. Baseline models such as TF‑IDF show promising accuracy and explainability; see the related study for academic context.

| Defense | Strength | Weakness |
|---|---|---|
| Blacklist/Whitelist | Fast, simple | Reactive; misses zero-hour attacks |
| Secure Email Gateway | Centralized control | Blind spots for external channels; rising bypass |
| Content Scoring (lexical) | Explainable; TF‑IDF baseline | Needs tuning; must integrate with workflows |

State of the Art: From Machine Learning to Natural Language Processing for Phishing Detection

Contemporary detection efforts pair proven statistical methods with newer contextual encoders to balance speed and depth.

Classical machine learning remains a reliable foundation. TF‑IDF features with Naïve Bayes, SVM, or logistic regression provide fast, explainable baselines. These methods often deliver high accuracy in controlled tests and are easy to audit.

Ensembles—Random Forests or gradient-boosted trees—reduce variance and improve generalization. They shine when feature sets include lexical cues and engineered indicators. Yet they may miss subtle intent without semantic signals.

NLP Advances and Explainability

Recent work adds sentence embeddings and contextual encoders like BERT and RoBERTa. These models capture tone, intent, and discourse that keyword methods miss. They enable deeper analysis but demand more compute and data.

Explainability drives adoption: WEPI-style (Weak Explainable Phishing Indicator) labels map outputs to analyst-friendly cues such as urgency, identity mismatch, and request type. Hybrid pipelines that combine TF‑IDF signals with embeddings often balance clarity and performance.

| Approach | Strength | Key Trade-off |
|---|---|---|
| TF‑IDF + Linear models | Fast, explainable | Limited semantic depth |
| Ensembles (Random Forest) | Robust generalization | Harder to interpret |
| Contextual encoders | Detects subtle intent | Compute and data intensive |

Rigorous evaluation must handle class imbalance, domain shift, and threshold tuning. In practice, teams favor hybrid techniques that retain auditability while improving detection of sophisticated threats.

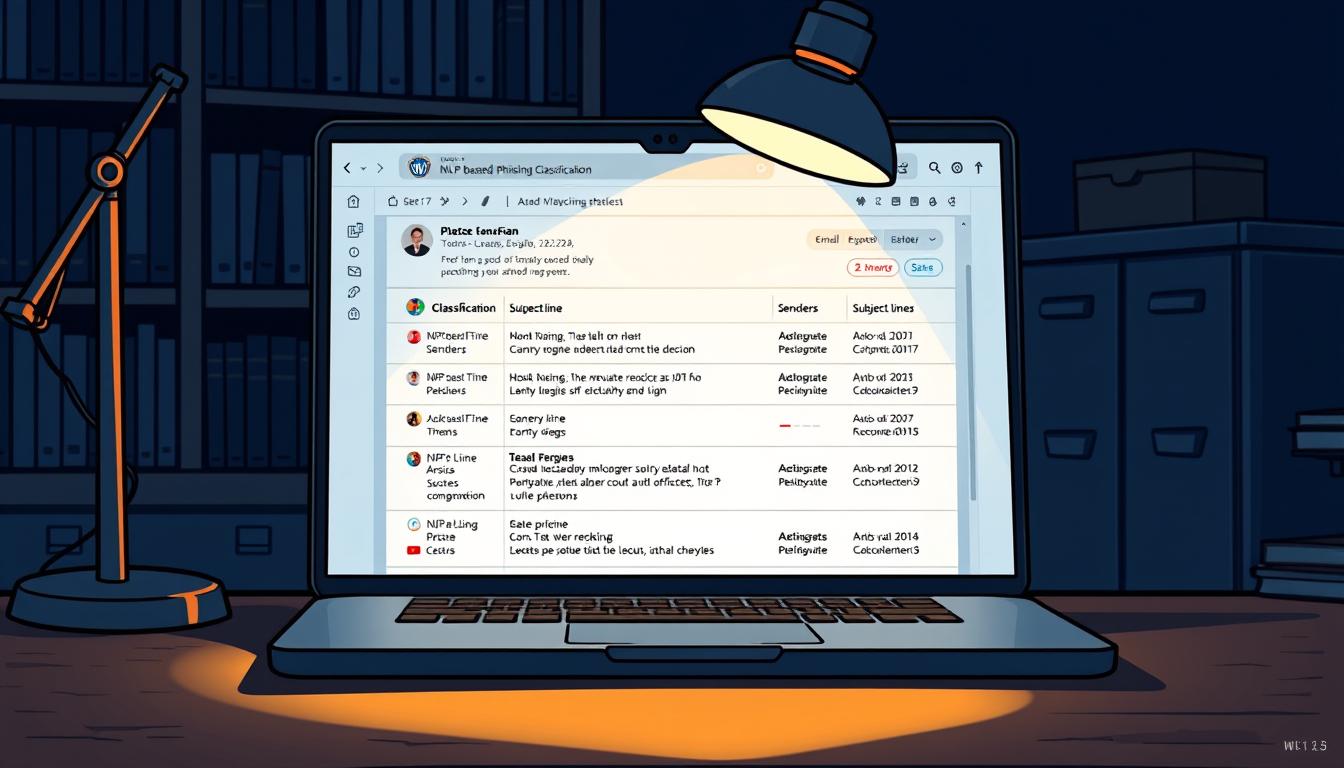

Main Use Case Overview: AI Use Case – NLP-Based Phishing Email Classification

This section frames objectives and success criteria for an end-to-end content scoring and response system.

Scope: Define an inbox-aware NLP capability that augments gateway controls and produces explainable signals for security operations. The design standardizes scoring, labels, and API outputs so risk scores feed SEGs and SIEM/SOAR workflows.

Whitepaper scope, objectives, and success criteria

Objectives: Improve detection at zero hour, reduce reliance on static indicators, and enable adaptive response via confidence thresholds. The stack supports both classical models and contextual encoders to balance accuracy and auditability.

- Success criteria: measurable lift in precision/recall versus baseline filters and fewer false positives.

- Operational goals: faster analyst triage and WEPI-style labels that map to analyst actions.

- Integration: APIs to SEGs and SOC tools so risk scores trigger quarantine, banners, or ticket enrichment.

Governance includes periodic evaluation, drift checks, and human-in-the-loop validation. Training pipelines use mixed corpora and sampling to mirror real inbox distributions.

| Metric | Target | Baseline |
|---|---|---|
| Precision lift | +10% | Baseline filters |
| Triaged alerts / hr | ↓ analyst time | Current queue |
| Explainability | WEPI labels | n/a |

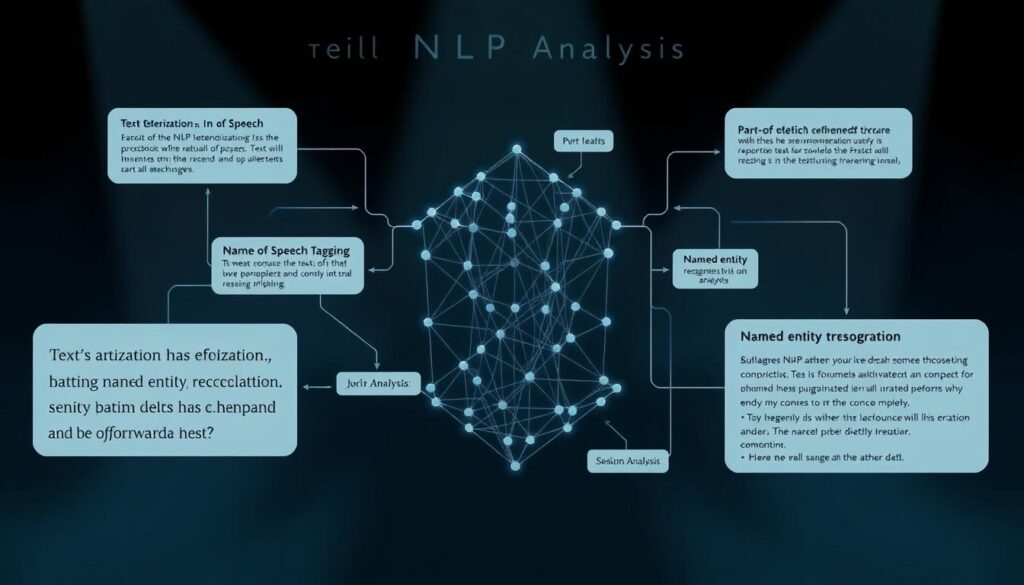

NLP Pipeline Design: Feature Extraction and Representation

A compact, modular pipeline turns raw messages into auditable signals for rapid triage. It begins with strict preprocessing: tokenization, lowercasing, stopword removal, and lemmatization to normalize text for consistent representation.
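As a rough illustration of this normalization step, the sketch below lowercases, tokenizes, and removes stopwords using only the Python standard library. The `STOPWORDS` set and the token pattern are illustrative assumptions, and lemmatization is left out because it normally depends on an external NLP library.

```python
import re

# Tiny illustrative stoplist (an assumption); production lists are much larger.
STOPWORDS = {"the", "a", "an", "to", "your", "is", "and", "of", "for", "in"}

def preprocess(text):
    """Lowercase, tokenize on letters/digits/apostrophes, drop stopwords."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return [t for t in tokens if t not in STOPWORDS]

tokens = preprocess("Verify YOUR account to avoid suspension!")
print(tokens)
```

Keeping this step as a single function makes it easier to version alongside the feature sets it feeds.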

TF-IDF weighting for lexical cues

TF‑IDF highlights discriminative words and yields fast, interpretable features. These features support keyword mining and initial scoring with low latency.
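To make the weighting concrete, here is a minimal from-scratch sketch using term frequency times the natural log of inverse document frequency. The toy messages are invented, and production systems typically rely on a library implementation with smoothing and normalization rather than this bare formula.

```python
import math
from collections import Counter

def tfidf(docs):
    """Return one {term: weight} dict per tokenized document."""
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

def top_terms(doc_weights, k=3):
    """Highest-weighted terms of one document, e.g. for analyst display."""
    return sorted(doc_weights, key=doc_weights.get, reverse=True)[:k]

# Toy, made-up messages (already lowercased and tokenized).
docs = [
    "verify your account password now".split(),
    "meeting notes for your team".split(),
    "team lunch now moved".split(),
]
weights = tfidf(docs)
print(top_terms(weights[0]))
```

Terms that appear in several messages ("your", "now") receive lower weights than terms unique to the first message, which is what makes the top terms usable as an explanation.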

Semantic similarity via sentence embeddings

Sentence embeddings—computed with the Universal Sentence Encoder—measure cosine similarity to known templates. This captures paraphrase and subtle intent that lexical signals miss.
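The similarity check itself is simple once embeddings exist. The sketch below assumes vectors have already been produced by some sentence encoder; the three-dimensional vectors and template names are invented placeholders, not real encoder output.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def best_template_match(message_vec, template_vecs):
    """Return (template_name, similarity) for the closest known template."""
    scored = ((name, cosine(message_vec, vec)) for name, vec in template_vecs.items())
    return max(scored, key=lambda pair: pair[1])

# Hypothetical, hand-written vectors standing in for encoder output.
templates = {
    "password_reset": [0.9, 0.1, 0.0],
    "invoice_fraud": [0.1, 0.8, 0.3],
}
message = [0.8, 0.2, 0.1]
name, score = best_template_match(message, templates)
print(name, round(score, 3))
```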

K-means keyword clustering and selection

K-means over word vectors surfaces representative clusters and aids feature selection. Cluster centroids and cosine matrices are logged for audit and analyst review.
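A small pure-Python sketch of the clustering step follows. The two-dimensional word vectors are invented for illustration, and initialization simply takes the first k points so the example is deterministic; real pipelines would use trained embeddings and a randomized or k-means++ initialization.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=20):
    """Plain Lloyd's algorithm; returns (centroids, assignments)."""
    centroids = [list(p) for p in points[:k]]  # deterministic init for the sketch
    assignments = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                new_centroids.append([sum(dim) / len(members) for dim in zip(*members)])
            else:
                new_centroids.append(centroids[c])
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, assignments

# Hypothetical 2-D word vectors.
words = ["urgent", "immediately", "invoice", "payment"]
vectors = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
centroids, assignments = kmeans(vectors, k=2)
print(dict(zip(words, assignments)))
```

Logging `centroids` together with the word-to-cluster mapping gives reviewers the audit trail described above.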

“Combine lexical strength with semantic breadth: TF‑IDF for explicit signals; embeddings for paraphrase detection.”

- Feature sets are versioned to allow rollbacks and experiments.

- Dataset tuning—stoplists, bigrams, domain terms—reduces noise.

- The design supports incremental adoption: start with TF‑IDF, add embeddings and clustering later.

For further reading on language processing techniques and datasets, see this overview at PhilArchive and a practical primer at Miloriano.

Three-Phase Methodology for Phishing Email Classification

A structured, three-phase workflow turns raw message content into governed signals for fast decision-making.

Phase 1 — Keyword extraction and semantic grouping. TF‑IDF highlights high-value tokens. K‑means over word or sentence vectors groups related terms into centroids. This dual view yields resilient features that survive template churn.

Phase 2 — Anchor with curated datasets and similarity thresholds

Embed messages with a sentence encoder and compute cosine similarity against a curated corpus of malicious samples. Derive breakpoint thresholds that separate benign content from high-risk matches.
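One possible way to derive such a breakpoint, sketched here as an assumption rather than the method used in the referenced work, is to pick the lowest similarity score at which flagged messages still meet a target precision on a labeled validation set. The scores, labels, and the 0.9 target are invented.

```python
def precision_at(threshold, scores, labels):
    """Share of messages scoring >= threshold that are truly malicious (label 1)."""
    flagged = [y for s, y in zip(scores, labels) if s >= threshold]
    return sum(flagged) / len(flagged) if flagged else 0.0

def lowest_threshold_for_precision(scores, labels, target=0.9):
    """Smallest observed score whose precision meets the target, else None."""
    for t in sorted(set(scores)):
        if precision_at(t, scores, labels) >= target:
            return t
    return None

# Hypothetical validation data: cosine similarity to malicious templates, 1 = phishing.
scores = [0.91, 0.84, 0.77, 0.52, 0.48, 0.33, 0.21]
labels = [1, 1, 1, 0, 1, 0, 0]
breakpoint_score = lowest_threshold_for_precision(scores, labels)
print(breakpoint_score)
```

Choosing the lowest qualifying threshold keeps recall as high as the precision target allows; a stricter target pushes the breakpoint upward.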

Phase 3 — Test, tune, and operationalize thresholds

Evaluate on held-out emails and live streams. Tune tiered decision points—informational, suspicious, high risk—to balance precision and recall for business risk.
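The tiering can be expressed as a lookup over sorted breakpoints. The values 0.60 and 0.80 below are placeholders chosen for illustration; in practice they would come out of the tuning described above.

```python
from bisect import bisect_right

TIERS = ["informational", "suspicious", "high_risk"]
BREAKPOINTS = [0.60, 0.80]  # hypothetical lower bounds for the 2nd and 3rd tiers

def tier_for(score):
    """Map a risk score in [0, 1] to a tier; a score equal to a breakpoint moves up."""
    return TIERS[bisect_right(BREAKPOINTS, score)]

for s in (0.35, 0.60, 0.95):
    print(s, tier_for(s))
```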

“Combine lexical discovery with corpus-anchored similarity and real-world testing to build a reliable, explainable detection pipeline.”

- Each phase produces versioned artifacts: keyword lists, centroids, and thresholds for auditability.

- The process is iterative: re-cluster and re-threshold when new campaign themes appear.

- Similarity computations use efficient embedding pipelines; cosine measures are easy to monitor.

- Integration with SOC workflows is built into Phase 3 so alerts carry context for fast triage.

| Phase | Core output | Primary benefit |
|---|---|---|
| Phase 1 | Keywords, clusters | Explainable, low-latency signals |
| Phase 2 | Thresholds, similarity scores | Contextual anchoring to malicious templates |
| Phase 3 | Operational rules, tiered actions | Balanced detection and analyst efficiency |

Benchmarks: TF‑IDF baselines reached ~79.8% accuracy while semantic similarity scored ~67.2% on the referenced dataset. Together they form a hybrid strategy that improves real-world detection and reduces misses on zero-hour templates.

Datasets, Annotation, and Weak Explainable Phishing Indicators (WEPIs)

A reliable dataset strategy anchors model trust and analyst confidence.

The annotated corpus contains 940 messages drawn from the Enron corpus, a corpus of fraudulent phishing messages, and internal mail. This mix mirrors production distributions so models see both benign patterns and attack templates.

Annotation used 32 WEPI labels mapped to four linguistic scopes: signature, sentence, message, and word/phrase. Annotators aimed for Cohen’s kappa ≥ 0.8 to ensure label reliability and consistent calibration.
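For reference, Cohen's kappa compares observed agreement with the agreement expected by chance. The sketch below computes it for two annotators on a single label; the annotations are invented to show that 90% raw agreement can still fall just short of a 0.8 target.

```python
from collections import Counter

def cohens_kappa(a, b):
    """Cohen's kappa for two annotators labeling the same items."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement from each annotator's label frequencies.
    expected = sum(counts_a[l] * counts_b[l] for l in set(a) | set(b)) / (n * n)
    if expected == 1.0:
        return 1.0
    return (observed - expected) / (1 - expected)

# Hypothetical annotations: "U" = urgency cue present, "N" = absent.
annotator_1 = ["U", "U", "N", "N", "U", "N", "N", "U", "N", "N"]
annotator_2 = ["U", "U", "N", "N", "U", "N", "N", "N", "N", "N"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 3))
```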

Key dataset practices

- Combine benign and malicious corpora to reflect real inbox balance and to reduce false positives.

- Leverage signature blocks as verifiable sources—emails, domains, and titles can be cross-checked with metadata.

- Apply class weighting, careful sampling, and threshold tuning to address label imbalance without overfitting.
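Class weighting from the last practice above can be sketched with the common "balanced" heuristic, where each class weight is n_samples / (n_classes * class_count). The 90/10 split is an invented example, not the composition of the corpus described here.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class inversely to its frequency."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}

# Hypothetical imbalanced label set.
labels = ["benign"] * 90 + ["phishing"] * 10
class_weights = balanced_class_weights(labels)
print(class_weights)
```

With these weights, a misclassified phishing example costs about nine times as much as a misclassified benign one during training.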

WEPI labels act as weak but explainable signals: urgency, action requests, and identity mismatches. Message-level tags often align with SOC playbooks and aid analyst triage.

“The combination of WEPI labels and NLP models closes the loop between machine detection and human education.”

| Element | Role | Operational benefit |
|---|---|---|
| Corpus mix (940 msgs) | Training / validation | Realistic class balance |
| 32 WEPI labels | Granular supervision | Explainable signals for review |
| Inter-annotator checks | Quality control | Model calibration & trust |

Model Choices and Baselines: From TF-IDF to BERT/RoBERTa

Practical model choices trade off interpretability, throughput, and sustained operational effort.

TF‑IDF baselines are fast to deploy, cost-effective, and transparent. They suit regulated environments and initial rollouts where analyst trust matters.

Contextual encoders such as BERT and RoBERTa capture tone and subtle intent. They improve recall on nuanced phishing templates but add compute and MLOps demands.

- Hybrid stacks stage TF‑IDF as first-pass filtering and escalate ambiguous messages to heavier models.

- Explainability is non-negotiable: surface top contributing terms and WEPI-like cues to guide analysts.

- Model selection must reflect infrastructure, privacy, and time-to-detect constraints.
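The staged design in the first bullet can be sketched as a two-step cascade. Both scorers below are stand-ins invented for illustration (a keyword counter and a fixed rule); real deployments would wrap a trained TF-IDF model and a contextual encoder, and the 0.3 to 0.7 ambiguity band is an assumed setting.

```python
def cascade_score(text, cheap_scorer, heavy_scorer, low=0.3, high=0.7):
    """Score with the cheap model; call the heavy model only in the ambiguous band."""
    score = cheap_scorer(text)
    if low <= score < high:
        return heavy_scorer(text), "heavy"
    return score, "cheap"

# Stand-in scorers for illustration only.
def keyword_scorer(text):
    hits = sum(word in text.lower() for word in ("password", "verify", "urgent"))
    return hits / 3

def placeholder_heavy_scorer(text):
    return 0.9 if "account" in text.lower() else 0.1

print(cascade_score("Team lunch at noon", keyword_scorer, placeholder_heavy_scorer))
print(cascade_score("Please verify your account", keyword_scorer, placeholder_heavy_scorer))
```

Returning which stage produced the score keeps the decision auditable and makes it easy to measure how often the heavier model is invoked.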

“Threshold tuning often yields larger gains than swapping architectures; pick sensible breakpoints before adding complexity.”

| Approach | Strength | Trade-off |
|---|---|---|
| TF‑IDF + linear | Explainable, low cost | Limited semantic depth |
| Contextual encoders | Detects subtle tone | Higher compute, ops burden |
| Ensemble / hybrid | Balanced signals | Harder to debug |

Benchmarks must report precision/recall/F1 and ROC-AUC on consistent splits. Ongoing analysis of drift and campaign change determines when to refresh features or retrain models.

Performance Results and Benchmarking

Benchmarks reveal where straightforward lexical signals outpace deeper semantic representations on real-world corpora.

Reported accuracies show a clear split: TF‑IDF analysis reached 79.8% accuracy, while semantic similarity methods scored 67.2% on the same dataset. Some classical models have exceeded 90% on specific corpora, but those figures depend heavily on class balance and sampling.

Thresholds, precision, and recall

Accuracy alone can mislead. Teams should prioritize precision, recall, and F1 because missed attacks carry asymmetric costs versus false alerts.
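A short worked example shows why. The confusion counts below are invented: 1,000 messages, 20 of them phishing, and a model that catches only 5 while raising 5 false alerts.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical imbalanced evaluation set.
metrics = classification_metrics(tp=5, fp=5, fn=15, tn=975)
print(metrics)
```

Accuracy comes out at 98% even though three quarters of the phishing messages were missed, which is exactly the gap that recall and F1 expose.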

Heuristic cosine breakpoints can bootstrap deployment; over time, learnable thresholds and Bayesian updates reduce manual tuning. Calibration curves and PR plots offer more actionable insight under imbalance.

- Performance varies with corpus composition—lexical overlap favors TF‑IDF, paraphrase-heavy sets favor embeddings.

- Cross-validation with stratification and bootstrapping stabilizes reported metrics when positive (phishing) examples are scarce.

- Benchmark stacks under identical preprocessing, splits, and metrics before drawing conclusions.
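Stratification from the list above can be illustrated with a minimal fold builder that deals each class round-robin across folds. This sketch does not shuffle, which a real evaluation would normally do with a fixed seed, and the 16/4 label split is invented.

```python
from collections import defaultdict

def stratified_folds(labels, k):
    """Split indices into k folds that each preserve the class proportions."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        # Deal this class's indices round-robin so every fold gets its share.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds

# Hypothetical labels: 16 benign (0) and 4 phishing (1).
labels = [0] * 16 + [1] * 4
folds = stratified_folds(labels, k=4)
print([sum(labels[i] for i in fold) for fold in folds])
```

Each fold ends up with one phishing example, so no validation split is left without positives.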

“Report precision and operational metrics—alert volumes, analyst time, and user reports—to capture end-to-end value.”

| Metric | Insight | Action |
|---|---|---|
| Accuracy | Misleading alone | Report with PR/F1 |
| Thresholds | Business-dependent | Calibrate per workflow |
| Stability | Varies by split | Use stratified CV |

Operationalizing the Classifier in Enterprise Environments

Successful enterprise rollouts prioritize low-latency scoring, clear routing, and measurable feedback loops.

Place the classifier adjacent to Secure Email Gateways so borderline messages receive a second, content-aware pass. This lets the system re-score, append a banner, or quarantine items based on policy-aligned thresholds without adding user friction.

Integration with existing SEGs and SOC workflows

Integrate outputs into SIEM and SOAR to enable automated investigations, URL detonation, and staged user notifications tied to confidence bands. Route high-confidence threats to auto-block; soft-warn lower-confidence items; escalate ambiguous cases to SOC queues.

Adaptive thresholding and continuous learning loops

Adaptive thresholds refine sensitivity using analyst dispositions and user reports. This continuous learning loop updates decision breakpoints without full retraining and limits the impact of drift over time.
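One very simple way such a loop could work, offered as a hypothetical rule rather than a recommended algorithm, is to nudge the threshold by a small step per analyst disposition and clamp it to policy bounds. The step size, bounds, and disposition names are all assumptions.

```python
def update_threshold(threshold, disposition, step=0.01, lo=0.50, hi=0.95):
    """Raise the threshold after a false positive, lower it after a missed phish."""
    if disposition == "false_positive":
        threshold += step
    elif disposition == "false_negative":
        threshold -= step
    # Confirmed correct decisions leave the threshold unchanged.
    return min(hi, max(lo, threshold))

# Hypothetical stream of analyst dispositions.
threshold = 0.80
for disposition in ["false_positive", "false_positive", "true_positive", "false_negative"]:
    threshold = update_threshold(threshold, disposition)
print(round(threshold, 2))
```

The clamp keeps a burst of feedback from pushing the system outside limits agreed with governance; more principled alternatives include the Bayesian updates mentioned earlier.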

- Capture rich telemetry—top terms, similarity scores, and WEPI labels—for forensic reviews and tuning cycles.

- Provide explainability panels showing contributing terms and matched indicators to speed analyst triage.

- Define fail-open / fail-closed behaviors to preserve mail continuity during outages or model updates.

- Align retention and privacy controls with corporate policy to limit storage of sensitive message content.

- Maintain SLOs for latency and throughput so scoring keeps pace with mail flow during peak business hours.

“Operational design must balance protection, continuity, and analyst confidence: score fast, explain why, and learn from decisions.”

| Function | Benefit | Operational note |
|---|---|---|
| SEG adjacency | Low-latency re-score | Handles 0‑hour templates |
| SIEM/SOAR outputs | Automated triage | Integrate confidence bands |
| Adaptive thresholds | Reduced false alerts | Leverages analyst feedback |

Beyond Email: Behavioral Analytics, Anomaly Detection, and Incident Response Acceleration

Behavioral telemetry ties message signals to real user actions, revealing coordinated campaigns across channels.

Security teams should combine content signals with identity and network logs to reduce blind spots. Cross-channel analytics link web, SMS, and app telemetry so coordinated attacks surface earlier.

Behavioral baselining spots unusual actions: odd login sequences, rapid link clicks, or unexpected device changes. Those patterns often correlate with phishing attempts and fraud.
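A per-user baseline can be as simple as a z-score against that user's own history. The click counts and the cutoff of 3 standard deviations below are illustrative assumptions; real baselines would account for seasonality and role.

```python
from statistics import mean, stdev

def zscore(value, history):
    """How many sample standard deviations `value` sits from the user's mean."""
    sigma = stdev(history)
    return 0.0 if sigma == 0 else (value - mean(history)) / sigma

# Hypothetical hourly link-click counts for one user.
history = [2, 3, 1, 2, 4, 2, 3]
burst = zscore(15, history)
typical = zscore(2, history)
print(round(burst, 2), round(typical, 2), burst > 3)
```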

- Fuse heterogeneous data for faster containment and clearer incident timelines.

- Score risk with user context—role, history, and transaction patterns—to prioritize true threats.

- Enrich content signals with domain intelligence: lookalike registrations and brand impersonation.
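For the lookalike-registration point, one common heuristic is edit distance against a list of protected brand domains. The domains below are placeholders and the distance cutoff of 2 is an assumption; homoglyph and punycode checks would be needed on top of this.

```python
def edit_distance(a, b):
    """Levenshtein distance using a rolling row of the DP table."""
    prev = list(range(len(b) + 1))
    for i, char_a in enumerate(a, 1):
        curr = [i]
        for j, char_b in enumerate(b, 1):
            curr.append(min(
                prev[j] + 1,                        # deletion
                curr[j - 1] + 1,                    # insertion
                prev[j - 1] + (char_a != char_b),   # substitution or match
            ))
        prev = curr
    return prev[-1]

def lookalikes(domain, brand_domains, max_distance=2):
    """Brand domains that `domain` closely resembles without matching exactly."""
    return [b for b in brand_domains if 0 < edit_distance(domain, b) <= max_distance]

# Placeholder brand list.
brands = ["example.com", "contoso.com"]
print(lookalikes("examp1e.com", brands))
```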

“A behavior-led view shifts defense from message-first to actor- and journey-centric decisions.”

Automated playbooks should pivot from detection to takedown, user outreach, and session revocation when content risk and anomalies align. Consistent taxonomy and case management keep multi-channel investigations efficient and measurable.

| Capability | Benefit | Operational note |
|---|---|---|
| Cross-channel analytics | Fewer blind spots | Correlate email, web, SMS |
| Behavioral baselining | Early anomaly detection | Profile per user role |
| Automated playbooks | Faster containment | Integrate takedown & outreach |

Governance, Risk, and Compliance: Human-in-the-Loop and Training Alignment

Bridging model rationales and awareness programs makes detection more actionable for everyday users. WEPI outputs convert model signals into concrete training moments. That link helps security teams teach why a message looks risky and how to react.

Aligning WEPI-driven models with security awareness training

WEPI-aligned outputs map urgency, requests, and identity mismatches to learning modules. Trainers can show real examples from the 940-message corpus to reinforce lessons.

- Surface contributing terms and labels to make alerts teachable.

- Integrate model feedback into campaigns so users see their progress.

- Use limited, anonymized excerpts to protect privacy while delivering details.

Bias, drift, and periodic evaluation protocols

Governance requires scheduled reviews: quarterly threshold checks and semiannual dataset refreshes. Human-in-the-loop workflows validate edge cases and add labels for retraining.

| Review | Action | Frequency |
|---|---|---|
| Thresholds | Adjust breakpoints | Quarterly |
| Dataset | Refresh samples | Semiannual |
| Audit | Update model cards | Annual |
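A periodic drift check could compare the distribution of risk scores at deployment with the current one. The sketch below uses the Population Stability Index, one common choice that is assumed here and not prescribed by this paper; the equal-width bins over [0, 1] and the sample scores are invented.

```python
import math

def psi(expected, actual, bins=5, eps=1e-6):
    """Population Stability Index between two samples of scores in [0, 1]."""
    def proportions(values):
        counts = [0] * bins
        for v in values:
            counts[min(int(v * bins), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical score samples from the baseline period and the current quarter.
baseline = [0.10, 0.15, 0.20, 0.30, 0.35, 0.50, 0.55, 0.70, 0.80, 0.90]
current = [0.60, 0.65, 0.70, 0.75, 0.80, 0.85, 0.90, 0.90, 0.95, 0.95]
print(psi(baseline, baseline), round(psi(baseline, current), 3))
```

A value of zero means the two distributions match bin for bin; larger values indicate that scores have shifted and that thresholds or training data may need review.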

Document data lineage, annotation standards, and model changes for compliance. Balance storage and role-based access so privacy and useful information coexist. These steps keep detection robust, fair, and explainable for stakeholders.

Conclusion

Real gains come from combining lightweight lexical baselines with targeted contextual signals. A measured, content-first approach strengthens defenses without heavy disruption. Start with fast TF‑IDF baselines, add embeddings and WEPI-aligned cues, and tune thresholds with analyst feedback.

Key advantages: improved detection, clearer triage, and practical solutions that integrate with SEGs and SOC tooling. Blend models and behavioral signals to extend protection beyond email into coordinated channels. Continuous learning and governance keep measures current as attackers pivot.

Adopt a pilot, benchmark, tune cycle: protect users with explainable outputs, reduce exposure to rising phishing threats, and scale thoughtfully as maturity grows.

FAQ

What is the primary goal of an NLP-based approach to detect phishing messages?

The primary goal is to analyze message text and contextual cues to identify malicious intent before users interact with links or attachments. This involves extracting lexical and semantic features, measuring similarity to known threats, and producing explainable signals that integrate with existing email security gateways and SOC workflows.

Which linguistic features matter most for reliable detection?

High-impact features include token patterns (TF-IDF), n-grams, sender-recipient mismatches, URL lexical anomalies, and semantic embeddings that capture intent. Combining lexical cues with sentence-level similarity and WEPI-style indicators produces more verifiable results than any single signal.

How do classical baselines compare with contextual transformer models?

Classical baselines—TF-IDF, Naïve Bayes, SVM, and ensembles—remain fast and interpretable but can miss nuanced phrasing. Transformer-based models like BERT or RoBERTa offer stronger semantic understanding and higher recall at the cost of compute and harder-to-explain decisions. A hybrid strategy often yields the best operational balance.

What datasets and annotation practices are recommended?

Build combined corpora that include diverse benign business communications and labeled malicious samples. Address imbalance via oversampling and careful validation. Annotate with multi-level indicators (signature, sentence, message, word) to support weak explainable phishing indicators (WEPIs) and facilitate human review.

How should organizations handle zero-hour and polymorphic campaigns?

Rely on semantic similarity and behavior signals rather than static lists alone. Continuous model updates, adaptive thresholds, and a human-in-the-loop review process help detect novel campaigns. Integrating anomaly detection and cross-channel risk signals (e.g., smishing, quishing) further reduces blind spots.

What are practical integration points for enterprise deployment?

Key integration points include Secure Email Gateways, SIEMs, SOAR platforms, and endpoint agents. Provide explainable indicators to SOC analysts, enable adaptive thresholding, and feed feedback loops for continuous learning to maintain relevance in production environments.

How do teams balance precision and recall in production?

Tune operational thresholds based on business tolerance for false positives and incident response capacity. Use precision/recall curves and F1 metrics from benchmark tests, then apply gradual rollout with monitored feedback. Adaptive thresholding permits dynamic trade-offs during active campaigns.

What governance and compliance issues should be considered?

Ensure models and annotations align with privacy regulations and internal policy. Regularly evaluate for bias and drift, document WEPI mappings, and keep human reviewers in the loop for appeals and model correction. Maintain audit trails for labeled decisions and retraining events.

Can semantic clustering and keyword extraction reduce manual workload?

Yes. K-means clustering of keywords and TF-IDF-based extraction surface recurring malicious motifs and high-risk terms. These outputs speed triage, guide rule updates, and enable focused retraining on phishing-only datasets to improve detection without excessive human intervention.

What metrics matter when benchmarking models?

Focus on precision, recall, F1 score, and false positive rate in representative production-like datasets. Also measure latency, resource consumption, and explainability—how often the model provides actionable indicators (WEPIs) that analysts can verify.

How does cross-channel detection improve resilience?

Combining signals from email, SMS, voice phishing, and QR-based attacks allows correlation of adversary behavior across vectors. Behavioral analytics and user profiling help flag compromised accounts or repeated targeting patterns that single-channel systems often miss.

What operational steps ensure continuous improvement?

Establish feedback loops from SOC investigations, schedule periodic retraining with newly labeled samples, monitor drift, and update thresholds after red-team exercises. Coordinate model changes with security awareness training to align detection signals with user education.