There is a quiet worry behind many campus doors: a student who seemed engaged last month now misses a few key activities. That feeling — a mix of concern and resolve — motivates practical change.

The article describes how institutions can turn routine administrative records and LMS activity into clear signals advisors can act on. It frames the work as a pragmatic strategy to reduce dropout and support students while strengthening equity and financial stability for higher education.

Evidence shows many at‑risk patterns appear in the first semester. Tree-based ensembles like Random Forest and XGBoost often lead with tabular data; neural models can excel when feature interactions are complex and feature volume is high. Temporal features at weeks 4 and 8, plus a “studentship” aggregate, sharpen signals for timely intervention.

Key Takeaways

- Turn administrative and clickstream data into actionable early warnings for advisors.

- Tree ensembles and tuned neural models both have roles depending on data shape.

- Temporal windows and aggregated studentship features improve detection.

- Explainability—such as SHAP—builds trust with faculty and compliance teams.

- Operational integration matters: alerts must link to advising workflows and measurable supports.

Executive Summary: Improving Retention with Predictive AI in Higher Education

Data-driven frameworks reveal early patterns of disengagement so support teams respond before grades slip. This summary outlines a practical approach that pairs administrative records with learning activity to generate timely outreach and conserve advising resources.

Real-world benchmarks matter: a Moroccan ministry rollout reported 88% accuracy and recall, 86% precision, and 87% AUC after applying explainability (SHAP), imbalance handling, and defined intervention steps. Those results show strong practical performance for national-scale systems.

Blended data—pre-entry demographics, LMS behavior, and graded outcomes—feeds models that capture nuanced risk patterns. A time-based strategy provides predictions at four and eight weeks, giving advisors a window before major exams.

- Class imbalance is normal; resampling, weighting, and ensembles protect signal and reduce bias.

- SHAP transparency translates model factors into actionable guidance for advisors.

- Field-of-study differences require tailored thresholds and interventions.

Success is measured in outcomes as well as statistics, such as improved advisor contact rates and higher pass rates. Institutions can adapt this framework across U.S. campuses and platforms while keeping consent, anonymization, and equity monitoring central.

Search Intent and Reader Context in the United States

Campus stakeholders need clear guidance on which metrics matter most during a student’s first eight weeks. This section frames practical next steps for U.S. colleges and universities that seek timely, measurable action.

Who this study is for

Provosts, student success leaders, institutional researchers, CIOs, data scientists, and LMS administrators will find this brief useful.

These readers work with advising systems and common LMS ecosystems across American higher education. The focus is operational: how to convert routine logs into useful alerts that advisors can act on.

What you will learn right now

Practical takeaways include dataset design, cumulative feature engineering, temporal splits at 4 and 8 weeks, model selection, imbalance handling, and deployment patterns that trigger outreach.

Field-specific thresholds matter—STEM cohorts often need different rules than social science classes. SHAP-driven explainability helps translate model output into clear, student-centered actions for advisors.

Operational pilots should measure persistence gains, pass-rate changes, and return on investment tied to intervention workflows.

| Focus | Action | Metric |
|---|---|---|
| Data design | Aggregate weekly engagement (4w, 8w) | Model AUC, recall |
| Modeling | Tree ensembles + calibration | Precision / F1 |
| Operations | Embed alerts into advising workflow | Contact rate, persistence |
| Governance | Anonymize, bias checks, consent | Equity audit results |

U.S. campuses face roughly 30% attrition before year two; timely systems that combine cumulative features with clear workflows can change that trend.

Next: practical problem framing and objectives for pilot deployment.

Problem Statement: Early Student Dropout in E-Learning and Higher Education

Rising attrition among undergraduates is a measurable problem that demands data-driven triage and targeted support.

About 30% of U.S. students discontinue before their second year. That level of dropout harms learners, communities, and public finances.

Early indicators often appear in the first semester. Admissions scores, prior achievement, LMS engagement, and late or missing assignments correlate with persistence.

Modality shifts—fully online and blended courses—create richer traces of activity but complicate how we read engagement. More data can help, yet it also requires careful analysis to avoid false alarms.

Advising teams cannot manually profile every at‑risk student. Without prioritization, limited outreach misses the students who need help most. Automated alerts can surface candidates while preserving human judgment.

“Timely detection matters: interventions are most effective before grades and exams compound risk.”

Variability across programs means one threshold does not fit all; local calibration is essential. Equity must guide system design to reduce disparate dropout risk across demographic groups.

| Signal | Why it matters | Action |
|---|---|---|
| Admissions & prior grades | Baseline academic readiness | Targeted advising and prep modules |
| LMS engagement patterns | Real-time behavior and access | Automated alerts and outreach prioritization |
| Assignment timing | Procrastination or workload issues | Time-management coaching |
| Demographic disparities | Equity and access signals | Resource allocation and policy review |

Governance matters: clear rules on access, anonymization, and oversight are required before any predictive system is operational. That governance keeps interventions ethical and effective.

Case Study Objectives and Success Criteria

Success starts with objective criteria that link technical performance to real student outcomes. The project goal is simple: maximize early recall of at-risk students while holding precision high enough that advisor workload stays realistic.

Balancing timing and accuracy matters. Earlier alerts give more time for outreach but can lower model performance; later windows boost metrics but shrink intervention time.

Early identification vs. model performance trade-offs

Teams should measure how predictive lift evolves from week 4 to week 8 and through exam periods. Studies show an aggregated studentship feature raised AUC for XGBoost at early windows.

Operational metrics tied to intervention impact

Statistical success measures include AUC-ROC, positive-class recall, precision-at-top-k, F1, and calibration. Those metrics feed operational KPIs: advisor contact rate, tutoring uptake, course completion, and term persistence.

- Set action thresholds that balance false positives against capacity and student experience.

- Use temporal milestones (4 weeks, 8 weeks, exam periods) to find where signal-to-noise is actionable.

- Run governed A/B tests to monitor incremental gains versus historical baselines.

“Top SHAP factors must map to interventions advisors can deliver; explainability turns analysis into practice.”

Finally, design faculty-aware dashboards segmented by department and course difficulty. Commit to equity reporting that documents narrowed persistence gaps and prevents algorithmic harm.

Data Foundations for Dropout Prediction

Predictive work begins with disciplined data collection: clear definitions, consistent fields, and governance that protects privacy.

Pre-entry records

Pre-entry variables—demographics, admissions scores, socio-economic indicators, and prior grades—establish baseline readiness and context.

These fields guide early triage and help adjust thresholds by program and cohort.

Learning-behavior logs

LMS clickstreams capture logins, submissions, forum posts, video views, and assignment timing. Such traces reflect cognitive and social engagement.

Consistent event naming and normalization across platforms help maintain feature quality for educational data mining tasks.

Achievements and outcomes

Final grades, Exam A and Exam B outcomes, failure counts, and mandatory-course flags enrich later-term models. They sharpen signals for assessment and operational decisions.

- Labeling: dropout is coded as two consecutive years of non-registration and incomplete program status.

- Governance: datasets spanned 2019–2022 with anonymization and ethics approval before analysis.

- Practical notes: address class imbalance, validate and normalize scales, and document data lineage to meet U.S. governance norms.
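The labeling rule in the list above can be sketched in a few lines of Python. This is a minimal illustration, not the study's code: the function name `is_dropout`, its parameters, and the choice to count only the trailing gap before the observation cutoff are all assumptions made for the example.

```python
def is_dropout(registered_years, completed, last_observed_year, gap=2):
    """Label a student as a dropout under an assumed reading of the rule:
    at least `gap` consecutive unregistered years up to the observation
    cutoff, combined with an incomplete program."""
    if completed or not registered_years:
        return False  # graduates are not dropouts; no history means no label
    last_registered = max(registered_years)
    # Count the unregistered years between the last registration and the cutoff.
    missing_years = last_observed_year - last_registered
    return missing_years >= gap


# Hypothetical students observed through 2022.
left_after_2020 = is_dropout({2019, 2020}, completed=False, last_observed_year=2022)
still_enrolled = is_dropout({2019, 2020, 2021}, completed=False, last_observed_year=2022)
```

Because the label needs two years of hindsight, the most recent cohorts cannot be labeled yet, which is worth recording in the data lineage notes.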

“Feature consistency across faculties reduces bias and improves cross-program analysis.”

Temporal Modeling: Building Early Signals Across the Semester

Organizing data by week and assessment lets teams test how soon useful signals emerge from routine learning records.

Start with clear time slices: 4 weeks, 8 weeks, Exam A, Exam B, and Semester 2. The 4-week window is the first point with reliable LMS activity. Eight weeks serves as a midpoint that often stabilizes trends.

Windowed feature design

Build cumulative features that preserve early signals and append later evidence. This lets a single model leverage a student’s evolving profile without discarding first impressions.
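One way to picture cumulative windows is a counter that adds every event to each window it falls inside, so week-4 evidence is carried into the week-8 snapshot. The sketch below assumes events arrive as `(student_id, week, event_type)` tuples; that layout and the `_w4` / `_w8` column naming are illustrative choices, not the study's schema.

```python
from collections import defaultdict


def cumulative_features(events, windows=(4, 8)):
    """events: iterable of (student_id, week, event_type), week is 1-based.
    Returns {student_id: {"login_w4": n, "login_w8": n, ...}}."""
    features = defaultdict(lambda: defaultdict(int))
    for student_id, week, event_type in events:
        for w in windows:
            if week <= w:  # cumulative: an early event counts in every later window
                features[student_id][f"{event_type}_w{w}"] += 1
    return {sid: dict(cols) for sid, cols in features.items()}


# Hypothetical event log.
events = [
    ("s1", 1, "login"), ("s1", 2, "submission"), ("s1", 6, "login"),
    ("s2", 7, "login"),
]
feats = cumulative_features(events)
```

A missing key (for example `login_w4` for `s2`) means no activity in that window; downstream code should treat it as zero rather than as missing data.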

Operational uses and monitoring

Exam periods add graded evidence that recalibrates risk scores. Track prediction drift across windows so early probabilities align with later outcomes.

- Test windows: compare performance across 4w, 8w, and exam snapshots.

- Model strategy: choose separate models per window or one time-indexed model based on infrastructure.

- Program tuning: customize timing by department and assessment pacing.

“Temporal analysis helps prioritize outreach where it yields the greatest impact on persistence.”

| Window | Key feature set | Operational goal |
|---|---|---|
| 4 weeks | Login rate, activity counts, initial submission timing | Early triage; low-lift outreach |
| 8 weeks | Cumulative engagement, forum interactions, midterm grades | Stability check; prioritize advising |
| Exam A / B | Grades, assignment shifts, help-seeking events | Recalibrate risk; intensive supports |

Summary: a temporal approach aligns analysis, models, and operations so institutions can act earlier while tracking system performance and student outcomes.

Feature Engineering Techniques That Matter

Clustering courses by difficulty and aggregating engagement creates clearer early signals. This section outlines practical methods that turn varied course records and LMS logs into stable features for modeling.

Course difficulty clustering

Courses are grouped into four levels—easy to very hard—using k-means on average grade and failure rate. The cluster label replaces sparse per-course fields with aggregated cluster features.

Benefit: aggregation reduces sparsity, smooths semester variation, and boosts model stability across departments.
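The clustering step can be sketched with a small, dependency-free k-means. This is an illustration under stated assumptions: the course data is invented, both inputs are min-max scaled, the starting centroids are chosen deterministically, and clusters are ranked from easiest to hardest by centroid. In practice a library implementation such as scikit-learn's `KMeans` would normally replace the hand-written loop.

```python
def minmax(values):
    """Scale values to [0, 1]; a constant column maps to 0."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0
    return [(v - lo) / span for v in values]


def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, iters=50):
    """Plain Lloyd's algorithm with a deterministic start (requires k >= 2)."""
    ordered = sorted(points)
    # Spread the starting centroids evenly across the sorted points.
    centroids = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    labels = []
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        updated = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                updated.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                updated.append(centroids[c])  # keep an empty cluster's centroid
        if updated == centroids:
            break
        centroids = updated
    return labels, centroids


def difficulty_levels(courses, k=4):
    """courses: {course_id: (avg_grade, failure_rate)} -> {course_id: 0..k-1},
    where 0 is the easiest cluster and k-1 the hardest."""
    ids = list(courses)
    grades = minmax([courses[c][0] for c in ids])
    fails = minmax([courses[c][1] for c in ids])
    # Flip the grade axis so both coordinates grow with difficulty.
    points = [(1 - g, f) for g, f in zip(grades, fails)]
    labels, centroids = kmeans(points, k)
    order = sorted(range(k), key=lambda c: sum(centroids[c]))
    rank = {cluster: level for level, cluster in enumerate(order)}
    return {cid: rank[lab] for cid, lab in zip(ids, labels)}


# Hypothetical courses: (average grade out of 100, failure rate).
courses = {
    "A1": (88, 0.03), "A2": (86, 0.05), "B1": (76, 0.14), "B2": (74, 0.16),
    "C1": (64, 0.28), "C2": (62, 0.30), "D1": (50, 0.44), "D2": (48, 0.46),
}
levels = difficulty_levels(courses)
```

The resulting level can replace per-course columns, for example as "number of failed courses at level 3" per student.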

Studentship aggregation

Studentship folds LMS events into two indices: cognitive (assignments, quiz scores) and social (forum posts, peer help). Signals such as above-mean assignment grades carry positive weights; signals such as late submissions carry negative weights.

Weights are normalized per course to offset grading scale differences. This approach raised XGBoost AUC at early windows and tightened outreach timing for advisors.
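A rough sketch of the idea follows, assuming each raw signal is standardized within its course and then combined with signed weights. The signal names, the unit weights, and the use of z-scores are assumptions for illustration; the source does not specify the exact formula.

```python
from statistics import mean, pstdev

# Hypothetical signed weights: positive signals raise the index, negative lower it.
COGNITIVE = {"assignment_grade": 1.0, "quiz_score": 1.0, "late_submissions": -1.0}
SOCIAL = {"forum_posts": 1.0, "peer_help": 1.0}


def zscores(values):
    """Standardize within a course; a constant column contributes zero."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma if sigma else 0.0 for v in values]


def studentship(rows):
    """rows: dicts with 'student', 'course', and the raw signal columns.
    Returns {(student, course): {"cognitive": x, "social": y}}."""
    by_course = {}
    for row in rows:
        by_course.setdefault(row["course"], []).append(row)
    out = {}
    for course, members in by_course.items():
        # Per-course standardization offsets differences in grading scale.
        z = {sig: zscores([m[sig] for m in members]) for sig in {**COGNITIVE, **SOCIAL}}
        for i, m in enumerate(members):
            out[(m["student"], course)] = {
                "cognitive": sum(w * z[s][i] for s, w in COGNITIVE.items()),
                "social": sum(w * z[s][i] for s, w in SOCIAL.items()),
            }
    return out


# Hypothetical two-student course.
rows = [
    {"student": "s1", "course": "c1", "assignment_grade": 90, "quiz_score": 80,
     "late_submissions": 0, "forum_posts": 5, "peer_help": 2},
    {"student": "s2", "course": "c1", "assignment_grade": 60, "quiz_score": 50,
     "late_submissions": 3, "forum_posts": 1, "peer_help": 0},
]
scores = studentship(rows)
```

Averaging these per-course indices across a student's enrolled courses would give one pair of features per student and window.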

- Normalize features to counter course-scale variability.

- Review importance with SHAP so engineered features match advisor intuition.

- Re-cluster periodically and handle missingness carefully so low-activity students are contextualized, not penalized.

- Build modular pipelines for reproducible experiments and faster iteration with common machine learning techniques.

For a deeper methodological reference and context on feature impact, consult this study on feature impact.

Modeling Approach and Algorithms

Effective modeling picks algorithms that match data shape, institutional goals, and operational limits.

Tree-based ensembles for tabular data

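Random forest and XGBoost are reliable first choices for heterogeneous tabular datasets. They handle mixed feature types and report feature importance well; XGBoost also handles missing values natively, while Random Forest support for missing values depends on the implementation.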

Random forest offers stability and easy interpretation through feature ranks. XGBoost often boosts performance after careful hyperparameter tuning and regularization.

Neural networks and deep learning

When interactions are complex and feature volume is high, neural networks can outperform tree ensembles on AUC and F1. Deep learning suits dense embeddings and fused signals from many sources.

However, these models demand more data, compute, and attention to overfitting; explainability tools should be added to keep advisors confident.

Time-series and sequence models

RNN and LSTM architectures capture temporal patterns in clickstream and weekly engagement. They excel where sequence order and timing matter—for example, repeated missed activities over weeks.

Combine sequence outputs with tabular features to preserve both temporal nuance and baseline context.

“Match the algorithm to the question: pick transparency when advisors need actionability; pick complexity when signal depth justifies it.”

| Algorithm family | Strength | When to pick |
|---|---|---|
| Tree ensembles (RF, XGBoost) | Robust, interpretable, fast to train | Heterogeneous tabular data; limited infra |
| Neural networks / deep learning | High capacity, captures complex interactions | Large feature sets; embeddings or fused inputs |
| Sequence models (RNN/LSTM) | Temporal dependency capture | Clickstream sequences and weekly time-series |

Operational advice: start with tree-based models, add sequence models if temporal signals justify complexity, and ensemble across families to improve generalization.

Validate with temporal cross-validation, calibrate outputs for advisor workflows, and monitor models in production for drift and bias.
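Temporal cross-validation means every test fold uses a later cohort than anything in its training data, so the evaluation mimics deployment. A minimal sketch, assuming each record carries a `cohort_year` field (a hypothetical column name):

```python
def temporal_splits(records, year_key="cohort_year", min_train_years=1):
    """Yield (train, test) pairs where test is a single cohort year and
    train holds only strictly earlier years."""
    years = sorted({r[year_key] for r in records})
    for i in range(min_train_years, len(years)):
        train = [r for r in records if r[year_key] < years[i]]
        test = [r for r in records if r[year_key] == years[i]]
        yield train, test


# Hypothetical records spanning four cohort years.
records = [
    {"student": f"s{i}", "cohort_year": year}
    for i, year in enumerate([2019, 2019, 2020, 2021, 2022])
]
splits = list(temporal_splits(records))
```

Random shuffling across years would let a model see future cohorts during training and overstate its early-window performance.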

Handling Imbalanced Data for At-Risk Students

Imbalanced class distributions are an inherent challenge when models flag students at risk of leaving their programs. Left unchecked, accuracy can be misleading: a model that always predicts persistence looks good but misses the students who need help.

SMOTE and resampling strategies

SMOTE and related oversampling synthesize minority examples so models learn realistic patterns. Apply SMOTE on training folds only and validate with untouched cohorts to avoid optimistic bias.

Class weighting and ensemble methods

Class-weighting shifts the loss function toward the minority class and improves recall without changing data volume. Combine weighted training with ensembles—Random Forest, XGBoost, LightGBM, or CatBoost—to balance variance and bias.

- Undersample cautiously: it reduces majority-class mass but risks losing important structure.

- Use cost-sensitive learning when missed interventions (false negatives) carry high harm.

- Tune thresholds per faculty or cohort to match advising capacity and reduce false alarms.
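Class weighting can be illustrated with the common "balanced" heuristic, where each class weight is `n_samples / (n_classes * class_count)`. The sketch below computes those weights by hand on invented labels; how the weights are passed to a model depends on the library in use.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency so both classes
    contribute equally to the training loss."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * count) for cls, count in counts.items()}


# Hypothetical cohort: 90 persisting students (0) and 10 dropouts (1).
labels = [0] * 90 + [1] * 10
weights = balanced_class_weights(labels)
```

With these weights a missed dropout costs about nine times as much as a misclassified persisting student, which pushes the model toward higher recall.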

“Validate with precision-recall curves and class-specific metrics; document resampling choices for auditability.”

Evaluation Framework and Metrics

A focused evaluation plan links technical scores to real advising capacity and student outcomes.

Assessments should measure both classifier quality and operational effect. Technical metrics guide iteration; operational metrics decide deployment readiness. Benchmarks reported from a national study—88% accuracy, 88% recall, 86% precision, and 87% AUC—offer a realistic target for early pilots.

Accuracy, Precision, Recall, and F1 for imbalanced cohorts

Recall is primary: it catches at-risk students. Precision limits false alarms so advisors are not overwhelmed. F1 balances both for a single-number view.
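A short worked example shows why accuracy alone misleads on imbalanced cohorts. The data is invented: 10 dropouts among 100 students, scored by a naive model that always predicts persistence.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for the positive (at-risk) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical cohort: 10 dropouts (1) among 100 students.
y_true = [1] * 10 + [0] * 90
always_persist = [0] * 100  # naive model that never flags anyone

accuracy = sum(t == p for t, p in zip(y_true, always_persist)) / len(y_true)
precision, recall, f1 = precision_recall_f1(y_true, always_persist)
```

Accuracy comes out at 0.9 while recall and F1 are both zero: the model looks strong on paper yet finds none of the students who need support.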

AUC-ROC and class-specific reporting

AUC-ROC measures overall separability, while PR curves focus on the positive class. Teams should publish confusion matrices by cohort, field, and demographics. Calibration checks ensure predicted probabilities align with true risk and support sensible thresholding for triage.

“Top-k precision is practical: it shows how many flagged students an advisor can reliably reach.”
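Precision at top-k can be computed by ranking students by risk score and checking how many of the first k actually dropped out. The scores and labels below are invented, and `k` stands in for the number of students an advisor can contact.

```python
def precision_at_k(y_true, scores, k):
    """Share of true at-risk students among the k highest-scored students."""
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    top = ranked[:k]
    return sum(label for _, label in top) / len(top) if top else 0.0


# Hypothetical risk scores and outcomes (1 = dropped out).
scores = [0.9, 0.8, 0.7, 0.4, 0.2]
y_true = [1, 0, 1, 1, 0]
p_at_3 = precision_at_k(y_true, scores, k=3)
```

Here two of the three highest-scored students dropped out, so an advisor with capacity for three contacts would reach two students who needed help.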

| Metric | Operational use | Recommended target |
|---|---|---|
| Recall (positive class) | Catch at-risk students early | ≥ 85% |
| Precision (top-k) | Advisor queue accuracy | ≥ 80% |
| AUC-ROC | Overall separability | ~87% |
| Fairness (TPR parity) | Equity across groups | Within 5% gap |
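The TPR parity row can be checked with a per-group recall calculation. In this sketch the group labels and predictions are invented, and the 5% target is read as a gap of at most 0.05 in true positive rate between groups, which is an interpretation rather than something the source defines.

```python
def tpr_by_group(y_true, y_pred, groups):
    """True positive rate (recall on the at-risk class) for each group."""
    hits, positives = {}, {}
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            positives[g] = positives.get(g, 0) + 1
            hits[g] = hits.get(g, 0) + (1 if p == 1 else 0)
    return {g: hits[g] / positives[g] for g in positives}


def tpr_gap(rates):
    """Largest difference in TPR between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical outcomes for two demographic groups, A and B.
y_true = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["A"] * 4 + ["B"] * 4 + ["A", "B"]

rates = tpr_by_group(y_true, y_pred, groups)
needs_review = tpr_gap(rates) > 0.05  # assumed reading of the 5% target
```

In this toy cohort the model catches three of four at-risk students in group A but only two of four in group B, so the gap would trigger a review.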

Include temporal evaluation—4 weeks vs. 8 weeks vs. exams—statistical tests with confidence intervals, and benchmarking against historical baselines. Transparent reporting and cohort-level analysis build trust and focus continuous improvement of models and the broader system.

Explainability and Stakeholder Trust

Explainability anchors trust by showing advisors why a model highlights a particular student profile. Clarity turns scores into actions and helps faculty accept automated guidance as a useful part of advising practice.

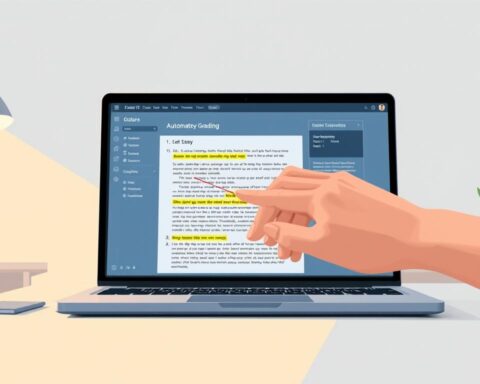

Applying SHAP to identify key predictive factors

SHAP quantifies each feature’s contribution to an individual prediction and aggregates global drivers across cohorts. A national-scale SHAP analysis made clear which LMS behavior, admissions fields, and achievement metrics mattered most.

Teams can use these explanations to run a simple analysis that maps top features to advisor-facing narratives. Cross-validate SHAP findings with practitioner experience to build confidence and refine thresholds.

Translating insights into actionable interventions

Explainability should feed a practical approach: translate model signals into next-best actions on dashboards. For example, high weight on late submissions suggests time-management coaching; low early logins suggest outreach and access checks.
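The mapping from explanation to action can be as simple as a lookup table. The sketch below assumes per-student feature contributions have already been computed elsewhere (for example by a SHAP explainer) and are supplied as a plain dictionary; the feature names, contribution values, and message wording are hypothetical.

```python
# Hypothetical playbook: feature name -> advisor-facing next-best action.
ACTIONS = {
    "late_submissions": "Offer time-management coaching",
    "low_early_logins": "Check access and send an outreach message",
}


def next_best_actions(contributions, top_n=2):
    """contributions: {feature: signed contribution toward dropout risk}.
    Returns actions for the features that raise risk the most."""
    risky = sorted(((value, feature) for feature, value in contributions.items()
                    if value > 0), reverse=True)
    return [ACTIONS.get(feature, f"Review '{feature}' with the student")
            for _, feature in risky[:top_n]]


# Hypothetical per-student contributions (positive values push risk up).
contributions = {
    "late_submissions": 0.21,
    "low_early_logins": 0.12,
    "prior_gpa": -0.08,
    "forum_posts": 0.03,
}
actions = next_best_actions(contributions)
```

Features without a playbook entry fall back to a generic prompt, which keeps the dashboard useful while the mapping is still being built out with advisors.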

Govern feature-level rules for sensitive attributes to avoid proxy harms. Train advisors to interpret outputs empathetically and align explainability artifacts with IRB and ethics protocols.

“Explainable models can sustain strong performance while making recommendations that advisors trust and act on.”

| Explainability step | Purpose | Outcome |
|---|---|---|
| SHAP per-student report | Show feature contributions | Advisor-ready rationale |
| Global feature audit | Detect unstable drivers | Policy and governance triggers |
| Advisor training | Bridge models and practice | Consistent, empathetic outreach |

For methodological detail on explainability at scale, see the SHAP study. Clear, governed explanations help students, advisors, and administrators accept predictive tools and improve operational performance.

AI Use Case – Early Dropout Prediction in E-Learning

A multi-faculty evaluation reveals how department-level datasets shape model performance and operational choices.

Datasets across faculties and departments

The study combined departmental records: computer science (n=1,666), physics (n=566), and psychology (n=1,172). Faculty aggregates included exact sciences (n=3,648) and social sciences (n=4,619).

Model performance patterns by field of study

Machine learning models showed varied performance by discipline. Exact sciences benefited from difficulty clustering; social sciences saw more stable signals at faculty level.

Studentship aggregation raised early AUC for XGBoost, especially where departmental counts were small and volatility high.

Impact of early LMS behavior on predictive lift

Early learning activity boosted lift most for first-year students adjusting to campus workflows. Assignment timing and login cadence were top drivers in several fields.

Teams should set department-specific thresholds, retrain models as curricula change, and report both field-level and institution-wide findings to academic leaders.

| Level | Sample | Key uplift |
|---|---|---|
| CS | 1,666 | Assignment timing |
| Physics | 566 | Difficulty cluster effect |
| Psychology | 1,172 | Forum & login signals |
| Faculty aggregate | Exact 3,648 / Social 4,619 | Stabilized models with studentship metrics |

“Combining department and faculty datasets stabilizes models and makes outreach planning more reliable.”

Key Findings: What Works and When

Results show how timing, data design, and departmental context shape reliable early warnings for student support. This summary highlights practical trade-offs and field differences that guide operational choices.

Early vs. late semester prediction performance

Precision improves later in the term, while earlier windows give more time to act. Institutions must balance higher accuracy with the value of early outreach.

Differences between exact and social sciences

Exact sciences often show clearer separation from structured assignments and deliver stronger early signals. Social sciences display broader variance and need tailored thresholds and contextual features.

Temporal patterns of dropout and intervention windows

Highest dropout counts cluster around transitions—finals and term breaks—so targeted outreach at those points yields higher marginal impact.

Other practical findings: studentship aggregates and difficulty clusters improve generalization; department-level retraining raises model performance; longitudinal data stabilizes results. Continuous learning loops that feed outcomes back into feature design and thresholding keep systems effective over time.

“Align intervention capacity with windows where marginal impact is highest; preserve early signals rather than overwrite them.”

Operationalizing Interventions in U.S. Institutions

A practical intervention plan turns risk scores into timely outreach, services, and measurable outcomes. This section describes how institutions map analytics to real advising work and student supports so efforts translate into improved retention.

Integrating alerts into advising and student success systems

Integrate SIS and LMS feeds for nightly refreshes and near‑real‑time alerts during critical weeks. Map risk scores to case creation, outreach templates, and escalation policies.

Apply precision-oriented prediction correctors to prioritize cases when staff capacity is limited. Ensure consent and data governance guide any automated workflow.
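One simple way to map scores to workflow steps is to rank students by risk, open advisor cases up to the available capacity, and route the remaining elevated-risk students to automated nudges. The tier names, the `nudge_threshold` value, and the capacity figure below are assumptions for illustration, not a prescribed policy.

```python
def triage(risk_scores, advisor_capacity, nudge_threshold=0.3):
    """risk_scores: {student_id: predicted dropout probability}.
    Returns {student_id: tier} given how many cases advisors can open."""
    ranked = sorted(risk_scores, key=risk_scores.get, reverse=True)
    tiers = {}
    for position, sid in enumerate(ranked):
        if risk_scores[sid] < nudge_threshold:
            tiers[sid] = "monitor"  # below the action threshold
        elif position < advisor_capacity:
            tiers[sid] = "advisor_case"  # highest risk, within staff capacity
        else:
            tiers[sid] = "automated_nudge"  # elevated risk, beyond capacity
    return tiers


# Hypothetical scores and a capacity of two advisor cases.
risk_scores = {"s1": 0.91, "s2": 0.74, "s3": 0.55, "s4": 0.12}
tiers = triage(risk_scores, advisor_capacity=2)
```

Keeping capacity as an explicit parameter makes the trade-off visible: raising it opens more human cases, lowering it shifts students to lighter-touch outreach.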

Designing targeted support for at-risk cohorts

Segment by cohort—first‑year, transfer, and online—and by field of study to tailor interventions. Offer tutoring, study skills coaching, financial counseling, mental health referrals, and time‑management resources.

Enable advisors with dashboards, SHAP explanations, and training on culturally responsive outreach. Use student-centered messages that emphasize support rather than surveillance.

“Operational success combines timely systems, clear playbooks, and feedback loops from advisors to data teams.”

Measure impact: track contact rates, service uptake, and course pass‑rate improvements. Feed results back to refine thresholds, features, and playbooks under institutional governance and U.S. policy standards.

Ethics, Privacy, and Equity Considerations

A robust governance layer keeps learner data protected while enabling timely support for students.

Data governance and anonymization practices

Access controls, minimization, and anonymization must be in place before any operational deployment. Limit raw records to a few authorized roles and keep identifiers separated from analytic tables.
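Separating identifiers from analytic tables is often done with a keyed hash, so the same student always maps to the same pseudonym but the mapping cannot be reversed without the key. A minimal sketch using Python's standard `hmac` module follows; the key and student ID shown are placeholders, and real key storage and rotation are outside the scope of this example.

```python
import hashlib
import hmac


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Return a stable, keyed pseudonym for a student identifier."""
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Placeholder values: the key must come from a managed secret store in practice.
key = b"replace-with-managed-secret"
pseudonym = pseudonymize("S1234567", key)
```

Analytic tables would store only the pseudonym, while the key stays with the small set of roles authorized to re-identify students for outreach.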

Labels for dropout come from enrollment records over time; document how those labels were built so audits can trace misclassification and corrective steps.

Bias monitoring across demographic groups

Regular fairness checks compare recall, precision, and error rates across groups. That analysis helps detect disparate impact and guides threshold tuning by program.

Explainability artifacts support ethics boards and faculty oversight. SHAP-style summaries or feature audits link model factors to actionable interventions.

“Prioritize supportive, non‑punitive resources and transparency with students where policy allows.”

| Area | Practice | Outcome |
|---|---|---|
| Governance | Role-based access, logging | Reduced exposure of sensitive data |
| Anonymization | Pseudonymize identifiers; retain lineage | Auditable, privacy-preserving analysis |
| Fairness | Group-level metric monitoring | Equitable advisor action |
| Accountability | Opt-out policies & independent audits | Trust and legal compliance |

Limitations, External Validity, and Future Directions

Careful reflection on limits sharpens practical plans. This section outlines what teams must test before scaling and where research can strengthen real-world outcomes.

Generalizing across LMS platforms and modalities

Porting analytics between learning systems demands careful alignment of schemas and feature semantics. Different LMS platforms log events with varied names and granularity, so direct transfer often fails.

Practical steps:

- Map event taxonomies and validate feature parity across platforms.

- Run cross-campus pilots and benchmark with a held-out cohort before full deployment.

- Tune modality-specific features for MOOCs versus campus courses.
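Event taxonomy mapping can start as a per-platform lookup that translates raw event names into one canonical vocabulary and reports anything left unmapped. The platform names and event names below are entirely hypothetical and do not correspond to any real LMS export.

```python
# Hypothetical raw-event -> canonical-event mappings for two platforms.
EVENT_MAP = {
    "platform_a": {
        "course_viewed": "login",
        "assign_submit": "submission",
        "forum_post_created": "forum_post",
    },
    "platform_b": {
        "session_start": "login",
        "submission.created": "submission",
        "discussion.reply": "forum_post",
    },
}


def normalize_events(platform, raw_events):
    """Translate raw event names; return (canonical events, unmapped names)."""
    mapping = EVENT_MAP[platform]
    normalized, unmapped = [], set()
    for name in raw_events:
        if name in mapping:
            normalized.append(mapping[name])
        else:
            unmapped.add(name)  # surface gaps instead of silently dropping them
    return normalized, unmapped


def feature_parity(platforms):
    """Canonical events that every listed platform is able to produce."""
    return set.intersection(*(set(EVENT_MAP[p].values()) for p in platforms))


normalized, unmapped = normalize_events("platform_a", ["course_viewed", "quiz_start"])
shared = feature_parity(["platform_a", "platform_b"])
```

Only features built from the shared canonical events are safe to carry between platforms; unmapped events are candidates for taxonomy review before a pilot.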

Enhancing models with historical and longitudinal data

Multi-year records stabilize signals and improve model performance over longer horizons. Longitudinal analysis also helps teams account for returning students and cohort drift.

Future research should be published in peer-reviewed journals or conference proceedings to aid reproducibility. Semi-supervised, transfer, and active learning methods can help when labeled dropout ground truth arrives late.

“Rigorous external validation and shared reporting standards will move this approach from pilot to practice.”

| Focus | Action | Outcome |
|---|---|---|
| Portability | Schema mapping, pilot tests | External validity |
| Data | Multi-year archives, feature stores | Stability & performance |
| Research | Peer review, standardized reports | Comparability across institutions |

Conclusion

Summing up, the path forward pairs robust models with clear governance and advisor workflows for measurable retention gains. This brief shows that predicting student dropout is feasible when teams combine temporal modeling, difficulty clustering, and studentship aggregation.

Practical next steps include selecting suitable machine learning algorithms—tree ensembles or neural networks—handling class imbalance, and calibrating thresholds to match advisor capacity. Explainability with SHAP and advisor dashboards builds trust and guides helpful interventions.

Ethics, privacy, equity monitoring, and MLOps are foundational. With longitudinal data, A/B testing, and careful validation across departments and platforms, higher education can scale what works and materially reduce student dropout.

FAQ

Who is this case study intended for?

This case study targets higher education leaders, student-success teams, learning engineers, data scientists, and policy makers who seek proven strategies to identify and support at-risk students using predictive modeling and educational data mining.

What will readers learn immediately?

Readers will learn practical steps for building models that flag students at risk of leaving courses—covering data sources, time windows, feature engineering, suitable algorithms like random forest and XGBoost, and how to convert model outputs into timely interventions.

What core problem does the project address?

The project addresses early student withdrawal from online courses in higher education by surfacing actionable signals from LMS activity, demographic and achievement records, and temporal patterns to enable fast, targeted support.

Which data sources are most valuable for predicting student attrition?

High-value sources include pre-entry demographics and prior achievement, LMS clickstreams and engagement logs, graded outcomes, assessment timelines, and cohort-level metadata such as course difficulty and modality.

When should institutions extract features for best early warning?

Useful extraction windows include the first 4 and 8 weeks of a semester, around major exams, and mid-semester checkpoints; combining cumulative and incremental features improves sensitivity to changing behavior.

Which modeling techniques work best for tabular educational data?

Tree-based ensembles—random forest and XGBoost—are strong baselines for tabular data. They balance predictive power and interpretability. Neural networks and sequence models (RNN/LSTM) help when temporal or richer interaction patterns matter.

How should teams handle imbalanced labels where dropout is rare?

Apply techniques like SMOTE or targeted resampling, class weighting, and ensemble strategies. Evaluate models with metrics suited for imbalance (precision, recall, F1, and AUC-ROC) rather than overall accuracy.

What evaluation metrics matter for operational impact?

Prioritize recall for early identification, precision to limit false alarms, and AUC-ROC for ranking risk. Also track intervention-specific operational metrics: referral uptake, retention lift, and cost per successful support.

How can model outputs gain stakeholder trust?

Use explainability methods such as SHAP to surface which features drive predictions. Present clear, contextual explanations to advisors and instructors and provide confidence intervals and actionable recommendations alongside risk scores.

How do you balance early detection with model accuracy?

Trade-offs are inevitable: earlier windows give less data but more time to act. Build multi-horizon models (4-week, 8-week, and exam checkpoints), tune thresholds by intervention capacity, and monitor real-world outcomes to adjust timing.

What feature engineering techniques reduce sparsity across courses?

Cluster courses by difficulty or content with k-means, create aggregated “studentship” metrics for cognitive and social engagement, and engineer cohort-normalized features to handle enrollment heterogeneity.

How should institutions integrate alerts into operations?

Feed risk scores into advising platforms and student-success dashboards with clear action playbooks. Automate low-effort nudges and reserve human outreach for high-risk, high-impact cases to maximize resource efficiency.

What privacy and ethical controls are essential?

Implement strict data governance: anonymization, role-based access, audit logs, and bias monitoring across demographic groups. Involve ethics boards and legal counsel when designing interventions that affect students.

Can models generalize across LMS platforms and disciplines?

Generalization varies. Models trained on one LMS or faculty may lose performance elsewhere. Improve external validity with transfer learning, standardized feature sets, and validations across multiple faculties and semesters.

What are realistic expectations for impact?

Expect modest predictive lift early in adoption and improving results as data accumulates. Success looks like higher advisor engagement, measurable retention gains in targeted cohorts, and refinements that reduce false positives over time.

Which operational metrics should teams track post-deployment?

Track model performance (precision, recall, AUC), intervention metrics (referrals made, support accepted), outcome metrics (retention, pass rates), and process metrics (latency from flag to outreach).