Every second, over 127,000 unique interactions occur across digital platforms—from financial transactions to healthcare alerts. Yet less than 15% of organizations fully leverage these temporal signals to predict outcomes. This gap highlights a critical opportunity: transforming raw chronological records into strategic assets.

Advanced analytical frameworks now empower professionals to decode complex sequences of actions. Industries like retail and logistics use these methods to forecast demand spikes, while healthcare systems predict patient admission rates with 89% accuracy. The right tools turn chaotic timelines into clear roadmaps for action.

Python’s ecosystem stands at the forefront of this revolution. Its libraries simplify tasks like pattern recognition and anomaly detection, even for teams without deep technical expertise. By combining statistical rigor with computational power, businesses gain foresight that reshapes decision-making processes.

Key Takeaways

- Real-time analysis cuts response times by up to 40% in dynamic environments

- Python’s specialized tools streamline temporal pattern identification

- Cross-industry adoption drives measurable efficiency improvements

- Predictive frameworks convert historical records into future-ready strategies

- Scalable solutions adapt to datasets of any size or complexity

Introduction to Event Data Modeling

Modern systems generate digital footprints faster than ever—a single mobile app interaction can trigger 15+ timestamped actions in milliseconds. These chronological breadcrumbs reveal hidden relationships between user behaviors and system responses.

Capturing Dynamic System Behavior

Unlike spreadsheet-based records, event streams document when things happen, not just what occurred. Retail platforms track click sequences to predict cart abandonment, while smart factories monitor equipment signals to prevent downtime. This time-aware perspective helps teams spot trends invisible in static snapshots.

Evolving Analytical Requirements

Basic aggregation tools struggle with three challenges of modern event analysis:

| Aspect | Traditional Approach | Modern Solution |
|---|---|---|
| Data Velocity | Batch processing | Real-time pipelines |
| Pattern Complexity | Fixed rules | Adaptive algorithms |
| Decision Speed | Hours-old insights | Instant triggers |

Financial institutions now detect fraud patterns in payment streams 68% faster using sequence-aware models. Healthcare networks apply similar methods to predict patient readmission risks from treatment timelines.

Understanding the Fundamentals of Event Data

At the core of modern analytics lies a simple truth: timely actions create competitive advantages. Systems tracking user interactions or machine operations produce valuable chronological records – but only when properly interpreted.

Key Concepts and Definitions

Four pillars form event-driven architectures:

- Producers: Sources like IoT sensors or API feeds

- Consumers: Algorithms transforming raw signals into alerts

- Channels: High-speed messaging pipelines

- Processors: Filters enriching or routing information

Each occurrence carries three essential elements: precise timing markers, unique source identifiers, and contextual payloads. Financial markets use this structure to track price fluctuations, while e-commerce platforms monitor clickstream patterns.

Standardized schemas ensure different systems speak the same language. A healthcare alert might include patient IDs, vital signs, and treatment histories – all following strict formatting rules. This consistency enables machines to automatically detect anomalies like irregular heart rhythms.

“The real power emerges when we connect isolated occurrences,” notes a leading analytics architect. Taxonomies help organize related activities, revealing how login attempts correlate with purchase behaviors or how machine errors precede system failures.

Handling out-of-order records remains a critical challenge. Specialized buffers and sequencing algorithms help reconstruct timelines accurately – a requirement for reliable predictions in fast-moving environments like stock trading or emergency response.
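
As a rough illustration of the buffering idea, the sketch below holds events in a min-heap and releases them once a watermark (the latest timestamp seen minus an allowed lateness) has passed them. The `Event` fields, the `reorder` function, and the `max_delay` parameter are illustrative assumptions, not part of any particular streaming framework.

```python
import heapq
from dataclasses import dataclass, field
from typing import Any, Iterable, Iterator


@dataclass(order=True)
class Event:
    timestamp: float                      # event time in seconds (assumed unit)
    source: str = field(compare=False)    # hypothetical source identifier
    payload: Any = field(compare=False)   # contextual payload


def reorder(events: Iterable[Event], max_delay: float) -> Iterator[Event]:
    """Yield events in timestamp order, tolerating lateness up to max_delay.

    Events that arrive more than max_delay behind the newest timestamp seen
    are still emitted, but may come out after later events.
    """
    heap = []
    watermark = float("-inf")
    for event in events:
        heapq.heappush(heap, event)
        # The watermark trails the newest timestamp by the allowed lateness.
        watermark = max(watermark, event.timestamp - max_delay)
        while heap and heap[0].timestamp <= watermark:
            yield heapq.heappop(heap)
    # End of stream: flush whatever is still buffered, in order.
    while heap:
        yield heapq.heappop(heap)


# Arrival order differs from event-time order.
arrivals = [
    Event(1.0, "sensor-a", {"v": 1}),
    Event(3.0, "sensor-b", {"v": 2}),
    Event(2.0, "sensor-a", {"v": 3}),
    Event(5.0, "sensor-b", {"v": 4}),
    Event(4.0, "sensor-a", {"v": 5}),
]
ordered = list(reorder(arrivals, max_delay=2.0))
print([e.timestamp for e in ordered])
```

A larger `max_delay` tolerates later arrivals at the cost of holding events longer before they are released.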

Exploring Python’s Data Modeling Ecosystem

Over 75% of data teams now rely on Python for temporal analysis – a testament to its adaptable toolkit. The language’s ecosystem thrives through specialized modules that transform raw information into actionable intelligence.

Essential Libraries and Tools

Three core components form the backbone of temporal analysis workflows. Pandas handles complex time-series operations through DataFrame structures, enabling millisecond-level pattern detection. Its resampling methods reveal trends in irregular event streams.
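
To make the resampling idea concrete, here is a minimal sketch that buckets an irregular event stream into one-second bins. The timestamps and the `value` column are invented for illustration.

```python
import pandas as pd

# Hypothetical irregular event log: millisecond timestamps plus a numeric payload.
events = pd.DataFrame(
    {
        "timestamp": pd.to_datetime(
            [
                "2024-01-01 09:00:00.120",
                "2024-01-01 09:00:00.870",
                "2024-01-01 09:00:02.300",
                "2024-01-01 09:00:02.310",
                "2024-01-01 09:00:04.950",
            ]
        ),
        "value": [10.0, 12.0, 11.0, 15.0, 9.0],
    }
).set_index("timestamp")

# Bucket events into regular 1-second bins; empty bins get count 0 and mean NaN.
per_second = events["value"].resample("1s").agg(["count", "mean"])
print(per_second)
```

Seconds with no events still appear in the result, which makes gaps in the stream visible instead of hiding them.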

NumPy accelerates mathematical operations with optimized array processing. Teams working with sensor networks use its vectorization to process 10,000+ readings per second. For predictive tasks, Scikit-learn provides a broad set of estimators that can learn chronological patterns once events are turned into features such as lags and rolling counts.

Integrating Pandas, NumPy, and Scikit-Learn

A typical workflow might import cleaned event logs into pandas for timestamp alignment. NumPy then calculates statistical baselines before passing features to Scikit-learn’s regression models. This integration cuts development time by 60% compared to fragmented tools.
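
The workflow described above might look roughly like the following sketch. The event log is synthetic, and the five-minute grid, the two lag features, and the choice of `LinearRegression` are assumptions made for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)

# Hypothetical cleaned event log: one row per event over a 4-hour window.
n_events = 2_000
offsets = np.sort(rng.uniform(0, 4 * 3600, size=n_events))  # seconds from start
log = pd.DataFrame(
    {
        "timestamp": pd.Timestamp("2024-01-01") + pd.to_timedelta(offsets, unit="s"),
        "event_id": np.arange(n_events),
    }
)

# 1) pandas: align events onto a regular 5-minute grid of counts.
counts = log.set_index("timestamp")["event_id"].resample("5min").count()

# 2) NumPy: compute a statistical baseline and standardize the counts.
values = counts.to_numpy(dtype=float)
baseline_mean, baseline_std = values.mean(), values.std()
z = (values - baseline_mean) / baseline_std

# 3) scikit-learn: regress each interval's count on the two previous intervals.
X = np.column_stack([z[1:-1], z[:-2]])  # lag-1 and lag-2 features
y = values[2:]
model = LinearRegression().fit(X, y)
print(model.coef_, model.intercept_)
```

Because these synthetic events are uniformly random, the fitted coefficients carry no real signal; the point is only how data moves between the three libraries.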

Advanced users extend capabilities through specialized modules. Statsmodels provides hypothesis testing for temporal correlations, while PyMC enables Bayesian forecasting. Apache Arrow bridges Python with other systems, maintaining speed during data transfers.

“The real magic happens when these tools work in concert, like an orchestra where each instrument enhances the others.”

Community-driven updates ensure continuous improvement. pandas has long stored timestamps at nanosecond resolution, which suits high-frequency trading analysis, and pandas 2.0 added support for second, millisecond, and microsecond resolutions, which widens the range of dates that can be represented. This collaborative approach keeps Python at the forefront of temporal modeling innovation.

Building Statistical Models for Event Analysis

Statistical frameworks transform raw signals into predictive insights when handling discrete outcomes. Traditional approaches stumble when predicting whether something will occur—like credit defaults or equipment failures. This demands specialized techniques that respect probability boundaries while maintaining interpretability.

Linear Models and Their Limitations

Standard regression excels with continuous values but falters with yes/no scenarios. Its additive combinations of parameters produce unbounded results—predictions might suggest 120% success rates or -15% failure probabilities. These nonsensical values reveal fundamental mismatches with binary outcomes.
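
A small numerical sketch shows the problem. The data are made up: `x` could be something like days past due and `y` marks whether the event occurred.

```python
import numpy as np

# Hypothetical binary outcomes against a single predictor.
x = np.array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9], dtype=float)
y = np.array([0, 0, 0, 0, 1, 0, 1, 1, 1, 1], dtype=float)

# Ordinary least squares fit of y ~ a + b * x.
design = np.column_stack([np.ones_like(x), x])
(a, b), *_ = np.linalg.lstsq(design, y, rcond=None)


def predict(x_new):
    """Linear prediction; nothing constrains it to the interval [0, 1]."""
    return a + b * np.asarray(x_new, dtype=float)


print(predict([-2, 0, 9, 12]))
```

The fitted line drops below 0 at the low end of `x` and rises above 1 at the high end, so its outputs cannot be read as probabilities.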

| Aspect | Linear Regression | Logistic Approach |
|---|---|---|
| Output Range | Unlimited | 0 to 1 |
| Error Distribution | Normal | Bernoulli |
| Interpretation | Direct effect | Odds ratios |

Implementing Logistic Regression for Binary Events

The logistic function solves boundary issues through mathematical transformation. By converting linear combinations into S-curve probabilities, it ensures every prediction stays within logical limits. Coefficients here represent changes in log-odds—a concept requiring careful translation into business terms.

Key implementation steps involve:

- Mapping predictor variables to logit space

- Applying maximum likelihood estimation

- Converting coefficients to probability impacts
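
The three steps above can be sketched with NumPy alone. This is a minimal illustration that uses Newton-Raphson for the maximum likelihood step on made-up data; production code would normally rely on a library such as scikit-learn or statsmodels. The names `sigmoid` and `fit_logistic` are hypothetical helpers.

```python
import numpy as np


def sigmoid(z):
    """Map log-odds (logit space) to probabilities in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic(X, y, n_iter=25):
    """Maximum likelihood fit via Newton-Raphson; X must include an intercept column."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ beta)
        gradient = X.T @ (y - p)                      # score of the log-likelihood
        hessian = X.T @ (X * (p * (1 - p))[:, None])  # observed information
        beta += np.linalg.solve(hessian, gradient)
    return beta


# Hypothetical data: a single predictor with overlapping classes (not separable).
x = np.array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9], dtype=float)
y = np.array([0, 0, 0, 0, 1, 0, 1, 1, 1, 1], dtype=float)
X = np.column_stack([np.ones_like(x), x])

beta = fit_logistic(X, y)

# Translate the slope: odds ratio per unit of x, and the probability change from x=4 to x=5.
odds_ratio = np.exp(beta[1])
p_at_4 = sigmoid(beta[0] + beta[1] * 4)
p_at_5 = sigmoid(beta[0] + beta[1] * 5)
print(odds_ratio, p_at_5 - p_at_4)
```

The odds ratio is constant across `x`, while the change in probability depends on where along the S-curve it is evaluated. This is why coefficients need a reference point when translated into business terms.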

“Logistic regression bridges human intuition with mathematical rigor—it tells stories through odds ratios while respecting reality’s constraints.”

Regularization techniques prevent overfitting in complex scenarios. Cross-validation checks ensure models generalize across time periods and datasets. Together, these practices create robust tools for forecasting discrete occurrences with statistical confidence.
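
One possible way to combine both practices in scikit-learn is sketched below. The data are synthetic, and the regularization strength `C=0.5`, the five splits, and the ROC AUC metric are arbitrary choices for illustration. `TimeSeriesSplit` keeps every validation fold later in time than its training fold, assuming rows are sorted chronologically.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import TimeSeriesSplit, cross_val_score

rng = np.random.default_rng(42)

# Synthetic features; rows are assumed to be sorted by event time.
n = 600
X = rng.normal(size=(n, 5))
true_logit = 1.5 * X[:, 0] - 1.0 * X[:, 1]  # only two of the five features matter
y = (rng.uniform(size=n) < 1.0 / (1.0 + np.exp(-true_logit))).astype(int)

# L2 regularization is the default; a smaller C means a stronger penalty.
model = LogisticRegression(C=0.5, max_iter=1000)

# Each split trains on an initial segment and validates on the segment that follows it.
cv = TimeSeriesSplit(n_splits=5)
scores = cross_val_score(model, X, y, cv=cv, scoring="roc_auc")
print(scores.round(3), scores.mean().round(3))
```

A shuffled K-fold split would let the model train on events that occur after those it is validated on, which tends to overstate performance on chronological data.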

Modeling Event Data with Python

Bayesian methods transform uncertainty into actionable insights by systematically updating beliefs with fresh evidence. This approach shifts focus from single-point estimates to probabilistic ranges, offering decision-makers nuanced perspectives on potential outcomes.

Implementing Bayesian Updates and Posterior Simulations

Prior distributions act as mathematical containers for domain expertise. A financial risk model might start with conservative estimates for loan default probabilities, while healthcare systems could initialize infection spread parameters based on historical outbreaks.

The core computation is Bayes’ rule: the posterior probability of each parameter value is proportional to its prior probability multiplied by the likelihood of the observed evidence. Consider a logistic relationship where outcomes depend on two key factors. Through grid approximation, analysts explore thousands of potential parameter combinations, weighting each by how well it explains real-world data.
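
A minimal grid approximation for such a model might look like the sketch below. It assumes two standardized factors, no intercept, and independent Normal(0, 2) priors on the coefficients; the data are simulated so the result can be sanity-checked against known values.

```python
import numpy as np

rng = np.random.default_rng(1)

# Simulated data from a logistic model with two factors (coefficients are assumptions).
n = 300
X = rng.normal(size=(n, 2))
true_b = np.array([1.5, -1.0])
y = (rng.uniform(size=n) < 1.0 / (1.0 + np.exp(-(X @ true_b)))).astype(float)

# Grid of candidate coefficient pairs: 121 x 121 = 14,641 combinations.
b1 = np.linspace(-3, 3, 121)
b2 = np.linspace(-3, 3, 121)
B1, B2 = np.meshgrid(b1, b2, indexing="ij")

# Bernoulli log-likelihood at every grid point: sum of y*z - log(1 + exp(z)).
logits = B1[..., None] * X[:, 0] + B2[..., None] * X[:, 1]
log_lik = (y * logits - np.logaddexp(0.0, logits)).sum(axis=-1)

# Independent Normal(0, 2) priors on both coefficients (up to an additive constant).
log_prior = -0.5 * (B1**2 + B2**2) / 2.0**2

# Posterior weights: normalize prior x likelihood over the grid, working in log space.
log_post = log_lik + log_prior
post = np.exp(log_post - log_post.max())
post /= post.sum()

b1_mean = (post * B1).sum()
b2_mean = (post * B2).sum()
print(b1_mean, b2_mean)
```

Grid approximation only scales to a handful of parameters, because the number of grid points grows exponentially with the dimension. That limitation is the usual motivation for moving to sampling-based tools.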

Python’s PyMC library simplifies complex simulations with intuitive syntax. A typical workflow might:

- Define prior distributions for key variables

- Calculate likelihoods using observed outcomes

- Generate posterior distributions via sampling

“Bayesian frameworks turn hunches into quantifiable probabilities. They’re truth-seeking machines that improve with every new data point.”

As observations accumulate, the posterior typically narrows, so credible intervals tighten around the values the data support. Priors also act as a form of regularization, which helps limit overfitting when observations are sparse or irregular. Advanced techniques like variational inference extend these benefits to streaming data scenarios, where sampling-based methods struggle with scale.
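
The narrowing effect is easiest to see in a conjugate example. The sketch below uses a Beta prior on a default rate and updates it with batches of binary outcomes. The prior Beta(2, 18) and the batch counts are invented for illustration.

```python
from math import sqrt


def update(alpha, beta, successes, failures):
    """Conjugate Beta-Binomial update: add observed counts to the prior parameters."""
    return alpha + successes, beta + failures


def beta_mean_sd(alpha, beta):
    """Mean and standard deviation of a Beta(alpha, beta) distribution."""
    total = alpha + beta
    mean = alpha / total
    sd = sqrt(alpha * beta / (total**2 * (total + 1)))
    return mean, sd


# Assumed prior: Beta(2, 18), i.e. a prior mean default rate of 10%.
alpha, beta = 2.0, 18.0
batches = [(3, 47), (6, 94), (11, 189)]  # hypothetical (defaults, non-defaults) per batch

history = [beta_mean_sd(alpha, beta)]
for defaults, non_defaults in batches:
    alpha, beta = update(alpha, beta, defaults, non_defaults)
    history.append(beta_mean_sd(alpha, beta))

for mean, sd in history:
    print(f"mean={mean:.4f}  sd={sd:.4f}")
```

In this example the posterior standard deviation shrinks after every batch, while the mean moves from the prior guess toward the observed rate.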

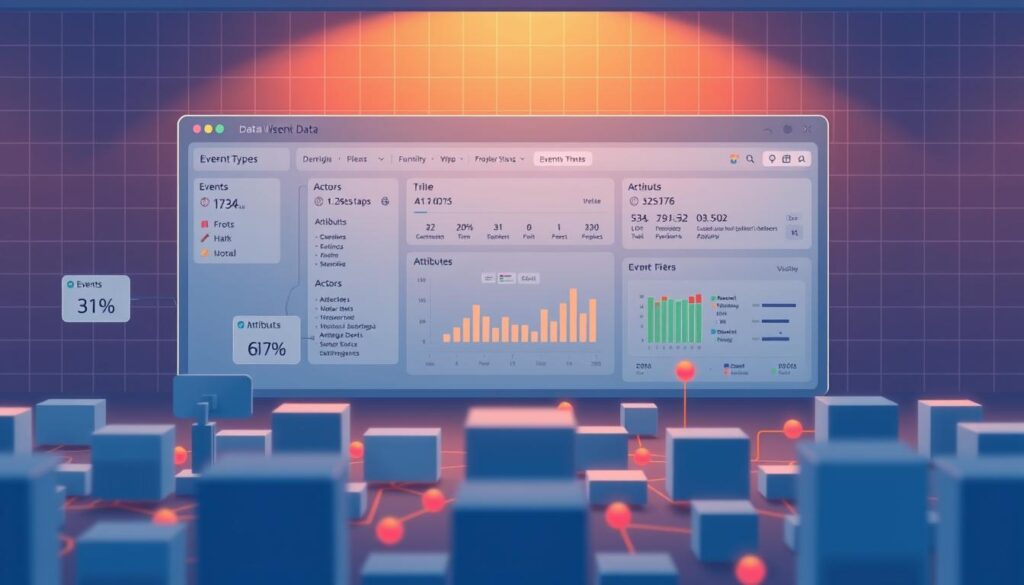

Implementing Event-Driven Architectures for Real-Time Trading

Financial markets operate at light speed—split-second decisions determine millions in gains or losses. Event-driven systems empower traders to act on market shifts instantly. These architectures process streaming information through interconnected components that scale with demand.

Setting Up Event Producers and Consumers

Producers generate standardized market signals containing essential details: asset symbols, price points, volumes, and microsecond timestamps. A well-designed MarketEvent class ensures consistency across trading platforms. Python’s dataclasses simplify schema creation while maintaining performance.

Consumers transform raw signals into executable insights. Strategy engines analyze price thresholds, volume spikes, and historical patterns. “The true value emerges when actions trigger automatically—like stop-loss orders executing before human traders blink,” explains a quantitative analyst at a top hedge fund.
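
A minimal sketch of the producer schema and a consumer is shown below. The `MarketEvent` fields follow the details listed above. The `ThresholdStrategy` class, the symbols, and the stop level are hypothetical, and a real strategy engine would place orders rather than append to a list.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class MarketEvent:
    """Standardized market signal emitted by a producer."""
    symbol: str
    price: float
    volume: int
    timestamp: datetime  # timezone-aware; datetime carries microsecond resolution


class ThresholdStrategy:
    """Hypothetical consumer: records a SELL signal when price hits a stop level."""

    def __init__(self, stop_prices):
        self.stop_prices = dict(stop_prices)
        self.signals = []

    def on_event(self, event):
        stop = self.stop_prices.get(event.symbol)
        if stop is not None and event.price <= stop:
            self.signals.append(("SELL", event.symbol, event.price))


now = datetime.now(timezone.utc)
stream = [
    MarketEvent("ACME", 101.0, 500, now),
    MarketEvent("ACME", 98.5, 1200, now),
    MarketEvent("OTHER", 10.0, 100, now),
]

strategy = ThresholdStrategy({"ACME": 99.0})
for event in stream:
    strategy.on_event(event)
print(strategy.signals)
```

Freezing the dataclass makes events immutable, so several consumers can share the same event object without one altering what another sees.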

Leveraging Messaging Systems Like Kafka

Apache Kafka can handle over 1 million messages per second in trading environments when clusters are sized appropriately. Its distributed, replicated log keeps messages durable through broker failures and volatility spikes. Key advantages include:

- Persistent storage for audit trails

- Horizontal scaling during peak loads

- Exactly-once processing semantics, when idempotent producers and transactions are enabled

| Traditional Systems | Event-Driven Approach |
|---|---|
| Batch processing delays | Sub-10ms latency |
| Fixed capacity limits | Elastic scaling |
| Single failure points | Fault-tolerant clusters |

Python’s confluent-kafka library bridges trading logic with market data feeds. Developers serialize events as JSON for universal compatibility while maintaining millisecond-level response times. This combination supports complex strategies—from arbitrage detection to risk mitigation—without sacrificing speed.
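
The JSON step can be sketched without a running broker. The example below round-trips a small `MarketEvent` dataclass through UTF-8 JSON bytes. The `serialize` and `deserialize` helpers are illustrative, and the topic name in the comment is a placeholder.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class MarketEvent:
    symbol: str
    price: float
    volume: int
    timestamp: datetime


def serialize(event):
    """Encode an event as UTF-8 JSON bytes, with the timestamp in ISO 8601 form."""
    record = asdict(event)
    record["timestamp"] = event.timestamp.isoformat()
    return json.dumps(record).encode("utf-8")


def deserialize(raw):
    """Decode JSON bytes back into a MarketEvent."""
    record = json.loads(raw.decode("utf-8"))
    record["timestamp"] = datetime.fromisoformat(record["timestamp"])
    return MarketEvent(**record)


event = MarketEvent("ACME", 101.25, 500, datetime(2024, 1, 2, 9, 30, 0, 123456, tzinfo=timezone.utc))
raw = serialize(event)
restored = deserialize(raw)
print(raw)

# With confluent-kafka, bytes like these would typically be passed as the message value,
# e.g. producer.produce("market-events", value=raw), where the topic name is a placeholder.
```

JSON keeps messages readable by consumers written in any language, at the cost of larger payloads than a binary format.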

Setting Up Your Python Environment for Event Modeling

Proper environment configuration separates successful projects from unstable experiments. Developers gain precise control over library versions—a critical factor when replicating analyses across teams. Isolated workspaces prevent conflicts between system-wide packages and project-specific requirements.

Creating Virtual Environments and Installing Dependencies

Start by building dedicated sandboxes for temporal analysis. The command `python3 -m venv trading_env` constructs a clean workspace. Activation scripts like `source trading_env/bin/activate` (or `trading_env\Scripts\activate` on Windows) switch contexts without affecting other projects.

Essential tools install through concise pip commands. A single `pip install pandas numpy scikit-learn confluent-kafka` equips environments with time-series manipulation and streaming capabilities. Version pinning ensures repeatable installations, which is vital when collaborating across organizations.

Advanced teams document dependencies using requirements.txt files. These manifests capture exact library editions, enabling seamless transitions from development laptops to cloud servers. Containerization takes this further, bundling operating system settings with application code.
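
The usual way to capture exact versions is `pip freeze`. As a complementary sketch, the helper below builds pinned lines for a chosen set of packages from inside Python and reports any that are not installed. The function name `pinned_requirements` and the package list are assumptions for illustration.

```python
from importlib import metadata


def pinned_requirements(packages):
    """Return ('name==version' lines, missing names) for the given package names."""
    pinned, missing = [], []
    for name in packages:
        try:
            pinned.append(f"{name}=={metadata.version(name)}")
        except metadata.PackageNotFoundError:
            missing.append(name)
    return pinned, missing


# Packages this project is assumed to depend on.
wanted = ["pandas", "numpy", "scikit-learn", "confluent-kafka"]
pinned, missing = pinned_requirements(wanted)

print("\n".join(pinned))
if missing:
    print("not installed:", ", ".join(missing))
```

Writing the `pinned` lines to requirements.txt records only the project's direct dependencies, whereas `pip freeze` also records everything they pull in.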

Consistency transforms environments from technical necessities into strategic advantages. When every team member uses identical configurations, debugging becomes faster and results gain credibility. Proper setup turns chaotic installations into precision instruments for temporal analysis.

FAQ

What distinguishes event data from traditional time-series datasets?

Event data captures timestamped actions or occurrences with contextual properties, such as user interactions or system alerts, and typically arrives at irregular intervals. Time-series data focuses on sequential measurements, usually sampled at regular intervals. This structural difference demands specialized modeling techniques to handle irregular intervals and categorical variables.

Why are Pandas and NumPy foundational for event analysis in Python?

Pandas enables efficient manipulation of timestamped records through DataFrame structures, while NumPy optimizes numerical computations for large datasets. Together, they support feature engineering, filtering, and aggregation critical for preprocessing event streams before applying machine learning models.

When should Bayesian methods replace logistic regression for event prediction?

Bayesian approaches excel when uncertainty must be quantified, when datasets are small, or when models need to be updated as new data arrives. For scenarios like fraud detection, where prior probabilities evolve, Bayesian updating provides adaptability that a logistic regression fitted once lacks. The two are not mutually exclusive: a logistic model can itself be estimated with Bayesian methods.

How does Apache Kafka enhance real-time event-driven architectures?

Kafka acts as a distributed messaging system that scales event ingestion and processing. Its partitioned log structure allows parallel consumption, making it ideal for applications like algorithmic trading where low-latency responses to market events are non-negotiable.

What are best practices for configuring Python environments for event modeling?

Use virtual environments (venv or conda) to isolate dependencies. Prioritize libraries like pandas for data wrangling, PyMC (the `pymc` package, formerly `pymc3`) for Bayesian inference, and scikit-learn for machine learning pipelines. Pin dependency versions in requirements.txt to ensure reproducibility.

Can logistic regression handle multi-class event classification tasks?

While logistic regression inherently addresses binary outcomes, extensions like multinomial regression or one-vs-rest strategies enable multi-class categorization. However, complex event hierarchies often benefit more from ensemble methods like gradient-boosted trees or neural networks.