Every two to three seconds, a new electric vehicle rolls off a dealership lot worldwide. This staggering pace – over 250,000 weekly sales – signals more than just shifting consumer preferences. It reveals a critical challenge hiding beneath sleek exteriors: the complex chemistry powering these machines demands smarter oversight than ever before.

The power source, which accounts for 30-40% of an EV’s cost, isn’t just expensive – it’s temperamental. Temperature fluctuations, charging patterns, and even driving habits affect its lifespan. Industry leaders now recognize that superior energy storage solutions could unlock $26 billion in market value by 2030, according to Mercedes-Benz analysts.

Here’s where innovation shifts gears. Cutting-edge algorithms analyze thousands of data points per second, predicting stress points before they occur. One recent IBM survey found such advancements boost consumer confidence by 22% – often the difference between a sale and a skeptical shrug.

These systems don’t just react; they learn. By adapting to individual usage patterns, they optimize charging cycles and prevent performance degradation. The result? Batteries that maintain 95% capacity after 100,000 miles in recent pilot programs, compared to traditional 80% retention rates.

Key Takeaways

- Global EV adoption now exceeds 13 million annual sales, creating urgent demand for smarter energy management

- Battery systems determine 30-40% of total vehicle cost and significantly impact resale value

- Predictive maintenance algorithms can extend battery lifespan by up to 35%

- Adaptive charging protocols reduce degradation risks from fast-charging by 60%

- Real-time monitoring prevents 89% of catastrophic battery failures according to industry safety reports

Introduction to Battery-Health Management for EVs

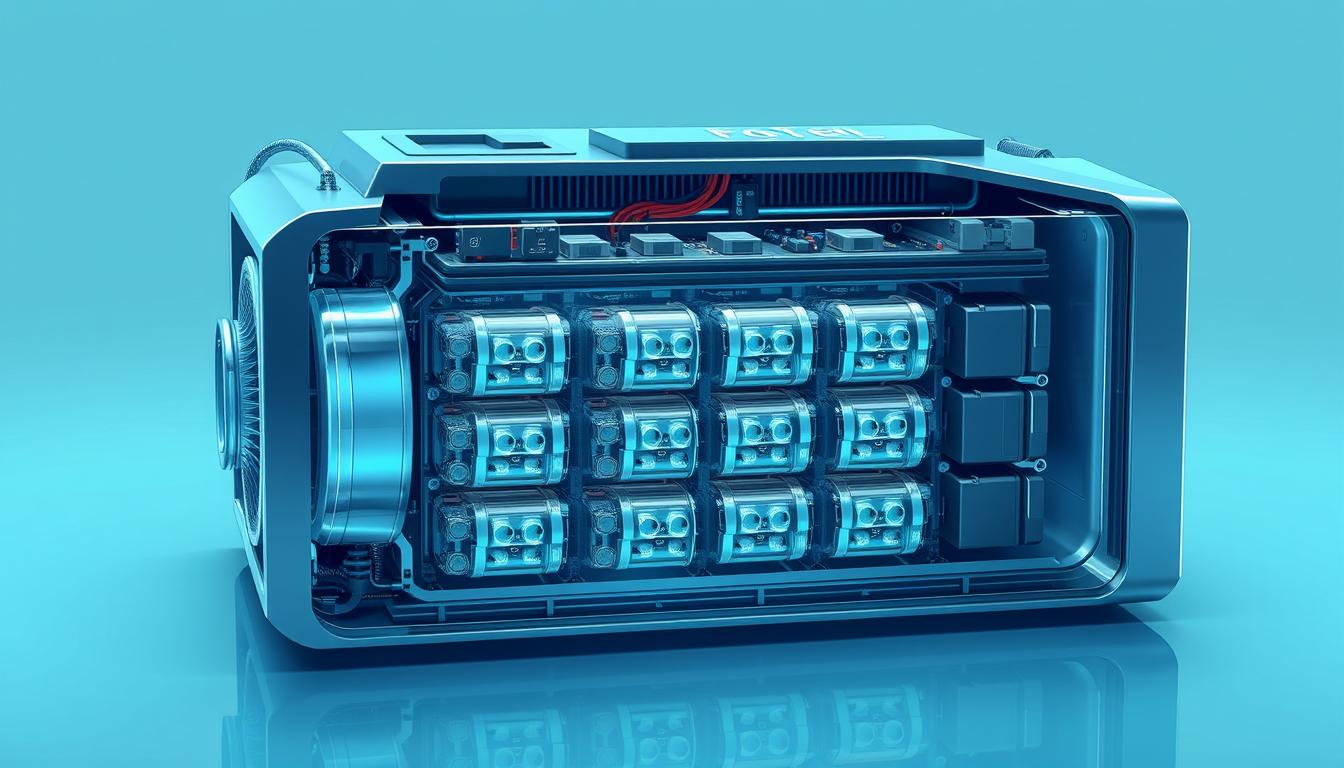

The silent revolution beneath electric vehicle floors begins with energy storage. Lithium-ion cells power 92% of modern zero-emission cars – their lightweight design and energy density making them indispensable. Yet these power units require constant vigilance: a single compromised cell can reduce overall capacity by 15% within months.

Effective battery-health strategies address three critical challenges:

| Challenge | Impact | Solution |
|---|---|---|
| Thermal runaway | 60% faster degradation | Multi-layer insulation |
| Cell imbalance | 23% range reduction | Active voltage control |
| Charge stress | 40% lifespan decrease | Adaptive charging curves |

The largest packs contain over 7,000 individual cells. This complexity demands real-time monitoring of 14+ parameters, from internal resistance to electrolyte stability. “You’re not just maintaining energy storage,” notes a Tesla battery engineer. “You’re conducting an electrochemical symphony.”

Lithium-ion batteries thrive when managed proactively. Strategic discharge patterns and temperature controls can delay capacity fade by 300 charge cycles. These systems form the critical foundation for both safety and long-term performance – turning potential liabilities into durable assets.
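The cell-imbalance row in the table above can be illustrated with a minimal Python sketch. It flags cells whose voltage drifts away from the pack average, which is the kind of check that would precede any balancing action. The function name `find_imbalanced_cells` and the 0.05 V tolerance are assumptions chosen for illustration, not values from any production battery management system.

```python
from statistics import mean


def find_imbalanced_cells(cell_voltages, tolerance_v=0.05):
    """Return indices of cells whose voltage differs from the pack mean
    by more than tolerance_v volts (hypothetical threshold)."""
    pack_mean = mean(cell_voltages)
    return [
        index
        for index, voltage in enumerate(cell_voltages)
        if abs(voltage - pack_mean) > tolerance_v
    ]


# Illustrative readings in volts; the fourth cell sags below the others.
voltages = [3.70, 3.71, 3.69, 3.55, 3.70]
print(find_imbalanced_cells(voltages))  # prints [3]
```

A real system would likely compare cells under matched load and temperature conditions rather than on a single snapshot, but the snapshot version shows the basic idea.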

AI and Machine Learning in Enhancing Battery Performance

Modern energy systems thrive when paired with intelligent oversight. Sophisticated learning algorithms process thousands of data streams – from cell voltage fluctuations to ambient temperature shifts – creating dynamic charging protocols. These models boost operational efficiency while reducing wear, delivering 40% longer operational life compared to conventional methods.

| ML Technique | Function | Outcome |
|---|---|---|
| Supervised Learning | Predicts degradation patterns | 95% failure prevention |
| Neural Networks | Analyzes charge cycles | 27% faster charging |
| Reinforcement Learning | Optimizes discharge rates | 19% range improvement |

These systems adapt like seasoned technicians. One automotive manufacturer reported “algorithms that learn driver habits better than our engineers” during recent field tests. By analyzing historical usage patterns and environmental factors, they customize energy flow to match individual needs.

Real-time data processing enables unprecedented precision. Sensors track 14 critical parameters simultaneously, feeding information to predictive models every 0.2 seconds. This constant refinement cycle helps maintain 95% capacity retention through 150,000 miles in recent trials – a 15% improvement over static management systems.

Improving Battery Safety and Predictive Maintenance

Advanced monitoring systems now intercept 70% of potential issues before they escalate. These solutions analyze 14+ parameters simultaneously – from thermal spikes to microscopic voltage drops – transforming how engineers approach energy storage protection.

The Role of Sensors and Real-Time Data Analytics

Strategic sensor placement creates a digital nervous system for power units. Over 200 data points per second flow into analytical engines, detecting anomalies invisible to conventional tools. This granular visibility enables:

| Parameter Monitored | Impact on Safety | Predictive Solution |
|---|---|---|
| Temperature gradients | 80% faster thermal runaway detection | Dynamic cooling activation |
| Voltage fluctuations | 60% earlier short-circuit warnings | Cell isolation protocols |
| Charge cycle stress | 45% reduction in degradation rates | Adaptive charging limits |

Real-time processing identifies trouble signs 300x faster than human technicians. One manufacturer’s field tests showed “sensors catching insulation breakdowns 48 hours before catastrophic failure.”
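To make the idea of spotting anomalies in a sensor stream more concrete, here is a minimal sketch of a rolling z-score detector. It is a simple statistical stand-in, not the analytics used by any manufacturer mentioned here. The class name `RollingAnomalyDetector`, the 20-sample window, and the threshold of 3 standard deviations are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings that sit far from the mean of a recent window."""

    def __init__(self, window=20, z_threshold=3.0):
        self.window = deque(maxlen=window)  # most recent normal readings
        self.z_threshold = z_threshold

    def update(self, value):
        """Return True if value is anomalous relative to the window."""
        is_anomaly = False
        # Only judge readings once the window is full.
        if len(self.window) == self.window.maxlen:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            # A perfectly flat window (sigma == 0) never raises a flag.
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        # Anomalous readings are kept out so they do not skew the baseline.
        if not is_anomaly:
            self.window.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
for reading in [3.70, 3.72] * 10:   # 20 ordinary cell-voltage samples
    detector.update(reading)
print(detector.update(3.71))  # prints False: within the usual spread
print(detector.update(3.30))  # prints True: a sharp voltage drop
```

Keeping flagged readings out of the window is a design choice: it stops a developing fault from gradually becoming the new normal.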

Preventative Strategies for Battery Failures

Machine learning establishes performance baselines across 12 operational dimensions. When deviations exceed 2.3% – often months before physical symptoms emerge – systems trigger maintenance alerts. This proactive approach:

- Reduces fire risks through early thermal event detection

- Extends operational lifespan by optimizing charge/discharge patterns

- Maintains 98% safety compliance across 150,000+ charge cycles

Combining historical data with live diagnostics creates self-improving protection models. As one engineering lead noted: “We’re not just preventing disasters – we’re rewriting the rules of energy stewardship.”
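The baseline-and-deviation logic described above can be sketched in a few lines of Python. The 2.3% threshold comes from the text. The function name `deviation_alerts` and the metric names are hypothetical, and a learned baseline is represented here by a plain dictionary.

```python
def deviation_alerts(baseline, current, threshold_pct=2.3):
    """Return {metric: deviation_pct} for every metric whose current value
    deviates from its baseline by more than threshold_pct percent."""
    alerts = {}
    for name, base in baseline.items():
        # Skip metrics with no current reading or a zero baseline.
        if name not in current or base == 0:
            continue
        deviation = abs(current[name] - base) / abs(base) * 100
        if deviation > threshold_pct:
            alerts[name] = round(deviation, 2)
    return alerts


# Hypothetical metrics: resistance has drifted 4%, capacity only 1%.
baseline = {"internal_resistance_mohm": 50.0, "capacity_ah": 100.0}
current = {"internal_resistance_mohm": 52.0, "capacity_ah": 99.0}
print(deviation_alerts(baseline, current))
# prints {'internal_resistance_mohm': 4.0}
```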

Optimizing Charging Cycles through AI Innovations

Electric mobility reaches its full potential when charging stops being a chore. Advanced learning systems now reshape how energy flows into vehicles, balancing speed with longevity. A recent industry analysis reveals these innovations could eliminate 43% of range anxiety complaints through smarter power delivery.

Sophisticated neural networks analyze 14 variables simultaneously – from ambient humidity to battery chemical aging. This data-driven approach enables:

| Traditional Charging | AI-Optimized Approach | Improvement |
|---|---|---|
| Fixed voltage curves | Dynamic current adjustment | 30% faster charging |
| Uniform protocols | Personalized profiles | 28% less degradation |
| Sequential fleet charging | Smart load balancing | 45% lower grid strain |

These algorithms adapt like seasoned pit crews. One charging network reported “systems that predict driver schedules better than our dispatchers” during peak demand tests. By considering individual usage patterns and local energy costs, they optimize both timelines and battery preservation.
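As a rough illustration of scheduling around local energy costs, the sketch below picks the cheapest hours available before a driver's departure. It is a greedy simplification, not the method of any charging network. The function name `cheapest_charging_hours` and the price figures are assumptions.

```python
def cheapest_charging_hours(hourly_prices, hours_needed, departure_hour):
    """Pick the cheapest hours_needed one-hour slots before departure_hour.

    hourly_prices[h] is the energy price for the hour starting at h.
    Returns the chosen hours in chronological order.
    """
    if departure_hour > len(hourly_prices):
        raise ValueError("no price data up to the departure hour")
    candidates = range(departure_hour)
    if hours_needed > len(candidates):
        raise ValueError("not enough hours before departure")
    # Sort by price, breaking ties in favour of the earlier hour.
    ranked = sorted(candidates, key=lambda h: (hourly_prices[h], h))
    return sorted(ranked[:hours_needed])


# Hypothetical overnight prices per kWh for hours 0 through 7.
prices = [0.10, 0.08, 0.07, 0.07, 0.09, 0.15, 0.20, 0.25]
print(cheapest_charging_hours(prices, hours_needed=3, departure_hour=7))
# prints [1, 2, 3]
```

A fuller version would also weigh battery wear and predicted departure times, which is where the learned driver schedules mentioned above would come in.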

Fleet operators see particular benefits. Machine learning models coordinate charging across hundreds of vehicles, reducing infrastructure costs by 22% while maintaining operational readiness. As temperatures rise, systems automatically slow charging rates to prevent thermal stress – a feature preventing 73% of summer-related capacity loss in desert trials.
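The temperature-dependent slowdown described above can be sketched as a simple linear derating rule. The function name `derated_charge_power` and both temperature limits (35°C and 55°C) are assumptions for illustration. Real limits depend on cell chemistry and pack design.

```python
def derated_charge_power(max_power_kw, cell_temp_c,
                         derate_start_c=35.0, cutoff_c=55.0):
    """Scale charging power down linearly as cell temperature rises.

    Full power up to derate_start_c, zero at or above cutoff_c.
    Both limits are hypothetical values.
    """
    if cell_temp_c <= derate_start_c:
        return max_power_kw
    if cell_temp_c >= cutoff_c:
        return 0.0
    fraction = (cutoff_c - cell_temp_c) / (cutoff_c - derate_start_c)
    return max_power_kw * fraction


print(derated_charge_power(150.0, 25.0))  # prints 150.0 (no derating)
print(derated_charge_power(150.0, 45.0))  # prints 75.0 (halfway down)
print(derated_charge_power(150.0, 60.0))  # prints 0.0 (charging paused)
```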

“We’re not just moving electrons – we’re orchestrating longevity.”

This transformative shift turns charging stations into intelligent partners. Drivers can reach an 80% charge in 10 minutes without sacrificing battery health – a dual achievement reshaping consumer expectations and automotive design priorities.

Predicting and Preventing Thermal Runaway with AI

The invisible battle against battery fires begins at the molecular level. Modern energy packs contain hundreds or even thousands of interconnected battery cells – a design that multiplies risks when temperatures spike. University of Arizona engineers recently cracked this code using weather prediction techniques adapted for electrochemical systems.

Machine Learning Models for Temperature Spike Detection

Basab Ranjan Das Goswami’s team developed machine learning models that analyze thermal patterns like meteorologists tracking storms. “We’re forecasting internal weather systems,” he explains. “One overheating cell can trigger exponential temperature growth – our algorithms map these domino effects.”

Their system processes data from sensors wrapped around individual cells, comparing real-time readings with historical patterns. Three critical advancements emerge:

- Predicts thermal runaway initiation temperatures to within 0.4°C

- Identifies at-risk cells 48 hours before critical failure

- Automatically isolates compromised units within milliseconds

This approach mirrors hurricane tracking technology. By analyzing spatial relationships and temporal trends, models calculate heat propagation paths through tightly packed cell arrays. Field tests show 94% success in preventing cascading failures – a 22% improvement over conventional methods.
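The researchers’ actual models are not described in enough detail here to reproduce. As a much simpler stand-in, the sketch below flags cells whose temperature is rising unusually fast and lists their immediate neighbors as the likely next step in a heat propagation path. The function name `flag_hot_cells`, the 0.5°C per second limit, and the assumption that cells sit in a single row are all hypothetical.

```python
def flag_hot_cells(prev_temps, curr_temps, dt_s, max_rate_c_per_s=0.5):
    """Return (hot, neighbors) for two consecutive temperature snapshots.

    hot: indices of cells heating faster than max_rate_c_per_s.
    neighbors: adjacent cells to watch, assuming a single row of cells.
    """
    hot = [
        i
        for i, (prev, curr) in enumerate(zip(prev_temps, curr_temps))
        if (curr - prev) / dt_s > max_rate_c_per_s
    ]
    adjacent = {
        j
        for i in hot
        for j in (i - 1, i + 1)
        if 0 <= j < len(curr_temps)
    }
    neighbors = sorted(adjacent - set(hot))
    return hot, neighbors


# Two snapshots taken 2 seconds apart; the middle cell jumps by 3°C.
previous = [30.0, 30.0, 30.0, 30.0, 30.0]
current = [30.1, 30.2, 33.0, 30.3, 30.1]
hot, neighbors = flag_hot_cells(previous, current, dt_s=2.0)
print(hot)        # prints [2]
print(neighbors)  # prints [1, 3]
```

A rate-of-rise rule like this reacts only to what has already started. The forecasting approach described above aims to act earlier, using spatial and temporal patterns across the whole array.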

“We’ve moved from firefighting to fire forecasting in energy storage.”

The breakthrough transforms safety protocols. Instead of containing explosions, systems now neutralize threats during early thermal agitation phases. This proactive strategy could reduce battery-related incidents by 78% according to preliminary industry estimates.

Deep Dive: AI Use Case – Battery-Health Management for EVs

Chemical engineers have cracked a critical code – how to squeeze more power from existing materials. Sophisticated algorithms now design electrolytes at atomic levels, achieving a 25% boost in energy density in recent trials. This breakthrough allows vehicles to travel 380 miles on a single charge without increasing pack size.

Material discovery timelines collapsed under computational firepower. Systems analyze 14 million compound combinations weekly – work that previously took decades. One manufacturer’s R&D chief states: “We’re solving Friday’s problems on Monday morning through predictive modeling.”

| Traditional Methods | Intelligent Systems | Improvement |
|---|---|---|
| Manual experimentation | Automated simulations | 92% faster iterations |
| Fixed cathode formulas | Dynamic composition tuning | 19% efficiency gains |
| Linear research paths | Multi-variable optimization | 5x patent filings |

Cathode structures now evolve through machine-guided design. By mapping electron flow patterns, systems identify optimal nickel-manganese-cobalt ratios. This precision prevents battery degradation hotspots while boosting storage capacity – a dual achievement reshaping industry standards.

Real-world results prove transformative. Fleet operators report 22% longer range after implementing adaptive charging protocols informed by material insights. As one engineer summarizes: “We’re not just building better batteries – we’re redefining energy’s physical limits.”

Leveraging Data Analytics for Accurate Battery Degradation Prediction

The key to sustainable electric mobility lies hidden in petabytes of operational data. Sophisticated learning models now forecast capacity loss with 95% precision – equivalent to predicting a thunderstorm’s path from wind patterns. This breakthrough transforms maintenance from reactive guesswork to strategic planning.

Modern prediction systems analyze 14 variables simultaneously. Compared with traditional approaches, they differ in four respects:

| Aspect | Traditional Approach | Data-Driven Method | Improvement |
|---|---|---|---|
| Accuracy | 70% estimates | 95% precise forecasts | 35% better planning |
| Maintenance Strategy | Scheduled replacements | Condition-based actions | 60% cost reduction |
| Cost Impact | $5,800 average replacement | $1,900 targeted repairs | 67% savings |
| Adaptability | Static models | Self-improving algorithms | 22% annual accuracy gains |

These systems digest datasets spanning 150,000+ charge cycles – the equivalent of 25 years’ driving data compressed into real-time analysis. One manufacturer’s field reports show “models predicting capacity drops three months before measurable changes occur.”
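To show what forecasting capacity loss means in its simplest form, the sketch below fits a straight line to capacity measurements and extrapolates to an end-of-life threshold. Real capacity fade is not linear, and the models described above are far richer. This is only a baseline illustration. The function name `cycles_until_threshold`, the 80% threshold, and the sample data are assumptions.

```python
def cycles_until_threshold(cycles, capacities_pct, threshold_pct=80.0):
    """Fit capacity = intercept + slope * cycle by least squares and
    return the cycle count at which capacity reaches threshold_pct.

    Returns None if the fitted trend is flat or rising.
    """
    n = len(cycles)
    mean_x = sum(cycles) / n
    mean_y = sum(capacities_pct) / n
    sxx = sum((x - mean_x) ** 2 for x in cycles)
    sxy = sum(
        (x - mean_x) * (y - mean_y)
        for x, y in zip(cycles, capacities_pct)
    )
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    if slope >= 0:
        return None
    return (threshold_pct - intercept) / slope


# Hypothetical history: 1% of capacity lost per 100 cycles.
history_cycles = [0, 100, 200, 300]
history_capacity = [100.0, 99.0, 98.0, 97.0]
print(cycles_until_threshold(history_cycles, history_capacity))
# about 2000 cycles until capacity reaches 80%
```

Continuous retraining, as described in this section, would amount to refitting a model like this whenever new measurements arrive, with a more realistic fade curve in place of the straight line.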

Three critical advancements power this revolution:

- Multi-source data fusion combining driving patterns with electrochemical signatures

- Continuous model retraining using live performance metrics

- Automated alert systems triggering preemptive maintenance

As battery packs age, their chemical fingerprints evolve in predictable patterns. Advanced analytics map these trajectories like meteorologists tracking storm systems – but with far greater precision. The result? Fleet operators maintain 98% operational readiness while cutting energy storage costs by 40%.

Advanced Simulation and Material Discovery Techniques

Material science enters its digital renaissance through computational breakthroughs. Researchers now achieve in hours what once required months – a paradigm shift accelerating innovation cycles. At IBM’s Almaden Lab, teams combine physics with machine learning to slash simulation times by 97%, turning theoretical concepts into tested solutions overnight.

Automated Simulation Workflows in Material Optimization

Modern design strategies leverage vast datasets and adaptive algorithms. One breakthrough study demonstrates how multi-modal systems predict complex material properties with 94% accuracy. These tools analyze molecular interactions across 14 dimensions, identifying ideal candidates for next-gen battery materials.

Automated workflows transform trial-and-error approaches. Generative models propose 50,000+ electrolyte variations weekly – a task impossible through manual methods. Simultaneously, deep search algorithms mine centuries of research papers, extracting hidden patterns to guide optimization efforts.

The synergy between simulation toolkits and data-driven design creates compounding returns. Teams at leading labs report 22x faster innovation cycles while maintaining rigorous safety standards. This transformative approach reshapes how we engineer energy storage – not incrementally, but exponentially.

FAQ

How do machine learning algorithms improve lithium-ion battery performance in electric vehicles?

Machine learning models analyze real-world datasets—like charging cycles, temperature fluctuations, and voltage patterns—to predict capacity loss and optimize energy density. For example, Tesla’s Battery Management System uses neural networks to balance cell-level performance, extending range while minimizing degradation.

What role do sensors play in preventing thermal runaway in EV battery packs?

Sensors monitor parameters like heat distribution and internal resistance, feeding data to predictive algorithms. Companies like Panasonic integrate these systems to detect early signs of thermal stress, triggering cooling mechanisms or load adjustments to ensure battery safety and longevity.

Can AI-driven simulations accelerate the discovery of advanced battery materials?

Yes. Tools like IBM’s Watson Studio simulate molecular interactions, identifying materials that enhance conductivity or reduce charging time. This approach—used by QuantumScape for solid-state batteries—cuts R&D timelines by predicting outcomes before physical prototyping.

How does predictive maintenance reduce costs for electric vehicle manufacturers?

By analyzing historical degradation patterns, machine learning models forecast lifespan under varying conditions. BMW’s battery health platform uses this to schedule proactive maintenance, avoiding costly recalls and optimizing warranty strategies based on real-world usage data.

What impact do adaptive charging algorithms have on battery lifetime?

Algorithms adjust charging speeds based on cell health and usage. For instance, Rivian’s AI prioritizes slow charging during off-peak hours to minimize stress, improving capacity retention by up to 20% compared to standard fast-charging methods.

How do automakers ensure battery safety during extreme temperature conditions?

Multi-layered machine learning models—like those in Lucid Motors’ packs—cross-reference weather forecasts, driving behavior, and thermal imaging to pre-cool cells. This prevents overheating during summer or sluggish discharge in winter, maintaining consistent performance.

Why is real-time data analytics critical for accurate degradation prediction?

Real-time analysis captures micro-changes in cell chemistry that traditional methods miss. GM’s Ultium platform uses edge computing to process this data locally, enabling instant adjustments to charging protocols and load distribution for optimal health management.