There is a moment—late at night, with a pile of briefs and a looming deadline—when speed and care must meet. A practitioner felt that strain and turned it into a prototype. In a day, they created an agent that extracts images, text, and code from a Word document and formats a ready-to-review post.

The result is hopeful: teams can scale output without losing voice. This model pairs automated drafting and research with human QA to protect brand tone and factual accuracy. Quality and oversight remain non-negotiable; agents handle routine tasks, while people focus on strategy, marketing, and partnerships.

From intake to publication, the process shows where agents add leverage—in briefs, outlines, assets, and final formatting. The U.S. market values speed and compliance; this approach meets both with measurable results.

Key Takeaways

- Automated agents speed routine work while humans preserve brand voice and accuracy.

- A prototype proved that a Word-to-post pipeline is feasible in a single day.

- Pairing drafts with human QA raises output velocity without harming quality.

- The model fits U.S. needs for compliance, speed-to-market, and content sophistication.

- Success hinges on clear SOPs, tooling choices, and measurable ROI.

Why start an AI-powered blog writing agency now

Fast, authoritative content has shifted from optional to strategic across U.S. marketing teams. They need reliable processes that free time for strategy while keeping standards high.

User intent and market demand in the present AI landscape

Businesses want articles that rank and convert with faster turnaround. Sprout Social reports a 72-hour quarterly saving on performance reporting when teams use AI tools—time reinvested in strategy and ideas.

Pain points agents solve for U.S. content teams

| Bottleneck | What slows teams | Where agents help |
|---|---|---|
| Topic ideation | Long cycles to find viable topics | Surface relevant topics from data |
| Research & briefs | Manual syntheses and source checks | Automate research and draft briefs |
| Editing & SEO | Inconsistent on-page choices | Real-time SEO suggestions and formatting |

Results include fewer context switches, faster publication cadence, and clearer visibility on performance—but human review must guard brand and compliance.

Positioning your service and defining your ideal clients

A clear target client and a performance promise cut through market noise. Start by naming the industries where accuracy and governance matter most. B2B SaaS, healthcare, fintech, and professional services reward precision and editorial discipline.

Define the ICP by complexity and compliance needs, not just company size. That lets teams tailor scope, research depth, and SME interviews to reduce revision rounds and protect brand voice.

Value proposition: faster cycles, measurable SEO lift, and fewer editorial corrections via an agent-backed QA pipeline. One marketing firm reported 30% less editing time, 25% better SEO rankings, and 40% fewer factual errors—clear evidence of tangible results.

“Clients value predictability: fewer rounds, consistent tone, and measurable traffic gains.”

Price transparently: per-article, retainers, topic clusters, or outcomes-based tiers tied to ranking milestones. Offer SLAs and re-optimization cycles to back claims and reduce buyer risk.

Niche focus and differentiation

- Specialize by vertical or stage (e.g., product-led growth) to command premium rates.

- Codify brand standards into workflows so each draft matches tone and claims.

- Educate buyers on co-creation: clients supply strategy and sources; the team operationalizes at scale.

| Pricing Model | Best For | Guarantee | Expected Outcomes |
|---|---|---|---|
| Per-article | Small marketing teams | Turnaround SLA | Faster publishing cadence |
| Monthly retainer | Ongoing content ops | Monthly deliverables | Predictable output and quality |
| Outcomes-based | Performance-led buyers | Ranking/traffic milestones | Measurable SEO lift |
| Topic clusters | Demand-gen programs | Cluster completion SLA | Improved topical authority |

Service menu and deliverables that sell

Package offers should read like product specs: clear, measurable, and easy to compare. Agents automate routine tasks—extracting images, text, and code from Word files and composing drafts into publication-ready Word documents for editorial review.

Tiered packages scale from briefs and outlines to full-production blog posts with graphics, internal links, and CMS formatting. Upgrade paths add cluster strategies, pillar pages, and multi-asset repurposing for newsletters and LinkedIn carousels.

- Agent-covered tasks: research summaries, outline generation, draft writing, metadata, and image suggestions to speed delivery.

- Quality checkpoints: editorial review, fact-checking, and final on-page SEO optimization before handoff.

- Handoff package: CMS-ready HTML blocks, alt text for images, and internal link maps aligned to the website architecture.

| Package | Core Deliverables | Turnaround SLA | Best For |
|---|---|---|---|
| Starter | Briefs, outlines, 1 draft | 5 business days | Small marketing teams |
| Standard | First draft + SEO pass, images, meta | 7 business days | Growth-stage websites |
| Premium | Full-production blog posts, CMS formatting, link maps | 10 business days | Compliance-focused enterprises |

Optional add-ons include subject-matter interviews, simple on-brand visuals, monthly audits, rank tracking, and seasonal calendars that encourage longer engagements and steady ROI.

Core tech stack: language models, tools, and systems

Selecting the right stack is a strategic choice: it affects speed, cost, and trust. Teams should pick a path that balances performance with control while keeping client data safe.

Choosing models: OpenAI APIs vs. Hugging Face open-source

Hosted options like OpenAI (GPT-3.5/4) deliver low-latency, high-quality output with minimal ops overhead.

Open-source Transformers on Hugging Face give teams control, offline deployment, and cost tuning for heavy workloads.

Recommendation: Start hosted for speed-to-market; introduce open-source for verticals that need IP control or custom fine-tuning.

Frameworks and tools: LangChain, vector databases, and CMS integration

LangChain supports RAG flows: loaders split documents, embeddings store context, and vector search returns relevant passages.

Vector databases enable fast semantic search across documents and past client materials, improving research and reuse.

Integrations post structured HTML blocks and images directly to CMSs via API for reliable publication pipelines.
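As one illustration of the CMS handoff, the sketch below builds (but does not send) a request to the WordPress REST API posts endpoint. WordPress is an assumption here, chosen only because it is a common CMS; the site URL, username, and application password are hypothetical placeholders, and other CMSs expose different endpoints.

```python
import base64
import json
import urllib.request


def build_wp_post_request(site_url, username, app_password, title, html_body,
                          status="draft"):
    """Build (but do not send) a request that creates a WordPress post."""
    payload = {"title": title, "content": html_body, "status": status}
    # WordPress application passwords are sent as HTTP Basic credentials.
    token = base64.b64encode(f"{username}:{app_password}".encode()).decode()
    return urllib.request.Request(
        url=f"{site_url.rstrip('/')}/wp-json/wp/v2/posts",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


# Hypothetical values for illustration only.
req = build_wp_post_request(
    "https://example.com", "editor-bot", "hypothetical-app-password",
    "Draft title", "<h2>Section</h2><p>Body text.</p>",
)
print(req.get_method(), req.full_url)
```

Posting as a draft keeps a human approval step before anything goes live; sending the request would be a call such as `urllib.request.urlopen(req, timeout=10)` against a real site.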

Security, privacy, and moderation essentials for client work

Security is table stakes: vault API keys, enforce rate limits, and redact PII before storage.

Audit logs, least-privilege access, and content moderation protect clients and meet GDPR needs.
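A minimal sketch of the "redact PII before storage" step, assuming only two PII types (email addresses and US-style phone numbers). The patterns and placeholder tokens are illustrative assumptions; production redaction usually needs broader coverage and review.

```python
import re

# Simple email pattern; intentionally permissive for a sketch.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# US-style phone numbers such as 415-555-0132 or (415) 555-0132.
PHONE_RE = re.compile(r"(?:\(\d{3}\)\s?|\b\d{3}[-.\s])\d{3}[-.\s]\d{4}\b")


def redact_pii(text):
    """Replace emails and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


sample = "Contact Dana at dana@example.com or 415-555-0132 before Friday."
print(redact_pii(sample))
```

Running redaction before text is embedded or logged means the vector store and audit trail never hold the raw values.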

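Information flow: intake documents are embedded and stored in a vector database; agent calls then retrieve that context to generate each section, keeping a trace from output back to source and retaining the material as memory for later pieces.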

| Area | Pattern | Why it matters |
|---|---|---|
| Model choice | Hosted vs. open-source | Latency, cost, and controllability |
| Search & memory | Vector DB + RAG | Anchors drafts in vetted data and research |
| Development | Staging, prompt versioning | Safe rollouts and reproducible generation |

Designing agentic workflows for end-to-end content creation

Teams must map a clear pipeline so every topic moves from intake to live with fewer delays.

Start by documenting the core steps: intake, scoping, research, drafting, QA, on-page optimization, and CMS publishing. Each step links to a role and an SLA so expectations are explicit.

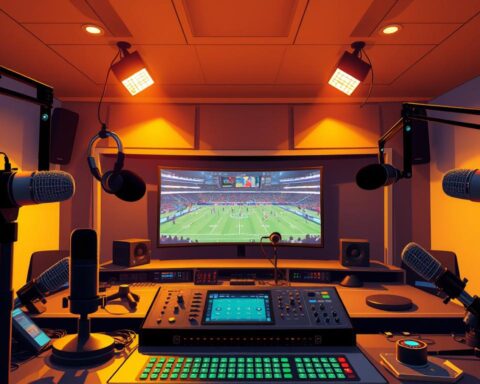

One practical workflow: an agent extracts text, images, and code from a Word document; composes the article in a template; and returns a ready-to-review Word file. This repeatable process saves time and reduces handoffs.
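The extraction step can be sketched with the standard library alone, since a .docx file is a ZIP archive with body text in `word/document.xml` and embedded images under `word/media/`. This is not the prototype's code: the function names are hypothetical, and detecting code snippets (for example by paragraph style) is left out.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def extract_docx(source):
    """Return (paragraphs, images) from a .docx path or file-like object."""
    with zipfile.ZipFile(source) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))
        paragraphs = []
        for para in root.iter(f"{W_NS}p"):
            # A paragraph's text is spread across one or more <w:t> runs.
            text = "".join(node.text or "" for node in para.iter(f"{W_NS}t"))
            if text.strip():
                paragraphs.append(text)
        images = {
            name: archive.read(name)
            for name in archive.namelist()
            if name.startswith("word/media/")
        }
    return paragraphs, images


def make_demo_docx():
    """Build a tiny in-memory .docx so the sketch runs without a real file."""
    xml = (
        '<w:document xmlns:w="http://schemas.openxmlformats.org/'
        'wordprocessingml/2006/main">'
        "<w:body><w:p><w:r><w:t>Hello</w:t></w:r><w:r><w:t> world</w:t></w:r></w:p>"
        "<w:p/></w:body></w:document>"
    )
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("word/document.xml", xml)
        archive.writestr("word/media/image1.png", b"\x89PNG placeholder bytes")
    buffer.seek(0)
    return buffer


paragraphs, images = extract_docx(make_demo_docx())
print(paragraphs, list(images))
```

A library such as python-docx would expose styles and tables more conveniently; the raw-archive approach is shown only to make the mechanics visible.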

Automations for briefs, assets, and approvals

Use agents to auto-generate briefs with goals, audience, target keywords, and internal link suggestions before writing starts. That creates alignment and speeds approvals.

Automate tasks such as image suggestions, alt text, and formatting to keep posts consistent. Versioning and comment threads keep feedback structured and traceable.

- Map topic assignment to capacity planning so editors prioritize high-impact posts.

- Define approval checkpoints after brief, outline, and pre-publish QA to avoid bottlenecks.

- Connect the website CMS via API for scheduled publishing and internal-link updates.

| Pipeline Phase | Agent Role | Key Output | SLA |
|---|---|---|---|
| Intake & Scope | Outline architect | Brief with goals & keywords | 24 hours |
| Drafting | Drafting partner | Template-composed Word draft | 3 business days |
| QA & SEO pass | SEO optimizer | Edited draft, meta, alt text | 2 business days |
| Publish | Deployment agent | CMS-ready HTML and schedule | 1 business day |

Feedback loops close the system: performance metrics feed future briefs and adjust agent roles. Over time, the process tightens and content creation becomes both faster and more reliable.

Automating research with AI agents

When information volume grows, a multi-agent approach helps teams find, verify, and summarize what matters. This approach cuts manual time and produces briefs ready for editorial review.

Data gathering and brief generation

One agent crawls trusted sources and pulls recent data; another de-duplicates and flags bias. Together they convert raw documents into prioritized talking points, statistics, and citations.

Results: an actionable brief that lists angle, key subtopics, notable quotes, and link recommendations. The process includes freshness checks and vertical calibration for compliance-heavy industries.

Multi-agent source discovery and filtering

A multi-agent setup involves several specialized roles—source discovery, relevance filtering, and fact cross-referencing. These agents normalize varied formats using parsing tools so writers get consistent notes.

- Automates competitive landscape scans to expose content gaps by topics and format.

- Flags ambiguous claims for SME review to preserve veracity.

- Feeds research outputs into drafting UIs so evidence inserts without breaking flow.

| Agent Role | Primary Task | Key Output | SLA |
|---|---|---|---|
| Source Finder | Crawl trusted repositories | Indexed links & timestamps | 1 hour |
| Relevance Filter | Score and de-duplicate | Top 10 vetted sources | 30 minutes |
| Summarizer | Condense claims and data | Research brief w/ citations | 45 minutes |
| Compliance Checker | Apply vertical rules | Flagged issues & approved sources | 20 minutes |
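The Relevance Filter role (score, de-duplicate, keep the top sources) might look like the sketch below. The `Source` fields, the URL normalization, and the 0.7/0.3 weighting between keyword overlap and freshness are all assumptions made for illustration, not values from a real deployment.

```python
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlsplit


@dataclass
class Source:
    url: str
    title: str
    published: date


def canonical(url):
    """Normalize a URL so trivial variants count as the same source."""
    parts = urlsplit(url.lower())
    host = parts.netloc[4:] if parts.netloc.startswith("www.") else parts.netloc
    return host + parts.path.rstrip("/")


def score(source, keywords, today, max_age_days=365):
    """Blend keyword overlap in the title with freshness (assumed weights)."""
    words = set(source.title.lower().split())
    overlap = len(words & keywords) / len(keywords)
    age = (today - source.published).days
    freshness = max(0.0, 1 - age / max_age_days)
    return 0.7 * overlap + 0.3 * freshness


def top_sources(sources, keywords, today, limit=10):
    unique = {}
    for s in sources:
        unique.setdefault(canonical(s.url), s)  # keep first copy of each URL
    ranked = sorted(unique.values(),
                    key=lambda s: score(s, keywords, today), reverse=True)
    return ranked[:limit]


today = date(2024, 6, 1)
candidates = [
    Source("https://www.example.com/rag-guide/", "RAG retrieval guide", date(2024, 5, 1)),
    Source("https://example.com/rag-guide", "RAG retrieval guide", date(2024, 5, 1)),
    Source("https://example.org/old-post", "Company picnic recap", date(2020, 1, 1)),
]
best = top_sources(candidates, {"rag", "retrieval"}, today)
print([s.url for s in best])
```

Bias flagging and credibility checks are separate concerns that this scoring function does not attempt.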

Teams can learn more about orchestrating multi-agent workflows to scale this process and keep research reliable and timely.

AI-generated outlines, brand voice, and style controls

Effective outlines anchor content to user questions, audience goals, and discovered gaps. AI-derived plans weigh intent, audience, and competitive section patterns to shape clear sections and headings.

Agents convert briefs into detailed section plans with example angles, data prompts, and evidence placeholders. This makes drafts faster and more consistent.

Brand voice documents encode dos and don’ts, sentence length limits, claims policy, and approved terminology. Short-term and long-term memory store stylistic preferences so text stays consistent across series.

- Calibrate style controls for reading level, scannability, and narrative cohesion.

- Map H1–H3, lists, and callouts to SEO structure for better crawlability.

- Keep knowledge bases updated with references, messaging pillars, and glossary terms.

| Function | Agent Role | Output |
|---|---|---|
| Outline logic | Structure strategist | Section plan with intent & questions |
| Voice encoding | Style enforcer | Reusable prompts and tone rules |
| Memory | Context keeper | Persistent terminology & past preferences |
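Two of the brand-voice rules described above, sentence length limits and approved terminology, are easy to check mechanically. The sketch below assumes a hypothetical word limit and banned-term list; a real style enforcer would load both from the client's voice document.

```python
import re


def check_voice(text, max_words=25, banned_terms=("synergy", "world-class")):
    """Return a list of style issues found in a draft."""
    issues = []
    # Split on whitespace that follows sentence-ending punctuation.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    for sentence in sentences:
        count = len(sentence.split())
        if count > max_words:
            issues.append(
                f"Sentence has {count} words (limit {max_words}): {sentence[:40]}"
            )
    lowered = text.lower()
    for term in banned_terms:
        if term in lowered:
            issues.append(f"Banned term used: {term}")
    return issues


draft = "Our world-class platform ships fast. It is simple."
issues = check_voice(draft, max_words=4)
for issue in issues:
    print(issue)
```

Checks like this suit the automated pass; tone and claims policy still need an editor's judgment.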

Draft generation and iterative refinement with agents

Agents can spin multiple draft directions in minutes, letting editors choose the clearest path forward. This approach turns initial generation into a low-cost experiment: different intros, CTAs, and narrative flows are compared before heavy editing begins.

Prompt engineering focuses on structure and signals: instruct the intro, H2/H3 order, examples, meta, and internal link cues. The technical guide includes prompt templates and a Flask API example for generating drafts with OpenAI or Hugging Face, plus post-processing steps for SEO metadata and HTML formatting.
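To make the "structure and signals" idea concrete, here is a minimal prompt builder. It is not one of the guide's templates; the parameter names, wording, and the 155-character meta limit are assumptions chosen for illustration.

```python
def build_draft_prompt(topic, audience, headings, keywords, internal_links,
                       reading_level="grade 9"):
    """Assemble a drafting prompt that fixes structure before generation."""
    outline = "\n".join(f"- {h}" for h in headings)
    links = "\n".join(f"- {anchor}: {url}" for anchor, url in internal_links.items())
    return (
        f"Write a blog post about {topic} for {audience}.\n"
        f"Reading level: {reading_level}.\n"
        "Open with a two-sentence intro that states the reader's problem.\n"
        f"Use these H2 sections in order:\n{outline}\n"
        f"Work in these keywords naturally: {', '.join(keywords)}.\n"
        f"Link to these internal pages where relevant:\n{links}\n"
        "End with a meta description of at most 155 characters, labeled 'META:'."
    )


prompt = build_draft_prompt(
    topic="retrieval-augmented generation for support teams",
    audience="B2B SaaS marketing leads",
    headings=["What RAG is", "Where it helps", "How to pilot it"],
    keywords=["RAG", "semantic search"],
    internal_links={"vector databases": "/blog/vector-databases"},
)
print(prompt)
```

Keeping the template in a function makes it easy to version and to compare variants side by side.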

Versioning and rapid iteration compress cycles. Agents produce multiple versions; editors run A/B tests and mark preferred variants. A tracked version system captures feedback so prompts improve over time.

- Choose the right model and reading level; seed drafts with vetted research snippets.

- Run automated checks for grammar, links, and citations before layout.

- Keep human checkpoints for tone, factual accuracy, and policy adherence.

- Calibrate style for scannability: short paragraphs, clear subheads, and semantic HTML for accessibility and SEO.

The net result: faster throughput of quality posts, fewer revision rounds, and a feedback loop that raises draft fidelity with each cycle.

RAG, semantic search, and vector databases for smarter content

Semantic search plus vector stores lets teams pull the exact passages that should inform a draft or a Q&A. RAG workflows load documents, split them into chunks, embed those chunks, and store vectors in a fast database for similarity search.

Pipeline essentials: document ingestion, chunking strategy, embedding model choice, and similarity thresholds define retrieval quality.

- Chunking: size passages to match context windows and avoid cutting sentences.

- Embeddings: pick models that suit your domain for better semantic vectors.

- Search config: top‑k and filters tune precision vs. recall for each topic.

- Caching: store embeddings and recent search sets to cut cost and speed iteration.
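The pipeline essentials above (sentence-aware chunking, embedding, top-k search with a similarity threshold) can be sketched without external services. The bag-of-words `embed` function is a deliberate stand-in for a real embedding model, and the in-memory list stands in for a vector database; all names and thresholds here are assumptions.

```python
import math
import re
from collections import Counter


def chunk_sentences(text, max_words=40):
    """Group whole sentences into chunks of at most max_words words."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks, current, size = [], [], 0
    for sentence in sentences:
        n = len(sentence.split())
        if current and size + n > max_words:
            chunks.append(" ".join(current))
            current, size = [], 0
        current.append(sentence)
        size += n
    if current:
        chunks.append(" ".join(current))
    return chunks


def embed(text):
    """Toy bag-of-words vector; a real pipeline would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def search(query, index, top_k=2, min_score=0.1):
    """Return up to top_k (score, chunk) pairs above the similarity threshold."""
    q = embed(query)
    scored = [(cosine(q, vec), chunk) for chunk, vec in index]
    hits = [pair for pair in scored if pair[0] >= min_score]
    return sorted(hits, reverse=True)[:top_k]


doc = ("Refunds are processed within five business days. "
       "Contact support to start a refund. "
       "Our office is closed on public holidays.")
index = [(c, embed(c)) for c in chunk_sentences(doc, max_words=8)]
results = search("how long do refunds take", index, top_k=1)
print(results)
```

Note that the toy embedding misses the second sentence because "refund" and "refunds" are different tokens, which is the kind of gap a learned embedding model is meant to close.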

Use RAG for compliance-heavy subjects, product documentation, or long-form pieces that need cited sources. Grounding content in vetted repositories—white papers, support docs, and knowledge bases—reduces hallucinations and raises trust.

Governance and ops: enforce access controls, audit logs, and approval flows. Monitor retrieval performance and adjust chunking, embedding, or similarity thresholds to keep the system reliable as data and models evolve.

Quality control: editing, fact-checking, and SEO optimization

Systematic checks turn raw text and images into high-value, search-ready pages. Effective quality control reduces revision loops and protects brand trust.

In the case cited earlier, AI tools cut basic editing time by about 30%, improved SEO rankings by 25%, and lowered factual errors by 40% in final reviews. The tools automate grammar, clarity, and punctuation checks so editors can focus on nuance and claims.

Grammar, verification, and link hygiene

Multi-stage checks run automated scans for style, broken links, and citation validity before human review. Research corroboration cross-references stats and flags weak sources for replacement.

On-page SEO and structure

Tools benchmark headings, keywords, meta elements, and internal link coverage against top search results. Standardized alt text for images and accessibility rules protect UX and search performance.
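A few of these on-page checks can be automated with Python's built-in HTML parser. The rules below (exactly one `<h1>`, alt text on every image, a meta description of at most 160 characters) are common conventions assumed for this sketch, not thresholds taken from a specific tool.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Collect a few on-page signals while parsing a page."""

    def __init__(self):
        super().__init__()
        self.h1_count = 0
        self.images_missing_alt = 0
        self.meta_description = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h1":
            self.h1_count += 1
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            self.images_missing_alt += 1
        elif tag == "meta" and attrs.get("name") == "description":
            self.meta_description = attrs.get("content") or ""


def audit(html, max_meta_chars=160):
    parser = OnPageAudit()
    parser.feed(html)
    issues = []
    if parser.h1_count != 1:
        issues.append(f"expected exactly one <h1>, found {parser.h1_count}")
    if parser.images_missing_alt:
        issues.append(f"{parser.images_missing_alt} image(s) missing alt text")
    if not parser.meta_description:
        issues.append("missing meta description")
    elif len(parser.meta_description) > max_meta_chars:
        issues.append("meta description too long")
    return issues


page = (
    '<html><head><meta name="description" content="Short summary."></head>'
    '<body><h1>Title</h1><img src="a.png">'
    '<img src="b.png" alt="Chart of results"></body></html>'
)
print(audit(page))
```

Benchmarking against top search results requires external data and is outside what this local check covers.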

Building a lean QA pipeline

Documented reviewer roles and SLAs keep editors on high-value tasks. Post-publication checks—schema, performance, and link refreshes—close the loop and feed prompt updates for continuous improvement.

| QA Stage | Tools | Primary Task | Expected Outcome |
|---|---|---|---|
| Pre-edit scan | Grammar & clarity tools | Fix punctuation, tone, readability | 30% less editing time |
| Fact-check | Source cross-referencers | Validate statistics and claims | 40% fewer factual errors |
| SEO audit | On-page benchmark tools | Optimize keywords, meta, links | 25% better rankings |
| Post-publish | Performance & schema checks | Monitor page health and links | Ongoing relevance & link hygiene |

Operations: SOPs, tools, and performance measurement

A clear ops layer translates strategy into daily steps that editors, marketers, and agents follow. This section explains how documented systems and simple dashboards keep quality high and throughput steady.

Standard operating procedures for repeatable results

Codify the process for briefs, outlines, drafting, QA, and publishing so every task follows the same steps.

Store approved documents and source notes in a central database. Assign ownership, set SLAs, and list handoff rules to remove ambiguity.

Include development practices: change logs for prompts and models, test environments, and rollback procedures to reduce risk.
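A prompt change log with rollback can be as small as an append-only list. The `PromptLog` class below is a hypothetical sketch; teams often keep prompts in Git instead, which gives the same history with less custom code.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptLog:
    """Append-only history of prompt versions."""
    history: list = field(default_factory=list)

    def publish(self, text, note):
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        self.history.append({
            "version": len(self.history) + 1,
            "sha": digest,
            "note": note,
            "text": text,
        })
        return digest

    def current(self):
        return self.history[-1]

    def rollback(self):
        if len(self.history) < 2:
            raise ValueError("no earlier version to roll back to")
        previous = self.history[-2]
        # Re-publish the earlier text so the log itself is never rewritten.
        self.publish(previous["text"], f"rollback to v{previous['version']}")
        return self.current()


log = PromptLog()
first_sha = log.publish("Write in a friendly tone.", "initial")
log.publish("Write in a formal tone.", "tone experiment")
restored = log.rollback()
print(restored["version"], restored["note"], restored["sha"] == first_sha)
```

Because the content hash is stored with every entry, any published draft can be traced to the exact prompt text that produced it.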

Analytics and reporting: tracking rankings, traffic, and conversions

Use performance dashboards that combine data from the website, search tools, and CRM. Datagrid exemplifies this approach: it unifies customer data, analyzes content performance, and automates competitive intelligence.

- Track rankings, traffic, assisted conversions, and cost per post.

- Schedule recurring audits for content decay, refresh priority, and internal link updates at the website level.

- Standardize report cadences for business stakeholders and tie metrics to budget and capacity planning.

| Area | Output | SLA |
|---|---|---|
| SOPs | Briefs, checklists, traceable documents | 24–48 hrs |
| Performance | Dashboards: rankings, traffic, revenue | Weekly |
| Security | Agent access controls & audit logs | Ongoing |

Build an AI-powered blog writing agency

A repeatable service model turns agent orchestration and editorial discipline into predictable publishing outcomes.

Core promise: an end-to-end capability to plan, produce, and optimize articles at scale for marketing teams. The offering pairs technical development with human review so quality and credibility stay intact.

As a managed service, the approach uses SLAs, analytics, and continuous improvement loops. Prompt libraries, evaluation sets, and staged workflows form the team’s development cadence—an operational edge that improves with use.

- Agents handle routine extraction, draft scaffolding, and metadata; editors protect nuance and accuracy.

- Repeatable templates and SOPs let posts ship predictably and reduce revision cycles.

- Transparent reporting and QA discipline build stakeholder confidence over time.

Offer pilot engagements to validate fit and speed onboarding. Train client teams on change management so collaboration is smooth and adoption is fast.

| Offer | Benefit | SLA |
|---|---|---|
| Pilot | Faster validation | 30 days |
| Managed Plan | Steady calendar & higher topical authority | Monthly |
| Governance | Ethical, secure operations | Ongoing |

Scaling systems: fine-tuning, caching, and multi-agent orchestration

Scaling content operations demands both technical choices and operational guardrails. Teams must pick the right tools and keep systems resilient so SLAs hold under load.

Model selection and style adaptation

Choose models per use case: hosted services for stability and latency; fine‑tuned open‑source models for domain nuance and cost control. Fine-tuning, prompt conditioning, and RLHF improve brand voice and reduce editorial edits.

Caching, batching, and cost/performance optimization

Reduce token spend and latency with cached prompts and embeddings and with batched requests. For self-hosted open-source models, quantization and pruning can also lower serving cost. Reusing recent search results saves time and expense, provided cached results are refreshed when the underlying sources change.
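The caching and batching ideas can be combined in one small wrapper: hash each text, embed only the texts not seen before, and send those in fixed-size batches. `EmbeddingCache` and `fake_embed_batch` are hypothetical names; the fake function stands in for a real embedding API call.

```python
import hashlib


class EmbeddingCache:
    """Cache embeddings by content hash and embed misses in batches."""

    def __init__(self, embed_batch, batch_size=16):
        self.embed_batch = embed_batch  # callable: list[str] -> list[vector]
        self.batch_size = batch_size
        self.store = {}
        self.calls = 0  # number of batch calls made, for cost tracking

    @staticmethod
    def key(text):
        return hashlib.sha256(text.encode("utf-8")).hexdigest()

    def get_many(self, texts):
        # De-duplicate while preserving order, skipping anything cached.
        missing = list(dict.fromkeys(
            t for t in texts if self.key(t) not in self.store
        ))
        for start in range(0, len(missing), self.batch_size):
            batch = missing[start:start + self.batch_size]
            self.calls += 1
            for text, vector in zip(batch, self.embed_batch(batch)):
                self.store[self.key(text)] = vector
        return [self.store[self.key(t)] for t in texts]


def fake_embed_batch(texts):
    """Stand-in for a real embedding API: one number per text."""
    return [[float(len(t))] for t in texts]


cache = EmbeddingCache(fake_embed_batch, batch_size=2)
first = cache.get_many(["a", "bb", "ccc", "a"])
second = cache.get_many(["bb", "dddd"])
print(first, second, cache.calls)
```

In the example, six requested texts result in three batch calls, because repeats and previously seen texts are served from the cache.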

Platform integration and API-driven fulfillment

Wrap generation in microservices (Flask or similar) for clear contracts: timeouts, retries, and circuit breakers protect production traffic. Orchestrate agents for research, drafting, and QA in parallel, then merge outputs with rule-based conflict resolution.
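Retries and a circuit breaker can be sketched in a few lines, independent of Flask or any model provider. The class below is an illustrative assumption, not a library API: it retries a failing call with exponential backoff and stops calling the service for a cool-down period once failures pile up. Timeouts are not shown because they belong on the underlying HTTP client call.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when calls are skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, retries=2, backoff=0.0, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            self.opened_at = None  # cool-down elapsed: allow a trial call
            self.failures = 0
        for attempt in range(retries + 1):
            try:
                result = func(*args, **kwargs)
            except Exception:
                self.failures += 1
                if self.failures >= self.max_failures:
                    self.opened_at = self.clock()  # trip the breaker
                    raise
                if attempt == retries:
                    raise
                time.sleep(backoff * (2 ** attempt))  # exponential backoff
            else:
                self.failures = 0
                return result


attempts = {"n": 0}


def flaky_generate(prompt):
    """Hypothetical model call that times out twice, then succeeds."""
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TimeoutError("model timed out")
    return f"draft for: {prompt}"


breaker = CircuitBreaker(max_failures=5)
result = breaker.call(flaky_generate, "RAG basics", retries=2)
print(result, attempts["n"])
```

Failing fast while the circuit is open keeps a struggling model endpoint from stalling every queued draft behind it.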

- Select the right model for each task; test with evaluation sets before deployment.

- Maintain a prompt and template database to speed development and onboarding.

- Monitor performance: latency, token costs, and editorial revision rates to guide tuning.

- Integrate CMS and analytics via APIs so generation flows to publish with traceability.

Release cadence and change control matter: stage changes, run A/B comparisons on prompts and models, and keep a rollback plan to minimize disruption. Data-driven development keeps the system improving without surprising clients.

Conclusion

A short, pragmatic playbook helps teams turn agent experiments into steady traffic and revenue.

The playbook in brief: define services, choose a minimal model stack, design agentic workflows, enforce QA, and measure outcomes with clear KPIs.

The one-day prototype and referenced code (OpenAI/Hugging Face, Flask, LangChain) show this is achievable. Results include less editing time, better rankings, and fewer factual errors when humans verify drafts.

Start with a pilot: one topic cluster, a research brief agent, and a drafting endpoint wired to your website CMS. Track signals, protect brand safety, and keep humans in the loop for tone and facts.

Next steps: set evaluation sets, reinvest editor feedback into knowledge bases, and scale once SEO and analytics prove the approach. Move now—momentum favors early adopters.

FAQ

What is the core opportunity in "Make Money with AI #126 – Build an AI-powered blog writing agency"?

The opportunity lies in combining language models, workflow automation, and editorial expertise to deliver high-volume, consistent content for businesses. By packaging research, drafts, SEO, and publishing into repeatable services, entrepreneurs and content teams can monetize scale and improved time-to-publish while maintaining quality and brand voice.

Why start an AI-driven content service now?

Market demand for timely, optimized content is high as companies chase organic traffic and thought leadership. Advances in models and tools reduce production costs and accelerate output. Early entrants who standardize processes, integrate safety and SEO, and define clear value propositions can capture client budgets shifting toward efficient content operations.

What user intent and market needs are shaping demand today?

Clients seek higher-quality briefs, faster turnaround, consistent brand tone, and measurable SEO results. They want teams that can handle ideation, research, and publish-ready drafts while minimizing back-and-forth. Agencies that solve for these intents—speed, accuracy, and performance—win recurring contracts.

What pain points do AI agents solve for U.S. content teams?

Agents automate repetitive research, generate structured outlines, surface relevant sources, and produce first drafts. They reduce human time on low-value tasks, improve consistency across posts, and enable scaling without linear headcount increases—helping teams meet aggressive editorial calendars.

How should a new service be positioned and who are ideal clients?

Position around measurable outcomes: traffic lift, lead generation, or reduced production cost. Ideal clients include SMBs, niche SaaS companies, and marketing teams at mid-market firms that value scalable content but lack in-house bandwidth or process maturity.

How to choose a niche, pricing model, and value proposition?

Select a niche with clear intent and repeatable content needs—e.g., fintech, health tech, or developer tools. Price by project, subscription, or performance (hybrid). Emphasize outcomes: faster time-to-rank, consistent voice, and turnkey publishing workflows to differentiate.

What should the service menu and deliverables include?

Offer packages that bundle topic research, SEO briefs, outlines, draft articles, image recommendations, metadata, and CMS-ready files. Include optional add-ons like fact-checking, link acquisition, or analytics reporting to increase ARPU.

Which core technologies are essential: models, tools, and systems?

Core stack typically combines a reliable language model API (OpenAI or similar), a workflow framework like LangChain, a vector database for embeddings, and CMS integration. Add automation tools for approvals, asset management, and monitoring to close the production loop.

When to choose OpenAI APIs versus Hugging Face open-source models?

Use OpenAI APIs for strong general-purpose performance, managed reliability, and speed to market. Consider Hugging Face open-source when data residency, cost control, or customization (fine-tuning) outweighs the engineering overhead of hosting and maintenance.

Which frameworks and integrations accelerate development?

LangChain for agent orchestration, vector databases like Pinecone or Milvus for semantic search, and Zapier or n8n for workflow automation all speed integration with CMSs such as WordPress or Contentful. These components reduce manual wiring and improve repeatability.

What security, privacy, and moderation essentials are required for client work?

Implement data classification, encryption in transit and at rest, and clear policies for PII handling. Add content moderation pipelines to filter unsafe outputs. Contractual terms and SOC2-like practices reassure enterprise clients about data handling and compliance.

How do agentic workflows support end-to-end content creation?

Agentic workflows automate stages: intake, research, outline, draft, edit, and publish. Each agent focuses on a task—topic discovery, source validation, or headline testing—while a coordinator enforces quality checks and handoffs to human editors when needed.

What automations reduce friction in briefs, assets, and approvals?

Use templates for briefs, automated image generation or selection, metadata autofill, and approval routing in project management tools. Notifications and versioned drafts help stakeholders review quickly and keep timelines predictable.

How can AI automate research effectively?

Agents can crawl selected domains, summarize findings, extract quotes, and produce structured briefs with source attributions. Combining multi-agent source discovery with relevance scoring yields focused, verifiable research that supports higher factual accuracy.

What is a multi-agent approach to source discovery and relevance filtering?

One agent gathers candidate sources; another filters by credibility and recency; a third synthesizes summaries and extracts citations. This division of labor improves throughput and reduces hallucination risk by cross-validating information across sources.

How should outlines, brand voice, and style controls be managed?

Create standardized outline templates that encode intent, audience, and SEO signals. Maintain brand voice documents and memory stores so models reference tone, terminology, and forbidden language. Combine automated checks with human editing to ensure fidelity.

What is the best practice for prompt engineering around structure, SEO, and readability?

Craft prompts that specify target audience, desired structure, keyword intents, and readability levels. Include examples and constraints—headings, word counts, and citation rules—to produce drafts that require minimal revision and align with SEO needs.

How are versioning and human-in-the-loop review handled during iteration?

Use a version control system for drafts and a clear QA checklist. Automate quick passes—grammar and SEO—then route to human editors for final checks. Track changes and rationale to speed future edits and maintain institutional memory.

When should RAG and semantic search be used for content production?

Use RAG when factual accuracy and domain specificity matter—technical guides, legal, or medical-adjacent content. Semantic search and vector stores help retrieve relevant passages, support citations, and reduce hallucinations by grounding responses in documents.

How do document loading, chunking, and embeddings work for similarity search?

Documents are split into chunks sized for model context windows, then converted into embeddings stored in a vector database. Similarity queries return the most relevant chunks for use in prompts or summarization, improving relevance and recall.

What quality control steps ensure accurate, SEO-ready content?

Implement grammar and style checks, factual verification against trusted sources, link hygiene audits, and on-page SEO validation (keywords, meta tags, heading structure). Combine automated scanners with editorial review to minimize errors.

Which on-page SEO elements must be included in deliverables?

Deliverables should include target keywords, meta titles and descriptions, structured headings, internal linking recommendations, and alt text. These elements help search engines index content correctly and improve discoverability.

How do SOPs, tools, and analytics support repeatable operations?

SOPs document each stage—intake, research, drafting, QA, and publishing—so teams replicate success. Tools for project management, monitoring, and automated reporting provide visibility into throughput, quality, and client ROI.

What metrics should be tracked for content performance?

Track organic traffic, ranking positions for target keywords, click-through rates, time on page, and conversion metrics tied to business goals. Regular reporting lets teams iterate on topics, formats, and SEO tactics based on data.

How can services scale via fine-tuning, caching, and multi-agent orchestration?

Fine-tune models on brand-specific data to improve voice adherence. Use caching and batching to reduce API costs and latency. Orchestrate multiple agents for parallelism—research, draft, and review—to increase throughput without sacrificing quality.

What are best practices for model selection, fine-tuning, and style adaptation?

Choose models that balance cost and capability for the task. Fine-tune incrementally with curated brand data and include rejection examples. Monitor drift and retrain periodically to maintain style and factual standards.

How does caching and batching optimize cost and performance?

Cache frequent responses (like boilerplate sections) and batch similar tasks to reduce API calls. Use smaller models for lightweight tasks and reserve larger models for high-value, creative, or sensitive outputs to control spend.

What platform integrations and API-driven fulfillment speed delivery?

Integrate with CMS platforms (WordPress, Contentful), analytics (Google Analytics, Search Console), and asset stores. Expose task endpoints via APIs so clients can trigger content workflows programmatically and automate publishing.

How should teams handle moderation and legal risk when producing content?

Embed content moderation filters, maintain provenance records for facts and sources, and include legal review for high-risk verticals. Clear terms of service and data processing agreements reduce client exposure and clarify responsibilities.

What are common barriers when launching this type of service and how to overcome them?

Common barriers include quality control, client trust, and model hallucinations. Overcome them with transparent workflows, sample work, strong QA processes, and by demonstrating measurable results through pilot projects and case studies.