There is a quiet thrill when a familiar level surprises you with a new twist. That moment—when a player discovers a fresh route or a dynamic event—captures why automatic creation matters for modern game design.

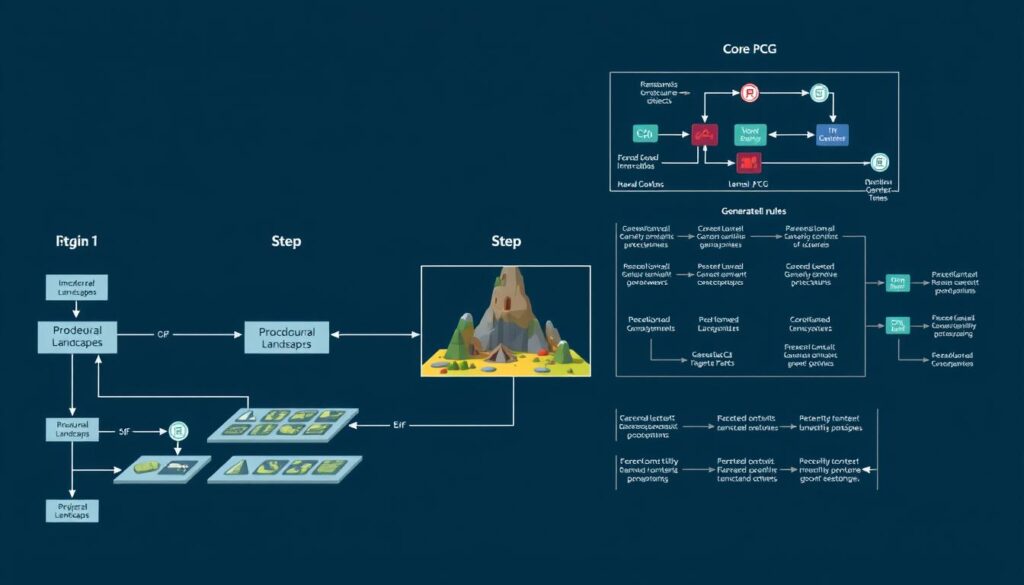

The article defines a central approach: leveraging algorithmic systems to build varied levels, assets, and scenarios that scale production while elevating player immersion. It frames this as a practical how-to guide covering fundamentals, algorithm selection, engine integration, testing, and measurement.

PCG becomes a strategic capability: it reduces repetitive work, speeds iteration, and unlocks design possibilities manual pipelines struggle to cover. Indie teams gain faster prototyping; larger studios multiply content velocity and consistency.

The section previews a toolbox—constructive, search-based, and machine learning models—and sets guardrails for art direction, tuning, and safety. Readers will find a future-ready blueprint to bring dynamic systems into a live game world while keeping creative intent intact.

Key Takeaways

- Algorithmic systems scale production and deepen player engagement.

- Readers will learn practical steps from fundamentals to engine integration.

- Hybrid human-plus-automated workflows speed iteration without losing craft.

- Different studios benefit: rapid prototyping for indies, tooling for larger teams.

- Constructive, search-based, and ML approaches each solve distinct problems.

- Art direction, difficulty tuning, and safety remain essential guardrails.

Why Procedural Content Generation Matters for Future-Ready Game Development

Future-ready studios decouple content volume from linear artist-hours to keep pace with market demand.

Procedural content generation boosts replay value while reducing production cost and effort. The gaming industry now needs a steady stream of new assets, updates, and personalized moments. Systems that scale output let teams meet that demand without simply hiring more people.

Once algorithms and constraints are set, pipelines become sustainable. Teams iterate faster, ship varied content with consistent quality, and cut time to playtest. This reduces risk for ambitious scopes and supports earlier user testing.

There is a clear business rationale: breadth of content, personalization, and post-launch updates drive retention and revenue. Designers can focus on rules, pacing, and the player journey while algorithms fill large but coherent variations.

“PCG is a force multiplier for environment dressing, side quests, and loot—while handcrafted work still defines hero moments.”

- Team enablement via shared schemas and tooling improves handoffs and speed.

- Metrics such as replayability, session length, and retention tie directly to varied pacing.

- For live services, data-informed iteration becomes a core capability for long-term revenue.

| Benefit | Studio Impact | When It Excels | When to Handcraft |
|---|---|---|---|
| Scale | Lower per-item time and cost | Environment dressing, loot pools | Signature story scenes |
| Speed | Faster iteration and playtesting | Side missions, procedural maps | Hero boss encounters |
| Personalization | Better player-tailored experiences | Adaptive rewards and pacing | Carefully authored narrative beats |

Strategic viewpoint: this approach is not merely a technique. It is an operating model that aligns development with the future of interactive entertainment—reducing time to content while raising the standard for player experiences.

The Evolution of AI in Gaming and Procedural Generation

What began as random rooms and corridors in the 1980s has grown into hybrid toolchains that shape entire game worlds.

Early adoption came from roguelikes such as Beneath Apple Manor and Rogue. Those titles proved a key idea: simple rules plus controlled randomness can create replayable design.

Then machine learning expanded the palette: GANs and LSTMs enabled textures, music, and level layouts rather than only tile placement. Reinforcement learning improved layout fitness and agent interaction.

From roguelikes to neural networks: a brief timeline

- 1980s–2000s: constructive rule systems for dungeons and maps.

- 2010s: search-based and hybrid methods; higher-quality procedural work in modern engines.

- 2019–2023: academic focus—FDG (40%), CoG (33%), AIIDE (24%)—formalized benchmarks and reproducibility.

- Recent years: transformers and large models aid narrative beats, dialog, and rapid design ideation.

Workflows shifted from offline generation to online, adaptive systems that personalize during play. Telemetry now informs fitness functions, closing the loop between data and design.

Team roles also evolved: designers author constraints, engineers manage pipelines, and machine learning practitioners tune models. The current frontier pairs GANs, RL, and large models to balance quality and control.

Persistent challenges remain: fairness, readability, and narrative cohesion. The next sections move from this history toward hands-on frameworks builders can apply today.

AI Use Case – Procedural Content Generation in Games

Modern studios harness algorithmic pipelines to craft levels, quests, items, and ambient systems that match a game’s mechanics and art.

This approach frames generation as a design partner rather than a replacement for craft.

Scope is clear: these systems supply maps, art variants, mechanics, and music while primary narrative beats and brand-defining scenes remain hand-authored. That balance preserves identity and player expectations.

Practical outputs vary by genre: roguelite dungeons that recombine rooms, open-world biomes stitched from tilesets, puzzle variants that reuse core rules, and encounter tables that scale with player progress.

Value appears across production phases: rapid concept exploration, grayboxing for layout tests, vertical slices for polish, and post-launch drops to sustain engagement.

Quality is guarded by constraints—tile adjacency rules, spawn affordances, pacing budgets, and difficulty curves tuned by playtest telemetry. These safeguards make automated output reliable and readable.

Tooling raises iteration velocity: designers adjust constraints or models, regenerate variants, and curate results without low-level coding. Versioned schemas and deterministic seeds support debugging and review.

- Infrastructure notes: schema versioning, seed control, and review pipelines.

- Personalization hooks: link generation to player profiles for tailored pacing and rewards.

- Next steps: the following sections present a practical blueprint for implementing robust systems.

“Systems that scale output let teams meet demand while keeping handcrafted moments central to the player experience.”

PCG Fundamentals: What to Generate and When to Generate It

Deciding what a system should make—and when—is the first design decision for any scalable pipeline.

Break assets into five practical categories so teams assign owners and pipelines clearly.

Content categories and owners

- Game bits: textures, sounds, vegetation, object properties — art and audio teams; GAN-based textures are a common example.

- Game space: maps, roads, worlds — level designers and tools engineers; WFC suits tile-based map work.

- Scenarios: stories, quests, conversations — narrative designers; LLM-authored side quests are useful here.

- Game design: mechanics, spawn rules, balancing — systems designers; search-based spawn balancing fits this slot.

- Derived content: ambient NPC chatter, in-world news, barks — live ops and writers; ambient dialog systems supply micro-variations.

Online vs. offline

Choose offline for heavy batches and predictability. Use online for micro-variation that adapts during play.

| When | Best for | Owners |
|---|---|---|
| Offline | Regions, bulk assets, level templates | Art, tools, QA |
| Online | Encounters, loot drops, NPC barks | Live ops, systems |

Treat seeds and constraints as versioned assets linked to level templates. Add collision, pathfinding, and readability checks to stop bad output. Keep designers in the loop: they curate candidates, log failures, and refine rules so generated material supports core game loops and the intended player journey.
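
The seed-plus-validation idea above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the grid format, the `wall_chance` parameter, and the function names are all assumptions made for the example. It shows a generator whose output is fully determined by its seed, and a reachability check that rejects layouts where the exit cannot be reached.

```python
import random
from collections import deque

def generate_grid(seed, width=12, height=8, wall_chance=0.25):
    """Build a grid of floor ('.') and wall ('#') tiles from a seed (hypothetical format)."""
    rng = random.Random(seed)  # local RNG so the seed alone determines the output
    grid = [["#" if rng.random() < wall_chance else "." for _ in range(width)]
            for _ in range(height)]
    grid[0][0] = "."                    # entrance is always open
    grid[height - 1][width - 1] = "."   # exit is always open
    return grid

def is_reachable(grid, start, goal):
    """Breadth-first search over 4-connected floor tiles."""
    height, width = len(grid), len(grid[0])
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < height and 0 <= nc < width
                    and grid[nr][nc] == "." and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False

def first_valid_seed(start_seed, max_attempts=1000):
    """Return the first seed at or after start_seed whose grid passes validation."""
    for seed in range(start_seed, start_seed + max_attempts):
        grid = generate_grid(seed)
        goal = (len(grid) - 1, len(grid[0]) - 1)
        if is_reachable(grid, (0, 0), goal):
            return seed, grid
    raise RuntimeError("no valid layout found; loosen the constraints")
```

Because the seed is returned alongside the grid, a designer who flags a bad layout can hand the seed to an engineer and get the same layout back for debugging.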

The Algorithm Toolbox: From Search-Based Methods to Machine Learning

Different algorithm families solve distinct design needs—optimization, pattern fidelity, or expressive variety.

Search-based methods

What they do: evolutionary algorithms, simulated annealing, PSO, and MCTS iteratively refine candidates by generate–evaluate–mutate loops.

They excel when developers need concrete goals: path length, puzzle solvability, or balanced encounters.
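
As a rough sketch of the generate, evaluate, mutate loop, the example below uses the simplest member of this family: a single-candidate hill climber, sometimes called a (1+1) evolutionary strategy. The grid size, the fitness definition (length of the shortest route between two corners), and all function names are assumptions chosen for illustration.

```python
import random
from collections import deque

SIZE = 8  # hypothetical square grid; walls are stored as a set of (row, col) cells

def shortest_path_length(walls):
    """BFS distance from top-left to bottom-right; None if the route is blocked."""
    start, goal = (0, 0), (SIZE - 1, SIZE - 1)
    if start in walls or goal in walls:
        return None
    dist = {start: 0}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            return dist[cell]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nxt[0] < SIZE and 0 <= nxt[1] < SIZE
                    and nxt not in walls and nxt not in dist):
                dist[nxt] = dist[cell] + 1
                queue.append(nxt)
    return None

def fitness(walls):
    """Longer solvable routes score higher; unsolvable layouts score -1."""
    length = shortest_path_length(walls)
    return -1 if length is None else length

def mutate(walls, rng):
    """Toggle one random cell between wall and floor."""
    cell = (rng.randrange(SIZE), rng.randrange(SIZE))
    child = set(walls)
    child.symmetric_difference_update({cell})
    return frozenset(child)

def evolve(seed, generations=500):
    """Keep a single best layout and accept any mutation that is not worse."""
    rng = random.Random(seed)
    best = frozenset()            # start from an empty room
    best_score = fitness(best)
    for _ in range(generations):
        candidate = mutate(best, rng)
        score = fitness(candidate)
        if score >= best_score:   # accept ties so the search can cross plateaus
            best, best_score = candidate, score
    return best, best_score
```

A population-based evolutionary algorithm or simulated annealing would replace the acceptance rule in `evolve`; the fitness function and mutation operator could stay the same.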

Constructive and constraint approaches

Wave Function Collapse and grammar systems build layouts fast with strong adjacency and style guarantees. They give high control and repeatable results for maps and levels.
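
The sketch below shows the core loop behind this style of generator in a deliberately simplified form: every cell starts with all tiles possible, the cell with the fewest options is collapsed, and adjacency rules are propagated to its neighbors. It is not a full Wave Function Collapse implementation (there is no pattern extraction from a sample image and no tile weighting), and the three-tile set and its rules are invented for the example.

```python
import random

TILES = ("W", "S", "G")  # water, sand, grass (illustrative tileset)
ALLOWED = {              # symmetric adjacency rules: water never touches grass
    "W": {"W", "S"},
    "S": {"W", "S", "G"},
    "G": {"S", "G"},
}

def neighbors(r, c, h, w):
    """Yield the 4-connected neighbors that fall inside the grid."""
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < h and 0 <= nc < w:
            yield nr, nc

def propagate(domains, start, h, w):
    """Remove neighbor options that no remaining option in a changed cell supports."""
    stack = [start]
    while stack:
        r, c = stack.pop()
        supported = set().union(*(ALLOWED[t] for t in domains[r][c]))
        for nr, nc in neighbors(r, c, h, w):
            reduced = domains[nr][nc] & supported
            if not reduced:
                return False              # contradiction: caller restarts
            if reduced != domains[nr][nc]:
                domains[nr][nc] = reduced
                stack.append((nr, nc))
    return True

def collapse(seed, h=6, w=10, max_restarts=50):
    """Return an h-by-w grid of tiles that satisfies ALLOWED for every adjacent pair."""
    rng = random.Random(seed)
    for _ in range(max_restarts):
        domains = [[set(TILES) for _ in range(w)] for _ in range(h)]
        ok = True
        while ok:
            open_cells = [(len(domains[r][c]), r, c)
                          for r in range(h) for c in range(w)
                          if len(domains[r][c]) > 1]
            if not open_cells:            # every cell is decided
                return [[next(iter(cell)) for cell in row] for row in domains]
            fewest = min(n for n, _, _ in open_cells)
            _, r, c = rng.choice([o for o in open_cells if o[0] == fewest])
            domains[r][c] = {rng.choice(sorted(domains[r][c]))}
            ok = propagate(domains, (r, c), h, w)
    raise RuntimeError("no consistent layout found")
```

The adjacency guarantee comes from propagation rather than from post-hoc filtering, which is why this family gives repeatable, on-style results for a fixed seed and rule set.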

Machine learning approaches

Markov chains suit light patterns. Autoencoders and GANs handle tiles and textures. LSTMs and transformers shape sequences, dialog, and character lines.
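
To make the lightest of these options concrete, here is a small first-order Markov chain that learns which symbol tends to follow which from example strips and then samples a new strip. The symbol alphabet (`-` ground, `^` hazard, `_` gap) and the two training strips are made up for the illustration.

```python
import random
from collections import defaultdict

def train(sequences):
    """Record first-order transitions: symbol -> list of observed successors."""
    transitions = defaultdict(list)
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            transitions[current].append(nxt)
    return transitions

def sample(transitions, start, length, seed):
    """Walk the chain from `start`, choosing successors in proportion to their counts."""
    rng = random.Random(seed)
    out = [start]
    while len(out) < length:
        options = transitions.get(out[-1])
        if not options:   # dead end: this symbol never had a successor in the data
            break
        out.append(rng.choice(options))
    return "".join(out)

# Hypothetical hand-authored strips used as training data.
EXAMPLES = ["--^--__--^^--", "-^--__-^--__--"]

model = train(EXAMPLES)
strip = sample(model, "-", 20, seed=3)
```

Every adjacent pair in `strip` appeared somewhere in the examples, so local patterns stay on-style, but nothing constrains the strip as a whole. That is why this approach suits light patterns and is usually paired with a validator.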

Hybrids and LLM-backed layers

Combining systems often outperforms a single method: WFC layouts → GAN texture pass → LLM narrative hooks. Learned evaluators can guide evolutionary search.

“Pick the simplest method that meets quality and control needs; scale to hybrids only when the single method falls short.”

| Family | Best for | Trade-off |
|---|---|---|
| Search-based | Optimized levels, puzzles | High compute, tunable |
| Constructive | Tile layouts, style consistency | Fast, predictable |
| ML & transformers | Textures, dialog, variation | Data needs, runtime costs |

Step-by-Step: Implementing Procedural Content Generation in Your Game

Begin with measurable design pillars that tie generation to player progression and difficulty targets.

Define goals and constraints

Translate high-level goals into clear metrics: pacing budgets, target completion rates, and expected player emotions at key beats.

Then convert those into schemas—tile taxonomies, encounter tags, traversal rules, and narrative states—so developers and designers share a single source of truth.

Select and prototype algorithms

Prototype search-based methods for optimization tasks. Use constructive grammars for layout control. Apply ML models—GANs and LSTMs—for textures and sequence work, and LLM layers for narrative hooks.

Integrate into the engine

Build adapters that ingest seeds and constraints, emit artifacts to a content database, and tag assets for curation. Log seeds and support reproducible builds.
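
One possible shape for such an adapter is sketched below. The field names, the `enemy_budget` and `enemy_pool` constraint keys, and the fingerprint scheme are assumptions for illustration; a real engine integration would write to its own content database rather than return a dictionary.

```python
import hashlib
import json
import random
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class GenerationRequest:
    """Everything needed to reproduce one generated artifact (hypothetical schema)."""
    seed: int
    schema_version: str
    constraints: dict

def request_fingerprint(request):
    """Stable short hash of the request, usable as a log key or cache key."""
    payload = json.dumps(asdict(request), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:16]

def generate_encounter(request):
    """Produce an encounter record tagged with the inputs that created it."""
    rng = random.Random(request.seed)
    pool = request.constraints["enemy_pool"]
    budget = request.constraints["enemy_budget"]
    return {
        "fingerprint": request_fingerprint(request),
        "seed": request.seed,
        "schema_version": request.schema_version,
        "enemies": [rng.choice(pool) for _ in range(budget)],
    }

request = GenerationRequest(
    seed=1234,
    schema_version="1.0",
    constraints={"enemy_pool": ["grunt", "archer", "brute"], "enemy_budget": 4},
)
artifact = generate_encounter(request)
```

Storing the fingerprint with each artifact lets a reviewer regenerate exactly what a playtester saw, provided the generator code for that schema version is still available.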

Playtest, measure, iterate

Automate validation: navmesh checks, spawn safety, solver confirmation, and readability metrics. Keep human gates—designer review queues and rapid re-gen tools.

“Instrument playtests with telemetry—completion rates, retries, and dwell times—to refine fitness functions and parameter ranges.”

Designing Personalized Gameplay with AI

Designers can translate player signals into adjustments that tune challenge and reward without breaking narrative flow.

Adaptive difficulty and pacing driven by player behavior

Systems should monitor simple signals: success rates, input precision, and route selection. These signals let the game nudge pacing and difficulty toward the player’s current skill.

Smoothing matters: apply caps and gradual shifts to avoid sudden difficulty whiplash. When signals are noisy, the system falls back to conservative defaults set by designers.
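
A capped, gradual update might look like the following sketch. The target success rate, gain, step cap, bounds, and minimum sample count are placeholder values a designer would tune. The fallback here drifts toward a designer default when data is sparse, which is one interpretation of "conservative defaults" rather than the only one.

```python
def next_difficulty(current, successes, attempts, *,
                    target_rate=0.7, gain=0.5, max_step=0.05,
                    bounds=(0.2, 1.0), min_attempts=5, default=0.5):
    """Return the next difficulty value in [bounds[0], bounds[1]].

    All numeric defaults are illustrative assumptions, not recommended values.
    """
    if attempts < min_attempts:
        # Too little data to trust the signal: move toward the designer default.
        desired = default
    else:
        # Positive error means the player is succeeding more often than intended.
        error = successes / attempts - target_rate
        desired = current + gain * error
    # Cap the per-update change to avoid sudden difficulty swings.
    step = max(-max_step, min(max_step, desired - current))
    low, high = bounds
    return max(low, min(high, current + step))
```

For example, a player who wins ten of ten encounters at difficulty 0.5 moves to roughly 0.55 rather than jumping straight to the uncapped target, and a player at 0.9 with only two recorded attempts is eased back toward the default by one capped step.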

Dynamic quests, encounters, and rewards tuned to preferences

Quests can adapt objectives, NPC roles, and loot based on recent actions and stated preferences. This keeps missions relevant and increases replay value for players.

Designers keep control by limiting variation scope and ensuring story beats remain intact.

Real-time customization vs. precomputed variants

Real-time tailoring offers low-latency personalization but needs stronger safeguards for fairness. Precomputed variants give consistency and easier QA at the cost of storage and scope.

“Personalization should feel earned, readable, and reversible.”

| Approach | Latency | Control | Best fit |
|---|---|---|---|
| Real-time | Low | Medium | Adaptive encounters, loot tables |
| Precomputed | High | High | Region layout variants, major quests |
| Hybrid | Medium | High | Mixed pacing; player-tailored rewards |

Respect player privacy: anonymize analytics and honor opt-outs while still running A/B tests to validate uplift. Human oversight remains essential—designers set bounds; systems execute within them to improve gameplay experiences and drive sustainable game development.

Building Game Worlds with PCG: Levels, Maps, and Systems

Crafting vast, varied worlds calls for pipelines that enforce navigation, pacing, and visual coherence. Teams must treat terrain, roads, and level topology as linked systems rather than isolated assets.

Terrain, roads, and layout generation for immersive worlds

Terrain synthesis should balance scale and readability: heightfields with layered noise create believable hills and valleys while preserving pathing corridors for characters and vehicles.
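
Layered noise can be illustrated with plain value noise summed over several octaves. The sketch below is pure Python for readability and far too slow for production terrain; the frequency, persistence, and octave count are arbitrary example values, and production tools more commonly use gradient noise such as Perlin or simplex noise.

```python
import math
import random

def lattice_value(seed, x, y):
    """Deterministic pseudo-random value in [0, 1) for an integer lattice point."""
    return random.Random(f"{seed}:{x}:{y}").random()

def smoothstep(t):
    """Ease curve that removes visible creases at lattice boundaries."""
    return t * t * (3 - 2 * t)

def lerp(a, b, t):
    return a + (b - a) * t

def value_noise(seed, x, y):
    """Bilinear blend of the four lattice values surrounding (x, y)."""
    x0, y0 = math.floor(x), math.floor(y)
    tx, ty = smoothstep(x - x0), smoothstep(y - y0)
    top = lerp(lattice_value(seed, x0, y0), lattice_value(seed, x0 + 1, y0), tx)
    bottom = lerp(lattice_value(seed, x0, y0 + 1), lattice_value(seed, x0 + 1, y0 + 1), tx)
    return lerp(top, bottom, ty)

def heightfield(seed, width, height, octaves=4, base_frequency=0.1, persistence=0.5):
    """Sum octaves of value noise; each octave doubles frequency and scales amplitude."""
    field = []
    for row in range(height):
        line = []
        for col in range(width):
            total, norm = 0.0, 0.0
            amplitude, frequency = 1.0, base_frequency
            for octave in range(octaves):
                total += amplitude * value_noise(seed + octave, col * frequency, row * frequency)
                norm += amplitude
                amplitude *= persistence
                frequency *= 2
            line.append(total / norm)   # normalize back into [0, 1]
        field.append(line)
    return field
```

Low octaves set the broad hills and valleys; higher octaves add surface detail. Preserving pathing corridors would be a separate pass, for example flattening cells along a planned route after the noise is generated.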

Road networks can be grown with agent-based or procedural spline methods to follow natural slopes and connect landmarks. Biome placement should respect navigability and sightlines so players can orient quickly.

Level topology and progression: constraints, affordances, and readability

Topology patterns—loops, branches, and critical paths—define exploration rhythm. Loops encourage optional exploration; branches create choice; critical paths drive narrative beats.

Local rules (WFC or grammar systems) enforce alignments: doorways, platform gaps, and cover spacing. Readability heuristics—landmarks, lighting cues, and affordance signals—help players parse each level fast.

- Distribute loot, enemies, and resources along risk/reward curves tied to player progression.

- Art-direction hooks: tileset constraints, palette rules, and prop budgets keep spaces on-style.

- Test at scale with simulation agents to validate reachability and encounter density across many levels.

| System | Primary Goal | Runtime Strategy | When to Precompute |
|---|---|---|---|
| Terrain | Believable topology | Chunked heightfields, LOD | Large regions, fixed biomes |
| Roads | Navigation and flow | Agent growth + splines | Major arteries and story routes |
| Level Layout | Pacing and readability | WFC/grammar + validators | High-risk, high-polish encounters |
| Systems Layer | Risk/reward balance | Telemetry-tuned distribution | Stable progression segments |

“Design constraints should live in tooling; engineering validates runtime budgets so generated levels remain playable and performant.”

AI Mechanics That Shape Gameplay: Pathfinding and Decision-Making

Smart navigation and deliberate decision systems turn layouts into meaningful play moments.

Navigation meshes and hierarchical pathfinding let agents traverse complex environments without hogging CPU. Local meshes handle fine movement; high-level graphs plan routes between objectives. This split keeps performance steady across large worlds.

Smarter navigation and enemy behavior through decision models

Designers choose decision models by role: utility systems for emergent choices, behavior trees for readable states, and planners or MCTS for deep tactical moves.

These systems react to player actions while honoring game design goals. Integrating telemetry lets developers tune parameters and avoid degenerate tactics.
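
Of the decision models listed, a utility system is the quickest to sketch: each candidate action gets a score from the current context, and the agent picks the highest. The context keys, the three actions, and the scoring curves below are all hypothetical and exist only to show the structure.

```python
def clamp01(value):
    """Keep scores comparable by restricting them to [0, 1]."""
    return max(0.0, min(1.0, value))

# Each consideration maps a context dict to a score in [0, 1].
# The curves are illustrative; a designer would tune or replace them.
CONSIDERATIONS = {
    "attack": lambda ctx: clamp01(ctx["health"] * (1.0 - ctx["distance_to_player"] / 20.0)),
    "retreat": lambda ctx: clamp01((1.0 - ctx["health"]) * 0.9),
    "take_cover": lambda ctx: 0.4 if ctx["cover_nearby"] else 0.0,
}

def choose_action(context, considerations=CONSIDERATIONS):
    """Score every action and return the best one along with all scores."""
    scores = {name: score(context) for name, score in considerations.items()}
    best = max(scores, key=scores.get)
    return best, scores

action, scores = choose_action(
    {"health": 0.6, "distance_to_player": 18.0, "cover_nearby": True}
)
```

Returning the full score table alongside the chosen action makes the decision easy to log, which helps when telemetry is used to tune the curves and spot degenerate tactics.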

Balancing unpredictability and fairness in gameplay loops

Bound randomness with telegraphed cues, cooldowns, and predictable recovery windows. This keeps encounters surprising but solvable, preserving perceived fairness.

Link adaptive ramps to skill indicators—enemy accuracy, retreat thresholds, and aim assist bands—so difficulty scales without feeling arbitrary.

- Tie encounter placement to terrain affordances so foes flank along valid routes.

- Simulate thousands of runs to find edge-case exploits and balance pacing.

- Model sightlines, noise propagation, and group dynamics for stealth and social play.

“Instrument outcomes—time-to-first-failure, recovery success, and perceived fairness—to calibrate both content and behavior responses.”

Efficiency and Resource Optimization for Studios

Studios that streamline pipelines cut weeks from production and free teams to focus on craft. Automation compresses grayboxing, validation, and variant generation so time and resources go further.

Shared schemas and light tools make collaboration fast. Designers, engineers, and producers use the same constraints, prompt libraries, and packs to reduce back-and-forth. This shortens handoffs and boosts iteration velocity.

Indie teams bootstrap with templates and editor plugins to ship prototypes quickly. Enterprise studios build platform-scale generators that serve multiple titles and lower marginal cost per variant.

Governance matters: automated checks, review queues, and sign-offs keep quality high while scale grows. CI pipelines can generate, validate, and package assets for release builds.

- Quantify time-to-content gains with telemetry and sprint comparisons.

- Capture knowledge via documented constraints and exemplars to survive turnover.

- Reserve sandboxes and A/B tests so developers can trial new generators safely.

“Start with constructive methods, mature to search-based optimization, then add ML and hybrid orchestration.”

Driving Replayability and Player Engagement with Procedural Systems

Keeping runs interesting requires both big structural variety and small, moment-to-moment changes.

Variability in routes, encounters, and rewards refreshes sessions without overwhelming players. Macro-randomization—world seeds, rotating quest pools—sets a new backbone each run. Micro-variations—enemy modifiers, loot tiers, and encounter offsets—deliver instant novelty.

Tie progression to generated offerings: meta unlocks and rotating modifiers encourage experimentation and reward player choices. Event calendars and seasonal rule sets create rhythms that spark social talk and return visits.

Fairness, adaptation, and measurement

Balance surprise with guarantees: pity timers, resource floors, and checkpoints protect satisfaction. Adaptive generators should mirror player actions and favored playstyles, shifting challenges that complement skill.
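
A pity timer is simple enough to show directly. In this sketch the drop chance and threshold are example parameters and the class name is invented; the guarantee it implements is that a player never goes more than `pity_threshold` rolls without a rare drop.

```python
import random

class PityDrop:
    """Rare-drop roller with a guaranteed hit after a run of misses."""

    def __init__(self, base_chance, pity_threshold, seed):
        self.base_chance = base_chance        # probability of a drop on a normal roll
        self.pity_threshold = pity_threshold  # the Nth roll in a dry streak always hits
        self.misses = 0
        self.rng = random.Random(seed)

    def roll(self):
        """Return True on a drop; resets the miss counter when a drop occurs."""
        guaranteed = self.misses + 1 >= self.pity_threshold
        hit = guaranteed or self.rng.random() < self.base_chance
        self.misses = 0 if hit else self.misses + 1
        return hit
```

With `base_chance=0.0` and `pity_threshold=5`, every fifth roll is a drop and all others miss, which makes the floor easy to verify in an automated test before tuning the real probabilities.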

Instrument engagement KPIs—run count, session gap, quit points—to refine variability. Curate featured seeds and community challenges to highlight intriguing permutations and drive competition.

“Design layers that respect player agency while nudging exploration.”

| Layer | Example | Player Impact |
|---|---|---|
| Macro | World seeds, quest pools | Long-term novelty, fresh routes |
| Micro | Enemy mods, loot tiers | Moment-to-moment surprise |
| Progression | Meta unlocks, rotating modifiers | Encourages replay and choice |
| Events | Seasonal rules, featured seeds | Community engagement |

- Offer difficulty lanes and assist toggles so different skill levels share the same content with tuned intensity.

- Close the loop: surface telemetry and player feedback to tighten constraints and event design over time.

- For deeper methods and reproducible studies, consult the research overview.

Ethical and Strategic Considerations in AI-Driven Game Design

Teams must treat algorithmic outputs as authored artifacts with defined ownership. That framing helps studios manage legal, privacy, and safety risks while keeping design intent intact.

Intellectual property and originality

Clarify provenance for training sources and datasets to reduce IP exposure. Document licenses and include asset lineage in builds so legal reviews can trace origins quickly.

Player data privacy and responsible personalization

Minimize personal data collection and anonymize telemetry. Provide clear consent controls so players can opt into personalization or decline without losing core experiences.

Implementation risks and guardrails

Separate PCG pipelines from NPC behavior research to limit cross-risk. Enforce safety layers: blocked terms, lore consistency checks, and moderation queues before release.

“Define rollback plans, kill switches, and incident protocols before wide deployment.”

| Risk | Mitigation | Owner | When to Act |
|---|---|---|---|
| IP ambiguity | Document datasets; license audits | Legal & tools | Design review, pre-launch |
| Privacy leaks | Minimize, anonymize, consent | Data team | Telemetry onboarding |
| Unsafe outputs | Filters, moderation, narrative checks | Design + QA | Continuous, before live ops |
| Bias in characters | Dataset curation; diverse review panels | Content leads | Iteration and audits |

- Align contracts to clarify rights for generated assets.

- Train designers and engineers on ethical policy and tooling.

- Treat ethics as ongoing: monitor metrics, player feedback, and incidents.

Measuring Success: Evaluating Procedural Content Quality

A robust evaluation pipeline links algorithmic scores to how players actually play. That bridge makes sure generated levels meet design goals and support long-term engagement.

Fitness functions, constraint checks, and simulation-based testing

Define quantitative fitness functions per asset type—path length variance for levels, solvability for puzzles, and density for encounters.

Automate constraint validation: collision-free paths, spawn safety, and resource placement rules run in CI to catch regressions early.

Use simulation agents to stress-test level layouts at scale and surface dead ends, difficulty spikes, or pacing issues.
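
A batch-level quality gate built on these ideas could be as small as the sketch below. It assumes an upstream step has already measured each generated level and produced a path length, or `None` when the level is unsolvable. The thresholds and the report fields are placeholders, not recommended values.

```python
from statistics import mean, pvariance

def batch_report(path_lengths, min_solvable_rate=0.95, min_variance=4.0):
    """Summarize a batch of generated levels and decide whether it passes the gate.

    path_lengths: one entry per level; None marks an unsolvable level.
    """
    solved = [p for p in path_lengths if p is not None]
    solvable_rate = len(solved) / len(path_lengths) if path_lengths else 0.0
    # Variance across levels is a rough proxy for variety in route length.
    variance = pvariance(solved) if len(solved) > 1 else 0.0
    return {
        "solvable_rate": solvable_rate,
        "mean_length": mean(solved) if solved else 0.0,
        "length_variance": variance,
        "passed": solvable_rate >= min_solvable_rate and variance >= min_variance,
    }

report = batch_report([10, 14, 18, 22])
```

Run in CI, a report like this can block a release when a generator change quietly lowers solvability or collapses variety, before any player sees the result.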

Player-centric KPIs: retention, session length, and satisfaction

Triangulate automated scores with player data—retention curves, session length, and satisfaction surveys—to measure real impact on gameplay experiences.

Establish dashboards that join seed, constraints, and model version with outcome metrics. Run A/B seeds and parameter sweeps to isolate what improves engagement without harming fairness.

- Combine numeric checks with expert reviews for readability and flow.

- Monitor drift after updates and gate releases behind quality thresholds.

- Analyze player telemetry to refine fitness functions and the decision framework for when to regenerate or retire variants.

“Measure, iterate, and align metrics to player outcomes—technical scores only matter when they improve the game.”

The Future of AI and PCG in Game Development

Hybrid stacks are reshaping how studios stitch systems, art, and narrative into living game worlds.

Combined methods—GANs, reinforcement learning, and large language models—are converging to deliver higher quality and tighter editorial control. WFC-style scaffolds will set layout rules, learned evaluators will guide search, and language models will author quests and ambient lore.

Trends toward hybrid models

Expect layered pipelines where each method plays a clear role. Machine learning handles texture and sequence tasks while search methods tune playability. This mix raises fidelity without losing designer intent.

Emerging roles and workflows

New roles will appear: model curators, constraint designers, and data ops staff embedded with level and systems teams. Tooling will be interoperable—node editors for constraints, prompt libraries, and reproducible seeds.

- Continuous loops: live telemetry shapes fitness and versioned models.

- Platform concerns: edge inference, caching for service-backed models, and cost-aware deployment.

- Education: cross-training helps designers and engineers collaborate faster.

The market impact is clear: faster content cycles, richer personalization, and higher player expectations. Studios that treat these methods as a disciplined capability—not a one-off experiment—will capture the future advantage.

“The studio that builds repeatable pipelines and governance will win sustained reach and player trust.”

Conclusion

The closing point connects strategy to measurable outcomes: designers and engineers should treat generation as a repeatable studio capability that scales creative ambition.

Start with clear goals and simple rules. The toolbox—constructive rules, search-based optimization, ML models, and LLM-backed narrative layers—gives teams practical options to produce varied, high-quality assets and gaming experiences.

Implementation needs robust schemas, validation checks, and iterative playtesting. Track telemetry and fitness functions so teams spend less time fixing and more time refining player-facing systems over time.

Ethics and governance are non-negotiable: protect data, document provenance, and keep human curation as a final gate. Done well, these practices deliver fair, replayable gameplay experiences that sustain engagement and shape the future of game development.

FAQ

What is procedural content generation and why does it matter for future-ready game development?

Procedural content generation (PCG) creates game assets, levels, and scenarios algorithmically rather than by hand. It matters because it scales content, reduces production time, and enables greater replayability. Studios can deliver varied experiences to players while saving artist hours and accelerating iteration.

How has the technology behind algorithmic generation evolved over time?

Generation began with simple rule-based systems in roguelikes and tile grammars, then progressed to search-based methods and grammar systems. Recently, machine learning models—Markov chains, autoencoders, GANs, LSTMs, and transformer-style models—have expanded what can be created. Hybrid systems that combine classical algorithms with learned models now offer the best trade-offs for quality and control.

Which types of game elements are best suited for generated production?

Common targets include levels and maps, terrain, NPCs and encounters, quests, item variants, textures, and music fragments. Derived content like difficulty curves, economy parameters, and narrative beats can also be generated to support personalized pacing and long-term engagement.

When should generation happen offline versus during live gameplay?

Use offline generation for large, compute-heavy assets and precomputed variants—this fits open-world assets, global level layouts, and vetted quest trees. Use online or real-time generation for adaptive encounters, dynamic rewards, and difficulty tweaks tied to player actions. The hybrid approach mixes both: precompute templates then refine them live.

What algorithm classes should developers consider first?

Start with constructive and constraint-based methods (tile grammars, Wave Function Collapse) for predictable structure. Add search-based techniques (evolutionary algorithms, MCTS) for optimization under constraints. Introduce ML models—autoencoders, GANs, and transformers—when examples exist and you need stylistic or high-dimensional outputs. Combine methods when control and creativity both matter.

How do creators choose the right model for a content task?

Match the algorithm to the problem: use grammars and constraint solvers for spatial layouts; search or optimization for balancing and fitness-driven goals; generative models for textures, dialogue, or music. Consider data availability, compute cost, and the required level of editorial control before committing.

What practical steps should a team follow to implement generation in a game?

Define clear goals and constraints tied to player experience and scope. Prototype chosen algorithms on small assets. Integrate outputs into the engine with proper schemas and tooling. Then playtest, measure against KPIs, and iterate with automated tests and human review—preferably using an agile cycle.

How can teams ensure generated content meets quality and safety standards?

Use fitness functions, constraint checks, and simulation-based validation to filter outputs automatically. Combine automated tests with curator review and player feedback loops. Add guardrails for offensive or unintended content and maintain traceability for intellectual property checks.

What role does personalization play, and how is it implemented?

Personalization adapts difficulty, pacing, and rewards to player behavior to increase retention. Implement it by collecting lightweight telemetry, building player models, and tuning generation parameters. Respect privacy by anonymizing data and offering clear consent options.

How can smaller studios scale generation without large budgets?

Focus on high-impact areas—level templates, procedural encounters, or loot systems—where automation saves the most time. Use open-source tools and lightweight ML models, and leverage constructive methods that need less compute. Prioritize tooling that integrates with existing pipelines to reduce overhead.

What metrics should teams track to measure success of generated systems?

Track player-centric KPIs like retention, session length, completion rates, and satisfaction surveys. Monitor technical metrics such as generation time, failure rate, and constraint violations. Use A/B tests to compare generated variants against handcrafted baselines.

Are there legal or ethical concerns when using generated assets?

Yes. Teams must manage copyright risks when models are trained on external data, ensure generated content does not infringe third-party IP, and maintain transparency about personalization. Protect player privacy and avoid manipulative mechanics that exploit behavioral data.

When do hybrid systems outperform single-method approaches?

Hybrid systems excel when a project needs both strict control and creative variety. For example, combine grammars for layout coherence with learned models for aesthetic detail. Hybrids reduce failure cases of pure ML approaches while delivering richer outputs than pure rule systems.

How can studios prepare workflows for future advancements in generation technology?

Invest in modular tooling, reusable data pipelines, and robust telemetry. Train teams on both classical algorithms and modern machine learning. Cultivate playtesting cultures and maintain evaluation frameworks so new models can be validated quickly and safely.