There are mornings when the scale of what we discard feels personal. A city’s refuse tells a story: choices, habits, missed opportunities. Readers who care about environmental outcomes often feel both urgency and hope.

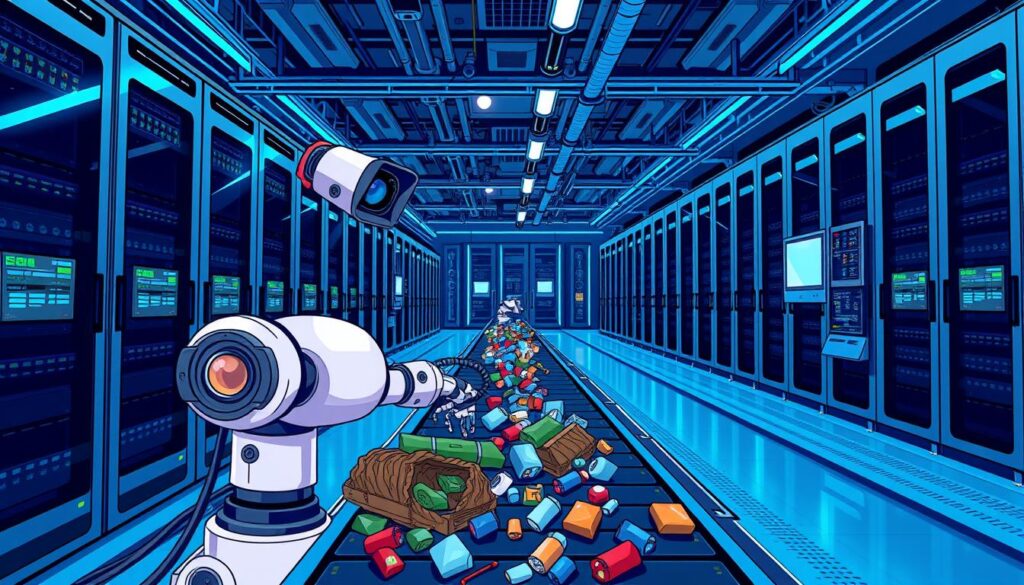

This introduction frames a practical path forward. It explains how computer vision and edge computing systems turn chaotic image streams into clear signals. Those signals guide sorting on fast conveyors and reduce hazardous manual work.

The world generated 2.01 billion tons of municipal solid waste in 2016, and that figure is on track to rise sharply. Modern systems combine cameras, lighting, inference, and mechatronics to pick waste items in real time. That blend of data and vision helps recycling facilities meet tighter standards and protect workers.

Key Takeaways

- Vision-driven systems improve safety and throughput over manual sorting.

- Image-based detection lets operations classify plastic, metal, and paper on the fly.

- Edge computers and sensors enable real-time decisions on conveyors.

- Continuous field data closes the loop and improves future performance.

- An end-to-end approach spanning hardware, data, models, and operations turns pilots into production-grade systems.

Why Waste-Sorting Robots Matter Now: Efficiency, Accuracy, and Safety in U.S. Recycling

Recycling lines in the United States must adapt as material streams grow and standards tighten. Rising volumes make manual sorting costly and inconsistent. Facilities face higher contamination and lower bale quality when people try to sort many different types of items at speed.

From rising waste volumes to stricter regulations

Municipal waste volumes are projected to increase sharply by 2050, raising operating costs and regulatory pressure. Facilities must meet tighter specifications or face rejected loads and lower prices for recyclable materials.

How computer vision reduces contamination and boosts recovery rates

Camera arrays and real-time algorithms scan images on the line, classifying materials and sending fast pick commands to sorting systems. The result: higher accuracy, fewer misclassifications, and improved recovery rates.

- Efficiency: systems keep pace with industrial conveyors, raising throughput.

- Quality: better identification leads to cleaner bales and higher market value.

- Safety: machines handle hazardous items, letting staff focus on supervision and maintenance.

Facilities also gain actionable data to track contamination trends and optimize shifts. For a practical view on cost reductions and field deployments, see this study on robotic sorting in recycling.

AI Use Case – Waste-Sorting Robots with Computer Vision: A Practical How-To Blueprint

A practical blueprint begins with clear targets: which materials matter, how fast the line must run, and what recovery rate to aim for. Define target categories (PET, HDPE, aluminum, paper), set throughput and accuracy KPIs, and tie them to bale specifications and market value.
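One way to make those targets concrete is to keep them in a small, versioned config and check measured shift results against it. The sketch below is a minimal illustration; the `LineTargets` class, the `kpi_gaps` helper, and every threshold are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LineTargets:
    """Hypothetical KPI targets for one sorting line; all values are placeholders."""
    categories: tuple = ("PET", "HDPE", "aluminum", "paper")
    min_throughput_items_per_min: float = 60.0
    min_accuracy: float = 0.90   # share of picks that land in the correct bale
    min_recovery: float = 0.85   # share of target material in the infeed that is captured


def kpi_gaps(targets: LineTargets, measured: dict) -> dict:
    """Return the shortfall for each KPI below target; an empty dict means all targets are met."""
    checks = {
        "throughput": (measured["throughput"], targets.min_throughput_items_per_min),
        "accuracy": (measured["accuracy"], targets.min_accuracy),
        "recovery": (measured["recovery"], targets.min_recovery),
    }
    return {name: target - value for name, (value, target) in checks.items() if value < target}
```

For example, `kpi_gaps(LineTargets(), {"throughput": 72, "accuracy": 0.93, "recovery": 0.80})` reports only a recovery gap of about 0.05, which points the team at recovery rather than line speed.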

Hardware and mechanisms

Select high-speed cameras, lenses matched to field of view, and controlled lighting to stabilize images across shifts. Add depth or IR sensors where mixed streams or occlusion hamper detection.
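Matching the lens to the field of view, and the exposure time to belt speed, is simple arithmetic. The sketch below assumes the thin-lens approximation (working distance much larger than focal length) and uses hypothetical numbers for sensor width, mounting height, and belt speed; real values should come from the camera and lens datasheets.

```python
def focal_length_mm(sensor_width_mm: float, working_distance_mm: float, fov_width_mm: float) -> float:
    """Thin-lens approximation: f ~= sensor width * working distance / field-of-view width.
    Only valid when the working distance is much larger than the focal length."""
    return sensor_width_mm * working_distance_mm / fov_width_mm


def max_exposure_us(fov_width_mm: float, pixels_across: int, belt_speed_m_s: float,
                    max_blur_px: float = 1.0) -> float:
    """Longest exposure (microseconds) that keeps motion blur under max_blur_px on a moving belt."""
    mm_per_px = fov_width_mm / pixels_across
    belt_speed_mm_per_us = belt_speed_m_s * 1e-3  # 1 m/s = 1000 mm per 1e6 us
    return max_blur_px * mm_per_px / belt_speed_mm_per_us
```

With an 11.3 mm wide sensor mounted 1.5 m above a 1.2 m wide belt imaged at 1920 pixels across, `focal_length_mm(11.3, 1500, 1200)` gives about 14.1 mm, and at 3 m/s `max_exposure_us(1200, 1920, 3.0)` allows roughly 208 µs before blur exceeds one pixel. Exposures that short are one reason bright, controlled lighting matters.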

Choose actuators by item size and cycle time: robotic arms with grippers or suction for precise picks; air jets or diverters for rapid ejections on fast lines.
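The trade-off can be written down as a design-time rule of thumb. This is a hypothetical sketch: the `choose_actuator` function, the 60 picks-per-minute arm limit, and the 50 g air-jet limit are illustrative assumptions, and real limits come from the equipment vendor.

```python
from enum import Enum


class Actuator(Enum):
    ROBOT_SUCTION = "robot arm with suction cup"
    ROBOT_GRIPPER = "robot arm with mechanical gripper"
    AIR_JET = "air-jet ejector"


def choose_actuator(item_mass_g: float, porous_or_irregular: bool, required_picks_per_min: float,
                    arm_max_picks_per_min: float = 60.0, air_jet_max_mass_g: float = 50.0) -> Actuator:
    """Design-time rule of thumb; every threshold is a hypothetical placeholder."""
    # Fast lines with light items: an air jet can fire faster than an arm can cycle.
    if required_picks_per_min > arm_max_picks_per_min and item_mass_g <= air_jet_max_mass_g:
        return Actuator.AIR_JET
    # Suction needs a surface it can seal against; porous or irregular items need a gripper.
    if porous_or_irregular:
        return Actuator.ROBOT_GRIPPER
    return Actuator.ROBOT_SUCTION
```

Items too heavy for a jet on a line that outpaces one arm fall through to an arm here, which in practice means adding arms or slowing the belt.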

Data, preprocessing, and modeling

Capture diverse images and videos under varied lighting, soiling, and overlap. Maintain high-quality labels that reflect real plant variability.

Preprocess by normalizing exposure, reducing noise, and applying augmentation to simulate contamination and rotation. For models, weigh object detection (bounding boxes) against segmentation, which copes better with overlapping items; start with convolutional neural networks (CNNs) and iterate.
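A minimal NumPy sketch of those preprocessing and augmentation steps follows. The function names and parameters are hypothetical; the 3x3 box filter stands in for whatever denoising a real pipeline uses, and production code would more likely rely on a dedicated augmentation library.

```python
import numpy as np


def normalize_exposure(img: np.ndarray, low_pct: float = 1.0, high_pct: float = 99.0) -> np.ndarray:
    """Percentile stretch so frames from bright and dim shifts land in a similar [0, 1] range."""
    lo, hi = np.percentile(img, [low_pct, high_pct])
    scaled = (img.astype(np.float32) - lo) / max(hi - lo, 1e-6)
    return np.clip(scaled, 0.0, 1.0)


def box_denoise(img: np.ndarray) -> np.ndarray:
    """3x3 mean filter over height and width; a simple stand-in for a real denoiser."""
    pad = ((1, 1), (1, 1)) + ((0, 0),) * (img.ndim - 2)  # leave any channel axis unpadded
    padded = np.pad(img.astype(np.float32), pad, mode="edge")
    h, w = img.shape[:2]
    acc = np.zeros(img.shape, dtype=np.float32)
    for dy in range(3):
        for dx in range(3):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / 9.0


def augment(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Random 90-degree rotation, optional flip, brightness jitter, and dark 'dirt' patches."""
    out = np.rot90(img, k=int(rng.integers(4)))
    if rng.random() < 0.5:
        out = np.fliplr(out)
    out = np.array(out * rng.uniform(0.7, 1.3), dtype=np.float32)  # glare/dim-light jitter (a copy)
    h, w = out.shape[:2]
    for _ in range(int(rng.integers(0, 4))):  # zero to three soiling patches
        y, x = int(rng.integers(0, h)), int(rng.integers(0, w))
        out[y:y + h // 10 + 1, x:x + w // 10 + 1] *= 0.3
    return np.clip(out, 0.0, 1.0)
```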

Deploy initial inference at the edge to minimize latency and map predictions to deterministic actuator rules. Version data, models, and decision logic to ensure consistent operations and measurable gains in waste management reporting.
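Deterministic mapping means the same detection always yields the same action, and versioning means each action can be traced to the rule set that produced it. The sketch below assumes a hypothetical `Detection` class and a hypothetical rule table of per-class confidence thresholds and target bins, and derives a short version tag by hashing that table.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float


# Hypothetical rule table: label -> (minimum confidence, target bin).
RULES = {"PET": (0.80, "bin_pet"), "HDPE": (0.80, "bin_hdpe"), "aluminum": (0.70, "bin_al")}
# Short, stable tag derived from the rule table; any edit to RULES changes it.
RULES_VERSION = hashlib.sha256(json.dumps(RULES, sort_keys=True).encode()).hexdigest()[:12]


def decide(det: Detection) -> tuple:
    """Map one detection to an action. No randomness or hidden state: same input, same output."""
    rule = RULES.get(det.label)
    if rule is None or det.confidence < rule[0]:
        return ("pass", RULES_VERSION)  # unknown class or low confidence: let it ride to residue
    return ("pick:" + rule[1], RULES_VERSION)
```

Logging the returned version tag with each pick lets later audits tie a mispick to the exact rules in force at the time.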

System Architecture: From Vision Models to Robotic Actuation and IoT Integration

Real-time pipelines turn conveyor images into deterministic pick signals that keep lines moving at scale. The streaming architecture captures images continuously, preprocesses them, and runs inference at the edge to meet millisecond decision windows. This minimizes latency and preserves line throughput for modern recycling operations.
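The decision window is set by geometry: an item needs a fixed time to travel from the camera to the pick point, and capture, preprocessing, inference, and actuation must all finish inside it. A minimal sketch, assuming hypothetical distances, stage latencies, and a 20 % safety margin:

```python
def decision_window_ms(camera_to_pick_mm: float, belt_speed_m_s: float) -> float:
    """Travel time from camera to pick point. 1 m/s equals 1 mm/ms, so mm / (m/s) gives ms."""
    return camera_to_pick_mm / belt_speed_m_s


def fits_budget(stage_latencies_ms: dict, actuation_ms: float, window_ms: float,
                margin: float = 0.2) -> bool:
    """True if all pipeline stages plus actuation finish within the window, keeping a safety margin."""
    total_ms = sum(stage_latencies_ms.values()) + actuation_ms
    return total_ms <= window_ms * (1.0 - margin)
```

At 3 m/s with 1.5 m between camera and pick point, the window is 500 ms; stages totalling 98 ms including actuation fit comfortably, while moving the camera closer or speeding up the belt shrinks that room quickly.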

Robotic control relies on fast localization, grasp selection, and path planning. Pick commands map model outputs to grippers, suction tools, or air jets based on item size and material. Cycle-time optimization and coordinated path planning help hit performance targets; many systems reach up to 80 picks per minute while running 24/7.
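Throughput targets translate into robot counts with one line of arithmetic. The sketch below takes the 80 picks-per-minute figure as a per-arm upper bound and applies an assumed 90 % pick success rate; both numbers are placeholders to replace with measured values.

```python
import math


def arms_needed(target_items_per_min: float, picks_per_arm_per_min: float = 80.0,
                pick_success_rate: float = 0.9) -> int:
    """Robots required to keep up with a target pick stream (rates are placeholders)."""
    effective_per_arm = picks_per_arm_per_min * pick_success_rate
    return math.ceil(target_items_per_min / effective_per_arm)
```

`arms_needed(200)` returns 3, since each arm contributes an effective 72 picks per minute. Sizing against peak rather than average arrival rates avoids bottlenecks when material arrives in bursts.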

Safety and process controls are mandatory. E-stops, light curtains, guarded zones, and interlocked PLC logic protect operators and tie directly into the control loop. These protections ensure high performance without compromising safety.

IoT sensors supply bin-level telemetry, line health, and predictive alerts. Central services aggregate data for fleet analytics, while edge computers handle low-latency detection and actuator commands. Integration with existing PLC/SCADA systems relies on standard industrial protocols (for example, OPC UA or Modbus TCP) for reliable interoperability across facilities.
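A predictive bin alert can be as simple as fitting a fill rate to recent level readings and extrapolating to full, computed at the edge and sent upstream as a small JSON message. The `BinReading` fields, the 15-minute warning threshold, and the message shape are hypothetical; a real deployment would follow the facility's own telemetry schema.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class BinReading:
    bin_id: str
    t_s: float       # seconds since an arbitrary start time
    fill_pct: float  # 0-100 from a bin level sensor


def minutes_until_full(readings: List[BinReading]) -> Optional[float]:
    """Least-squares fill rate extrapolated to 100 %; None if the bin is not filling."""
    n = len(readings)
    if n < 2:
        return None
    t_mean = sum(r.t_s for r in readings) / n
    f_mean = sum(r.fill_pct for r in readings) / n
    num = sum((r.t_s - t_mean) * (r.fill_pct - f_mean) for r in readings)
    den = sum((r.t_s - t_mean) ** 2 for r in readings)
    if den == 0 or num <= 0:
        return None
    rate_pct_per_s = num / den
    return (100.0 - readings[-1].fill_pct) / rate_pct_per_s / 60.0


def alert_payload(readings: List[BinReading], warn_minutes: float = 15.0) -> Optional[str]:
    """JSON message for the central service when a bin is predicted to fill soon, else None."""
    eta = minutes_until_full(readings)
    if eta is None or eta > warn_minutes:
        return None
    return json.dumps({"type": "bin_full_soon", "eta_min": round(eta, 1),
                       "latest": asdict(readings[-1])})
```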

Continuous learning closes the loop: labeled production feedback refines models, reduces mispicks, and improves detection for challenging types of waste. The result is a resilient, scalable platform that ties vision outputs to recovery, contamination, and uptime metrics.

Training for Accuracy: Datasets, Model Iteration, and Performance Tuning

High-quality training data anchors reliable sorting and long-term gains in material recovery. Collect images and videos across shifts, lines, and seasons to capture lighting variance, occlusion, and soiling. Diverse data reduces surprises when systems run under real plant conditions.

Labeling matters. Define clear categories and label conventions so models learn consistent boundaries between plastics, metals, and paper. Maintain train/validation splits by time or line to prevent overfitting to a single background.
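Splitting by time and by line can be expressed as a single rule: anything captured on or after a cutoff date, or from a held-out line, goes to validation. The sample dictionaries with `captured` and `line` keys are an assumed format for illustration.

```python
from datetime import date
from typing import List, Set, Tuple


def split_by_time_and_line(samples: List[dict], cutoff: date,
                           holdout_lines: Set[str]) -> Tuple[List[dict], List[dict]]:
    """Validation gets frames captured on or after `cutoff` and every frame from a held-out line."""
    train, val = [], []
    for s in samples:
        is_val = s["captured"] >= cutoff or s["line"] in holdout_lines
        (val if is_val else train).append(s)
    return train, val
```

Because the held-out line contributes no training frames, its validation scores approximate how the model would behave against a new background.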

Evaluation and metrics

Measure mAP, but track class-wise precision and recall too. Use confusion matrices to spot costly misclassifications and apply cost-weighted errors where market value differs by material.
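Class-wise precision and recall fall straight out of a confusion matrix, and a cost matrix turns errors into an expected penalty per item. The sketch below assumes rows are true classes and columns are predictions; the class order and any cost values are hypothetical. It does not compute mAP, which needs box-level matching and is usually taken from the training framework's evaluator.

```python
import numpy as np


def per_class_precision_recall(cm: np.ndarray):
    """cm[i, j] counts items whose true class is i and predicted class is j."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # column totals: how often each class was predicted
    actual = cm.sum(axis=1)     # row totals: how often each class truly occurred
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    return precision, recall


def cost_weighted_error(cm: np.ndarray, cost: np.ndarray) -> float:
    """Average penalty per item; cost[i, j] is the assumed cost of calling class i class j."""
    return float((cm * cost).sum() / cm.sum())
```

A cost matrix that charges more for sending aluminum into a plastics bale than for confusing PET with HDPE can rank two confusion matrices very differently from plain accuracy.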

Iterative training and continuous learning

Route mispicks into a curated dataset and fine-tune convolutional neural networks and other architectures on these hard examples. Retrain on a regular cadence and A/B test updates before plant-wide deployment.
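Routing can start as a simple selection rule over audit records: confidently wrong predictions first, then uncertain ones. The record fields (`frame_id`, `pred`, `conf`, `audited_label`) and the 0.6 confidence cut-off are assumptions for this sketch.

```python
from typing import List


def select_hard_examples(records: List[dict], low_conf: float = 0.6, limit: int = 1000) -> List[dict]:
    """Choose frames to label and fine-tune on.

    Each record is assumed to look like
    {"frame_id": ..., "pred": str, "conf": float, "audited_label": str or None}.
    Audited errors come first (most confident mistakes at the top), then
    predictions whose confidence is below `low_conf`.
    """
    def is_error(r: dict) -> bool:
        return r["audited_label"] is not None and r["audited_label"] != r["pred"]

    candidates = [r for r in records if is_error(r) or r["conf"] < low_conf]
    # Errors sort by descending confidence; uncertain predictions by ascending confidence.
    return sorted(candidates, key=lambda r: (0, -r["conf"]) if is_error(r) else (1, r["conf"]))[:limit]
```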

Sensor fusion to raise detection quality

Combine vision outputs with NIR for polymer ID and XRF for metals. Fusion reduces visual ambiguity and improves material classification for mixed items.
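One common way to combine sensors is late fusion: each sensor produces class probabilities for the same item and the results are merged. The sketch below uses a weighted product of the two distributions (log-linear pooling); the class list, the weights, and the assumption that RGB and NIR readings are already registered to the same item are all hypothetical.

```python
import numpy as np

CLASSES = ["PET", "HDPE", "PP", "aluminum"]


def fuse(rgb_probs: np.ndarray, nir_probs: np.ndarray,
         w_rgb: float = 0.4, w_nir: float = 0.6) -> np.ndarray:
    """Log-linear pooling: p(c) proportional to rgb(c)**w_rgb * nir(c)**w_nir, renormalized."""
    eps = 1e-9  # avoids log(0) when a sensor gives a class zero probability
    log_p = w_rgb * np.log(rgb_probs + eps) + w_nir * np.log(nir_probs + eps)
    p = np.exp(log_p - log_p.max())  # subtract the max for numerical stability
    return p / p.sum()
```

If RGB cannot separate a clear PET bottle from a clear PP tray but NIR strongly favors PET, the fused result favors PET as well. An XRF channel for metals would add a third weighted term.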

- Augmentation: simulate glare, blur, dirt, and overlap.

- Hygiene: strict splits, versioning, and model logs.

- Impact: lower contamination, higher bale quality, and better yield.

| Challenge | Data Strategy | Metric | Outcome |
|---|---|---|---|
| Lighting shifts | Collect dawn/dusk samples; normalize exposure | Class-wise recall | Stable detection across shifts |
| Occlusion & overlap | Annotate partial objects; add segmentation labels | mAP & confusion analysis | Fewer missed picks |
| Similar-looking polymers | Fuse NIR/XRF channels with images | Precision on polymer classes | Reduced false positives for plastics |
| Production drift | Continuous feedback loop from mispicks | Model performance over time | Timely retraining and stable quality |

For a technical reference on model architectures and system-level lessons, consult this artificial-intelligence-based system paper.

Deployment and Operations: Edge Computing, Predictive Maintenance, and Quality Control

On-site inference shifts decision-making to the plant floor, removing network lag and stabilizing sorting outcomes. Edge computing keeps latency low so conveyors run at rated speed and picks occur within millisecond windows.

Deployment playbooks commonly package models in containers on the edge computer, include automated health checks, and use staged rollouts with clear rollback paths. This approach reduces disruption and speeds integration with PLC/SCADA systems.
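Each rollout stage needs an explicit promote-or-rollback gate. The sketch below compares a candidate model against the current one on a canary line; the `CanaryResult` fields, the one-point accuracy tolerance, and the 50 ms p99 latency budget are hypothetical values.

```python
from dataclasses import dataclass


@dataclass
class CanaryResult:
    accuracy: float          # audited pick accuracy on the canary line
    p99_latency_ms: float    # 99th-percentile end-to-end inference latency
    health_checks_passed: bool


def rollout_decision(baseline: CanaryResult, candidate: CanaryResult,
                     max_accuracy_drop: float = 0.01, max_p99_latency_ms: float = 50.0) -> str:
    """Gate for one rollout stage: 'promote', or 'rollback: <reason>'."""
    if not candidate.health_checks_passed:
        return "rollback: health checks failed"
    if candidate.p99_latency_ms > max_p99_latency_ms:
        return "rollback: latency budget exceeded"
    if candidate.accuracy < baseline.accuracy - max_accuracy_drop:
        return "rollback: accuracy regression"
    return "promote"
```

Running the same gate at each stage (one camera, then one line, then the full plant) gives the staged rollout a clear rollback trigger.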

Operational analytics track recovery rates, contamination, and pick distribution. Dashboards surface performance drift and help supervisors tune thresholds and vision decision policies. Regular spot checks and bale audits feed labeled data back into training pipelines to protect quality.
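Recovery rate and contamination are simple mass ratios, but they use different denominators, which makes them easy to mix up. The sketch below assumes the target mass in the infeed is estimated from composition audits and the bale figures come from bale audits; the numbers in the usage note are hypothetical.

```python
def recovery_rate(target_in_bale_kg: float, target_in_infeed_kg: float) -> float:
    """Share of the target material entering the line that ends up in its bale."""
    return target_in_bale_kg / target_in_infeed_kg


def contamination_rate(non_target_in_bale_kg: float, bale_mass_kg: float) -> float:
    """Share of the bale's mass that is not the target material (1 - purity)."""
    return non_target_in_bale_kg / bale_mass_kg
```

If 1,000 kg of PET enters the line and the PET bale weighs 900 kg with 855 kg of PET in it, recovery is 0.855 and contamination is 0.05.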

Predictive maintenance analyzes machine telemetry to spot bearing wear, actuator drift, or clogged nozzles before failures occur. Scheduled interventions cut unplanned downtime and improve energy efficiency per ton sorted.
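A lightweight starting point for that kind of early warning is an exponentially weighted mean and variance over a health signal, flagging readings that stray several standard deviations from recent behavior. The signal (for example, actuator response time), `alpha`, the warm-up length, and `k` are assumptions for this sketch; bearing-wear detection often relies on frequency-domain vibration features rather than a single scalar.

```python
from typing import List


def ewma_alerts(values: List[float], alpha: float = 0.1, warmup: int = 20, k: float = 3.0) -> List[int]:
    """Indices of readings more than k exponentially weighted std devs from the running mean."""
    alerts = []
    mean, var = values[0], 0.0
    for i in range(1, len(values)):
        x = values[i]
        std = var ** 0.5
        # Compare against the statistics from before this reading is folded in.
        if i >= warmup and std > 0 and abs(x - mean) > k * std:
            alerts.append(i)
        diff = x - mean
        incr = alpha * diff
        mean += incr
        var = (1 - alpha) * (var + diff * incr)  # incremental exponentially weighted variance
    return alerts
```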

Model drift is caught by comparing live outputs to human audits; when shifts appear, retraining is scheduled and deployed during low-volume windows. Staff training and documented change management ensure safe, measurable gains in waste management and recycling efficiency.
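Comparing live outputs with human audits becomes more reliable when it accounts for sample size. This sketch computes a Wilson score interval for the model-versus-auditor agreement rate and flags drift only when the interval's upper bound falls below the baseline; the baseline figure and the 95 % level (z = 1.96) are assumptions.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple:
    """Wilson score interval for a binomial proportion (about 95 % with z = 1.96)."""
    if n == 0:
        raise ValueError("need at least one audited item")
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


def drift_detected(agree: int, audited: int, baseline_agreement: float) -> bool:
    """Flag drift when even the optimistic bound of live agreement is below the baseline."""
    _, upper = wilson_interval(agree, audited)
    return upper < baseline_agreement
```

With 440 of 500 audited items in agreement against a 0.94 baseline, the upper bound is about 0.906, so drift is flagged; 465 of 500 gives an upper bound of about 0.949 and no flag.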

Industry Landscape and Applications in Recycling Facilities

Across North America, deployments show how targeted detection and analytics turn noisy streams into market-ready materials. A growing number of commercial installations suggest that automated picking can rival experienced human sorters.

Robotic picking benchmarks and real-world adoption

Vendors such as AMP Robotics and ZenRobotics report systems that achieve up to 80 picks per minute while operating 24/7, and they describe deployments across many facilities, which suggests the approach scales to high-throughput lines.

Targeting high-value streams

Operators focus first on PET, HDPE, aluminum, OCC (old corrugated cardboard), textiles, and e-waste. These fractions deliver clear gains in bale value and are well suited to sensor fusion for polymer and metal detection.

Integration with existing lines: retrofits, safety, and compliance

Retrofits align pickers and optical sorters with existing conveyors and PLCs while preserving guarding and interlocks. Greyparrot-style analytics provide real-time composition data for compliance and market reporting.

Practical advice: pilot one stream, tune models to local commodity demand, then scale across recycling facilities to accelerate adoption.

For a deeper look at instrumented sorting strategies, see smart recycling instrumentation.

Conclusion

A pragmatic end-to-end approach delivers steady improvements in throughput, uptime, and material value.

Artificial intelligence and computer vision elevate waste management by improving sorting outcomes and operational stability. Teams should define KPIs, pick appropriate hardware, train robust models, and deploy at the edge while tracking data continuously.

These steps yield cleaner bales, higher recovery, and less rework, benefits that improve revenue and compliance for recycling facilities. Start with one focused stream, verify gains, then scale systems and processes to optimize sorting across lines.

Edge inference and predictive maintenance keep systems aligned with throughput targets. Governance (version control, audits, and safety interlocks) preserves trust. Organizations that operationalize these capabilities will lead in sustainable processes and circular outcomes for materials and communities.

FAQ

What is the core benefit of deploying waste-sorting robots with vision systems in U.S. recycling facilities?

These systems improve throughput, reduce contamination, and protect workers by automating repetitive picks. Vision-guided machines identify materials faster than manual sorting, boosting recovery rates for PET, HDPE, aluminum, and cardboard while lowering operational costs.

Which materials can vision-based sorting reliably identify and separate?

Modern models can detect common recyclables such as clear and colored PET bottles, HDPE containers, aluminum cans, OCC (old corrugated cardboard), certain textiles, and components of e-waste. Accuracy improves when combined with infrared or XRF sensors for polymers and metals.

How do facilities prepare data for model training and validation?

Teams capture diverse images and video from conveyors, label items across lighting, occlusion, and soiling conditions, and apply augmentation to expand coverage. Datasets should represent mixed streams and rare items; continuous field annotations help close performance gaps.

What model types are best for mixed waste streams: detection or segmentation?

Object detection (CNN-based) performs well for discrete picks, while segmentation excels where precise outlines matter—such as flattened cardboard or nested plastics. Many deployments use hybrid pipelines to balance speed and granularity.

Where should inference run: cloud or edge?

Edge inference minimizes latency and keeps throughput high on busy lines. Running models at the edge also reduces bandwidth needs and avoids cloud dependence for real-time pick decisions; noncritical analytics can sync to cloud systems.

How do operators measure system performance after deployment?

Key KPIs include throughput (items/min), pick accuracy, recovery rate per material, contamination reduction, and mean time between failures. Use confusion analysis and mAP to spot categories needing retraining or sensor fusion.

What role do additional sensors play in improving classification?

NIR, XRF, and hyperspectral sensors distinguish polymers and alloy types that RGB imaging struggles with. Sensor fusion increases precision for difficult streams, reducing mispicks and improving downstream bale quality.

How is safety integrated into robotic pick-and-place on conveyor lines?

Systems combine soft grippers, safe speed-limited robots, light curtains, and redundant interlocks. Functional safety standards and risk assessments guide retrofits and new installations to meet OSHA and industry requirements.

How often should models be retrained to handle drift and new materials?

Retrain cadence depends on stream variability—monthly updates suit dynamic lines, while quarterly may suffice for stable feeds. Continuous learning pipelines that capture mispicks and operator corrections accelerate improvement.

What are common retrofit challenges when integrating vision pickers into existing MRFs?

Challenges include conveyor geometry, inconsistent lighting, limited mounting space for cameras, and legacy controls. Successful retrofits plan for tailored lighting rigs, mounting brackets, and PLC/SCADA integration to avoid line disruptions.

How do organizations validate ROI for automated sorting systems?

ROI analyses compare reduced labor and landfill costs, higher material recovery, improved bale quality premiums, and lower contamination penalties. Pilot projects with measured KPIs over weeks provide realistic financial projections.
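A first-pass payback estimate from pilot KPIs can be sketched as capital cost divided by net monthly benefit. The benefit categories mirror the ones above, but every figure is hypothetical, and the calculation ignores discounting, financing, and ramp-up.

```python
from typing import Dict


def simple_payback_months(capex: float, monthly_benefits: Dict[str, float], monthly_opex: float) -> float:
    """Months until cumulative net benefit covers the up-front cost (no discounting)."""
    net = sum(monthly_benefits.values()) - monthly_opex
    return float("inf") if net <= 0 else capex / net
```

With $300,000 of capital cost, $20,000 per month in combined benefits, and $5,000 per month in operating cost, payback is 20 months.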

What maintenance and monitoring practices keep systems performing at scale?

Implement predictive maintenance for cameras, actuators, and grippers; monitor model drift, camera focus, and conveyor vibrations. Remote diagnostics and automated alerts help teams intervene before performance drops.

Can small MRFs benefit from these technologies, or are they only for large facilities?

Small and mid-size facilities can benefit via modular, lower-cost pick stations or service models that offer robotic sorting as a managed service. These options reduce capital burden while improving recovery and safety.

How do privacy and data policies apply when recording images on sorting lines?

Facilities should define data retention policies, anonymize or avoid capturing worker faces where possible, and comply with workplace privacy regulations. Clear governance ensures ethical use of images and model training data.