While 83% of business leaders claim to use analytics daily, fewer than half understand how to identify true patterns in their numbers. This gap often stems from overlooking foundational statistical concepts that transform raw information into strategic assets.

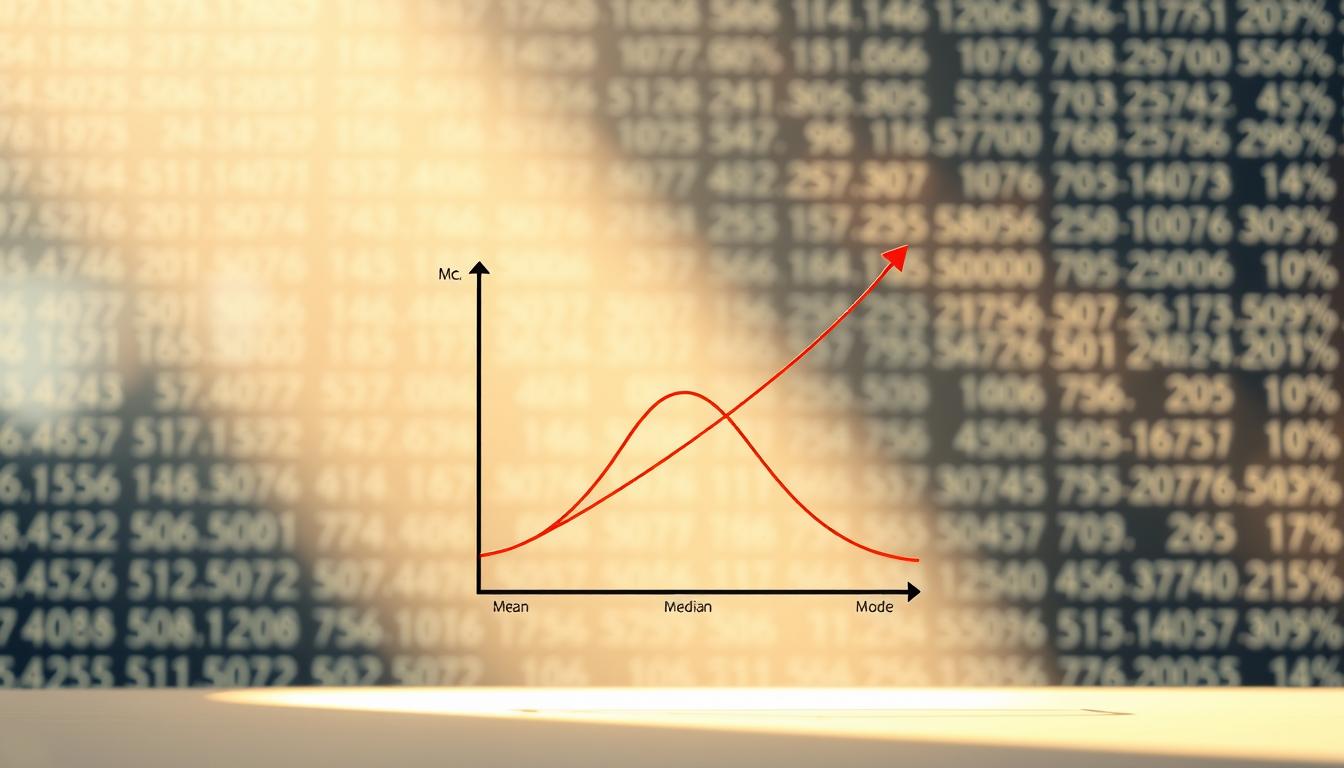

At the heart of data interpretation lie three powerful values: mean, median, and mode. These cornerstone calculations act as compass points in numerical landscapes, revealing hidden trends and outliers that shape decision-making. From healthcare research to stock market predictions, mastery of these tools separates surface-level observations from actionable intelligence.

Modern analysts don’t just calculate averages—they strategically select methods that align with their data’s characteristics. For instance, real estate agents might prioritize median home prices to counterbalance luxury market extremes, while educators could use mode to identify common learning challenges. Core statistical principles become particularly crucial when handling skewed datasets or quality control metrics.

Key Takeaways

- Three core values (mean, median, mode) serve distinct purposes in data interpretation

- Strategic selection depends on data type and distribution characteristics

- Proper application prevents distortion from outliers and skewed results

- Essential for comparing datasets and identifying meaningful patterns

- Forms the foundation for advanced analytics and machine learning models

Introduction to Measures of Central Tendency

Every dataset tells a story, but without the right tools, its narrative remains hidden. Central tendency acts as a story decoder, revealing the most probable plot points in numerical sequences. This concept answers one critical question: What single value best represents an entire group of numbers?

An Overview of Data Analysis in Statistics

Statistical analysis begins by finding the gravitational center of information. Imagine sorting through 10,000 customer transactions—central tendency provides the anchor point around which other values cluster. Three primary methods achieve this:

- Mean: The arithmetic balance point

- Median: The positional middle ground

- Mode: The frequent flyer in datasets

These tools transform chaotic numbers into clear signals. Retailers use them to identify average purchase sizes, while meteorologists track temperature trends through seasonal medians.
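As a minimal sketch, Python's standard `statistics` module computes all three measures directly. The purchase amounts below are hypothetical and were chosen only to show how the three values can differ on the same data.

```python
from statistics import mean, median, mode

# Hypothetical purchase amounts in dollars (illustrative data only)
purchases = [20, 35, 35, 40, 50, 55, 300]

avg = mean(purchases)     # arithmetic balance point: sum / count
mid = median(purchases)   # positional middle of the sorted values
common = mode(purchases)  # most frequent value

print(f"mean={avg:.2f} median={mid} mode={common}")  # mean=76.43 median=40 mode=35
```

The single $300 purchase pulls the mean well above both the median and the mode, which is the kind of divergence the later sections examine.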

The Role of Central Tendency in Modern Data Science

Today’s algorithms build upon these foundational concepts. Machine learning pipelines often begin by preprocessing data with central values, for example by centering features on their mean or filling missing entries with the median. Consider these applications:

- Fraud detection systems comparing transaction modes to typical patterns

- Healthcare AI using median values to filter out anomalous lab results
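The preprocessing idea can be sketched in plain Python: fill missing readings with the median, then center the feature on its mean. The lab values are hypothetical, and real pipelines usually rely on library tools (for example, scikit-learn's `SimpleImputer(strategy="median")`, or `StandardScaler`, which also centers data on the mean) rather than hand-written loops.

```python
from statistics import mean, median

# Hypothetical lab values; None marks a missing reading (illustrative only)
raw = [4.1, 4.3, None, 4.0, 9.8, 4.2]

observed = [x for x in raw if x is not None]

# Median imputation: the 9.8 outlier barely affects the fill value
fill = median(observed)
imputed = [fill if x is None else x for x in raw]

# Mean-centering: subtract the mean so the feature is centered on zero
center = mean(imputed)
centered = [x - center for x in imputed]

print(fill, round(center, 2))
```

Using the median for the fill value keeps a single anomalous reading from leaking into every imputed entry.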

Financial analysts particularly rely on these methods. “The median home price cuts through market noise better than any average,” notes a JPMorgan Chase market report. Using medians keeps the summary figures behind large portfolios from being skewed by a handful of extreme values.

Definition and Significance in Statistics

Data’s true power emerges when we decode its central message through statistical precision. This process begins by identifying the core representative value that simplifies complex numerical patterns into actionable intelligence.

What Is a Central Tendency?

A measure of central tendency acts as a statistical anchor, providing one number that best summarizes an entire dataset. It answers critical questions like: What typical salary represents a company’s workforce? Which temperature value captures a month’s weather pattern?

Three primary methods achieve this:

| Method | Best For | Outlier Sensitivity |
|---|---|---|
| Mean | Symmetric (e.g., normal) distributions | High |
| Median | Skewed data | Low |
| Mode | Categorical data | Very low |
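The sensitivity column can be checked with a small experiment: compute all three measures, add one extreme value, and compute them again. The numbers are illustrative only.

```python
from statistics import mean, median, mode

# Illustrative values; 12 repeats so there is a clear mode
base = [10, 12, 12, 13, 14]
with_outlier = base + [500]  # add a single extreme value

for label, data in [("base", base), ("with outlier", with_outlier)]:
    print(f"{label:>12}: mean={mean(data):.1f} median={median(data)} mode={mode(data)}")
```

One extreme value moves the mean from 12.2 to 93.5, nudges the median from 12 to 12.5, and leaves the mode at 12.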

Key Concepts and Terminology

Professionals use specific terms to navigate statistical analysis effectively:

- Population parameters: True values for entire groups

- Sample statistics: Estimates from data subsets

- Distribution shape: Determines which measure is most appropriate

Choosing the right measure requires understanding a dataset’s structure. For example, economists analyzing income disparities often prefer medians to avoid distortion from top earners. This strategic selection ensures insights reflect real-world conditions rather than mathematical artifacts.

Understanding the Mean: Calculation and Considerations

In an era where data drives decisions, mastering the arithmetic mean separates casual observers from strategic analysts. This fundamental calculation serves as both compass and cautionary tale—a tool that reveals trends while demanding scrutiny of its limitations.

How to Calculate the Arithmetic Mean

The formula appears deceptively simple: sum all values and divide by their count. For population data, professionals use μ (mu), while sample data employs x̄ (x-bar). Consider five home prices: $200k, $250k, $300k, $350k, $2m. The mean calculates as:

($200,000 + $250,000 + $300,000 + $350,000 + $2,000,000) ÷ 5 = $620,000

This result demonstrates the mean’s vulnerability—a single luxury property skews the average upward, potentially misrepresenting typical market conditions.
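In symbols, with N values in a population and n values in a sample, the two versions of the formula differ only in notation. Treating the five home prices as a sample reproduces the worked result:

```latex
\begin{aligned}
\mu &= \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad \bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i \\
\bar{x} &= \frac{200{,}000 + 250{,}000 + 300{,}000 + 350{,}000 + 2{,}000{,}000}{5} = \frac{3{,}100{,}000}{5} = 620{,}000
\end{aligned}
```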

Advantages and the Impact of Outliers

The mean shines in symmetrical distributions, where it sits at the balance point between high and low values. Because it uses every observation and is easy to work with algebraically, it is indispensable for:

- Budget forecasting using historical spending averages

- Academic research analyzing controlled experimental data

- Quality control tracking production metrics
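The "balance" has a precise meaning: the deviations of the values from their mean always sum to zero, so the amounts above the mean exactly offset the amounts below it. A quick check with hypothetical monthly spending figures:

```python
from statistics import mean

# Hypothetical monthly spending figures (illustrative only)
spending = [1200, 1500, 1350, 1600, 1450]

avg = mean(spending)                      # 7100 / 5 = 1420
deviations = [x - avg for x in spending]  # distance of each value from the mean

print(avg, deviations, sum(deviations))
```

Positive and negative deviations cancel exactly, which is why the mean is described as the arithmetic balance point.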

However, extreme values distort outcomes. The Wall Street Journal recently noted: “Median income figures better reflect household realities than means in economies with wealth concentration.” Analysts often run parallel calculations—comparing mean and median—to detect outlier influence before drawing conclusions.
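A minimal sketch of that parallel check, reusing the home prices from the earlier example. The function name `outlier_check` and the 10% threshold are hypothetical choices for illustration, not an industry standard.

```python
from statistics import mean, median

def outlier_check(values, tolerance=0.10):
    """Hypothetical helper: flag possible outlier influence when the mean and
    median differ by more than `tolerance` (a fraction of the median).
    The 10% default is an arbitrary illustrative threshold."""
    m, med = mean(values), median(values)
    if med == 0:
        raise ValueError("relative gap is undefined when the median is zero")
    gap = (m - med) / med
    return m, med, abs(gap) > tolerance

prices = [200_000, 250_000, 300_000, 350_000, 2_000_000]
m, med, flagged = outlier_check(prices)
print(f"mean={m:,} median={med:,} flagged={flagged}")
```

Here the mean sits more than 100% above the median, so the check flags the dataset; a tighter or looser threshold would suit different domains.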

Strategic use requires understanding your data’s story. When working with skewed distributions or potential anomalies, the mean becomes one chapter rather than the entire narrative.

Exploring the Median: Middle Value Insights

In urban planning departments across U.S. cities, analysts face a common challenge: housing price reports distorted by multimillion-dollar listings. This real-world scenario demonstrates why the median, the middle value of an ordered dataset, often becomes the hero of data analysis. Unlike averages swayed by extremes, this method reveals what’s typical rather than theoretical.

Identifying the Median in Odd and Even Datasets

Calculating the median starts with sorting. Analysts first arrange numbers in ascending or descending order. For datasets with an odd number of observations n, the median is the single value at position (n + 1)/2:

| Dataset Size | Median Position | Example |
|---|---|---|
| 5 values | 3rd entry | $180k, $200k, $220k, $240k, $1.2m |
| 7 values | 4th entry | 12, 15, 18, 22, 25, 28, 31 |

Even-numbered datasets require averaging the two central values. A 6-home price dataset might show: $150k, $175k, $190k, $210k, $225k, $2.5m. The median becomes ($190k + $210k)/2 = $200k, far more representative than the $575k mean.
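The odd/even rule translates directly into code. The sketch below is a hand-written illustration (`median_of` is a hypothetical helper name); Python's built-in `statistics.median` applies the same rule. Prices are in thousands of dollars.

```python
def median_of(values):
    """Hypothetical helper: sort, then take the middle value (odd n)
    or the average of the two middle values (even n)."""
    ordered = sorted(values)
    n = len(ordered)
    if n == 0:
        raise ValueError("median of empty data")
    mid = n // 2
    if n % 2 == 1:
        return ordered[mid]  # odd n: position (n + 1) / 2, i.e. zero-based index n // 2
    return (ordered[mid - 1] + ordered[mid]) / 2  # even n: average the two central values

# Home prices in thousands of dollars, from the examples above
odd_prices = [180, 200, 220, 240, 1200]
even_prices = [150, 175, 190, 210, 225, 2500]

print(median_of(odd_prices))                # 220
print(median_of(even_prices))               # 200.0
print(sum(even_prices) / len(even_prices))  # 575.0 (the mean, for comparison)
```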

When to Prefer the Median Over the Mean

The Bureau of Labor Statistics consistently uses median income figures, recognizing that “averages become theatrical performances in unequal economies”. This approach proves essential when:

- Analyzing salary distributions with executive pay outliers

- Evaluating neighborhood home prices amid luxury developments

- Measuring customer service call durations with rare marathon sessions

Tech companies like Zillow and Redfin embed median calculations into their market reports, creating benchmarks that a handful of extreme sales cannot easily distort. This strategic choice helps homebuyers ignore statistical noise and focus on achievable price ranges.

Analyzing the Mode: Frequency and Distribution

When customer surveys reveal conflicting preferences, analysts turn to a frequency-focused solution. The mode cuts through ambiguity by spotlighting the most frequent value in any dataset. This approach proves invaluable when dealing with non-numerical information like product colors or survey responses.

Benefits of Using the Mode for Categorical Data

The mode shines where other methods falter. Marketing teams analyzing customer feedback categories—like “excellent,” “good,” or “poor”—rely on it to identify dominant sentiments. Retailers tracking popular shirt sizes or smartphone colors use this measure to optimize inventory.

Consider a bakery tracking daily sales:

- Croissant: 38 sales

- Sourdough: 112 sales

- Bagel: 112 sales

Here, the bimodal distribution (sourdough and bagel) guides production planning more effectively than averages could.
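Finding every mode, not just one, takes only a few lines with the standard library. The sketch below encodes the bakery counts above; `statistics.multimode` returns all values tied for the highest frequency when given the individual sale records.

```python
from collections import Counter
from statistics import multimode

# Daily sales counts from the bakery example
sales = Counter({"Croissant": 38, "Sourdough": 112, "Bagel": 112})

# Approach 1: work directly from the counts
top = max(sales.values())
modes = [item for item, count in sales.items() if count == top]

# Approach 2: expand to one record per sale and let multimode find the ties
records = list(sales.elements())
print(modes, multimode(records))  # ['Sourdough', 'Bagel'] ['Sourdough', 'Bagel']
```

Returning a list rather than a single value makes ties explicit, which is exactly what a bimodal result needs.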

Understanding Limitations and Multiple Modes

While powerful, the mode has boundaries. In continuous datasets like temperatures or stock prices, exact values rarely repeat, so the raw mode is often meaningless unless values are first grouped into ranges. Multiple peaks complicate interpretation as well. Analysts at Spotify noted: “Music streaming patterns sometimes show three popularity peaks—morning commutes, lunch breaks, and evening workouts.”
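A common workaround for continuous data is to group values into ranges (bins) and report the most frequent range. The sketch below uses hypothetical temperature readings; the 2-degree bin width is an arbitrary assumption, and a different width can shift the modal range.

```python
from collections import Counter
from statistics import multimode

# Hypothetical daily high temperatures (°C); no reading repeats exactly
temps = [18.2, 19.7, 21.4, 21.9, 22.3, 22.8, 23.1, 24.6, 27.5, 22.5]

# Every value occurs once, so all ten tie as "modes": not informative
print(len(multimode(temps)))

# Group readings into 2-degree bins and find the most common bin instead
BIN_WIDTH = 2.0  # arbitrary illustrative choice; results depend on it
bins = Counter(int(t // BIN_WIDTH) * BIN_WIDTH for t in temps)
modal_bin = max(bins, key=bins.get)
print(f"modal range: {modal_bin}-{modal_bin + BIN_WIDTH} °C")
```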

| Scenario | Mode Effectiveness |
|---|---|
| Customer shoe sizes | High |
| Annual rainfall measurements | Low |
| Social media reactions | Variable |

Strategic analysts combine the mode with other measures when facing multimodal distributions. This layered approach reveals both common preferences and overall trends, giving decision-makers a fuller picture than any single measure provides.

Mastering Measures of Central Tendency in Data Analysis

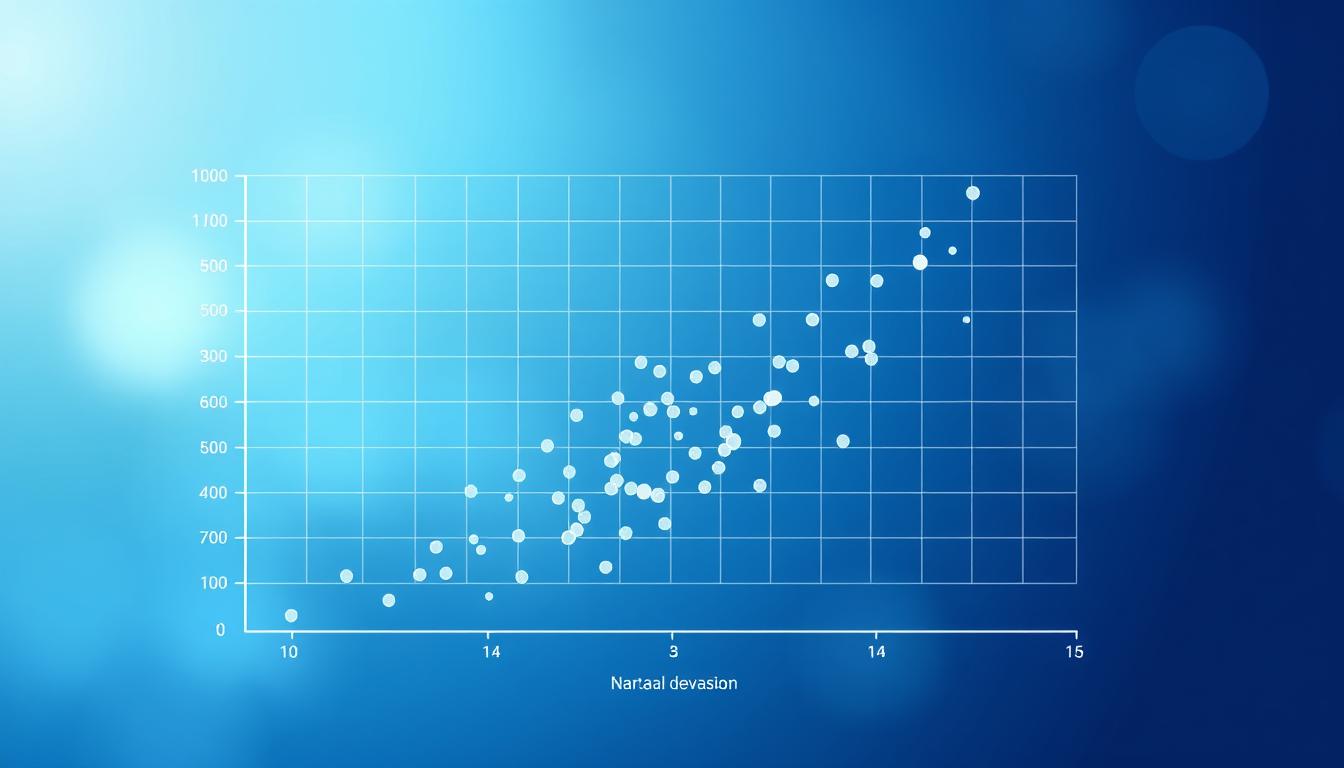

Transforming raw numbers into actionable strategies requires more than calculation—it demands contextual intelligence. Professionals across sectors now leverage these statistical anchors to cut through complexity and reveal operational truths.

Real-World Applications and Examples

Financial analysts routinely choose median household income over averages when assessing economic health—this approach neutralizes billionaire outliers that distort reality. Manufacturers tracking product defects might use mode to spotlight recurring quality issues needing urgent attention.

Symmetrical datasets offer rare alignment moments. In pharmaceutical trials, closely matching mean, median, and mode values suggest a roughly symmetric drug response pattern. This triple check builds confidence in research conclusions before FDA submissions, although agreement among the three measures does not by itself prove the data are normally distributed.
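For a symmetric, single-peaked dataset the three measures coincide, which makes comparing them a quick first screen. The response scores below are hypothetical, not trial data; a formal check such as a Shapiro-Wilk test would still be needed to assess normality.

```python
from statistics import mean, median, mode

# Hypothetical symmetric response scores (illustrative only)
responses = [3, 4, 4, 5, 5, 5, 6, 6, 7]

print(mean(responses), median(responses), mode(responses))  # 5 5 5
```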

Skewed distributions demand strategic pivots. Urban planners analyzing neighborhood incomes often find means pulled toward luxury high-rises, while medians reveal what typical residents actually earn. Tech companies like Zillow embed this understanding into housing affordability calculators used by millions.

The true power emerges when tools match data realities. Whether optimizing inventory through sales modes or benchmarking salaries via medians, these decisions transform abstract numbers into competitive advantages.

FAQ

When should analysts use the median instead of the mean?

The median is preferred when datasets contain outliers or skewed distributions. Unlike the mean, it is largely unaffected by extreme values, making it a reliable measure for income data, housing prices, or other scenarios where outliers distort averages.

How does the presence of outliers affect the mean?

Outliers disproportionately pull the mean toward the tail of a distribution. For example, a single high salary in a small team inflates the average, potentially misrepresenting typical earnings. In such cases, the median offers a clearer picture of central tendency.

What makes the mode useful for analyzing categorical data?

The mode identifies the most frequent category in non-numeric datasets—like survey responses or product preferences. It’s the only measure of central tendency applicable to nominal data, such as “favorite color” or “customer region.”

How do you calculate the median in datasets with even numbers?

For even-numbered datasets, arrange values in order and average the two middle numbers. If monthly sales are [12, 15, 18, 20], the median is (15 + 18)/2 = 16.5. This avoids bias toward either side of the data split.

Why might a dataset have multiple modes?

Bimodal or multimodal distributions occur when two or more values tie for highest frequency. Retailers might see dual sales peaks during holiday seasons, while voter surveys could reveal polarized preferences. Analysts note these modes to highlight diverse trends.

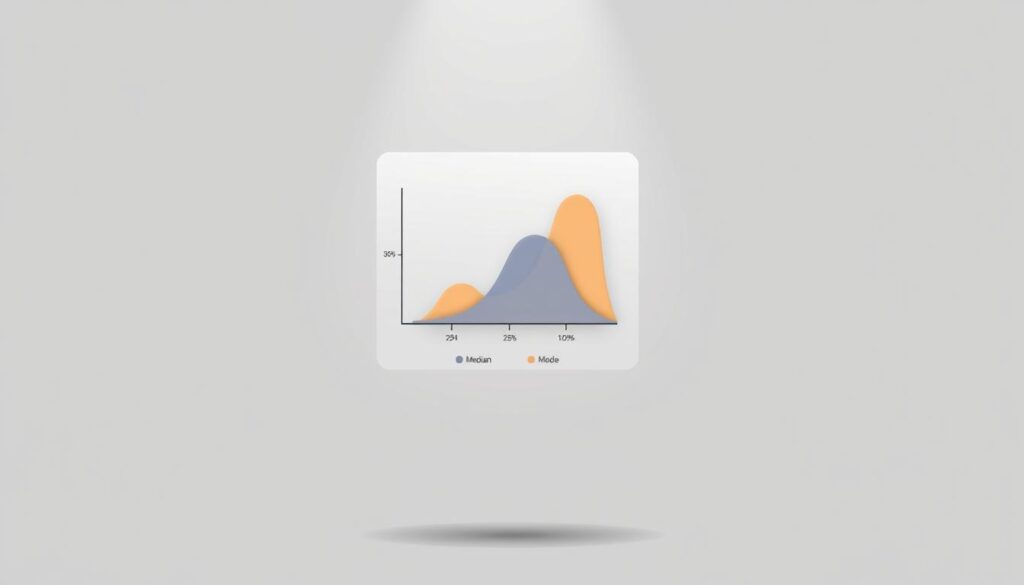

How do skewed distributions impact choices in central tendency?

In skewed data, the mean shifts toward the tail, while the median stays closer to the bulk of the values. For right-skewed data such as income, the mean typically exceeds the median. Analysts prioritize the median here to avoid overestimating “typical” values affected by extremes.