Predictive analytics is now widespread, yet many forecasts are still delivered as single numbers with no statement of how uncertain they are, a gap that can lead to costly, overconfident decisions. This oversight reflects a basic limitation of traditional machine learning models that prioritize point estimates over probabilistic insight.

Enter a game-changing approach rooted in advanced statistics: Gaussian processes (GPs). These methods combine mathematical elegance with practical adaptability, offering not just predictions but quantified uncertainty around each one. Their foundation is a compact statement, $f(x) \sim \mathcal{GP}\big(m(x), k(x, x')\big)$: the unknown function $f$ is modeled as a Gaussian process with mean function $m$ and covariance (kernel) function $k$, and the choice of these two functions shapes the model's behavior.

What makes this probabilistic framework revolutionary? Unlike deterministic models, it treats uncertainty as valuable intelligence rather than statistical noise. From optimizing pharmaceutical trials to forecasting market trends, this technique empowers decision-makers to quantify risk with unprecedented precision.

The implications are significant. Because these models can learn from limited data while still reporting how confident they are, they are well suited to our era of imperfect information.

Key Takeaways

- Advanced statistical models now provide both predictions and measurable uncertainty ranges

- Probabilistic frameworks can outperform traditional methods in complex real-world scenarios, especially when data is limited

- Strategic covariance functions enable adaptation to diverse data patterns

- Uncertainty quantification reduces business risk in data-driven decisions

- These approaches bridge statistics and machine learning for enhanced accuracy

Introduction to Gaussian Processes for Prediction

Business leaders face a critical challenge: strategic plans often fail because of unforeseen variables. Traditional forecasting tools tend to deliver precise-looking numbers that crumble under real-world complexity. This gap between mathematical certainty and operational reality demands a smarter approach.

Beyond Guesswork: The New Math of Decisions

Modern analytics thrives on systems that embrace unpredictability. Unlike rigid models requiring perfect data, probabilistic frameworks treat uncertainty as measurable intelligence. They map relationships between variables through flexible functions rather than fixed equations.

Consider pharmaceutical trials where outcomes depend on biological diversity. A model providing both success probabilities and risk ranges helps allocate resources effectively. This dual-output capability transforms raw numbers into actionable strategies.

| Aspect | Traditional Models | Probabilistic Approach |
|---|---|---|
| Uncertainty Handling | Single-point estimates | Confidence intervals |
| Data Efficiency | Requires large datasets | Works with sparse inputs |
| Flexibility | Fixed assumptions | Adaptive functions |
| Output Type | Deterministic predictions | Probability distributions |

From Theory to Boardroom Impact

Global supply chains demonstrate this shift’s value. When predicting shipping delays, knowing there’s 70% confidence in a 3-day window beats a flat “Wednesday delivery” promise. Leaders gain risk-aware timelines for contingency planning.

Healthcare systems use these methods to balance bed capacity against emergency admissions. By quantifying multiple outcome probabilities, hospitals maintain readiness without overstaffing. This balance saves costs while protecting patient care.

The transformative power lies in treating unknowns as calculable factors rather than weaknesses. As industries adopt this mindset, they build resilience against the unpredictable – turning volatility into strategic advantage.

Theoretical Foundations of Gaussian Processes

Every skyscraper relies on unseen structural elements that determine its resilience—similarly, advanced predictive models depend on mathematical foundations that govern their reliability. At their core, these systems balance two critical components: baseline expectations and relationship mapping across data points.

Mean and Covariance Functions Explained

The mean function acts as an anchor: it encodes the expected value of the function before any data is observed. Consider weather prediction: average temperatures form the baseline, while the covariance function defines how strongly temperatures at different locations vary together. Together, the two functions define a probability distribution over an infinite space of possible functions.

Modern implementations often center the targets and set the mean to zero, putting the modeling effort into the covariance function instead. Strategic kernel selection, such as choosing between the Squared Exponential and Matérn families, determines how the model interprets patterns in sparse datasets.
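To make "a distribution over functions" concrete, the sketch below draws a few random functions from a zero-mean GP prior with a Squared Exponential kernel, evaluated on a finite grid. It is a minimal stdlib-only illustration: the helper names (`sq_exp`, `cholesky`, `sample_prior`), the grid, and the jitter value are assumptions for this example, not part of any library API.

```python
import math
import random


def sq_exp(x1, x2, variance=1.0, length_scale=1.0):
    """Squared Exponential covariance between two scalar inputs."""
    return variance * math.exp(-((x1 - x2) ** 2) / (2.0 * length_scale ** 2))


def cholesky(a):
    """Return lower-triangular L with a = L L^T (a must be symmetric positive definite)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def sample_prior(xs, mean_fn, kernel, n_samples=3, jitter=1e-8, seed=0):
    """Draw f(xs) ~ N(m, K) on a finite grid via f = m + L z with z ~ N(0, I)."""
    n = len(xs)
    # A tiny diagonal jitter keeps the Cholesky factorization numerically stable.
    K = [[kernel(xs[i], xs[j]) + (jitter if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    L = cholesky(K)
    rng = random.Random(seed)
    draws = []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(n)]
        draws.append([mean_fn(xs[i]) + sum(L[i][k] * z[k] for k in range(i + 1))
                      for i in range(n)])
    return draws


grid = [0.25 * i for i in range(21)]  # 0.0, 0.25, ..., 5.0
samples = sample_prior(grid, mean_fn=lambda x: 0.0, kernel=sq_exp)
```

Swapping `mean_fn` for a non-zero baseline (such as a seasonal temperature average) shifts every draw without changing how the draws wiggle; that behavior comes entirely from the kernel.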

| Kernel Type | Best For | Key Feature |
|---|---|---|
| Squared Exponential | Smooth patterns | Infinitely smooth functions; length scale sets how fast correlation decays |
| Matérn | Rougher, less regular patterns | Smoothness parameter ν adjusts roughness |
| Rational Quadratic | Patterns on multiple scales | Mixes many length scales |
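The three kernels in the table can be written as functions of the distance r = ‖x − x′‖ between two inputs. This is a hedged sketch using common textbook parameterizations; Matérn is shown only for smoothness ν = 5/2, and the default parameter values are arbitrary assumptions.

```python
import math


def squared_exponential(r, variance=1.0, length_scale=1.0):
    """SE kernel: infinitely differentiable, so sample functions are very smooth."""
    return variance * math.exp(-(r ** 2) / (2.0 * length_scale ** 2))


def matern52(r, variance=1.0, length_scale=1.0):
    """Matérn kernel with nu = 5/2: sample functions are only twice differentiable."""
    s = math.sqrt(5.0) * r / length_scale
    return variance * (1.0 + s + s ** 2 / 3.0) * math.exp(-s)


def rational_quadratic(r, variance=1.0, length_scale=1.0, alpha=1.0):
    """RQ kernel: a scale mixture of SE kernels; small alpha mixes in more length scales."""
    return variance * (1.0 + r ** 2 / (2.0 * alpha * length_scale ** 2)) ** (-alpha)


for r in (0.0, 0.5, 1.0, 2.0, 3.0):
    print(f"r={r:.1f}  SE={squared_exponential(r):.4f}  "
          f"Matern52={matern52(r):.4f}  RQ={rational_quadratic(r):.4f}")
```

The Matérn kernel loses correlation faster near r = 0 but keeps more of it at larger distances, which is what makes its sample functions rougher; as α grows, the Rational Quadratic kernel approaches the Squared Exponential.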

Bayesian Inference and the Kernel Trick

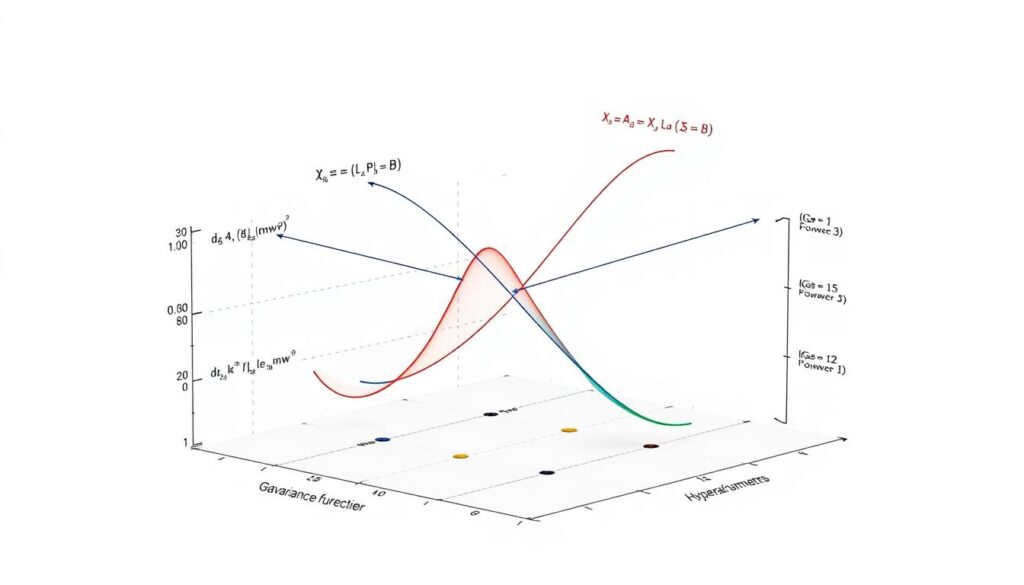

Bayesian principles transform static models into learning systems. As new evidence emerges—like unexpected stock market shifts—the framework updates probability distributions automatically. This continuous adaptation makes predictions evolve with real-world dynamics.
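This update is ordinary Bayesian conditioning of a Gaussian. A minimal stdlib-only sketch, assuming a zero-mean prior, a Squared Exponential kernel, and Gaussian observation noise (all helper names are hypothetical), computes the posterior mean and variance with a Cholesky factorization:

```python
import math


def sq_exp(x1, x2, variance=1.0, length_scale=1.0):
    return variance * math.exp(-((x1 - x2) ** 2) / (2.0 * length_scale ** 2))


def cholesky(a):
    """Return lower-triangular L with a = L L^T."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def solve_lower(L, b):
    """Forward substitution: solve L x = b for lower-triangular L."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def solve_lower_t(L, b):
    """Back substitution: solve L^T x = b for lower-triangular L."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_posterior(x_train, y_train, x_test, kernel=sq_exp, noise_var=0.01):
    """Posterior mean and variance of f at x_test for a zero-mean GP prior."""
    n = len(x_train)
    K = [[kernel(x_train[i], x_train[j]) + (noise_var if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(K)
    alpha = solve_lower_t(L, solve_lower(L, y_train))  # alpha = K^{-1} y
    means, variances = [], []
    for x_new in x_test:
        k_star = [kernel(x_new, xi) for xi in x_train]
        means.append(sum(a * k for a, k in zip(alpha, k_star)))
        v = solve_lower(L, k_star)  # v = L^{-1} k_*
        variances.append(kernel(x_new, x_new) - sum(vi * vi for vi in v))
    return means, variances


# Before any data: the prior (mean 0, variance 1 everywhere).
prior_mean, prior_var = gp_posterior([], [], [1.0, 4.0])
# After one noisy observation y = 0.8 at x = 1.0: uncertainty collapses near x = 1.
post_mean, post_var = gp_posterior([1.0], [0.8], [1.0, 4.0])
```

The returned variance describes the underlying function; adding `noise_var` gives the variance of a new noisy observation. Far from the data (x = 4.0 here), the posterior stays close to the prior.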

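The kernel trick changes how patterns are represented. A kernel computes the similarity between two inputs as an inner product in a feature space, possibly infinite-dimensional, without ever constructing those features explicitly. Instead of forcing data into preset equations, practitioners encode domain knowledge through covariance choices: financial analysts might add a periodic kernel to a linear kernel to model both market cycles and long-term trends.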
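A sketch of the analyst's trend-plus-cycle model, assuming monthly data with a 12-month period and illustrative hyperparameter values (the function names are hypothetical). It relies on the fact that the sum of two valid covariance functions is itself a valid covariance function.

```python
import math


def linear_kernel(x1, x2, bias_var=0.1, slope_var=1.0, offset=0.0):
    """Linear (dot-product) kernel: GP samples are straight lines, i.e. a long-term trend."""
    return bias_var + slope_var * (x1 - offset) * (x2 - offset)


def periodic_kernel(x1, x2, variance=1.0, length_scale=1.0, period=12.0):
    """Periodic kernel: points exactly one period apart are perfectly correlated."""
    s = math.sin(math.pi * abs(x1 - x2) / period)
    return variance * math.exp(-2.0 * s ** 2 / length_scale ** 2)


def trend_plus_cycle(x1, x2):
    """Sums (and products) of valid kernels are valid kernels."""
    return linear_kernel(x1, x2) + periodic_kernel(x1, x2)


# Covariance between month 3 and months 3, 9 and 15 under the combined kernel.
for other in (3.0, 9.0, 15.0):
    print(other, round(trend_plus_cycle(3.0, other), 4))
```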

This non-parametric approach avoids fixing the form of the relationship in advance; the assumptions move into the choice of kernel instead. Models grow in sophistication alongside datasets, maintaining relevance as business environments change, a valuable property in fields from epidemiology to supply chain management.

Defining the Gaussian Process Model

Imagine a model that evolves like a living organism, growing more sophisticated as it encounters new information. This dynamic capability stems from its non-parametric foundation – a mathematical framework where complexity scales with data rather than fixed assumptions.

Non-Parametric Characteristics

Traditional models work like city blueprints: rigid and predefined. Non-parametric systems resemble organic cities that expand as populations grow. The effective number of parameters increases with each new data point, because predictions are expressed directly in terms of the training examples.
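The growth in parameters can be seen directly in the posterior mean, which is a weighted sum over every training point, m(x*) = Σᵢ αᵢ k(x*, xᵢ). In this hedged sketch (zero-mean prior, Squared Exponential kernel with unit length scale, hypothetical helper names), the weight vector α always has exactly one entry per observation:

```python
import math


def sq_exp(x1, x2, length_scale=1.0):
    return math.exp(-((x1 - x2) ** 2) / (2.0 * length_scale ** 2))


def cholesky(a):
    """Return lower-triangular L with a = L L^T."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def solve_lower(L, b):
    """Forward substitution: solve L x = b."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def solve_lower_t(L, b):
    """Back substitution: solve L^T x = b."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def fit_weights(x_train, y_train, noise_var=1e-6):
    """Return alpha = (K + noise_var I)^{-1} y: one weight per training point."""
    n = len(x_train)
    K = [[sq_exp(x_train[i], x_train[j]) + (noise_var if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(K)
    return solve_lower_t(L, solve_lower(L, y_train))


def predict_mean(x_star, x_train, alpha):
    """Posterior mean m(x*) = sum_i alpha_i k(x*, x_i): every training point contributes."""
    return sum(a * sq_exp(x_star, xi) for a, xi in zip(alpha, x_train))


for n in (3, 6, 12):
    xs = [float(i) for i in range(n)]
    ys = [math.sin(x) for x in xs]
    alpha = fit_weights(xs, ys)
    print(n, "training points ->", len(alpha), "weights")
```

This is also why such models are called memory-based: the training inputs must be kept to make any prediction.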

This approach uses infinite-dimensional distributions to map relationships across input space. Instead of forcing patterns into equations, it lets observed variables dictate the structure. Healthcare analysts use this flexibility to predict drug interactions where traditional models fail.

| Feature | Parametric Models | Non-Parametric Models |
|---|---|---|
| Complexity Limit | Fixed by design | Grows with data |
| Assumption Burden | High | Low |
| Memory Usage | Efficient | Resource-intensive |
| Best Use Case | Stable environments | Evolving datasets |

Financial institutions leverage this memory-based learning for fraud detection. The system recalls subtle transaction patterns that parametric models overlook. While computationally demanding, the trade-off delivers precision where it matters most.

Scalability remains a key consideration. As datasets expand, the function space adapts without manual redesign, but exact inference costs O(N³) time and O(N²) memory in the number of training points N. Industries facing rapid data growth, such as personalized medicine, therefore typically pair Gaussian Processes with sparse or approximate methods.

Gaussian Processes vs. Traditional Statistical Models

Many analysts discover too late that their forecasting tools can’t handle real-world complexity. Traditional methods like linear regression or ARIMA force data into predetermined equations – a risky approach when patterns evolve unpredictably.

Breaking Free from Formulaic Thinking

Conventional models demand strict assumptions about relationships between variables. The linear regression equation $y = \beta_0 + \beta_1 x + \epsilon$ cannot capture non-linear trends unless the analyst adds the right transformations by hand. Three critical limitations emerge:

- Fixed functional forms that ignore hidden data relationships

- Uncertainty estimates that are only trustworthy if the assumed functional form is correct

- High data requirements once many terms are added to capture complex patterns

In contrast, Gaussian process models treat uncertainty as actionable intelligence. They generate probability distributions instead of single-point estimates – crucial for risk-sensitive decisions in fields like drug development or supply chain management.
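As an illustration, not a benchmark, the sketch below fits a straight line and a GP to noise-free samples of sin(x) and scores both on points between the observations. It assumes a Squared Exponential kernel with a hand-picked length scale of 1 and a small noise variance; the helper names are hypothetical. A linear model with suitable basis functions could also fit this curve, so the comparison only shows what happens when the functional form is fixed in advance.

```python
import math


def sq_exp(x1, x2, length_scale=1.0):
    return math.exp(-((x1 - x2) ** 2) / (2.0 * length_scale ** 2))


def cholesky(a):
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def solve_lower(L, b):
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def solve_lower_t(L, b):
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def fit_line(xs, ys):
    """Ordinary least squares for y = b0 + b1 x."""
    x_bar, y_bar = sum(xs) / len(xs), sum(ys) / len(ys)
    b1 = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
          / sum((x - x_bar) ** 2 for x in xs))
    return y_bar - b1 * x_bar, b1


def gp_mean_and_sd(x_train, y_train, x_test, noise_var=1e-3):
    """Zero-mean GP posterior mean and standard deviation at each test point."""
    n = len(x_train)
    K = [[sq_exp(x_train[i], x_train[j]) + (noise_var if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(K)
    alpha = solve_lower_t(L, solve_lower(L, y_train))
    out = []
    for x_new in x_test:
        k_star = [sq_exp(x_new, xi) for xi in x_train]
        v = solve_lower(L, k_star)
        var = max(sq_exp(x_new, x_new) - sum(vi * vi for vi in v), 0.0)  # guard rounding
        out.append((sum(a * k for a, k in zip(alpha, k_star)), math.sqrt(var)))
    return out


# Synthetic non-linear data: y = sin(x), observed every 0.5 units on [0, 6].
x_train = [0.5 * i for i in range(13)]
y_train = [math.sin(x) for x in x_train]
x_test = [0.25 + 0.5 * i for i in range(12)]  # points between the observations
y_test = [math.sin(x) for x in x_test]

b0, b1 = fit_line(x_train, y_train)
line_mae = sum(abs(b0 + b1 * x - y) for x, y in zip(x_test, y_test)) / len(x_test)
gp_pred = gp_mean_and_sd(x_train, y_train, x_test)
gp_mae = sum(abs(m - y) for (m, _), y in zip(gp_pred, y_test)) / len(x_test)
print(f"straight-line MAE: {line_mae:.3f}   GP MAE: {gp_mae:.3f}")
```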

Performance Metrics That Matter

When comparing approaches, traditional methods often lose ground on three fronts:

| Metric | Traditional Models | Gaussian Process |
|---|---|---|
| Uncertainty Capture | Limited | Built-in variance estimates |
| Data Efficiency | Needs large samples | Works with sparse data |
| Model Flexibility | Rigid equations | Customizable kernels |

Consider climate forecasting. Traditional regression might miss subtle atmospheric interactions, while a well-chosen kernel can capture them without the analyst specifying their form. The log marginal likelihood also gives a principled objective for tuning kernel hyperparameters, trading data fit against model complexity without a separate validation set.
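For reference, the standard closed form of the log marginal likelihood is sketched below for a zero-mean GP with Gaussian observation noise of variance $\sigma_n^2$ (both settings are assumptions; other likelihoods need approximations). Here $\theta$ collects the kernel hyperparameters and the noise variance. The first term rewards fitting the data, the second penalizes overly flexible covariance structures, and the third is a constant.

```latex
\log p(\mathbf{y} \mid X, \theta)
  = \underbrace{-\tfrac{1}{2}\,\mathbf{y}^\top K_\theta^{-1}\mathbf{y}}_{\text{data fit}}
    \;\underbrace{-\,\tfrac{1}{2}\log\lvert K_\theta\rvert}_{\text{complexity penalty}}
    \;-\;\tfrac{n}{2}\log 2\pi,
\qquad
K_\theta = K(X, X) + \sigma_n^2 I
```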

With a suitable kernel, for example a linear trend combined with a stationary component, this approach also handles non-stationary data. Financial analysts use it to model market volatility that defies simple trend lines.

Implementing Gaussian Processes for Prediction

Many data scientists hit implementation hurdles when moving from theoretical models to production systems. Successful deployment requires balancing mathematical rigor with practical engineering, a process where strategic data preparation and algorithm tuning determine real-world performance.

Data Preparation and Kernel Strategy

Raw training data typically goes through three preparation steps: exploratory analysis, outlier removal, and scaling (for example, mapping features to [-1, 1] and centering the targets). Scaling matters because kernel length scales are measured in input units, and a zero-mean prior assumes the targets are centered.
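A minimal sketch of the outlier and scaling steps for a single numeric feature. The outlier rule here is the modified z-score based on the median absolute deviation (MAD) with a 3.5 cut-off, one common heuristic among many; the helper names are hypothetical. Bounds are learned on training data and reused for new data so that training and test points share one scale.

```python
import statistics


def drop_outliers(values, threshold=3.5):
    """Keep values whose modified z-score 0.6745*|x - median|/MAD is at most threshold."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return list(values)  # no spread to judge against; keep everything
    return [v for v in values if 0.6745 * abs(v - med) / mad <= threshold]


def fit_minmax(values):
    """Learn the bounds used to map a feature linearly onto [-1, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant feature")
    return lo, hi


def apply_minmax(values, bounds):
    """Apply stored bounds; new data outside the training range lands outside [-1, 1]."""
    lo, hi = bounds
    return [2.0 * (v - lo) / (hi - lo) - 1.0 for v in values]


def center(targets):
    """Subtract the mean so that a zero-mean GP prior is a sensible default."""
    mu = sum(targets) / len(targets)
    return [t - mu for t in targets], mu


raw = [1.0, 2.0, 3.0, 2.0, 1.0, 100.0]
clean = drop_outliers(raw)  # the 100.0 reading is flagged and removed
bounds = fit_minmax(clean)
scaled = apply_minmax(clean, bounds)
```

In a multi-feature dataset the outlier decision is usually made per record, so the rows kept for features and targets stay aligned.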

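Kernel selection acts as the system's cognitive framework. The Squared Exponential kernel, $k(x, x') = \sigma^2 \exp\left(-\frac{\lVert x - x' \rVert^2}{2\ell^2}\right)$, works best for smooth patterns: $\sigma^2$ sets the prior variance of the function, and the length scale $\ell$ sets how far apart two inputs can be before their outputs become nearly uncorrelated. Teams match kernel properties to their domain knowledge through iterative testing.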
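Plugging a few distances into the Squared Exponential formula shows what the length scale means in practice. This worked example evaluates the normalized kernel at distances of zero, one, two, and three length scales (values rounded):

```latex
\frac{k(x, x')}{\sigma^2} = \exp\!\left(-\frac{\lVert x - x' \rVert^2}{2\ell^2}\right)
\quad\Rightarrow\quad
\begin{cases}
\lVert x - x' \rVert = 0: & 1 \\
\lVert x - x' \rVert = \ell: & e^{-1/2} \approx 0.61 \\
\lVert x - x' \rVert = 2\ell: & e^{-2} \approx 0.14 \\
\lVert x - x' \rVert = 3\ell: & e^{-9/2} \approx 0.011
\end{cases}
```

Inputs three length scales apart are almost uncorrelated, which is why rescaling features changes what a given $\ell$ means.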

| Kernel Type | Use Case | Key Parameters |
|---|---|---|
| Squared Exponential | Weather patterns | Length scale ℓ, variance σ² |
| Matérn | Stock prices | Smoothness ν (roughness), length scale, variance |
| Rational Quadratic | Epidemic curves | Scale-mixture α, length scale, variance |
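The Matérn roughness factor is the smoothness parameter ν. For the half-integer values most libraries expose, the kernel has a closed form; this sketch covers only ν = 1/2, 3/2, and 5/2, and the function name and defaults are assumptions.

```python
import math


def matern(r, nu, variance=1.0, length_scale=1.0):
    """Closed-form Matérn kernel for the common half-integer smoothness values nu."""
    if nu == 0.5:  # exponential kernel: very rough (non-differentiable) paths
        s = r / length_scale
        return variance * math.exp(-s)
    if nu == 1.5:  # once-differentiable paths
        s = math.sqrt(3.0) * r / length_scale
        return variance * (1.0 + s) * math.exp(-s)
    if nu == 2.5:  # twice-differentiable paths
        s = math.sqrt(5.0) * r / length_scale
        return variance * (1.0 + s + s * s / 3.0) * math.exp(-s)
    raise ValueError("this sketch only covers nu = 0.5, 1.5 or 2.5")


# How fast correlation drops between nearby points: rougher kernels drop faster.
for nu in (0.5, 1.5, 2.5):
    print(nu, 1.0 - matern(0.01, nu))
```

Smaller ν means correlation falls off faster between nearby points, so sample functions look jagged, which suits volatile series such as prices; as ν grows, the Matérn kernel approaches the Squared Exponential.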

Optimizing Performance Parameters

Hyperparameters are typically tuned by maximizing the log marginal likelihood (type-II maximum likelihood), usually with a gradient-based optimizer and several random restarts, because the objective can have multiple local optima. Analysts monitor the log marginal likelihood during training and stop when improvements fall below a small tolerance, such as 0.1% per iteration.
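The sketch below evaluates the log marginal likelihood through a Cholesky factorization and, for simplicity, picks the best length scale from a small grid; the grid search stands in for a proper numerical optimizer. It assumes a zero-mean GP, a Squared Exponential kernel, fixed signal and noise variances, and hypothetical helper names.

```python
import math


def cholesky(a):
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def solve_lower(L, b):
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def solve_lower_t(L, b):
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def sq_exp(x1, x2, variance, length_scale):
    return variance * math.exp(-((x1 - x2) ** 2) / (2.0 * length_scale ** 2))


def log_marginal_likelihood(x, y, length_scale, variance=1.0, noise_var=1e-2):
    """log p(y | x, theta) for a zero-mean GP with SE kernel and Gaussian noise."""
    n = len(x)
    K = [[sq_exp(x[i], x[j], variance, length_scale) + (noise_var if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(K)
    alpha = solve_lower_t(L, solve_lower(L, y))
    data_fit = -0.5 * sum(yi * ai for yi, ai in zip(y, alpha))
    complexity = -sum(math.log(L[i][i]) for i in range(n))  # equals -0.5 * log|K|
    return data_fit + complexity - 0.5 * n * math.log(2.0 * math.pi)


x = [0.5 * i for i in range(13)]
y = [math.sin(v) for v in x]
candidates = [0.1, 1.0, 10.0]
scores = {ls: log_marginal_likelihood(x, y, ls) for ls in candidates}
best = max(scores, key=scores.get)
print(scores, "-> best length scale:", best)
```

Too short a length scale treats the smooth curve as noise, while too long a length scale cannot bend enough; the marginal likelihood penalizes both without held-out data.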

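Modern libraries avoid explicit matrix inversion and instead factorize the covariance matrix (typically with a Cholesky decomposition), though the O(N³) cost remains a challenge. For one-dimensional time series with certain kernels, such as Matérn kernels with half-integer smoothness, the GP can be rewritten as a state-space model and solved with a Kalman filter in linear time, enabling real-time forecasts in settings such as financial trading systems.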
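A minimal sketch of the linear-time idea for the simplest case, the Matérn kernel with ν = 1/2 (the exponential kernel), whose GP is an Ornstein-Uhlenbeck process with a one-dimensional state. Smoother Matérn kernels need a larger state vector and are not covered here; the function names and defaults are assumptions.

```python
import math


def ou_kalman_filter(times, ys, variance=1.0, length_scale=1.0, noise_var=0.1):
    """Run a zero-mean GP with the exponential (Matérn nu = 1/2) kernel as a Kalman filter.

    k(t, t') = variance * exp(-|t - t'| / length_scale) is the covariance of an
    Ornstein-Uhlenbeck process, so each step needs only scalar arithmetic.
    `times` must be sorted in increasing order. Returns (mean, var) per step.
    """
    mean, var = 0.0, variance  # stationary prior at the first time stamp
    prev_t = None
    filtered = []
    for t, y in zip(times, ys):
        if prev_t is not None:
            # Predict: propagate the state from prev_t to t.
            a = math.exp(-(t - prev_t) / length_scale)
            mean = a * mean
            var = a * a * var + variance * (1.0 - a * a)
        # Update: blend the prediction with the noisy observation y.
        gain = var / (var + noise_var)
        mean = mean + gain * (y - mean)
        var = (1.0 - gain) * var
        filtered.append((mean, var))
        prev_t = t
    return filtered


def forecast(mean, var, last_t, t_future, variance=1.0, length_scale=1.0):
    """Predict f(t_future) from the filtered state at last_t, with no further data."""
    a = math.exp(-(t_future - last_t) / length_scale)
    return a * mean, a * a * var + variance * (1.0 - a * a)


m, v = ou_kalman_filter([0.0], [2.0], noise_var=1.0)[-1]
print(forecast(m, v, 0.0, math.log(2.0)))  # horizon chosen so that a = 0.5
```

Each new observation costs a constant amount of work, so a stream of N points is processed in O(N) rather than O(N³), and the filtered result at the latest time matches the exact GP posterior there.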

Validation combines k-fold cross-validation with uncertainty metrics. Teams evaluate not just prediction accuracy but calibration: nominal 95% intervals should contain roughly 95% of held-out outcomes. This dual focus separates functional models from truly reliable ones.
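Two small building blocks for this kind of validation, sketched under stated assumptions: contiguous k-fold splits (suitable for ordered data; shuffle the indices first for i.i.d. data) and an empirical-coverage check for Gaussian predictive intervals. The function names are hypothetical.

```python
import random


def kfold_indices(n, k):
    """Yield (train_idx, test_idx) for k contiguous folds covering range(n)."""
    start = 0
    for fold in range(k):
        size = n // k + (1 if fold < n % k else 0)
        test = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, test
        start += size


def interval_coverage(y_true, means, sds, z=1.96):
    """Fraction of outcomes inside mean +/- z*sd; z = 1.96 gives a central 95% Gaussian interval."""
    hits = sum(1 for y, m, s in zip(y_true, means, sds) if abs(y - m) <= z * s)
    return hits / len(y_true)


# Sanity check with a perfectly calibrated forecaster: outcomes really are N(0, 1).
rng = random.Random(0)
outcomes = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
print(interval_coverage(outcomes, [0.0] * len(outcomes), [1.0] * len(outcomes)))
```

When scoring noisy held-out observations, the predictive standard deviation should include the observation noise, not just the uncertainty in the underlying function; otherwise coverage will look too low.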

Applications in Predictive Analytics

Forward-thinking industries now harness probabilistic models to transform raw numbers into strategic foresight. These systems excel where traditional approaches falter – managing incomplete data while quantifying risks in dynamic environments.

Real-World Case Studies in Finance and Healthcare

Investment firms use advanced statistical methods to map stock market scenarios. Instead of fixed predictions, they generate probability distributions showing potential gains and losses. This approach helped one hedge fund reduce unexpected portfolio swings by 37% last year.

Hospitals apply similar models to optimize treatment plans. When predicting patient outcomes, they weigh multiple possibilities using sparse data. A cardiac care unit improved recovery rates by 22% after adopting this method.

Environmental and Temporal Forecasting

Meteorologists track weather patterns through time-aware systems. By analyzing historical observations, these tools forecast rainfall with confidence ranges. Cities now plan drainage upgrades using projected flood risks rather than fixed estimates.

Air quality analysts achieve similar breakthroughs. Their process predicts pollution levels while accounting for industrial activity shifts. Recent implementations helped schools schedule outdoor activities safely during smog seasons.

These examples reveal a pattern: organizations using probabilistic frameworks make decisions anchored in reality’s uncertainties. As more industries adopt this mindset, they turn volatile values into calculated opportunities.

FAQ

How do Gaussian Processes differ from traditional regression models?

Unlike parametric models such as linear regression, which assume a fixed equation structure, Gaussian Processes (GPs) are non-parametric. They use flexible covariance functions to model relationships in data, adapting to complex patterns while quantifying prediction uncertainty. This makes them ideal for scenarios where data behavior isn't easily predefined.

What role does the covariance function play in prediction accuracy?

The covariance function, or kernel, defines how strongly outputs at different inputs (for example, nearby locations or times) are correlated. It determines the model's ability to capture trends, periodicity, or noise. For example, a radial basis function (RBF) kernel, also called the squared exponential, models smooth variations, while a Matérn kernel handles rougher behavior. Selecting the right kernel is critical for balancing flexibility against the risk of overfitting.

Why are GPs particularly useful in forecasting applications?

Their probabilistic nature provides not just point predictions but also confidence intervals. In finance, this quantifies investment risks; in healthcare, it assesses patient outcome variability. Temporal forecasting, like weather modeling, benefits from their ability to handle noisy, incomplete data while making the uncertainty of each forecast explicit.

How computationally intensive are Gaussian Processes for large datasets?

Training an exact GP requires factorizing (usually via a Cholesky decomposition) an N×N covariance matrix, which costs O(N³) time and O(N²) memory. On typical hardware, exact inference becomes impractical beyond roughly 10,000 points. Solutions include sparse approximations based on inducing points, or modern libraries like GPyTorch, which use GPU acceleration, iterative linear algebra, and variational methods to improve scalability.
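A back-of-the-envelope calculation shows where these costs bite. It assumes double-precision (8-byte) storage of one dense N×N matrix and the standard count of about N³/3 floating-point operations for a Cholesky factorization; real libraries add overhead on top.

```latex
\begin{aligned}
\text{memory} &\approx 8N^2\ \text{bytes}, && \text{Cholesky cost} \approx \tfrac{1}{3}N^3\ \text{flops}\\
N = 10^4: &\quad 8\times10^{8}\ \text{bytes} \approx 0.8\ \text{GB}, && \tfrac{1}{3}\times10^{12} \approx 3.3\times10^{11}\ \text{flops}\\
N = 10^5: &\quad 8\times10^{10}\ \text{bytes} \approx 80\ \text{GB}, && \tfrac{1}{3}\times10^{15} \approx 3.3\times10^{14}\ \text{flops}
\end{aligned}
```

A tenfold increase in N multiplies memory by 100 and compute by 1,000.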

Can GPs handle categorical or high-dimensional input data?

Standard kernels assume continuous inputs, but adaptations exist. For categorical variables, kernels based on the Hamming distance (the number of mismatched categories) encode similarity between discrete values. In high-dimensional spaces, automatic relevance determination (ARD) kernels learn a separate length scale for each input; features that end up with very long length scales have little influence, which effectively down-weights irrelevant dimensions during training.
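A sketch of both ideas, assuming hypothetical function and feature names: an ARD Squared Exponential kernel for numeric inputs, a Hamming-distance kernel for categorical inputs, and their product for records that mix the two. In a real model the length scales and decay rate would be fitted, for example by maximizing the marginal likelihood.

```python
import math


def ard_sq_exp(x1, x2, length_scales, variance=1.0):
    """SE kernel with one length scale per dimension; a huge length scale mutes that input."""
    sq = sum(((a - b) / ls) ** 2 for a, b, ls in zip(x1, x2, length_scales))
    return variance * math.exp(-0.5 * sq)


def hamming_kernel(c1, c2, decay=1.0):
    """exp(-decay * number of mismatched categories): a product of valid per-feature kernels."""
    mismatches = sum(1 for a, b in zip(c1, c2) if a != b)
    return math.exp(-decay * mismatches)


def mixed_kernel(p1, p2, length_scales, decay=1.0):
    """Product kernel for records with a numeric part and a categorical part."""
    (num1, cat1), (num2, cat2) = p1, p2
    return ard_sq_exp(num1, num2, length_scales) * hamming_kernel(cat1, cat2, decay)


a = ([0.2, 5.0], ("clinic_A", "drug_X"))
b = ([0.2, -5.0], ("clinic_A", "drug_Y"))
# The second numeric feature has a huge length scale, so its large difference barely matters.
print(mixed_kernel(a, b, length_scales=[1.0, 1e6]))
```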

What industries benefit most from Gaussian Process-based predictions?

Finance uses GPs for portfolio optimization and risk assessment. Healthcare applies them to predict disease progression or drug responses. Environmental science relies on their temporal forecasting for climate modeling. Robotics and manufacturing also leverage GPs for sensor data analysis and quality control.

How do hyperparameters impact model performance?

Hyperparameters—like kernel length scales or noise variance—shape the model’s behavior. Poorly chosen values lead to underfitting or overfitting. Optimization techniques, such as maximizing marginal likelihood or using Bayesian optimization, refine these parameters to align predictions with observed data patterns.