While traditional statistics still dominate textbooks, a fundamental shift now shapes 83% of advanced predictive models across tech giants and research institutions. This evolution moves beyond rigid formulas to embrace uncertainty as a core component of decision-making.

Modern professionals increasingly adopt a dynamic approach where numbers tell stories rather than state absolutes. Instead of fixed answers, this method treats assumptions as evolving narratives that improve with new information. It mirrors how humans naturally refine beliefs through experience.

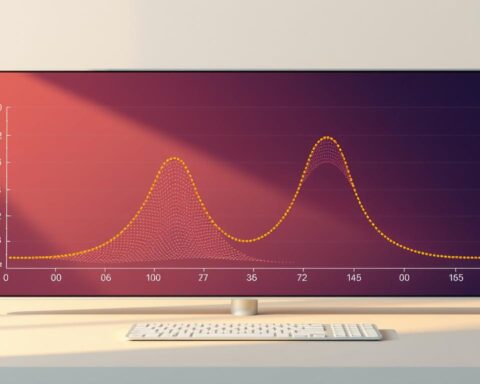

At its core, this framework uses probabilistic modeling to quantify confidence levels. Initial estimates (prior distributions) blend with fresh evidence through mathematical rules, creating updated perspectives (posterior distributions). This process enables clearer risk assessment in fields from drug trials to stock market predictions.

Three key advantages drive its adoption:

- Natural handling of incomplete data scenarios

- Transparent communication of confidence levels

- Seamless integration of multiple data sources

As data complexity grows, this adaptive strategy becomes essential for leaders making high-stakes decisions. The following sections reveal practical implementation strategies and real-world success stories.

Key Takeaways

- Modern data analysis prioritizes flexible thinking over fixed conclusions

- Probabilistic models quantify confidence levels in evolving scenarios

- Initial assumptions systematically improve with new evidence

- Complex data challenges become manageable through structured frameworks

- Transparent uncertainty communication enhances decision-making processes

Introduction to Bayesian Inference and Updating

Data-driven decision-making thrives on adaptability. Unlike static methods, modern statistical frameworks treat knowledge as fluid, evolving with each new piece of evidence. This approach aligns with how humans naturally refine opinions—starting with initial assumptions and adjusting them through observed outcomes.

Overview of the Concept

At its core, this method uses mathematical rules to blend existing knowledge with fresh observations. Imagine weather forecasting: initial predictions combine historical patterns with real-time satellite data. Each update sharpens accuracy while quantifying confidence levels through probability distributions.

The system operates like a learning loop. Initial beliefs form a starting point. New information enters the system. Mathematical integration occurs. Revised conclusions emerge. This cyclical process turns raw numbers into actionable insights.

Why It Matters in Data Science

In machine learning, models often face shifting data streams. Traditional approaches struggle with incomplete datasets or changing conditions. Probabilistic frameworks excel here—they handle uncertainty transparently while updating predictions incrementally.

Consider recommendation algorithms. They start with general user preferences (prior knowledge) and refine suggestions as browsing behavior unfolds (new evidence). This dynamic adaptation creates personalized experiences without manual recalibration.

Three critical advantages stand out:

- Clear communication of prediction confidence

- Seamless integration of expert knowledge

- Efficient handling of sequential data streams

Fundamental Concepts Behind Bayes’ Theorem

Every revolution in data science needs its mathematical engine. Bayes’ theorem provides precisely that—a systematic way to refine knowledge through evidence. This mathematical framework transforms vague assumptions into precise probabilities, creating a structured path from uncertainty to clarity.

Understanding the Prior

Priors act as launchpads for analysis. These probability distributions represent what we know—or assume—about parameters before gathering data. A marketing team might start with historical conversion rates. A medical researcher could use clinical trial baselines.

Choosing priors blends art and science. Experts might inject domain knowledge through informative distributions. When little is known in advance, flat or weakly informative priors keep the prior’s influence small, though no prior is entirely free of assumptions. The key lies in balancing flexibility with computational practicality.

Exploring the Likelihood

Likelihood functions measure how well parameters explain observed data. Imagine testing a new drug: this component calculates how probable trial results are under different efficacy assumptions. It turns abstract numbers into evidence weights.

The formula P(H|D) = P(D|H) × P(H) / P(D) reveals the machinery. Here, likelihood (P(D|H)) scales prior beliefs by data compatibility. Conjugate priors simplify math by keeping posterior distributions in familiar forms—like using beta distributions for binomial data.
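The formula can be sketched for a finite set of hypotheses as follows. This is a minimal illustration, not a reference implementation: the function name `bayes_update`, the two hypotheses, and all numbers are assumptions made up for the example.

```python
def bayes_update(prior, likelihood):
    """Return P(H|D) for each hypothesis H, given P(H) and P(D|H)."""
    # Numerator of Bayes' theorem: P(D|H) * P(H) for every hypothesis.
    unnormalized = {h: likelihood[h] * prior[h] for h in prior}
    # P(D), the normalizing constant, is the sum over all hypotheses.
    evidence = sum(unnormalized.values())
    return {h: w / evidence for h, w in unnormalized.items()}


# Hypothetical numbers: two hypotheses about a drug, and the probability
# of the observed trial result under each of them.
prior = {"effective": 0.3, "ineffective": 0.7}
likelihood = {"effective": 0.8, "ineffective": 0.2}

posterior = bayes_update(prior, likelihood)
for hypothesis, probability in posterior.items():
    print(f"P({hypothesis} | data) = {probability:.3f}")
```

Dividing by the evidence term is what keeps the posterior probabilities summing to one.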

Three critical insights emerge:

- Priors anchor analysis without dictating outcomes

- Likelihoods ground models in observable reality

- Normalizing constants ensure probabilistic coherence

Together, these elements create dynamic knowledge systems. They allow professionals to quantify uncertainty while remaining adaptable—a necessity in our data-rich world.

Step-by-Step Guide for Bayesian Updating

Modern analytics thrives on adaptability through structured learning processes. This method transforms raw numbers into evolving insights by systematically combining existing knowledge with fresh observations.

Defining Initial Assumptions and Data Models

The journey begins with establishing starting points. Prior distributions capture existing understanding about parameters—like assuming a coin’s fairness before testing. For instance:

| Scenario | Prior Distribution | Observed Data |
|---|---|---|
| Coin Toss | Beta(1,1) | 7 heads, 3 tails |
| Product Conversion | Normal(0.15, 0.02) | 85 purchases/500 visits |

Likelihood functions then model how observed outcomes relate to parameters. In the coin example, θ is the probability of heads, and the likelihood of seeing 7 heads and 3 tails is proportional to θ⁷(1-θ)³. This combination creates measurable relationships between assumptions and evidence.

Revising Perspectives Through Evidence

New information reshapes initial estimates mathematically. Our coin test produces Beta(8,4) as the updated distribution—shifting probability mass toward higher head likelihoods. This revised perspective becomes the foundation for future analysis.
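The coin update can be reproduced in a few lines. This is a minimal sketch that relies on the beta-binomial conjugate rule (add heads to the first parameter, tails to the second). The helper names are hypothetical, and the two-batch split of the ten flips is an assumption for illustration only.

```python
def beta_binomial_update(alpha, beta, heads, tails):
    """Conjugate update: Beta(alpha, beta) prior plus coin-flip counts."""
    return alpha + heads, beta + tails


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# One batch: Beta(1,1) prior, then 7 heads and 3 tails.
a, b = beta_binomial_update(1, 1, heads=7, tails=3)
print(f"Beta({a},{b}), mean = {beta_mean(a, b):.3f}")  # Beta(8,4), mean = 0.667

# The same ten flips arriving in two batches (illustrative split):
a1, b1 = beta_binomial_update(1, 1, heads=4, tails=1)
a2, b2 = beta_binomial_update(a1, b1, heads=3, tails=2)
print((a2, b2) == (a, b))  # True: the order of arrival does not matter
```

Because each posterior serves as the next prior, batch and sequential processing end at the same distribution.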

Three critical patterns emerge in practice:

- Clear visualizations of uncertainty through distribution curves

- Automatic weighting of conflicting evidence

- Progressive refinement across multiple data batches

Advanced implementations use computational tools when manual calculations become complex. These systems maintain the core principle: knowledge evolves through structured integration of evidence.
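When the prior and likelihood are not conjugate, as in the product conversion scenario, a grid approximation is one of the simplest computational tools. The sketch below assumes that the 0.02 in Normal(0.15, 0.02) is a standard deviation, which the text does not state. The function name and grid size are likewise illustrative choices.

```python
import math


def grid_posterior(successes, trials, prior_mean, prior_sd, n_points=2001):
    """Approximate the posterior of a rate on an evenly spaced grid."""
    # Cell midpoints stay strictly inside (0, 1), so the logs are finite.
    thetas = [(i + 0.5) / n_points for i in range(n_points)]
    log_weights = []
    for t in thetas:
        # Normal prior (up to a constant) and binomial likelihood, in logs.
        log_prior = -0.5 * ((t - prior_mean) / prior_sd) ** 2
        log_like = successes * math.log(t) + (trials - successes) * math.log(1 - t)
        log_weights.append(log_prior + log_like)
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(log_weights)
    weights = [math.exp(w - peak) for w in log_weights]
    total = sum(weights)
    return thetas, [w / total for w in weights]


thetas, probs = grid_posterior(85, 500, prior_mean=0.15, prior_sd=0.02)
post_mean = sum(t * p for t, p in zip(thetas, probs))
print(f"posterior mean conversion rate: {post_mean:.3f}")
```

The posterior mean lands between the prior mean (0.15) and the observed rate (85/500 = 0.17), which is the expected behavior when prior and data both carry weight. Grids scale poorly beyond a few parameters, which is where sampling methods take over.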

Comparing Bayesian Inference with Frequentist Methods

Statistical approaches diverge most visibly in how they handle unknowns. Where traditional methods view parameters as fixed targets, modern frameworks embrace variability as part of reality. This contrast shapes everything from hypothesis testing to predictive modeling.

Key Differences in Uncertainty Quantification

Frequentist methods treat unknown values as constants waiting to be discovered. Confidence intervals reflect sampling variability—what might happen if experiments repeated infinitely. A 95% interval means 95% of hypothetical intervals would contain the true parameter.

In contrast, probabilistic frameworks assign distributions to unknowns. Credible intervals directly state: “There’s a 95% chance the parameter lies here.” This interpretation aligns with how humans naturally assess risks and possibilities.
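As a rough illustration, a 95% credible interval for the coin posterior Beta(8,4) can be estimated by drawing samples and reading off the 2.5% and 97.5% quantiles. This is a sketch using only the Python standard library. The function name, sample count, and seed are arbitrary assumptions, and an exact quantile function would serve equally well.

```python
import random


def credible_interval(alpha, beta, level=0.95, draws=100_000, seed=0):
    """Equal-tailed credible interval for Beta(alpha, beta), by sampling."""
    rng = random.Random(seed)
    samples = sorted(rng.betavariate(alpha, beta) for _ in range(draws))
    tail = (1 - level) / 2
    # Empirical quantiles taken from the sorted posterior draws.
    lower = samples[int(tail * draws)]
    upper = samples[int((1 - tail) * draws) - 1]
    return lower, upper


low, high = credible_interval(8, 4)
print(f"95% credible interval for theta: ({low:.2f}, {high:.2f})")
```

The interval is a direct probability statement about θ given the data and the prior, which is the interpretation a frequentist confidence interval does not support.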

Three critical distinctions emerge:

- Parameters as variables vs. fixed quantities

- Probability as belief vs. long-run frequency

- Prior knowledge integration vs. strict data-only analysis

Computational demands also differ. Sampling methods like MCMC enable complex modeling but require more resources. Frequentist solutions often use faster optimization techniques—though at the cost of flexibility.

Decision-makers benefit from seeing full probability distributions rather than point estimates. This transparency allows evaluating multiple scenarios simultaneously. For evolving data environments, dynamic frameworks prove more adaptable than static models.

Practical Applications in Machine Learning and Data Science

Modern computational challenges demand tools that adapt as quickly as data evolves. Monte Carlo methods bridge theory and practice, transforming complex probability landscapes into actionable insights. These techniques power systems that learn incrementally while quantifying uncertainty—a critical capability for real-world decision-making.

Monte Carlo Sampling Techniques

Markov Chain Monte Carlo (MCMC) algorithms handle posterior distributions that have no closed-form solution. They build chains of probable parameter values through iterative exploration. Several variants are in common use for high-dimensional models:

| Method | Use Case | Advantage |
|---|---|---|
| Hamiltonian MC | Complex energy landscapes | Efficient trajectory calculations |
| No-U-Turn Sampling | Deep learning models | Automatic trajectory-length tuning |
| Gibbs Sampling | Latent variable models | Simplified conditional updates |

These methods enable spam filters to analyze word patterns and financial models to assess risk probabilities. Variational inference offers faster approximations but trades precision for speed.

Bayesian Updating in Model Training

Dynamic frameworks allow models to evolve with streaming data. A recommendation system might start with broad user preferences, then refine predictions through continuous Bayesian updates. This approach provides three strategic benefits:

- Automatic complexity control: Prevents overfitting through probabilistic regularization

- Uncertainty-aware outputs: Delivers credible intervals with predictions

- Multi-source integration: Combines sensor data with historical patterns in robotics

Implementation requires monitoring chain convergence and computational load. Tools like PyMC (formerly PyMC3) and TensorFlow Probability bring these capabilities into mainstream workflows, making advanced statistical modeling accessible to practitioners.

Real-World Examples That Illustrate Bayesian Principles

Concrete scenarios bridge theory and practice in probabilistic reasoning. Let’s examine two tangible cases showing how evidence reshapes conclusions systematically.

Multi-Sided Dice Identification

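A game designer draws one of three dice at random (4-sided, 6-sided, or 8-sided) and tries to identify it from its rolls. Equal prior probabilities (33.3% each) reflect the random draw. A fair die with n sides shows any given face with probability 1/n, so each roll multiplies that die’s probability by 1/n (or by 0 if the die has no such face) before the values are renormalized. Rolling a 4 triggers the first update:

| Dice Type | Prior | After Roll 4 | After Roll 2 | After Roll 5 |
|---|---|---|---|---|
| 4-sided | 0.33 | 0.46 | 0.59 | 0.00 |
| 6-sided | 0.33 | 0.31 | 0.26 | 0.70 |
| 8-sided | 0.33 | 0.23 | 0.15 | 0.30 |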

Subsequent rolls of 2 and 5 demonstrate sequential learning. The 4-sided die becomes impossible after rolling 5—a clear example of eliminating incompatible hypotheses through evidence.
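The sequential updates for the three dice can be checked with a short script. This is a sketch that assumes each die is fair, so an n-sided die shows any of its faces with probability 1/n. The function name `update` is illustrative, and exact fractions are used to avoid rounding drift between steps.

```python
from fractions import Fraction


def update(beliefs, roll):
    """One Bayesian update over candidate dice, keyed by number of sides."""
    # Likelihood of the roll: 1/sides if the face exists, otherwise 0.
    unnormalized = {
        sides: p * (Fraction(1, sides) if roll <= sides else 0)
        for sides, p in beliefs.items()
    }
    total = sum(unnormalized.values())
    return {sides: w / total for sides, w in unnormalized.items()}


beliefs = {4: Fraction(1, 3), 6: Fraction(1, 3), 8: Fraction(1, 3)}
for roll in (4, 2, 5):
    beliefs = update(beliefs, roll)
    summary = ", ".join(f"d{s}: {float(p):.2f}" for s, p in beliefs.items())
    print(f"after rolling {roll} -> {summary}")
```

After the final roll the exact posteriors are 0 for the 4-sided die, 64/91 for the 6-sided die, and 27/91 for the 8-sided die.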

Coin Bias Estimation

Consider testing a coin’s fairness using 10 flips (7 heads, 3 tails). Starting from the uniform prior Beta(1,1), the likelihood function θ⁷(1-θ)³ favors higher head probabilities. Adding the observed counts to the prior parameters gives Beta(1+7, 1+3) = Beta(8,4) as the updated view.

“This conjugacy between beta and binomial distributions turns complex math into manageable updates—a workhorse technique in quality control and A/B testing.”

The mean estimate shifts to 66.7% heads. This mirrors how analysts update product conversion rates or machine learning models refine predictions. Both examples showcase posterior distributions evolving through accumulated data, providing actionable insights while quantifying uncertainty.

Advanced Bayesian Techniques: Bayesian Inference and Updating in Statistical Modeling

Cutting-edge methods now empower analysts to navigate complex systems with precision. These approaches blend mathematical elegance with computational power, turning theoretical concepts into practical tools for modern challenges.

Strategic Use of Conjugate Pairings

Mathematical shortcuts streamline analysis when distributions align naturally. The beta-binomial pairing transforms coin flip analysis—updating probabilities becomes simple arithmetic rather than complex calculus. Normal-normal combinations work similarly for measurement data, preserving familiar bell curves through updates.
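The normal-normal pairing can be sketched in the same spirit. The example assumes the measurement noise variance is known, which is the textbook setting for this conjugate pair. The function name, prior values, and measurements are all hypothetical.

```python
def normal_update(prior_mean, prior_var, observations, noise_var):
    """Posterior for an unknown mean when the noise variance is known."""
    n = len(observations)
    prior_precision = 1.0 / prior_var
    data_precision = n / noise_var
    # Precisions (inverse variances) add; the posterior stays normal.
    post_var = 1.0 / (prior_precision + data_precision)
    sample_mean = sum(observations) / n
    # Posterior mean is a precision-weighted average of prior and data.
    post_mean = post_var * (prior_precision * prior_mean
                            + data_precision * sample_mean)
    return post_mean, post_var


# Hypothetical readings from an instrument with noise variance 1.0,
# starting from a prior belief of Normal(mean=10, variance=4).
readings = [11.2, 10.8, 11.5, 11.1]
post_mean, post_var = normal_update(10.0, 4.0, readings, noise_var=1.0)
print(f"posterior mean = {post_mean:.3f}, variance = {post_var:.3f}")
```

The posterior variance is always smaller than the prior variance, and the posterior mean moves toward the sample mean as more readings arrive.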

Harnessing Stochastic Exploration

Modern computation tackles problems where formulas fall short. Markov chain methods create digital explorers that map probability landscapes. These algorithms:

- Start at random parameter values

- Propose jumps through uncertainty ranges

- Favor moves toward higher-probability regions

Hamiltonian techniques simulate momentum-driven paths through the probability landscape. No-U-Turn samplers automatically choose how far each trajectory runs, removing the need to hand-tune path length. Running multiple chains in parallel helps verify reliability: if chains started from different points agree, the results are more trustworthy, much like cross-checking witness testimonies.
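The three steps listed above can be illustrated with a plain random-walk Metropolis sampler, the simplest member of the MCMC family. It is not Hamiltonian Monte Carlo or the No-U-Turn sampler. The sketch targets the coin posterior (uniform prior, 7 heads, 3 tails), and the step size, burn-in length, chain count, and seeds are arbitrary assumptions.

```python
import math
import random


def log_posterior(theta, heads=7, tails=3):
    """Log of theta^heads * (1 - theta)^tails under a uniform prior."""
    if not 0.0 < theta < 1.0:
        return -math.inf  # outside the support: zero probability
    return heads * math.log(theta) + tails * math.log(1.0 - theta)


def metropolis(n_samples, step=0.2, burn_in=1000, seed=0):
    rng = random.Random(seed)
    theta = rng.uniform(0.05, 0.95)  # 1. start at a random parameter value
    current_lp = log_posterior(theta)
    samples = []
    for i in range(n_samples + burn_in):
        proposal = theta + rng.gauss(0.0, step)  # 2. propose a jump
        proposal_lp = log_posterior(proposal)
        # 3. accept with probability min(1, posterior ratio), which
        #    favors moves toward higher-probability regions.
        if rng.random() < math.exp(min(0.0, proposal_lp - current_lp)):
            theta, current_lp = proposal, proposal_lp
        if i >= burn_in:
            samples.append(theta)
    return samples


# Several chains with different seeds as a basic reliability check.
chains = [metropolis(20_000, seed=s) for s in range(3)]
means = [sum(chain) / len(chain) for chain in chains]
print("chain means:", [round(m, 3) for m in means])
```

Each chain mean should sit close to the analytic posterior mean of Beta(8,4), which is 8/12. Agreement across chains is a coarse version of the convergence checks that dedicated libraries automate.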

These methods transform abstract models into decision-making engines. They quantify confidence in predictions while adapting to new evidence—critical capabilities in fields from drug discovery to algorithmic trading. As data complexity grows, such tools become indispensable for separating signal from noise.

FAQ

How does prior belief influence Bayesian analysis?

Prior belief represents existing knowledge before observing new data. It acts as a starting point, allowing analysts to incorporate domain expertise or historical insights into their models. This contrasts with methods that rely solely on current datasets.

What distinguishes Bayesian from Frequentist uncertainty interpretation?

Bayesian methods quantify uncertainty as probabilities for hypotheses, reflecting degrees of belief. Frequentist approaches treat uncertainty as long-run frequencies—focusing on how results would behave under repeated experiments.

Can Bayesian updating improve machine learning workflows?

Yes. Updating a model as new data arrive lets it adapt without retraining from scratch, and priors act as regularizers that help limit overfitting. Markov Chain Monte Carlo makes parameter estimation feasible for models without closed-form posteriors, although it becomes computationally expensive for large neural networks.

Why are conjugate priors useful in practice?

Conjugate priors simplify calculations by ensuring the posterior distribution matches the prior’s family. For example, using a Beta prior with a Binomial likelihood yields a Beta posterior, streamlining updates without intensive computation.

How does the dice roll example explain likelihood functions?

Rolling a die multiple times generates observed outcomes. The likelihood function gives the probability of each result under every candidate die (4-, 6-, or 8-sided): 1/n for a face the die has, and 0 for a face it lacks. Multiplying these values into the prior updates beliefs about which die is being rolled.

When should Monte Carlo sampling be used?

Monte Carlo methods approximate complex integrals or posterior distributions that lack closed-form solutions. They’re essential for high-dimensional models, like deep learning architectures, where exact calculations are impractical.

What makes Bayesian models adaptable to small datasets?

Priors inject external knowledge, reducing reliance on sparse data. This is valuable in fields like drug development, where early-phase trials have limited samples but existing research can guide inferences.

How do coin toss scenarios demonstrate posterior probabilities?

Each observed head or tail updates the probability distribution over the coin’s chance of landing heads. Starting with a uniform prior, every flip adjusts the posterior, balancing observed evidence with initial assumptions to refine conclusions.