Every time Google finishes your sentence or Netflix recommends your next show, there’s a good chance a century-old mathematical principle is part of the machinery. This invisible force shapes decisions in finance, healthcare, and artificial intelligence, yet most users never realize it’s there.

Developed by Russian mathematician Andrey Markov in 1906, this probabilistic model revolutionized how systems predict sequential events. Rather than conditioning on an entire history, it predicts the next step from the current state alone; historical data typically serves to estimate the transition probabilities, not to drive each individual prediction. This “memoryless” approach makes complex calculations surprisingly efficient, a key reason tech giants rely on it daily.

The model’s power lies in mapping state transitions—the pathways between possible outcomes. Weather forecasts use it to predict rain chances, while stock analysts apply it to market trends. By analyzing present states rather than past sequences, it balances accuracy with computational practicality.

Modern professionals leverage this framework to navigate uncertainty in data-driven environments. From optimizing supply chains to personalizing user experiences, its applications keep expanding. Understanding these state-based systems helps decision-makers cut through complexity and act with strategic confidence.

Key Takeaways

- Markov models predict the next outcome from the current state alone, not the full history of past states

- The memoryless property enables efficient calculations in dynamic systems

- Applications span tech platforms, financial markets, and operational logistics

- Introduced by Andrey Markov in 1906, the concept remains vital in AI development

- State transition analysis helps simplify complex decision-making processes

- Modern professionals use these principles to manage uncertainty strategically

Introduction to Markov Chains and Transitions

Innovative systems across industries rely on a model that prioritizes present data over historical trends for efficiency. This approach uses state-based probability calculations to predict outcomes—a method that powers everything from traffic routing algorithms to personalized healthcare plans.

Definition and Core Concepts

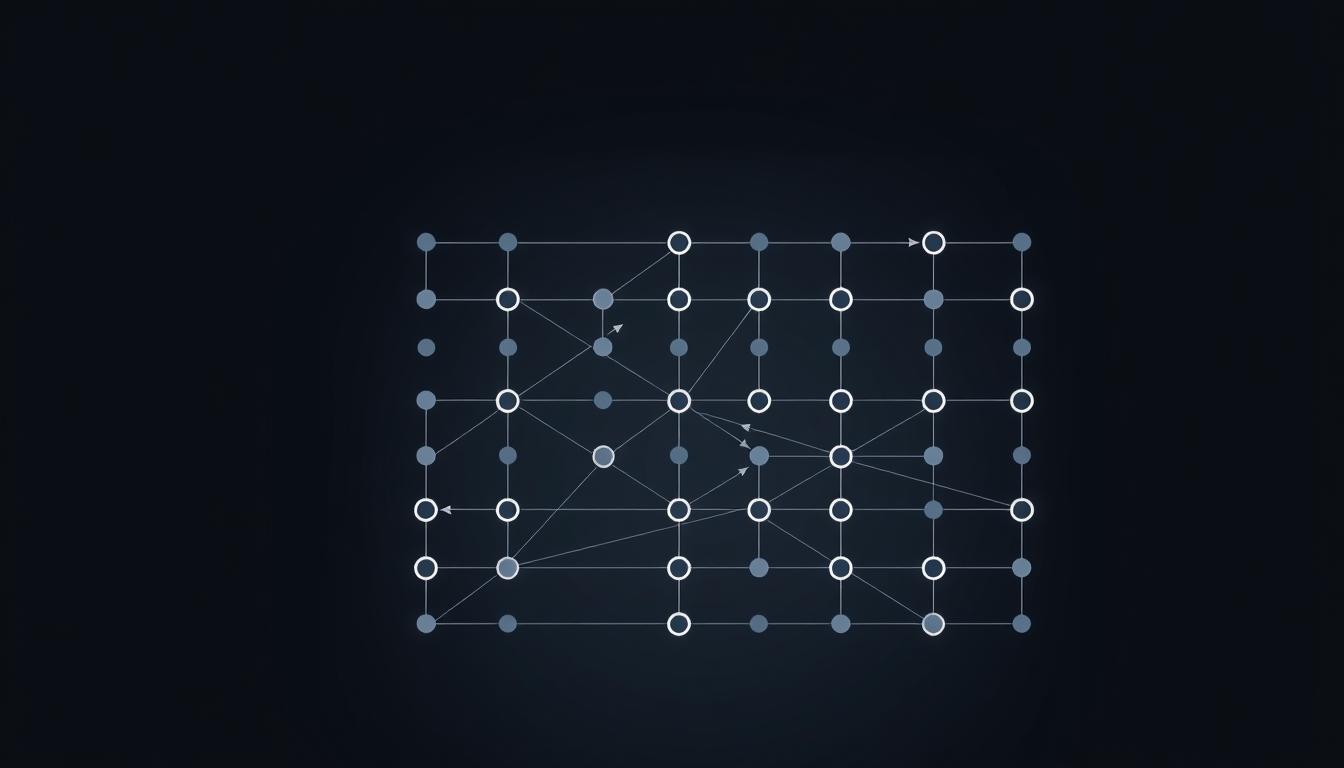

A stochastic process becomes a Markov chain when its future behavior depends only on its current position. States represent possible conditions—like “sunny” or “rainy” in weather models. Transitions between these states follow fixed probabilities stored in a matrix, creating a roadmap of potential outcomes.
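A minimal sketch of these pieces in plain Python, assuming a two-state weather model with made-up probabilities (the `TRANSITIONS` values and the `next_state` helper are illustrative, not taken from any library). Each state maps to one row of next-state probabilities, and drawing tomorrow's weather needs only today's state.

```python
import random

# Hypothetical two-state weather model; each row lists P(next | current).
TRANSITIONS = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}


def next_state(current, rng=random):
    """Sample tomorrow's state using only today's state."""
    row = TRANSITIONS[current]
    states = list(row)
    weights = list(row.values())
    return rng.choices(states, weights=weights, k=1)[0]


rng = random.Random(42)  # seeded so runs are reproducible
day = "sunny"
for _ in range(5):
    day = next_state(day, rng)
    print(day)
```

Nothing in `next_state` looks at earlier days, which is exactly the memoryless property described next.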

Understanding the Markov Property

The system’s “memorylessness” means yesterday’s data doesn’t influence tomorrow’s predictions if today’s status is known. This simplification enables rapid calculations but requires careful state definitions. As one researcher noted: “You trade historical context for computational speed—a strategic choice in time-sensitive applications.”
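Stated formally, for a sequence of states $X_0, X_1, \dots$ the memoryless property says that conditioning on the full history gives the same answer as conditioning on the current state alone. For a time-homogeneous chain (the same matrix at every step), that common value is the entry $p_{ij}$ in row $i$, column $j$ of the transition matrix $P$, and each row sums to 1:

```latex
\Pr(X_{n+1} = j \mid X_n = i,\, X_{n-1} = i_{n-1},\, \dots,\, X_0 = i_0)
  = \Pr(X_{n+1} = j \mid X_n = i) = p_{ij},
\qquad \sum_{j} p_{ij} = 1 .
```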

While this property streamlines analysis, it is a poor fit for problems where past patterns matter. Professionals balance these trade-offs when designing predictive systems for finance or logistics. The key lies in identifying scenarios where current conditions sufficiently capture the essential patterns.
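One common way to respect "careful state definitions" when some history does matter is to fold recent history into the state itself. The sketch below uses a hypothetical `build_chain` helper over a toy sentence and treats the last `order` words as the state; with `order=2` the chain is still memoryless, but its states are word pairs, so the number of possible states grows quickly.

```python
from collections import defaultdict


def build_chain(words, order=1):
    """Map each state (a tuple of the last `order` words) to the words seen next."""
    chain = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        chain[state].append(words[i + order])
    return chain


words = "the cat sat on the mat and the cat ran".split()
first = build_chain(words, order=1)
second = build_chain(words, order=2)
print(first[("the",)])         # ['cat', 'mat', 'cat']
print(second[("on", "the")])   # ['mat']
```

With one word of context, "the" could lead to "cat" or "mat"; with two words, "on the" has only ever been followed by "mat".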

The Basics of Markov Chain Models and Their Applications

When Andrey Markov analyzed the sequence of vowels and consonants in Pushkin’s Eugene Onegin in 1913, he unknowingly laid groundwork for modern AI algorithms. This early work showed how a system could predict outcomes using only current conditions, a principle now driving innovations from search engines to drug discovery.

Key Principles and Historical Insights

The framework’s strength stems from its focus on present states rather than past events. Henri Poincaré applied similar reasoning in 1912, modeling card shuffling as a sequence of random transitions. Similarly, the Ehrenfests’ 1907 urn model of gas diffusion described molecules moving between two containers as random state changes, concepts later formalized in groundbreaking research.

Three core ideas define these models:

- State-dependent transitions: Each outcome depends only on the current situation

- Transition probability matrix: Each row lists the chances of moving from one state to every possible next state and sums to 1

- Time homogeneity: The same transition probabilities apply at every step

Real-World Scenarios

Google’s original PageRank algorithm used this approach to rank websites based on linkage patterns. Financial analysts apply it to predict stock movements by treating market moods as discrete states. Biologists even model DNA sequences as chains of nucleotide transitions.

These examples share a critical trait: current data provides enough context for accurate predictions. As one tech strategist noted, “Why track years of history when today’s snapshot reveals tomorrow’s path?” This balance of simplicity and effectiveness explains the model’s enduring relevance across fields.
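PageRank's "random surfer" can be sketched as a Markov chain over pages: the surfer follows a random outgoing link with probability d and jumps to a random page otherwise. The sketch below assumes a made-up four-page link graph and the commonly cited damping factor d = 0.85, and uses power iteration with NumPy; it illustrates the idea rather than reproducing Google's production system.

```python
import numpy as np

# Hypothetical link graph: page -> pages it links to (every page has an outlink).
links = {0: [1, 2], 1: [2], 2: [0], 3: [2]}
n = len(links)
d = 0.85  # damping factor

# Row-stochastic link matrix: M[i, j] = chance of clicking from page i to page j.
M = np.zeros((n, n))
for page, outs in links.items():
    for target in outs:
        M[page, target] = 1.0 / len(outs)

# Full transition matrix: follow a link with prob d, teleport uniformly otherwise.
P = d * M + (1 - d) / n

rank = np.full(n, 1.0 / n)  # start from a uniform distribution
for _ in range(100):
    rank = rank @ P  # one step of the chain
print(rank.round(3))
```

Page 2, which three of the four pages link to, ends up ranked highest, while page 3, which nothing links to, keeps only the teleportation share (1 − d) / n.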

Essential Principles Behind Markov Chains

Behind every smart recommendation and predictive algorithm lies a trio of mathematical components working in concert. These models thrive on three pillars: state spaces, transition rules, and initial conditions. Together, they form the blueprint for systems that evolve through probabilistic shifts.

A state space defines all possible scenarios a system can occupy—like “bull market,” “bear market,” and “stagnation” in finance. Transitions between these states follow fixed likelihoods stored in a matrix. This roadmap of probabilities determines how the system behaves over time.

| Current State | Next: Sunny | Next: Cloudy | Next: Rainy |
|---|---|---|---|
| Sunny | 0.65 | 0.25 | 0.10 |
| Cloudy | 0.30 | 0.50 | 0.20 |
| Rainy | 0.15 | 0.25 | 0.60 |

The table above shows a weather prediction matrix. Each row sums to 1, ensuring all outcomes are accounted for. As one data scientist notes:

“Designing these matrices requires balancing historical data with operational realities.”

Initial conditions act as launchpads for predictions. A retail chain might start with an 80% probability of being in a stockout state and a 20% probability of a surplus. Over time, transition rules reshape these probabilities, revealing future trends despite inherent randomness.
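The reshaping described above is repeated multiplication by the transition matrix: after n steps the distribution is π_n = π_0 P^n. A small NumPy sketch using the weather matrix from the table above, assuming (for illustration) that today is known to be sunny:

```python
import numpy as np

# Weather transition matrix from the table (rows: today, columns: tomorrow).
states = ["Sunny", "Cloudy", "Rainy"]
P = np.array([
    [0.65, 0.25, 0.10],
    [0.30, 0.50, 0.20],
    [0.15, 0.25, 0.60],
])
assert np.allclose(P.sum(axis=1), 1.0)  # every row must be a distribution

pi0 = np.array([1.0, 0.0, 0.0])  # initial condition: today is sunny

# pi_n = pi_0 @ P^n, computed here one day at a time.
pi = pi0
for day in range(1, 4):
    pi = pi @ P
    print(day, states, pi.round(4))
```

When only a single horizon is needed, `np.linalg.matrix_power(P, n)` computes P^n directly.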

While exact outcomes remain uncertain, statistical patterns emerge clearly. This duality empowers professionals to make informed decisions without perfect foresight. By mastering these principles, teams transform abstract mathematics into competitive advantages.
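One such pattern is the long-run (stationary) distribution π, which satisfies π = πP with entries summing to 1. Every entry of the weather matrix is positive, so the daily distribution settles toward π whatever the starting state. A sketch that solves the linear system directly with NumPy (the `stationary_distribution` name is illustrative):

```python
import numpy as np

P = np.array([
    [0.65, 0.25, 0.10],   # Sunny
    [0.30, 0.50, 0.20],   # Cloudy
    [0.15, 0.25, 0.60],   # Rainy
])


def stationary_distribution(P):
    """Solve pi @ P = pi with sum(pi) = 1 for an irreducible chain."""
    n = P.shape[0]
    A = P.T - np.eye(n)   # (P^T - I) pi^T = 0
    A[-1, :] = 1.0        # swap one redundant equation for sum(pi) = 1
    b = np.zeros(n)
    b[-1] = 1.0
    return np.linalg.solve(A, b)


pi = stationary_distribution(P)
print(pi.round(4))  # long-run share of Sunny, Cloudy, Rainy days

# Cross-check: a high power of P has every row close to pi.
print(np.linalg.matrix_power(P, 50).round(4))
```

For this matrix the solution works out to (6/15, 5/15, 4/15), so under these illustrative numbers about 40% of days are sunny in the long run.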

How Markov Chain Transitions Work

Drivers navigating city intersections experience Markov principles daily without realizing it. Traffic lights cycle through colors based on current sensor data—not yesterday’s congestion patterns. This real-time decision-making mirrors how probabilistic models evolve through state transitions.

Memorylessness Explained

The system’s “goldfish memory” simplifies complex predictions. Imagine a shopper deciding between buying or leaving—only their current mindset matters, not last week’s browsing history. This memoryless property enables lightning-fast calculations but ignores contextual patterns.

As a data strategist notes:

“Memoryless models trade historical depth for agility—perfect for scenarios where speed trumps context.”

Step-by-Step State Transitions

Consider a customer journey with three possible states:

| Current State | Next: Browse | Next: Add to Cart | Next: Purchase |
|---|---|---|---|
| Browse | 0.70 | 0.25 | 0.05 |
| Add to Cart | 0.10 | 0.60 | 0.30 |
| Purchase | 0.40 | 0.10 | 0.50 |

The table reveals transition probabilities between user actions. Each row totals 1.0, ensuring all outcomes get tracked. Notice how the next state depends entirely on the current position—not previous steps in the journey.
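To see that each step consults only the current row, here is a sketch that simulates one customer's path through the journey table. The state names and probabilities come from the table; the `simulate` helper and the fixed seed are illustrative assumptions.

```python
import random

# Journey table: current state -> (next states, probabilities).
NEXT = ["Browse", "Add to Cart", "Purchase"]
JOURNEY = {
    "Browse":      (NEXT, [0.70, 0.25, 0.05]),
    "Add to Cart": (NEXT, [0.10, 0.60, 0.30]),
    "Purchase":    (NEXT, [0.40, 0.10, 0.50]),
}


def simulate(start, steps, seed=None):
    """Return a path of `steps` transitions; each draw uses only the current state."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        options, weights = JOURNEY[path[-1]]
        path.append(rng.choices(options, weights=weights)[0])
    return path


print(simulate("Browse", steps=8, seed=7))
```

Running many seeded simulations and counting outcomes is a simple way to sanity-check a matrix against observed customer behavior.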

This approach excels in chatbots and inventory management but struggles with language translation where word history matters. Professionals weigh these trade-offs when choosing modeling strategies for dynamic systems.

Creating a Markov Chain Model: A Step-by-Step Guide

Sports analysts predicting play sequences use the same mathematical backbone as e-commerce recommendation engines. Building an effective chain model starts with two pillars: the transition matrix and initial state vector. These elements transform raw data into predictive powerhouses.

Setting Up Your Transition Matrix

Imagine mapping a basketball team’s play patterns. Each row in matrix P represents current strategies—fast break, zone defense, or pick-and-roll. Columns show next-move probabilities. Key rules apply:

- Rows must total 1.0 (100% probability distribution)

- Zeros indicate impossible transitions between states

- Decimal precision impacts prediction accuracy
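In practice the entries of P are often estimated by counting observed transitions and dividing each row by its total. A sketch, assuming a short hypothetical log of basketball plays (the log and the `estimate_matrix` name are made up for illustration):

```python
import numpy as np

STATES = ["fast break", "zone defense", "pick-and-roll"]


def estimate_matrix(sequence, states):
    """Count consecutive (current, next) pairs and normalize each row to sum to 1."""
    index = {s: i for i, s in enumerate(states)}
    counts = np.zeros((len(states), len(states)))
    for current, nxt in zip(sequence, sequence[1:]):
        counts[index[current], index[nxt]] += 1
    totals = counts.sum(axis=1, keepdims=True)
    # Rows never observed as a "current" state are left as all zeros.
    return np.divide(counts, totals, out=np.zeros_like(counts), where=totals > 0)


log = ["fast break", "pick-and-roll", "pick-and-roll", "zone defense",
       "fast break", "pick-and-roll", "fast break", "fast break"]
P = estimate_matrix(log, STATES)
print(P.round(2))
```

A zero in an estimated matrix means the transition was never observed, which is not always the same as impossible; short logs in particular can produce zeros that real play would not.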

A retail chain model might use this structure:

| Current State | Next: Browsing | Next: Cart Added | Next: Purchased |
|---|---|---|---|
| Browsing | 0.75 | 0.20 | 0.05 |
| Cart Added | 0.10 | 0.60 | 0.30 |
| Purchased | 0.40 | 0.15 | 0.45 |

Establishing the Initial State

The vector S acts as your model’s starting line. For a streaming service, this could represent user states: 70% trial users, 20% subscribers, 10% inactive. Data scientist Maya Cortez notes:

“Initial distributions shape long-term forecasts—choose them like chess openings.”

Three validation checks ensure reliability:

- Verify all probabilities are non-negative

- Confirm vector values sum to 1.0

- Test against historical data benchmarks
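The first two checks are easy to automate. A minimal sketch, assuming the streaming example's starting vector (70% trial, 20% subscribers, 10% inactive) and a hypothetical `validate_distribution` helper with a small floating-point tolerance; the third check depends on your own historical data and is not shown.

```python
import numpy as np


def validate_distribution(v, tol=1e-9):
    """Raise ValueError unless v is a probability vector (or a matrix of such rows)."""
    v = np.asarray(v, dtype=float)
    if np.any(v < 0):
        raise ValueError("probabilities must be non-negative")
    sums = v.sum(axis=-1)
    if not np.allclose(sums, 1.0, atol=tol):
        raise ValueError(f"probabilities must sum to 1.0, got {sums}")
    return v


# Initial state vector S: trial users, subscribers, inactive.
S = validate_distribution([0.70, 0.20, 0.10])

# The same check works row by row on a transition matrix.
validate_distribution([[0.75, 0.20, 0.05],
                       [0.10, 0.60, 0.30],
                       [0.40, 0.15, 0.45]])
```

Comparing against a tolerance rather than testing `== 1.0` matters because sums such as 0.7 + 0.2 + 0.1 are not exactly 1.0 in floating point.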

These steps transform abstract numbers into decision-making tools. When built correctly, the model becomes a compass for navigating uncertainty across industries.

Implementing Markov Chains Using Python

Python’s simplicity transforms complex mathematical concepts into practical tools. Developers leverage its libraries to build systems that predict sequences in weather patterns, customer behavior, and language structures. This section demonstrates how to convert theoretical principles into functional code.

Python Code Walkthrough

Start by processing raw text data. A get_text() function reads files and a remove_punctuations() function cleans special characters (neither helper is shown here); these steps ensure accurate word mappings. The core model then records which word follows which:

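```python
from collections import defaultdict
import random


class SequencePredictor:
    def __init__(self):
        # Map each word to every word observed immediately after it.
        # Repeats are kept, so frequent successors appear more often.
        self.chain = defaultdict(list)

    def model(self, text):
        words = text.split()
        # Record each adjacent (current word, next word) pair.
        for i in range(len(words) - 1):
            self.chain[words[i]].append(words[i + 1])
```

The transition matrix emerges through this word-pair tracking: dividing how often each successor appears by the total number of successors recorded for a word yields the probabilities. This table shows how words might follow each other in a sample text: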

| Current Word | Next Option 1 | Next Option 2 | Next Option 3 |
|---|---|---|---|
| Data | 0.45 (analysis) | 0.35 (science) | 0.20 (storage) |
| Analysis | 0.60 (reveals) | 0.25 (requires) | 0.15 (improves) |
| Model | 0.70 (accuracy) | 0.20 (training) | 0.10 (testing) |
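Turning successor lists into probabilities and generating text takes only a few more lines. The sketch below is self-contained and uses a toy corpus; `transition_probabilities` and `generate` are illustrative names, not part of the SequencePredictor walkthrough.

```python
import random
from collections import Counter, defaultdict

corpus = "data analysis reveals patterns and data science requires data analysis"

# Build successor lists the same way SequencePredictor.model does.
words = corpus.split()
chain = defaultdict(list)
for current, nxt in zip(words, words[1:]):
    chain[current].append(nxt)


def transition_probabilities(chain):
    """Convert successor lists into {word: {next_word: probability}}."""
    return {
        word: {nxt: count / len(followers) for nxt, count in Counter(followers).items()}
        for word, followers in chain.items()
    }


def generate(chain, start, length, seed=None):
    """Walk the chain from `start`, stopping early at a word with no successors."""
    rng = random.Random(seed)
    out = [start]
    while len(out) < length and chain.get(out[-1]):
        out.append(rng.choice(chain[out[-1]]))
    return " ".join(out)


probs = transition_probabilities(chain)
print(probs["data"])
print(generate(chain, "data", length=6, seed=1))
```

Because `rng.choice` samples from the raw successor list, words that followed more often are picked proportionally more often, without building an explicit matrix.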

Implementation Tips and Best Practices

Structure code for scalability. Separate text processing from prediction logic using modular classes. As probabilistic models grow in complexity, this approach maintains readability.

Three optimization strategies enhance performance:

- Use generators for large text files to manage memory

- Cache frequent word transitions to speed up predictions

- Validate probability distributions after matrix creation
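The three tips can be combined in a short sketch: a generator yields words one line at a time so the whole file never sits in memory, each word's successors and cumulative weights are cached for repeated sampling, and the normalized rows are checked. `io.StringIO` stands in for a real file such as a hypothetical `corpus.txt`; `stream_words`, `build_model`, `validate`, and `build_sampler` are illustrative names.

```python
import io
import random
from collections import Counter, defaultdict
from itertools import accumulate


def stream_words(lines):
    """Yield words lazily from any iterable of lines, such as an open file."""
    for line in lines:
        yield from line.split()


def build_model(lines):
    """Count word-to-word transitions without holding the whole text in memory."""
    counts = defaultdict(Counter)
    previous = None
    for word in stream_words(lines):  # note: pairs also span line breaks
        if previous is not None:
            counts[previous][word] += 1
        previous = word
    return counts


def validate(counts, tol=1e-9):
    """Check that each word's normalized successor probabilities sum to 1."""
    for word, successors in counts.items():
        total = sum(successors.values())
        if abs(sum(n / total for n in successors.values()) - 1.0) > tol:
            raise ValueError(f"row for {word!r} does not sum to 1")


def build_sampler(counts):
    """Cache each word's successors with cumulative weights for repeated sampling."""
    return {w: (list(c), list(accumulate(c.values()))) for w, c in counts.items()}


# io.StringIO stands in for open("corpus.txt") in this sketch.
fake_file = io.StringIO("the model learns\nthe model predicts\nthe data\n")
counts = build_model(fake_file)
validate(counts)

sampler = build_sampler(counts)
options, cum = sampler["the"]
print(random.Random(0).choices(options, cum_weights=cum)[0])
```

Passing precomputed `cum_weights` to `random.choices` spares it from recomputing the running totals on every call.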

One engineer notes:

“Clean data inputs matter more than advanced algorithms in initial implementations.”

Focus on robust data pipelines before optimizing prediction accuracy.

Markov Chains in Real-Life Scenarios

Modern meteorologists achieve 85% accuracy in 3-day forecasts using probability-based systems. These tools demonstrate how mathematical frameworks turn uncertainty into actionable insights across industries.

Weather Prediction Models

Simple state classifications (sunny, cloudy, rainy) become powerful forecasting tools when paired with transition matrices. A 60% chance of consecutive sunny days isn’t guesswork: it is a probability calculated from the current state and transition frequencies observed in past weather records.
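Multiplying transition probabilities along a path gives the chance of a specific sequence of days, given today's state. A sketch, assuming the weather matrix from the earlier table and a hypothetical `path_probability` helper:

```python
import numpy as np

STATES = {"Sunny": 0, "Cloudy": 1, "Rainy": 2}
P = np.array([
    [0.65, 0.25, 0.10],
    [0.30, 0.50, 0.20],
    [0.15, 0.25, 0.60],
])


def path_probability(path):
    """P(path[1:] | path[0]): the product of one-step transition probabilities."""
    prob = 1.0
    for current, nxt in zip(path, path[1:]):
        prob *= P[STATES[current], STATES[nxt]]
    return prob


# Sunny today, then three more sunny days: 0.65 ** 3
print(path_probability(["Sunny", "Sunny", "Sunny", "Sunny"]))
# Sunny -> Cloudy -> Rainy: 0.25 * 0.20
print(path_probability(["Sunny", "Cloudy", "Rainy"]))
```

Each factor depends only on the pair of adjacent days, so the whole calculation is a direct consequence of the Markov property.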

Finance, Risk, and Beyond

Wall Street quant teams analyze market states like bull runs or corrections using similar principles. Portfolio optimization strategies emerge from predicting asset price shifts. One hedge fund manager notes: “We model probabilities, not certainties—that’s how we beat benchmarks.”

From social media behavior tracking to DNA sequence mapping, these systems thrive where patterns hide in apparent randomness. Their adaptability extends to industry-specific uses such as election forecasting and automated content creation.

Tech giants leverage these models for features like email text suggestions. Biological researchers apply them to gene mutation studies. Each application shares a core truth: strategic decisions flourish when grounded in probabilistic thinking.

FAQ

What defines a Markov chain?

A Markov chain is a mathematical system that transitions between states based on specific probabilities. Its defining trait is the “memoryless” property, where the next state depends only on the current state, not prior events.

How does the transition matrix work?

The transition matrix organizes probabilities of moving from one state to another. Each row represents the current state, and each column gives the probability of moving to a particular next state, including staying in the same state, so every row sums to 1. For example, a weather model might use rows for “sunny” and “rainy” days.

Where are these models applied in real life?

They power predictive tools like weather forecasting, stock market analysis, and search algorithms. Google’s PageRank, for instance, ranks web pages by modeling a “random surfer” who keeps following links and measuring how often that surfer lands on each page in the long run.

Why is the Markov property important?

The Markov property simplifies complex systems by focusing only on the present state. This reduces computational demands, making it practical for modeling scenarios like customer behavior or genetic sequences.

Can Python handle Markov chain implementations?

Yes. Libraries like NumPy simplify matrix operations, while pandas manages state data. Developers often use Python for prototyping due to its readability and flexibility in handling probabilistic simulations.

How do you set up an initial state distribution?

The initial state vector defines starting probabilities for each state. For instance, a finance model might begin with a 70% chance of economic growth and 30% chance of recession, based on historical data.

What industries benefit most from Markov chains?

Finance uses them for risk assessment, healthcare for disease progression modeling, and tech companies for speech recognition. Even gaming studios apply them to create dynamic, non-repetitive AI behavior.